Ben_West🔸

Bio

Non-EA interests include chess and TikTok (@benthamite). Formerly @ CEA, METR + a couple now-acquired startups.

How others can help me

Feedback always appreciated; feel free to email/DM me or use this link if you prefer to be anonymous.

Posts 91

Comments 1187

Topic contributions 6

The AI Eval Singularity is Near

- AI capabilities seem to be doubling every 4-7 months

- Humanity's ability to measure capabilities is growing much more slowly

- This implies an "eval singularity": a point at which capabilities grow faster than our ability to measure them

- It seems like the singularity is ~here in cybersecurity, CBRN, and AI R&D (supporting quotes below)

- It's possible that this is temporary, but the people involved seem pretty worried
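The argument in these bullets comes down to two exponentials racing each other. The sketch below is a minimal illustration, not a forecast. It assumes, hypothetically, that measurement capacity also grows exponentially but with a slower doubling time, and that today's evals have a fixed "headroom" above current capability. The 4- and 7-month doubling times come from the post. `eval_doubling_months=24` and `headroom=8` are made-up illustration values, not estimates.

```python
import math


def months_until_saturation(cap_doubling_months: float,
                            eval_doubling_months: float,
                            headroom: float) -> float:
    """Months until capability catches up with the eval ceiling.

    Hypothetical model: capability(t) = 2**(t / cap_doubling_months) and
    ceiling(t) = headroom * 2**(t / eval_doubling_months), both normalized so
    that today's capability is 1. Setting capability(t) == ceiling(t) gives
    t = log2(headroom) / (1/cap_doubling_months - 1/eval_doubling_months).
    """
    if headroom <= 1:
        return 0.0  # evals are already at or below current capability
    rate_gap = 1 / cap_doubling_months - 1 / eval_doubling_months
    if rate_gap <= 0:
        return math.inf  # evals keep pace or pull ahead: no crossing
    return math.log2(headroom) / rate_gap


# Illustrative (made-up) eval growth rate and headroom; doubling times from the post.
for cap_months in (4, 7):
    t = months_until_saturation(cap_months, eval_doubling_months=24, headroom=8)
    print(f"capability doubling every {cap_months} mo: saturation in ~{t:.1f} mo")
```

With these made-up inputs, saturation arrives after roughly 14 months at a 4-month doubling time and roughly 30 months at a 7-month doubling time. The broader point is that any fixed headroom runs out in finite time whenever capability doubles faster than eval capacity.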

Appendix - quotes on eval saturation

- "For AI R&D capabilities, we found that Claude Opus 4.6 has saturated most of our automated evaluations, meaning they no longer provide useful evidence for ruling out ASL-4 level autonomy. We report them for completeness, and we will likely discontinue them going forward. Our determination rests primarily on an internal survey of Anthropic staff, in which 0 of 16 participants believed the model could be made into a drop-in replacement for an entry-level researcher with scaffolding and tooling improvements within three months."

- "For ASL-4 evaluations [of CBRN], our automated benchmarks are now largely saturated and no longer provide meaningful signal for rule-out (though as stated above, this is not indicative of harm; it simply means we can no longer rule out certain capabilities that may be prerequisites to a model having ASL-4 capabilities)."

- Claude Opus 4.6 also saturated ~100% of the cyber evaluations.

- "We are treating this model as High [for cybersecurity], even though we cannot be certain that it actually has these capabilities, because it meets the requirements of each of our canary thresholds and we therefore cannot rule out the possibility that it is in fact Cyber High."

Thanks for the article! I think if your definition of "long term" is "10 years," then EAAs actually do often think on this time horizon or longer, but maybe don't do so in the way you think is best. I think approximately all of the conversations about corporate commitments or government policy change that I have been involved in have operated on at least that timeline (sadly, this is how slowly these areas move).

For example, you can see this cost-effectiveness analysis (CEA) where @saulius projects out the impacts of broiler campaigns a cool 200 years, and links to estimates from ACE and AIM that use a constant discount rate.
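To make the role of a constant discount rate concrete, here is a minimal sketch of discounting a steady stream of impact over a 200-year horizon. It is not @saulius's model or the ACE/AIM method. The flat 1 unit of impact per year and the 1% and 5% rates are hypothetical illustration values.

```python
def discounted_total(annual_impact: float, years: int, rate: float) -> float:
    """Sum of a constant annual impact over `years` years at a constant discount rate.

    Year 0 is undiscounted; year t is weighted by 1 / (1 + rate) ** t.
    """
    return sum(annual_impact / (1 + rate) ** t for t in range(years))


# Hypothetical inputs: 1 unit of impact per year for 200 years.
for rate in (0.0, 0.01, 0.05):
    total = discounted_total(1.0, years=200, rate=rate)
    print(f"rate {rate:.0%}: total ≈ {total:.1f} (vs 200 undiscounted)")
```

Under a constant rate the weights shrink geometrically, so at 5% the whole 200-year stream is worth only about 21 undiscounted years. Most of the projected value then comes from the first few decades, whatever the nominal horizon.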

I'm excited about this series!

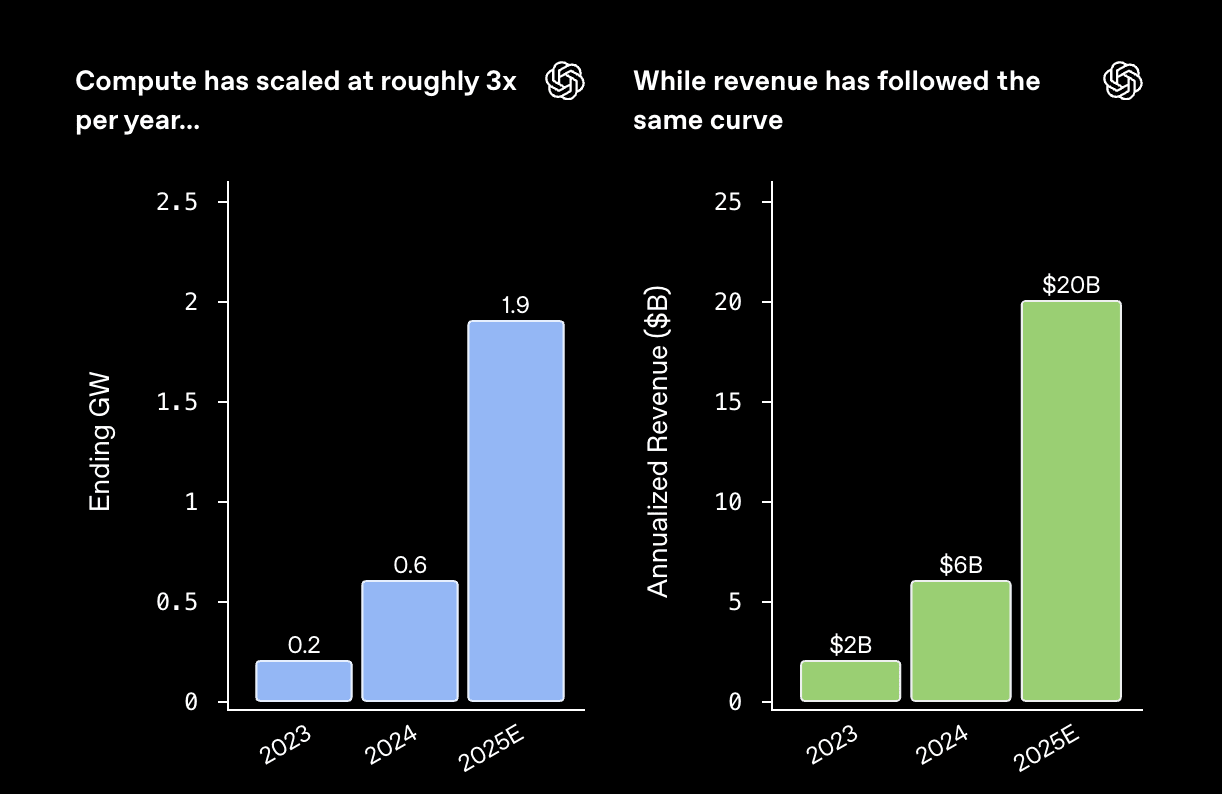

I would be curious to hear your take on this blog post from OpenAI, particularly these two graphs:

Investment in compute powers leading-edge research and step-change gains in model capability. Stronger models unlock better products and broader adoption of the OpenAI platform. Adoption drives revenue, and revenue funds the next wave of compute and innovation. The cycle compounds.

While their argument is not very precise, I understand them to be saying something like, "Sure, it's true that the costs of both inference and training are increasing exponentially. However, the value delivered by these improvements is also increasing exponentially. So the economics check out."

A naive interpretation of, e.g., the METR graph would disagree: humans are modeled as having a constant hourly wage, so being able to do a task that takes 2x as long is precisely 2x as valuable (and therefore can't offset a >2x increase in compute costs). But this seems like an implausible simplification.
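The constant-wage reading can be made concrete with a toy calculation. The sketch below is a hypothetical model, not METR's or OpenAI's methodology. Value is the hourly wage times the task horizon in hours, the horizon doubles each generation, and compute cost grows by a made-up factor of 3x. The $50 wage is also made up, and it cancels out of the trend.

```python
def value_to_cost_ratios(generations: int, horizon_growth: float,
                         cost_growth: float, hourly_wage: float = 50.0) -> list[float]:
    """Value-to-cost ratio per model generation under a constant-wage model.

    Hypothetical model: value = hourly_wage * task_horizon_hours, so value
    scales exactly with task length, while compute cost is multiplied by
    cost_growth each generation. Starting horizon and cost are normalized to 1.
    """
    ratios = []
    horizon_hours, cost = 1.0, 1.0
    for _ in range(generations):
        ratios.append(hourly_wage * horizon_hours / cost)
        horizon_hours *= horizon_growth
        cost *= cost_growth
    return ratios


# Horizon doubles each generation; compute cost triples (hypothetical).
print(value_to_cost_ratios(4, horizon_growth=2.0, cost_growth=3.0))
```

Each generation multiplies the ratio by `horizon_growth / cost_growth`, so under this model any cost growth above 2x per horizon doubling steadily erodes the economics. Escaping that conclusion requires a value function that grows faster than linearly in task length.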

Do we have any evidence on how the value of models changes with their capabilities?

EA Animal Welfare Fund almost as big as Coefficient Giving FAW now?

This job ad says they raised >$10M in 2025 and are targeting $20M in 2026. CG's public Farmed Animal Welfare 2025 grants are ~$35M.
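As a quick check on "almost as big," here is the ratio arithmetic using the figures above. It treats ">$10M" as exactly $10M, which is an assumption. It also compares the fund's 2026 target with CG's 2025 grants, so the years do not line up.

```python
# Figures from the post: EA AWF raised >$10M in 2025 (treated as exactly $10M)
# and targets $20M in 2026; CG's public FAW 2025 grants total ~$35M.
awf_2025_raised = 10e6
awf_2026_target = 20e6
cg_faw_2025_grants = 35e6

print(f"2025 raised vs CG FAW 2025: {awf_2025_raised / cg_faw_2025_grants:.0%}")
print(f"2026 target vs CG FAW 2025: {awf_2026_target / cg_faw_2025_grants:.0%}")
```

On these numbers, the 2025 total is a bit under 30% of CG's public FAW grants, and the 2026 target is a bit under 60%.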

Is this right?

Cool to see the fund grow so much either way.

Is this just a statement that there is more low-hanging fruit in safety research? I.e., you can in some sense learn an equal amount from a two-minute rollout for both capabilities and safety, but capabilities researchers have already learned most of what was possible and safety researchers haven't exhausted everything yet.

Or is this a stronger claim that safety work is inherently more of a short-time-horizon thing?

This is great.

Thinking through my own area of Data Generation and why I would put it earlier than you, I wonder if there should be an additional factor like "Importance of quantity over quality."

For example, my sense is that having a bunch of mediocre ideas about how to improve pretraining is probably not very useful to capabilities researchers. But having a bunch of trajectories where an attacker inserts a backdoor is pretty useful to control research, even if these trajectories are all things that would only fool amateurs and not experts. And if you are using a sample-inefficient technique, you might benefit from having absolutely huge amounts of this relatively mediocre data, which is something even relatively mediocre models could help with.

Related, from an OAI researcher.