All of Jaime Sevilla's Comments + Replies

Saying that I personally support faster AI development because I want people close to me to benefit is not the same as saying I'm working at Epoch for selfish reasons.

I've had opportunities to join major AI labs, but I chose to continue working at Epoch because I believe the impact of this work is greater and more beneficial to the world.

That said, I’m also frustrated by the expectation that I must pretend not to prioritize those closest to me. I care more about the people I love, and I think that’s both normal and reasonable—most people operate this way. That doesn’t mean I don’t care about broader impacts too.

Epoch's founder has openly stated that their company culture is not particularly fussed about most AI risk topics

To be clear, my personal views are different from my employees or our company. We have a plurality of views within the organisation (which I think it's important for our ability to figure out what will actually happen!)

I co-started Epoch to get more evidence on AI and AI risk. As I learned more and the situation unfolded I have become more skeptical of AI Risk. I tried to be transparent about this, though I've changed my mind often a...

TL;DR

- Sentience appear in many animals, indicating it might have a fundamental purpose for cognition. Advanced AI, specially if trained on data and environments similar to humans, will then likely be conscious

- Restrictions to advanced AI would likely delay technological progress and potentially require a state of surveillance. A moratorium might also shift society towards a culture that is more cautious towards expanding life.

I think what is missing for this argument to go through is arguing that the costs in 2 are higher than the cost of mistreated Artificial Sentience.

My point is that our best models of economic growth and innovation (such as the semi-endogeneous growth model that Paul Romer won the Nobel prize for) straightforwardly predict hyperbolic growth under the assumptions that AI can substitute for most economically useful tasks and that AI labor is accumulable (in the technical sense that you can translate a economic output into more AI workers). This is even though these models assume strong diminishing returns to innovation, in the vein of "ideas are getting harder to find".

Furthermore, even if you weaken th...

I've only read the summary, but my quick sense is that Thorstad is conflating two different versions of the singularity thesis (fast takeoff vs slow but still hyperbolic takeoff), and that these arguments fail to engage with the relevant considerations.

Particularly, Erdil and Besiroglu (2023) show how hyperbolic growth (and thus a "singularity", though I dislike that terminology) can arise even when there are strong diminishing returns to innovation and sublinear growth with respect to innovation.

The paper does not claim that diminishing returns are a decisive obstacle to the singularity hypothesis (of course they aren't: just strengthen your growth assumptions proportionally). It lists diminishing returns as one of five reasons to be skeptical of the singularity hypothesis, then asks for a strong argument in favor of the singularity hypothesis to overcome them. It reviews leading arguments for the singularity hypothesis and argues they aren't strong enough to do the trick.

(speculating) The key property you are looking for IMO is to which degree people are looking at different information when making forecasts. Models that parcel reality into neat little mutually exclusive packages are more amenable , while forecasts that obscurely aggregate information from independent sources will work better with geomeans.

In any case, this has little bearing on aggregating welfare IMO. You may want to check out geometric rationality as an account that lends itself more to using geometric aggregation of welfare.

Interesting case. I can see the intuitive case for the median.

I think the mean is more appropriate - in this case, what this is telling you is that your uncertainty is dominated by the possibility of a fat tail, and the priority is ruling it out.

I'd still report both for completeness sake, and to illustrate the low resilience of the guess.

Very much enjoyed the posts btw

Amazing achievements Mel! With your support, the group is doing a fantastic job, and I am excited about its direction.

>his has meant that, currently, our wider community lacks a clear direction, so it’s been harder to share resources among sub-groups and to feel part of a bigger community striving for a common goal.

I feel similarly! At the time being, it feels that our community has fragmented into many organizations and initiatives: Ayuda Efectiva, Riesgos Catastróficos Globales, Carreras con Impacto, EAGx LatAm, EA Barcelona. I would be keen on develo...

I have so many axes of disagreement that is hard to figure out which one is most relevant. I guess let's go one by one.

Me: "What do you mean when you say AIs might be unaligned with human values?"

I would say that pretty much every agent other than me (and probably me in different times and moods) are "misaligned" with me, in the sense that I would not like a world where they get to dictate everything that happens without consulting me in any way.

This is a quibble because in fact I think if many people were put in such a position they would try asking o...

Our team at Epoch recently updated the org's website.

I'd be curious to receive feedback if anyone has any!

What do you like about the design? What do you dislike?

How can we make it more useful for you?

An encouraging update: thanks to the generous support of donors, we have raised $95k in funds to support our activities for six more months. During this time, we plan to 1) engage with the EU trilogue on the regulation of foundation models during the Spanish presidency of the EU council, 2) continue our engagement with policy markers in Argentina and 3) release a report on global risk management in latin america.

We nevertheless remain funding constrained. With more funding we would be able to launch projects such as:

- A report on prioritizing and forecasting

I have grips with the methodology of the article, but I don't think highlighting the geometric mean of odds over the mean of probabilities is a major fault. The core problem is assuming independence over the predictions at each stage. The right move would have been to aggregate the total P(doom) of each forecaster using geo mean of odds (not that I think that asking random people and aggregating their beliefs like this is particularly strong evidence).

The intuition pump that if someone assigns a zero percent chance then the geomean aggregate breaks i...

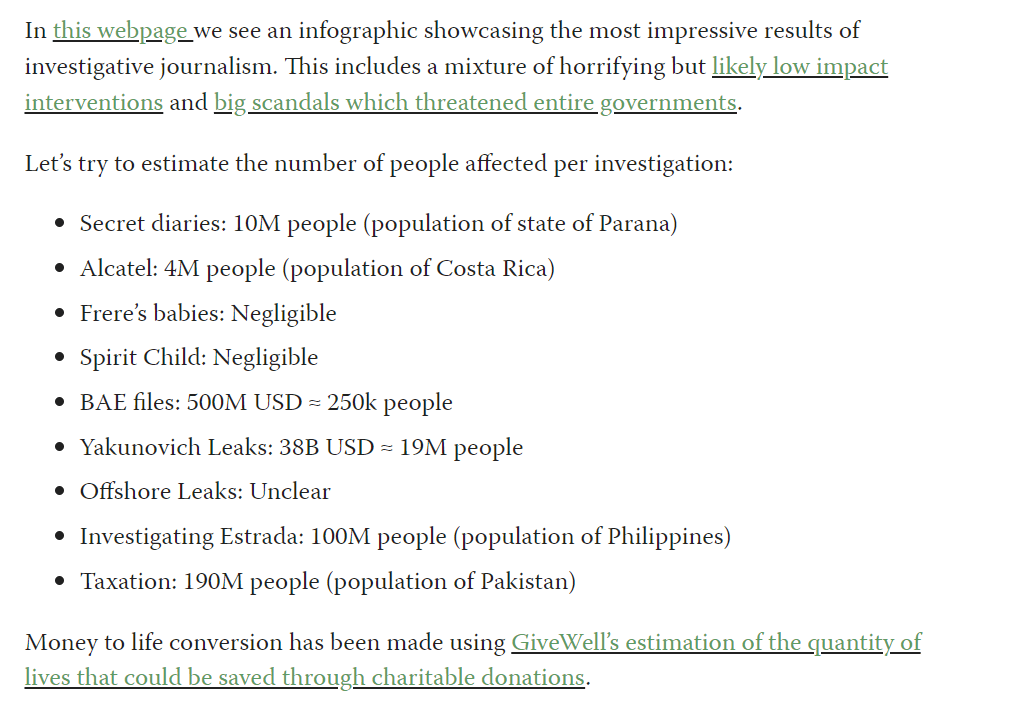

I looked into the impact of investigative journalism briefly a while back.

Here is an estimation of the impact of some of the most high profile IJ success stories I found:

How many investigative journalists exist? It's hard to say, but the International Federation of Journalists has 600k members, so maybe there exists 6M journalists worlwide, of which maybe 10% are investigative journalists (600k IJs). If they are paid like $50k/year, that's $30B used for IJ.

Putting both numbers together, that's $2k to $20k per person affected. Let's say that each person aff...

Let's say that each person affected gains 0.1 to 10 QALYs for these high profile case

This seems very generous to me. The example here that effected the largest number was about tax evasion by Pakistani MPs; even if previously they had paid literally zero tax, and as a result of this they ended up paying full income tax, it seems implausible to me that the average Pakistani citizen would be willing to trade a year of life for this research. I would guess you are over-valuing this by several orders of magnitude.

It's hard to say, but the International Federation of Journalists has 600k members, so maybe there exists 6M journalists worlwide, of which maybe 10% are investigative journalists (600k IJs). If they are paid like $50k/year, that's $30B used for IJ.

Surely from browsing the internet and newspapers, it's clear than less than 1% (<60k) of journalists are "investigative". And I bet that half of the impact comes from an identifiable 200-2k of them, such as former Pulitzer Prize winners, Propublica, Bellingcat, and a few other venues.

I don't think EAs have any particular edge in managing harassment over the police, and I find it troublesome that they have far higher standards for creating safe spaces, specially in situations where the cost is relatively low such as inviting the affected members to separate EAG events while things cool down.

On another point, I don't think this was Jeff's intention, but I really dislike the unintentional parallel between an untreated schizophrenic and the CB who asked for the restraining order. I can assure you that this was not the case here, and I thin...

I agree that this is very valuable. I would want them to be explicit about this role, and be clear to community builders talking to them that they should treat them as if talking to a funder.

To be clear, in the cases where I have felt uncomfortable it was not "X is engaging in sketchy behaviour, and we recommend not giving them funding" (my understanding is that this happens fairly often, and I am glad for it. CHT is providing a very valuable function here, which otherwise would be hard to coordinate. If anything, I would want them to be more brazen and re...

I echo the general sentiment -- I find the CHT to work diligently and be in most cases compassionate. I generally look up to the people who make it up, and I think they put a lot of thought into their decisions. From my experience, they helped prevent at least three problematic people from accruing power and access to funding in the Spanish Speaking community, and have invested 100s of hours into steering that sub community towards what they think is a better direction, including being always available for consultations.

I also think that they undervalue th...

My overall impression is that the CEA community health team (CHT from now on) are well intentioned but sometimes understaffed and other times downright incompetent. It's hard to me to be impartial here, and I understand that their failures are more salient to me than their successes. Yet I endorse the need for change, at the very least including 1) removing people from the CHT that serve as a advisors to any EA funds or have other conflict of interest positions, 2) hiring HR and mental health specialists with credentials, 3) publicly clarifying their role ...

Catherine from CEA’s Community Health and Special Projects Team here. I have a different perspective on the situation than Jaime does and appreciate that he noted that “these stories have a lot of nuance to them and are in each case the result of the CHT making what they thought were the best decisions they could make with the tools they had.”

I believe Jaime’s points 1, 2 and 3 refer to the same conflict between two people. In that situation, I have deep empathy for the several people that have suffered during the conflict. It was (and still is...

I don't know about the sway the com health team has over decisions at other funders, but at EA Funds my impression is that they rarely give input on our grants, but, when they do, it's almost always helpful. I don't think you'd be concerned by any of the ways in which they've given input - in general, it's more like "a few people have reported this person making them feel uncomfortable so we'd advise against them doing in-person community building" than "we think that this local group's strategy is weak".

I think that whilst there are valid criticisms of th...

Could you explain what you perceive as the correct remedy in instance #1?

The implication seems like the solution you prefer is having the community member isolated from official community events. But I'm not sure what work "uncomfortable" is doing here. Was the member harassing the community builder? Because that would seem like justification for banning that member. But if the builder is just uncomfortable due to something like a personal conflict, it doesn't seem right to ban the member.

But maybe I'm not understanding what your corrective action would be here?

In service of clear epistemics, I want to flag that the "horror stories" that your are sharing are very open to interpretation. If someone pressured someone else, what does that really mean? If could be a very professional and calm piece of advice, or it could be a repulsive piece of manipulation. Is feeling harassed a state that allows someone to press charges, rather than actual occurrence of harassment? (of course, I also understand that due to privacy and mob mentality, you probably don't want to share all the details; totally understandable.)

So maybe ...

While I don't have an objection to the idea of rebranding the community health team, I want to push back a bit against labelling it as human resources.

HR already has a meaning, and there is relatively little overlap between the function of community health within the EA community and the function of a HR team. I predict it would cause a lot of confusion to have a group labelled as HR which doesn't do the normal things of an HR team (recruitment, talent management, training, legal compliance, compensation, sometimes payroll, etc.) but does do things that ar...

Factor increase per year is the way we are reporting growth rates by default now in the dashboard.

And I agree it will be better interpreted by the public. On the other hand, multiplying numbers is hard, so it's not as nice for mental arithmetic. And thinking logarithmically puts you in the right frame of mind.

Saying that GPT-4 was trained on x100 more compute than GPT-3 invokes GPT-3 being 100 times better, whereas I think saying it was trained on 2 OOM more compute gives you a better picture of the expected improvement.

I might be wrong here.

In any case, it is still a better choice than doubling times.

My sense is that pure math courses mostly make it easier to do pure math later, and otherwise not make much of a difference. In terms of credentials, I would bet they are mostly equivalent - you can steer your career later through a master or taking jobs in your area of interest.

Statistics seems more robustly useful and transferable no matter what you do later. Statistics degrees also tend to cover the very useful parts of math as well, such as calculus and linear algebra.

Within Epoch there is a months-long debate about how we should report growth rates for certain key quantities such as the amount of compute used for training runs.

I have been an advocate of an unusual choice: orders-of-magnitude per year (abbreviated OOMs/year). Why is that? Let's look at other popular choices.

Doubling times. This has become the standard in AI forecasting, and its a terrible metric. On the positive, it is an intuitive metric that both policy makers and researchers are familiar with. But it is absolutely horrid to make calculations. For exa...

Agree that it's easier to talk about (change)/(time) rather than (time)/(change). As you say, (change)/(time) adds better. And agree that % growth rates are terrible for a bunch of reasons once you are talking about rates >50%.

I'd weakly advocate for "doublings per year:" (i) 1 doubling / year is more like a natural unit, that's already a pretty high rate of growth, and it's easier to talk about multiple doublings per year than a fraction of an OOM per year, (ii) there is a word for "doubling" and no word for "increased by an OOM," (iii) I think the ari...

I wrote a report on quantum cryptanalysis!

The TL;DR is that it doesn't seem particularly prioritary, since it is well attended (the NIST has an active post quantum cryptography program) and not that impactful (there are already multiple proposals to update the relevant protocols, and even if these pan out we can rearrange society around them).

I could change my mind if I looked again into the NIST PQC program and learned that they are significantly behind schedule, or if I learned that qauntum computing technology has significantly accelerated since.

This is a tough question, and I don't really know the first thing about patents, so you probably should not listen to me.

My not very meditated first instinct is that one would set up a licensing scheme so that you commit to granting a use license to any manufacturer in exchange for a fee, preferrably as a portion of the revenue to incentivize experimentation from entrepreneurs.

Of course, this might dissuade the Tech Transfer office or other entrepreneurs from investing in a start-up if they won't be guaranteed exclusive use.

I suppose that it would be relev...

Just a quick comment for the devs - I saw the "More posts like this" lateral bar and it felt quite jarring. I liked it way better when it was at the end. Having it randomly in the middle of a post felt distracting and puzzling.

ETA: the Give feedback button does not seem to work either. Also its purpose its unclear (give feedback on the post selection? on the feature?)

A pattern I've recently encountered twice in different contexts is that I would recommend a more junior (but with relevant expertise) staff for a talk and I've been asked if I could not recommend a more "senior" profile instead.

I thought it was with the best of intentions, but it still rubbed me the wrong way. First, this diminishes the expertise of my staff, who have demonstrated mastery over their subject matter. Second, this systematically denies young people of valuable experience and exposure. You cannot become a "senior" profile without access to these speaking opportunities!

Please give speaking opportunities to less experienced professionals too!

It's really hard to say.

I am concerned that many projects are being set up in a way that does not follow good governance practices and leaves their members exposed to conflict and unpleasant situations. I would want to encourage people to try to embed themselves in a more formal structure with better resources and HR management. For example, I would be excited about community builders over the world setting a non-profit governance structure, where they are granted explicit rights as workers that encompass mental healthcare and a competent HR department to ...

I'm pretty into the idea of putting more effort into concrete areas.

I think the biggest reason for is one which is not in your list: it is too easy to bikeshed EA as an abstract concept and fool yourself into thinking that you are doing something good.

Working on object level issues helps build expertise and makes you more cognizant of reality. Tight feedback loops are important to not delude yourself.

I agree that the potential for this exists, and if it was an extended practice it would be concerning. Have you seen people who claim to have a good forecasting record engage with pseudonym exploitation though?

My understanding is that most people who claim this have proof records associated to a single pseudonym user in select platforms (eg Metaculus), which evades the problem you suggest.

Ranking the risks is outside the scope of our work. Interpreting the metaculus questions sounds interesting, though it is not obvious how to disentangle the scenarios that forecasters had in mind. I think the Forecasting Research Institute is doing some related work, surveying forecasters on different risks.

I basically agree with the core message. I'll go one step further and say that existential risk has unnecessary baggage - as pointed out by Carl Shulman and Elliot Thornley the Global Catastrophic Risk and CBA framing rescues most of the implications without resorting to fraught assumptions about the long term future of humanity.

Double-checking research seems really important and neglected. This can be valuable even if you don't rerun the experiments and just try to replicate the analyses.

A couple of years ago, I was hired to review seven econometric papers, and even as an outsider to the field it was easy to contribute to find flaws and assess the strength of the papers.

Writing these reviews seems like a great activity, especially for junior researchers who want to learn good research practices while making a substantial contribution.

I agree! I think this is an excellent opportunity to shape how AI regulation will happen in practice.

We are currently working on a more extensive report with recommendations to execute the EU AI act sandbox in Spain. As part of this process, we are engaging some relevant public stakeholders in Spain with whom we hope to collaborate.

So far, the most significant barrier to our work is that we are running out of funding. Other than that, access to the relevant stakeholders is proving more challenging than in previous projects, though we are still early ...

It is specific to the human-generated text.

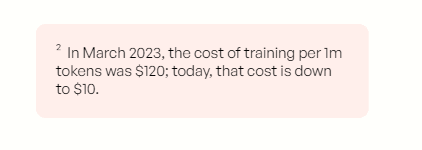

The current soft consensus at Epoch is that data limitations will probably not be a big obstacle to scaling compared to compute, because we expect generative outputs and data efficiency innovation to make up for it.

This is more based on intuition than rigorous research though.

This post argues that:

- Bostrom's micromanagement has led to FHI having staff retention problems.

- Under his leadership, there have been considerable tensions with Oxford University and a hiring freeze.

- In his racist apology, Bostrom failed to display tact, wisdom and awareness.

- Furthermore, this apology has created a breach between FHI and its closest collaborators and funders.

- Both the mismanagement of staff and the tactless apology caused researchers to renounce.

While I'd love for FHI staff to comment and add more context, all of this matches my impressi...

Ah, in case there is any confusion about this I am NOT leaving Epoch nor joining Mechanize. I will continue to be director of Epoch and work in service of our public benefit mission.