I think many EAs have a unique view about how one altruistic action affects the next altruistic action, something like this: the impact of altruistic acts follows a power law, and altruistic acts take time/energy/willpower; thus, it's better to conserve your resources for the most important altruistic actions (e.g., career choice) and not sweat the other actions.

However, I think this is a pretty simplified and incorrect model that leads to the wrong choices being taken. I wholeheartedly agree that certain actions constitute a huge % of your impact. In my case, I do expect my career/job (currently running Charity Entrepreneurship) will be more than 90% of my lifetime impact. But I have a different view on what this means for altruism outside of career choices. I think that being altruistic in other actions not only does not decrease my altruism on the big choices but actually galvanizes them and increases the odds of me making an altruistic choice on the choices that really matter.

One way to imagine altruism is much like other personality characteristics; being conscientious in one area flows over to other areas, working fast in one area heightens your ability to work faster in others. If you tidy your room, it does not make you less likely to be organized in your Google Docs. Even though the same willpower concern applies in these situations and of course, there are limits to how much you can push yourself in a given day, the overall habits build and cross-apply to other areas instead of being seen as in competition. I think altruism is also habit-forming and ends up cross-applying.

Another way to consider how smaller-scale altruism has played out is to look at some examples of people who do more small-scale actions and see how it affects the big calls. Are the EAs who are doing small-scale altruistic acts typically tired and taking a less altruistic career path or performing worse in their highly important job? Anecdotally, not really. The people I see willing to weigh altruism the highest in their career choice comparison tend to also have other altruistic actions they are doing (outside of career). This, of course, does not prove causality, but it is an interesting sign.

Also anecdotally, I have been in a few situations where the altruistic environment switched from one that values small-scale altruism to one that does not, and people changed as a result (e.g., changing between workplaces or cause areas). Although the data is noisy, to my eye the trend also fits the ‘altruism as a galvanizing factor’ model. For example, I do not see people's work hours typically go up when they move from an area that values small-scale altruism to one that does not.

Another way this might play out is connected to identity and how people think of a trait. If someone identifies personally with something (e.g., altruism), they are more likely to enact it in multiple situations; it is not just that altruism is required in this case, it is a part of who you are (see my altruism as a central purpose post for more on thinking this way). I think this factor that binds altruism to an identity can be reinforced by small-scale altruistic action but can also affect the most important choices.

Some examples of altruistic actions I expect to be superseded in importance by someone's career choice in most cases but still worth doing for many 50%+ EAs:

- Donating 10% (even of a lower salary/earnings level)

- Being Vegan

- Non-life-threatening donations (e.g., blood donations, bone marrow donations)

- Spending less to donate more

- Working more hours at an altruistic job

- Becoming an organ donor

- Asking for donations during some birthdays/celebrations.

- Getting your friends and family birthday cards / doing locally altruistic actions

- Not violating common sense morality (e.g., don’t lie, steal) on a whim or for a precarious reason. (This one is worth a whole other blog post).

Another benefit I did not even talk about is that costly virtue signalling can often be a more true reflection of someone's altruism. Personally, I am way more skeptical of someone pitching a career path as the most altruistic one if they have an extremely limited altruistic track record, and I think this view both in EA and the broader world is pretty common.

A darker edge to this argument I do not cover here is how many non-altruistic things have been done for “the greater good” where in fact this argument was just used as justification to do something for one's own self-interest. EA has been accused of this, and in my view not entirely unjustifiably in the past.

Overall, I would love to spread the idea of the model of altruism sharpening altruism a little bit more, as I think it’s a useful consideration when thinking about altruistic trade-offs.

I at least personally want to provide some datapoints against this.

I think "sacrifices for the sake of sacrifices" has felt to me like something that at many points has substantially reduced my motivation to do other altruistic things, especially when combined with external social obligations or expectations (as has been the case with some of the veganism and donation stuff).

I also know many other people who feel like instincts towards virtue signaling and associated overstretching have substantially soured them on engaging with the EA community and also, more broadly, on being altruistic with their efforts.

My crude model is that there are two forces pushing in opposite directions, and which effect wins out depends on the person and their circumstances.

If altruistic actions are ego-syntonic, they can be a source of emotional energy to fuel more altruism. And if you're operating under capacity, the gains may well be ~free.

If the action isn't ego-syntonic or otherwise energizing you, that effect is lost. It's just a cost you pay. I expect this is worse for things people do out of social pressure or a sense of obligation.

Meanwhile, doing stuff takes resources: physical energy, intellectual energy, money... If one of those is your limiting reagent, and a minor altruistic action requires it, then that minor action trades off against your big ones. Even if it is ego-syntonic and increases your available emotional energy, it's still a trade-off because emotional energy wasn't your limiting reagent.

Separately, many people aren't great at optimizing. Fact-checking leisure reading (including social media) trades off against my core work. But "fact-checking only the most important projects, none for lesser projects" isn't an available stance for me. I can dial it up or down somewhat, but if I push away the instinct to check unless I'm sure something is important too often, I'll lose rigor in my core work as well. So that's just a tax I have to pay.

I do tend to think that most people's limiting factor is energy instead of time. E.g. it is rare to see someone work till they literally run out of hours on a project vs needing a break due to feeling tired. Even people working 12 hours a day, I still expect they run out of energy before time, at least long term. I would not typically see emotional energy as my limiting factor, but I do think it's basically always energy (a variable typically positively affected by altruism in other areas) vs. time or money (typically negatively affected).

This assumption seems totally out of left field to me. I agree altruism can increase energy, but in many other cases it uses it up.

Another factor is that recruitment to the EA community may be more difficult if it's perceived as very demanding.

I'm also not convinced by the costly signalling-arguments discussed in the post. (This is from a series of posts on this topic.)

(1) I think Joey's right, and I'll phrase the issue in this way - a lot of EAs underrate the impact of habit-formation and overrate the extent to which most of your choices even require active willpower. Your choices change who you are as a person, so what was once hard becomes easy.

I've always given at least 10% to effective charities, and now it's just something I do; it's barely something I have to think about, let alone something that requires some heroic exertion of will. And while I'm not vegan, I am successfully eating less meat even on a largely keto diet, and what surprised me is how much easier it is than I thought it would be.

(2) Let's accept for the sake of argument that there is a lot of heterogeneity, such that for some people the impact of habit formation is weak and it is psychologically very difficult for them to consistently adhere to non-job avenues to impact (e.g. donating, being vegan, etc). Even so, how would one know in advance? Why not test it out, to see if you're in the group for which habit formation impact is high and these sacrifices are easy, or if you are in the other group?

Surely it's worth doing - the potential impact is significant, and if it's too hard you can of course stop! But many people will be surprised, I think, at just how easy certain things are when they become part of your daily routine.

I mean, yes, but I think it's at least as true that a lot of people overestimate the cost of "low impact" choices, for some people. People are just pretty variable, and however badly people understand themselves, strangers understand them even less. It's fantastic that you donate 10% and don't find it to be a hardship, but it's obviously dependent on a bunch of life circumstances that don't hold for everyone. They're not uncommon in EA either, but I think it's pretty alienating for people who aren't in that position to be told it's just an issue of habit formation.

I do think there's a problem where people worry that doing less impactful altruistic interventions like donating blood is low status, like announcing you don't have better things to do. That worry isn't unfounded, although I think it might be exaggerated. I think this is bad for lots of reasons and worth fighting against.

I think the model I would suggest is indeed close to what Joel is saying: a try-it-out system as opposed to guessing a priori how you will be affected by things. More specifically, track your work hours/productivity (if you think that is where the bulk of your impact is coming from) and see whether, for example, donating blood on the weekend has a negative, positive, or no effect on them. I think that my output has gotten higher over time, in part, due to pretty active testing and higher amounts of measurement. - Related post
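As a rough illustration of the self-tracking idea above, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the function name `compare_weeks`, the choice of weekly hours as the productivity measure, and the made-up numbers. It only compares averages, so it cannot separate causation from coincidence, especially with just a few weeks of data.

```python
from statistics import mean


def compare_weeks(weekly_hours, did_action):
    """Compare average work hours in weeks with and without a small altruistic action.

    weekly_hours: hours worked in each tracked week (hypothetical self-reported data).
    did_action:   one boolean per week, True if the action (e.g. donating blood) happened.
    Returns (mean_with, mean_without, difference).
    """
    if len(weekly_hours) != len(did_action):
        raise ValueError("need exactly one flag per tracked week")
    with_action = [h for h, flag in zip(weekly_hours, did_action) if flag]
    without_action = [h for h, flag in zip(weekly_hours, did_action) if not flag]
    if not with_action or not without_action:
        raise ValueError("need at least one week of each kind to compare")
    m_with, m_without = mean(with_action), mean(without_action)
    return m_with, m_without, m_with - m_without


# Made-up numbers, purely to show the call shape.
hours = [42, 45, 40, 44, 43, 41]
donated = [False, True, False, True, False, False]
m_with, m_without, diff = compare_weeks(hours, donated)
print(f"with: {m_with}, without: {m_without}, difference: {diff}")
```

A positive difference would be weak evidence for the galvanizing model and a negative one weak evidence for the trade-off model, for that one person only.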

This is perhaps testable by an RCT among earn-to-givers, because we could quantitatively measure their impact more easily.

Perhaps one group is coached on taking more small altruistic actions, the other is coached to preserve their resources for the big moments / decisions. I think six months to one year with yearly followups would be a suitable length.

I think the limiting factor would be the number of earn-to-givers willing to take part. Are there any other ways this could be tested objectively? It does seem very important to know, and it seems there's little compelling evidence either way.
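For concreteness, the random assignment step of the two-group design described above might look like the sketch below. The function name `assign_arms`, the arm labels, and the fixed seed are all hypothetical choices; a real trial would also need consent, pre-registration, and an agreed outcome measure, none of which are shown here.

```python
import random


def assign_arms(participant_ids, seed=0):
    """Randomly split participants into two coaching arms of (near) equal size.

    "small_actions":      coached to take more small altruistic actions.
    "conserve_resources": coached to save resources for the big decisions.
    The seed only makes the shuffle reproducible for auditing.
    """
    rng = random.Random(seed)
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {
        "small_actions": shuffled[:half],
        "conserve_resources": shuffled[half:],
    }


# Hypothetical participant IDs 0..9.
arms = assign_arms(range(10))
print({name: sorted(ids) for name, ids in arms.items()})
```

Donations (or another measurable outcome) would then be compared between the two arms at the end of the coaching period and at each follow-up.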

How much of this is lost by compressing to something like: virtue ethics is an effective consequentialist heuristic?

I've been bought into that idea for a long time. As Shaq says, 'Excellence is not a singular act, but a habit. You are what you repeatedly do.'

We can also make analogies to martial arts, music, sports, and other practice/drills, and to aspects of reinforcement learning (artificial and natural).

It doesn't just say that virtue ethics is an effective consequentialist heuristic (if it says that) but also has a specific theory about the importance of altruism (a virtue) and how to cultivate it.

There's not been a lot of systematic discussion on which specific virtues consequentialists or effective altruists should cultivate. I'd like to see more of it.

@Lucius Caviola and I have written a paper where we put forward a specific theory of which virtues utilitarians should cultivate. (I gave a talk along similar lines here.) We discuss altruism but also five other virtues.

Thanks for writing this piece. Do you have any non-anecdotal evidence to support this argument? For example, does an intervention to get people to donate more also cause them to be more likely to give blood? I think this is one area where it’s very difficult to have correct intuitions so outside evidence, even with all the problems of the psychology literature, can be especially helpful.

I would like to point out that this is one of those things where n=1 is enough to improve people's lives (e.g., the placebo effect works in your favor), in the same way that I can improve my life by taking a weird supplement that isn't scientifically known to work but helps me when I take it.

For what it's worth, my life did seem to start going better (I started to feel more in touch with my emotional side) after becoming vegan.

Less anecdotal but only indirectly relevant and also hard to distinguish causation from correlation:

Ctrl+f for "Individuals who participate in consumer action are more likely to participate in other forms of activism" here

https://www.sentienceinstitute.org/fair-trade#consumer-action-and-individual-behavioral-change

Off the top of my head, it seems like "foot in the door" factors could support the OP's thesis (people are more likely to go along with larger requests after already doing smaller ones for the same person/cause, apparently related to cognitive dissonance reduction). Moral licensing effects would seem to go the other way. It would be interesting to hear from an expert in the social sciences which effect tends to be stronger.

I think I generally agree with the idea that "making altruism a habit will probably increase your net impact", and that thinking of altruistic effort as a finite resource to spend is inaccurate.

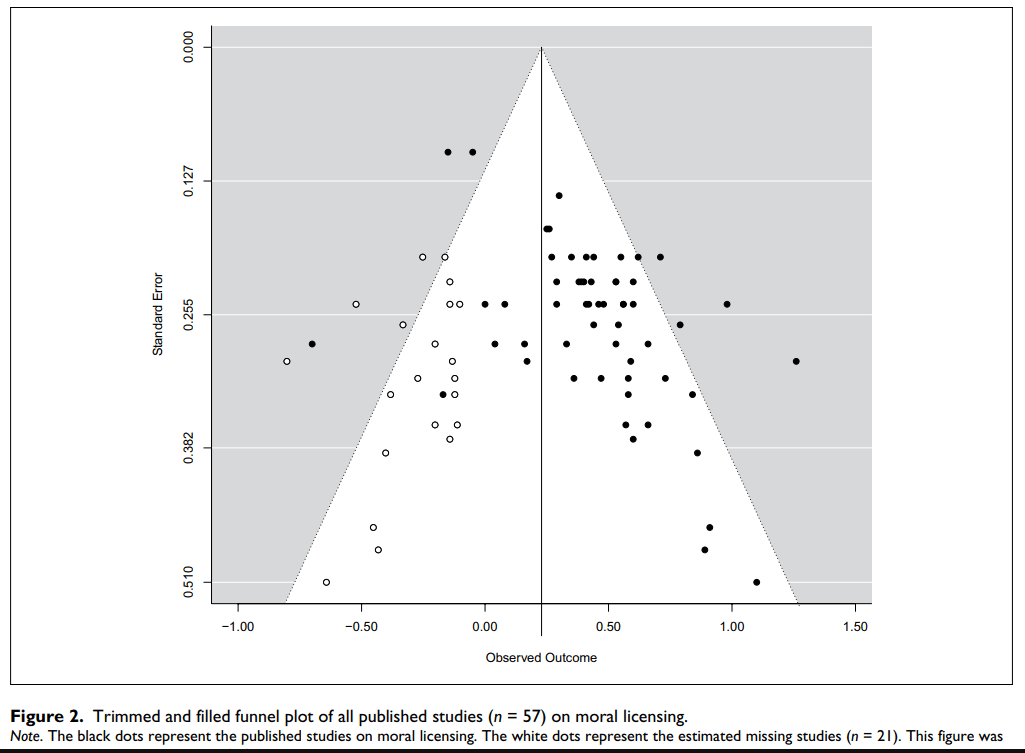

However, I think there is a force, "moral licensing" (https://journals.sagepub.com/doi/pdf/10.1177/0146167215572134), pushing in the opposite direction of habit formation.

My personal recommendation is that people should make altruism a habit where it does not feel like a large personal sacrifice. For almost everyone this will include generally acting morally under virtue ethics and deontology too, and include things like donating blood and being an organ donor. But depending on the person this can still include donating 10%, going vegan or at least reducing meat consumption, etc.

While the idea of moral licensing makes sense to me in theory, I'm not too persuaded by the empirical evidence, at least from the cited meta-analysis - the publication bias is enormous, as the authors note.

Glad you mentioned 'moral licensing' -- which is something EAs really need to be aware of!

Did they go down, or stay the same?

In most cases, the same or a minor decrease.

Crosspost to LessWrong?