Note: This is a duplicate of this LessWrong post.

The LISA Team consists of James Fox, Mike Brozowski, Joe Murray, Nina Wolff-Ingham, Ryan Kidd, and Christian Smith.

LISA’s Advisory Board consists of Henry Sleight, Jessica Rumbelow, Marius Hobbhahn, Jamie Bernardi, and Callum McDougall.

Everyone has contributed significantly to the founding of LISA, believes in its mission & vision, and assisted with writing this post.

TL;DR: The London Initiative for Safe AI (LISA) is a new AI Safety research centre. Our mission is to improve the safety of advanced AI systems by supporting and empowering individual researchers and small organisations. We opened in September 2023, and our office space currently hosts several research organisations and upskilling programmes, including Apollo Research, Leap Labs, MATS extension, ARENA, and BlueDot Impact, as well as many individual and externally affiliated researchers.

LISA is open to different types of membership applications from other AI safety researchers and organisations.

- (Affiliate) members can access talks by high-profile researchers, workshops, and other events. Past speakers have included Stuart Russell (UC Berkeley, CHAI), Tom Everitt & Neel Nanda (Google DeepMind), and Adam Gleave (FAR AI), amongst others.

- Amenities for LISA Residents include 24/7 access to private & open-plan desks (with monitors, etc), catering (including lunches, dinners, snacks & drinks), and meeting rooms & phone booths. We also provide immigration, accommodation, and operational support; fiscal sponsorship & employer of record (upcoming); and regular socials & well-being benefits.

Although we host a limited number of short-term visitors for free, we charge long-term residents to cover our costs at varying rates depending on their circumstances. Nevertheless, we never want financial constraints to be a barrier to leading AI safety research, so please still get in touch if you would like to work from LISA’s offices but aren't able to pay.

If you or your organisation is interested in working from LISA, please apply here.

If you would like to support our mission, please visit our Manifund page.

Read on for further details about LISA’s vision and theory of change. After a short introduction, we motivate our vision by explaining why LISA is urgently needed. Next, we summarise our track record and unpack our plans for the future. Finally, we discuss how we mitigate risks that might undermine our theory of change.

Introduction

London stands out as an ideal location for a new AI safety research centre:

- Frontier Labs: It is the only city outside of the Bay Area with offices from all major AI labs (e.g., Google DeepMind, OpenAI, Anthropic, Meta)

- Concentrated and underutilised talent (e.g., researchers & software engineers), many of whom are keen to contribute to AI safety but are reluctant or unable to relocate to the Bay Area due to visas, partners, family, culture, etc.

- UK Government connections: The UK government has clearly signalled its concern about AI safety by hosting the first AI Safety Summit, establishing an AI Safety Institute, and introducing favourable immigration requirements for researchers. Moreover, policy-makers and researchers are all within 30 minutes of one another.

- Easy transport links: LISA is ideally located to act as a short-term base for visiting AI safety researchers from the US, Europe, and other parts of the UK who want to visit researchers (and policy-makers) in companies, universities, and governments in and around London, as well as those in Europe.

- MATS cohorts: Scholars and mentors from the MATS program regularly come to London because of the above (in particular, the favourable immigration requirements compared with the US).

Despite this favourable setting, so far little community infrastructure investment has been made. Therefore, our mission is to build a home for leading AI safety research in London by incubating individual AI safety researchers and small organisations. To achieve this, LISA will:

- Provide a research environment that is supportive, productive, and collaborative, where a diversity of ideas can be refined, challenged, and advanced;

- Offer financial stability, collective recognition, and accountability to individual researchers and new small organisations;

- Cultivate a London home for professional AI safety research by leveraging London's strategic advantages and building upon our existing ecosystem and partnerships;

- Foster epistemic quality and diversity amongst new AI safety researchers and organisations by facilitating mentorship & upskilling programmes and encouraging exploration of numerous AI safety research agendas.

LISA stands in a unique position to enact this vision. In 2023, we founded an office space ecosystem, which now contains organisations such as Apollo Research, Leap Labs, MATS, ARENA, BlueDot Impact, and many individual and externally affiliated researchers. We are poised to capitalise on the abundance of motivated and competent talent in London and the supportive environment provided by the UK government and other local organisations. Our approach is not just about creating a space for research; it is about building a community and a movement that can significantly improve the safety of advanced AI systems.

The Urgency

- Given the scale of the AI safety problem, traditional institutions are not covering all of the work that needs to be done.

- AI safety is a pre-paradigmatic field lacking a standardised framework or established paradigms. This demands that a diverse range of research agendas be explored and developed. Indeed, the most useful agendas may not yet exist.

- Whilst some of these agendas are being worked on at major tech companies, in academia, or in government labs, almost all AI safety agendas remain significantly underfunded, underexplored, and underdeveloped.

- Traditionally, academia has been the main player in conducting fundamental research for the public good. However, the recent pace and scope of AI capabilities advancement is an out-of-distribution event – unexpected, different in character, and without historical precedent. Therefore, innovative new structures like the UK Government's AI Safety Institute and incubators for AI safety research talent, early-stage organisations and new AI safety agendas, such as Constellation, FAR Labs, and LISA, are necessary to complement the AI safety research conducted in universities.

- There is a need for more high-quality AI safety researchers and organisations.

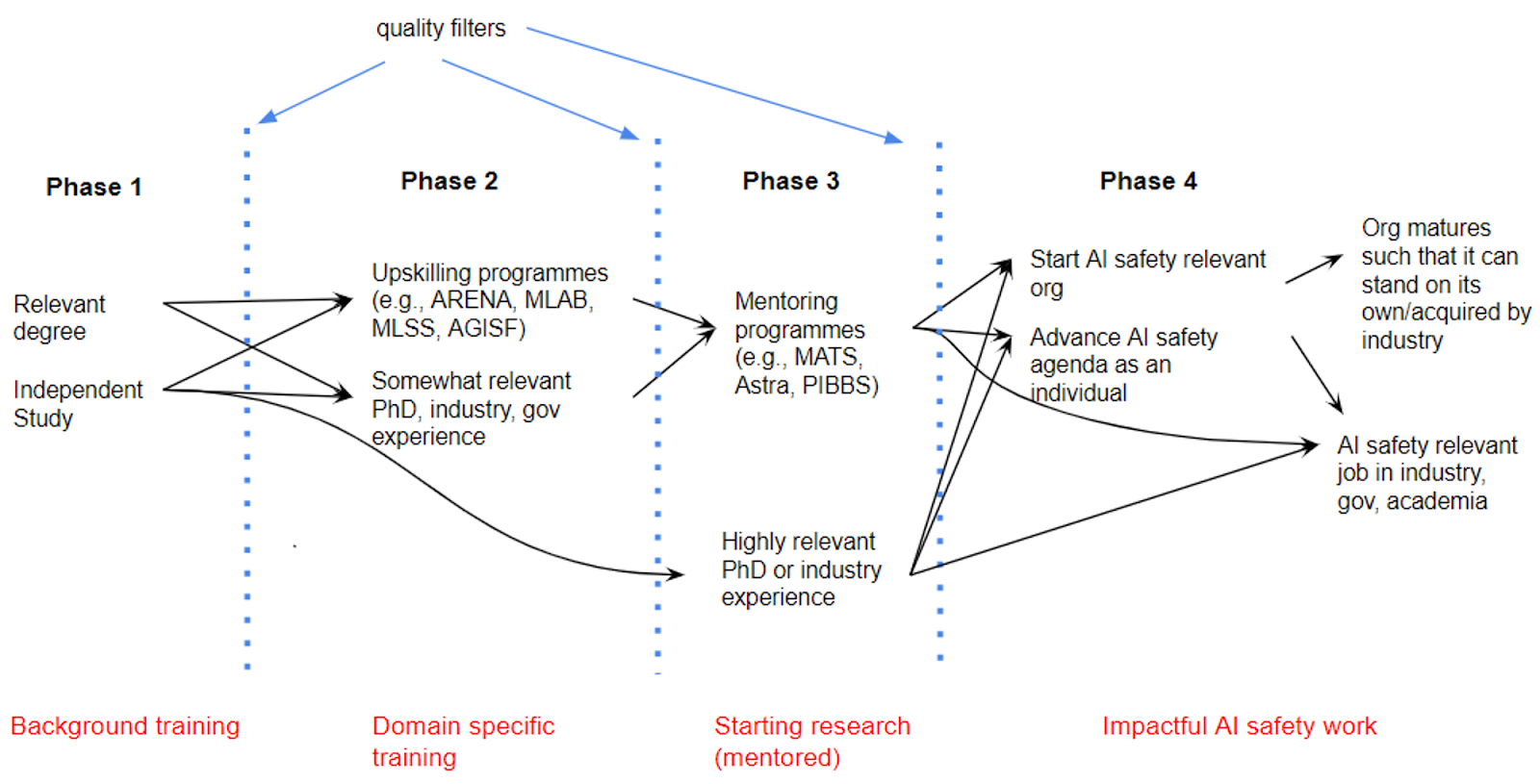

This figure illustrates the progression from motivated and talented individuals to researchers producing high-impact AI safety work. LISA's activities are designed to address the critical bottleneck between Phase 3 and Phase 4, because it is one that talented individuals find difficult to overcome on their own.

- For example, at the end of MATS or highly relevant PhD programmes, many strong scholars still struggle to secure AI safety roles because there are few job opportunities in existing organisations or academia relative to the magnitude of the AI safety challenge.

- Individual researchers working alone are less productive. Therefore, support should mostly prioritise those with established mentors or research collaborators, or target those with an exceptional research track record and a mentality that will let them work well when introduced to new collaborators. Even so, independent work is daunting, because these individual and externally affiliated researchers lack a supportive network and community to enhance their motivation and challenge each other’s ideas.

- We should also be helping incubate new AI safety organisations that face many barriers:

- Networking and growth limitations: Finding co-founders, early hiring, organisational growth, and research collaboration is challenging without having large existing networks.

- Legal and administrative resistance: Promising researchers and talented would-be entrepreneurs face many distractions from their core research (e.g., setting up the legal structures to receive grants and funding as an organisation or non-profit), which could be avoided if an existing organisation (such as LISA) were to provide fiscal sponsorship.

- Work environment mismatch: Regular office spaces are often not conducive to the needs and focus of AI safety research, and it can be hard to find an environment with like-minded individuals (e.g., security concerns about certain research conversations, finding someone to help set up a package in PyTorch, or arranging a 10-minute chat with someone to red-team your latest interpretability idea). Indeed, there is currently no other office space in London that hosts only AI safety professionals.

- Recognition and scaling challenges: Gaining recognition in the field and attracting speakers or hosting workshops is difficult without a certain level of scale and visibility. Emerging organisations often struggle to create impactful events that draw attention from the broader AI safety community.

Our Vision

Our mission is to be a professional research centre that improves the safety of advanced AI systems by supporting and empowering individual researchers and small organisations. We do this by creating a supportive, collaborative, and dynamic research environment that hosts members pioneering a diversity of AI safety research.

In the next two years, LISA’s vision is to:

- Be a premier AI safety research centre which has housed significant contributions to AI safety research due to its collaborative ecosystem of small organisations and individual researchers

- Have supported the maturation of member organisations by increasing their research productivity, impact, and recognition.

- Have positively influenced the career trajectories of LISA alumni, who will have transitioned into key positions in AI safety across industry, academia, and government sectors as these opportunities emerge and develop over time. Some of these would otherwise have been pursuing non-AI safety careers. Alumni will maintain links with LISA and its ecosystem, e.g., research collaborations & mentoring, speaking, and career events.

- Have advanced a diversity of AI safety research agendas and uncovered novel agendas that significantly improve our understanding of how and why advanced AI systems work, or our ability to control and align them.

- Have nurtured new AI safety talent and organisations by serving as a pivotal entry point for motivated newcomers to the field, future leaders in AI safety research, and new impactful AI safety organisations.

Our Track Record

We have been open since September 2023. In that time:

- In addition to our member organisations, we are also home to remote-working AI safety researchers affiliated with the University of Oxford, Imperial College London, University of Cambridge, University of Edinburgh, MILA, Metaculus, Conjecture, Anthropic, PIBBSS, and Lakera (amongst others), as well as several funded independent researchers.

- Recent AI safety papers featuring authors primarily working from the LISA offices include Copy Suppression: Comprehensively Understanding an Attention Head, How to Catch an AI Liar: Detection in Black-box LLMs by Asking Unrelated Questions, Sparse Autoencoders Find Highly Interpretable Features in Language Models, The Reversal Curse: LLMs trained on "A is B" fail to learn "B is A" and Taken out of context: On measuring situational awareness in LLMs.

- LISA Residents have helped develop new AI safety agendas as part of the MATS extension program, including sparse autoencoders for mechanistic interpretability, conditioning predictive models, developmental interpretability, defining situational awareness, formalising natural abstractions, and causal foundations.

- LISA Residents have been hired by leading external organisations to research AI safety, including Anthropic, Google DeepMind, the UK AI Safety Institute (formerly the UK Frontier AI Taskforce), and various leading universities.

Our Plans

We will focus on activities that yield four outputs:

- Provide a research environment that is supportive, productive, and collaborative: an office space that is a “melting pot” of epistemically diverse AI safety researchers working on collaborative research projects. LISA will offer comprehensive operational and research support, as well as amenities such as workstations, meeting rooms & phone booths, and catering (including snacks & drinks).

- Offer financial stability, collective recognition, and accountability to individual researchers and new small organisations by subsidising office and operations overhead, providing fiscal sponsorship of new AI safety organisations, offering legal & immigration support, and granting annual LISA Research Fellowships to support and mature individuals who have already shown evidence of high-impact AI safety research (as part of the MATS Program, Astra Fellowship, a PhD and/or postdocs, or otherwise).

- Cultivate a leading centre for AI safety research in London by admitting new member organisations and LISA Residents through a rigorous selection process (relying on the advisory board) that assesses alignment with LISA’s mission, existing research competence, and cultural fit. We will host prominent AI safety researchers as speakers, hold workshops and other professional AI safety events, and strengthen our partnerships with similar centres in the US (e.g., Constellation, FAR AI, and CHAI), the UK (e.g., Trajan House), and likely new centres elsewhere, as well as with the UK Government’s AI Safety Institute and AI safety researchers in industry.

- Foster epistemic quality and diversity amongst new AI safety researchers & organisations by seasonally hosting established and proven domain-specific mentorship and upskilling programmes such as MATS and ARENA.

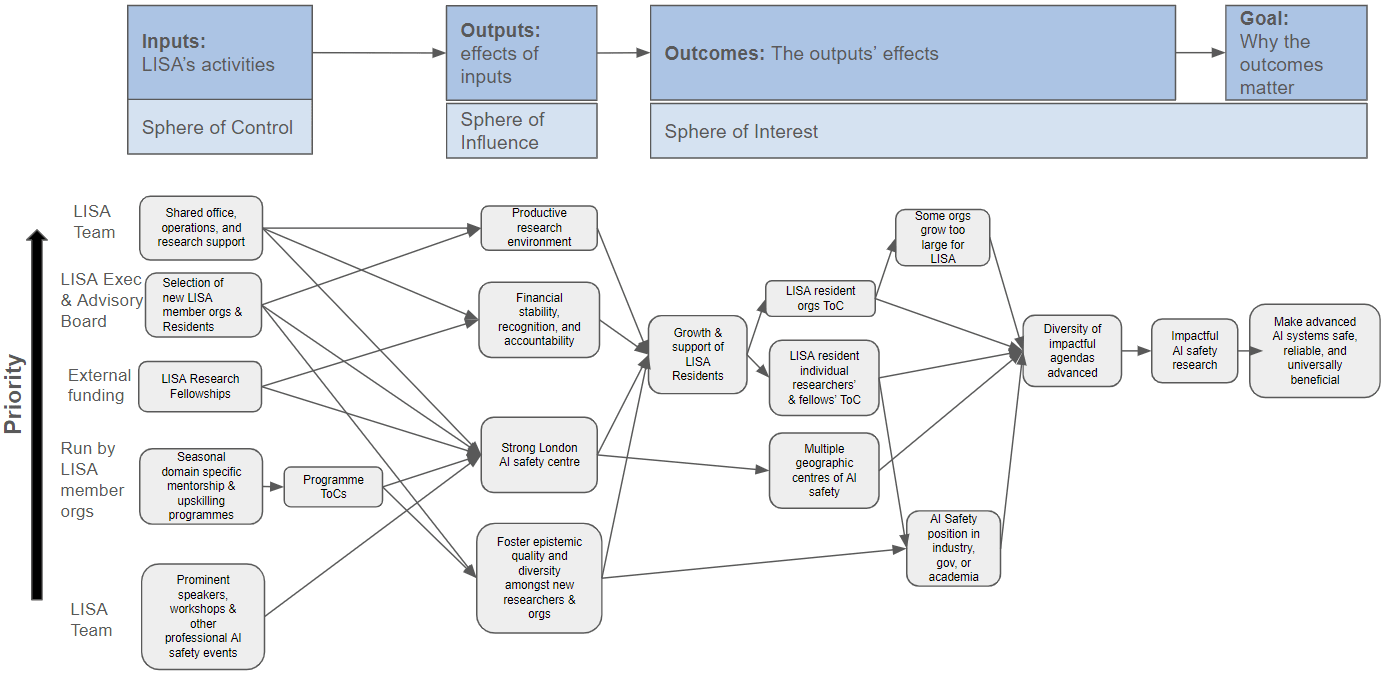

These outputs advance our theory of change:

Risks and Mitigations

The supported individual researchers & organisations might underperform expectations.

Mitigations:

- Support a diversity of individuals, organisations, and agendas: It is hard to know which AI safety agendas will be most promising, so we will support a wide range of research agendas to decorrelate failure. We will also guard against groupthink and foster a culture of humility and curiosity. If we are diversifying enough, we expect some fraction of ideas harboured here to fail.

- Expert evaluation: LISA utilises the diverse technical AI safety expertise of the LISA team and advisory board (consisting of member org representatives) to evaluate membership admissions. We draw from experience selecting mentors and mentees for MATS and solicit advice and references from MATS mentors, PhD supervisors, or industry employers (when applicable). Finally, we will predominantly admit as Residents only those with a track record of quality AI safety research (or a convincing reference) and evidence of an epistemic attitude of humility and curiosity.

- Regular impact analysis: We will routinely evaluate LISA Residents’ impact to ensure their work is still best supported at LISA. LISA Research Fellows will attend biweekly meetings with LISA’s Research Director for constructive critique and mentorship and will be expected to report their progress every 3 months.

- Adaptive approach: LISA will regularly and rigorously gather insights into which areas of AI safety research are viewed as more or less impactful. We will then update and adapt our research support priorities accordingly.

We might not be able to attract and retain top potential AI safety researchers.

Mitigations:

- Ensure that member organisations and residents value LISA.

- We have established an advisory board consisting of member organisation leads. We have frequent meetings to ensure they feel heard, their needs are met, and LISA is the most attractive home for them.

- LISA will continue evolving to accommodate its resident individuals’ and member organisations' growing needs and ambitions. Indeed, this is part of our motivation for moving to a larger office space.

- Attractive proposition: Our activities, outlined in our theory of change, will make LISA an appealing option for top researchers who might also have lucrative non-AI safety job offers. The current calibre of researchers and organisations based at LISA indicates waiting demand (even though we have yet to advertise ourselves beyond word of mouth).

- Facilitating industry roles: “If AI Safety research impact is power law distributed, won’t the best researchers all find jobs in industry instead of LISA anyway?”

- We view securing industry AI safety jobs for LISA residents as aligned with our mission. Since the best talent will also have great non-AI Safety job offers, providing a productive and attractive home is important.

- Many agendas are currently not supported in industry. We think it is important to support those advancing otherwise neglected research agendas.

Given the UK Government’s AI Safety Institute and other initiatives, we might be redundant.

This is a misconception. The AI Safety Institute (AISI) has a very different focus, concentrating on evaluating the impact and risks of frontier models. By contrast, LISA will be a place where fundamental research can happen. We also house organisations like Apollo Research, a partner of AISI, so the relationship is complementary and collaborative. With regard to other initiatives, we think that the existence of more safety institutes is good for AI safety and that it is good for individuals to have a choice between a range of options.

Great to see this.

Three concerns on the Theory of Change, and a suggestion:

1. ToCs tend to be neglected once written (hopefully not by y'all!), especially as they show a possible route to impact but not timings and "who all does what and by when" to achieve the shared goal by a particular date. (In this case, that could be the date you realistically expect bad or misaligned ASI to entrench.) Have you considered doing a Critical Path plan (aka "Critical Pathway") as NASA did for Apollo?

2. Another problem is that the last stages of your plan rely on a single channel, with no redundancy. A critical path plan, which allows more than one route to achieving the goal of ASI safety implementation, could be life-saving?!

3. AI development is moving very fast, and this ToC would seem to be (a) quite long in time and (b) numerous in stages, which means lots of potential delays and failure modes, when

If you were in dialogue with any of ....

.... and doing any of the following:

.... you might achieve prevention, preparedness or response capacity much sooner?

I'm happy to discuss further by phone, or link you in to some of those orgs.

Executive summary: The London Initiative for Safe AI (LISA) aims to become a premier AI safety research hub by cultivating a supportive environment for individual researchers and small organizations to advance impactful work and novel agendas, while strengthening the broader London ecosystem.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

This is a very interesting and timely proposal. Well done!

I am particularly interested in the idea of the meeting spaces. Given the nature of some policy conversations, it can often be difficult to schedule on-site meetings at certain locations, particularly if those locations have licensing requirements for visitors. It's also handy for centralising socio-legal research: I've often had to travel to London and then visit 4 or 5 locations in a day or so, which is a lot of wasted travel when one could just schedule all the meetings at a purpose-made location. Booking venues can be a roll of the dice, as a bad venue can negatively impact a stakeholder meeting months in the making.

The spaces I mostly use for the more minor policy meetings in London are difficult to book and lack easy access, which is something LISA seems to solve. If the registration postcode for LISA is the same as its physical location, then the location is also quite good for the policy end of things.

That is, if it is possible for researchers to have visitors on site. I'm not sure what the rules would be for that, but for those in frontline AI policymaking it would be a huge advantage, and would help attract that kind of specialism.

The talks in the events section also seem really good, with nice variation.

One piece of mixed feedback: it's good that the focus isn't purely technical and that there's a 70/30 split between technical and non-technical, but if you actually want to achieve the policy-maker reach you mention in the post, it may make sense to expand that 30% a bit, given that socio-legal and political researchers are a vital piece of actually pitching technical findings in a realistic way. The 70/30 split is still good and I can see the reason for it, but hopefully it'll be a flexible goal rather than a rigid limit. It also seems like there's an effort to network people across specialisms via the co-working space, which is a really great touch and something that most other spaces struggle to do effectively.

All in all looks great, and quite excited to see what comes out of it!

Thanks for the kind words.

We agree that LISA can be a focal point for meetings between all types of AI safety experts (technical, socio-legal, and political) you mention. We believe that enormous value comes from facilitating exchanges of ideas between those with different expertise in a common venue dedicated to AI safety.

The location of LISA's office is likely to change to Old Street/Shoreditch in the coming months. This location is good for policy experts and technical researchers (near the King's Cross tech offices). It is also close to important transport links (King's Cross & St Pancras, for travel to Cambridge and the continent; Paddington, for travel to Oxford; and Liverpool St, for many other locations).

Resident researchers can host visitors.

We also agree with your assertion that “socio-legal and political researchers are a vital piece of actually pitching technical findings”. There is certainly no rigid split between technical and non-technical. Each member application will be evaluated according to the criteria outlined in our membership overview document. Also, note that we expect many member applications to come from (i) visiting researchers, often based in the US (or Europe), who want to work from an AI safety hub in London only for a short period, and (ii) experts based in the UK (even London) who might only want to use the LISA office space once or twice a week.