Political scientist Baobao Zhang explains how social science research can help people in government, tech companies, and advocacy organizations make decisions regarding artificial intelligence (AI) governance. After explaining her work on public attitudes toward AI and automation, she explores other important topics of research. She also reflects on how researchers could make broad impacts outside of academia.

We’ve lightly edited Baobao’s talk for clarity. You can also watch it on YouTube and read it on effectivealtruism.org.

The Talk

Melinda Wang (Moderator): Hello, and welcome to this session on how social science research can inform AI governance, with Baobao Zhang. My name is Melinda Wang. I'll be your emcee. Thanks for tuning in. We'll first start with a 10-minute pre-recorded talk by Baobao, which will be followed by a live Q&A session.

Now I'd like to introduce you to the speaker for this session, Baobao Zhang. Baobao is a fellow at the Berkman Klein Center for Internet and Society at Harvard University and a research affiliate with the Centre for the Governance of AI at the University of Oxford. Her current research focuses on the governance of artificial intelligence. In particular, she studies public and elite opinions toward AI, and how the American welfare state could adapt to the increasing automation of labor. Without further ado, here's Baobao.

Baobao: Hello, welcome to my virtual presentation. I hope you are safe and well during this difficult time. My name is Baobao Zhang. I'm a political scientist focusing on technology policy. I'm a research affiliate with the Centre for the Governance of AI at the Future of Humanity Institute in Oxford. I'm also a fellow with the Berkman Klein Center for Internet and Society at Harvard University.

Today I will talk about how social science research can inform AI governance.

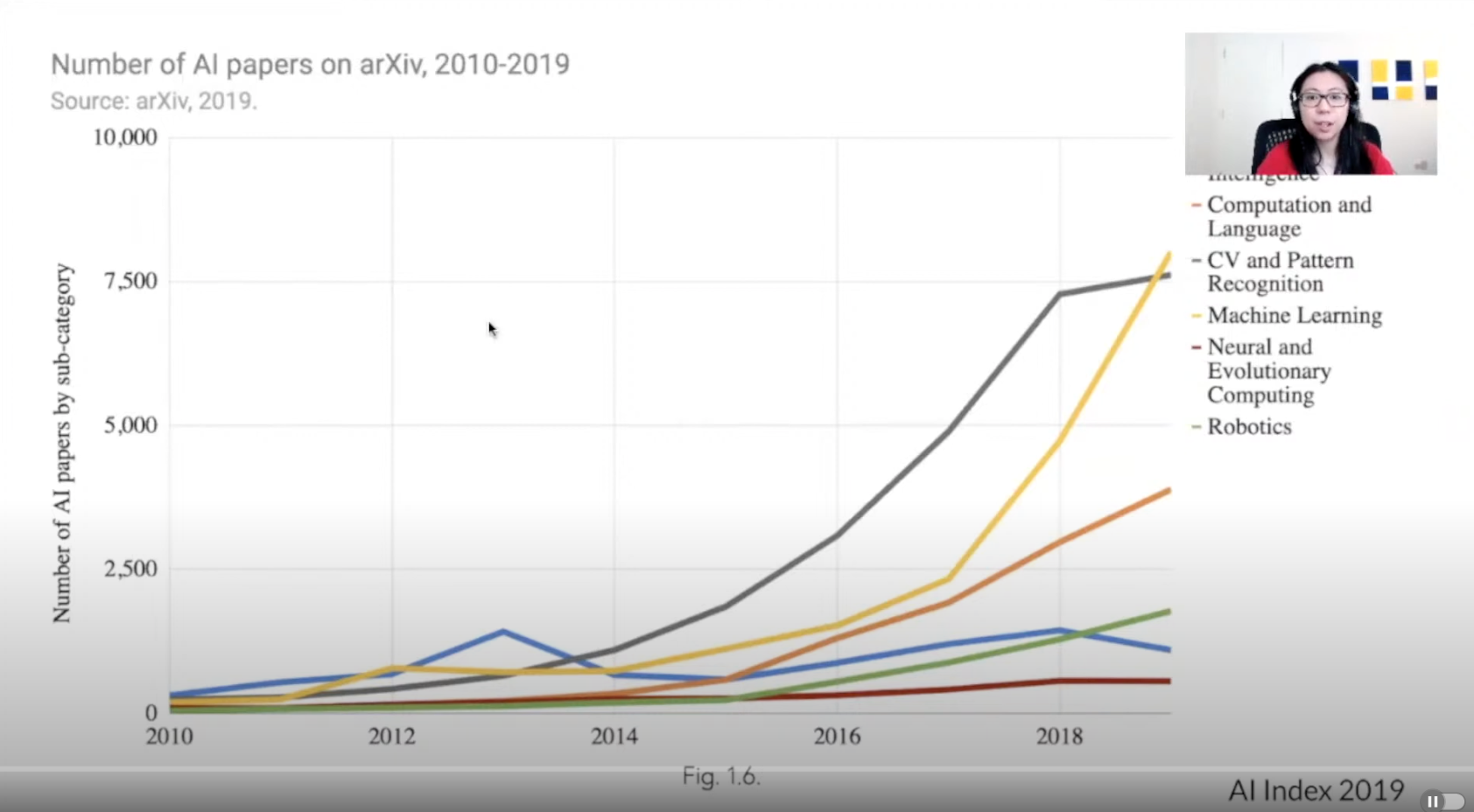

AI research, particularly in machine learning (ML), has advanced rapidly in recent years. Machines can outperform the best human players in strategy games like Go and poker. They can even generate synthetic videos and news articles that easily fool humans.

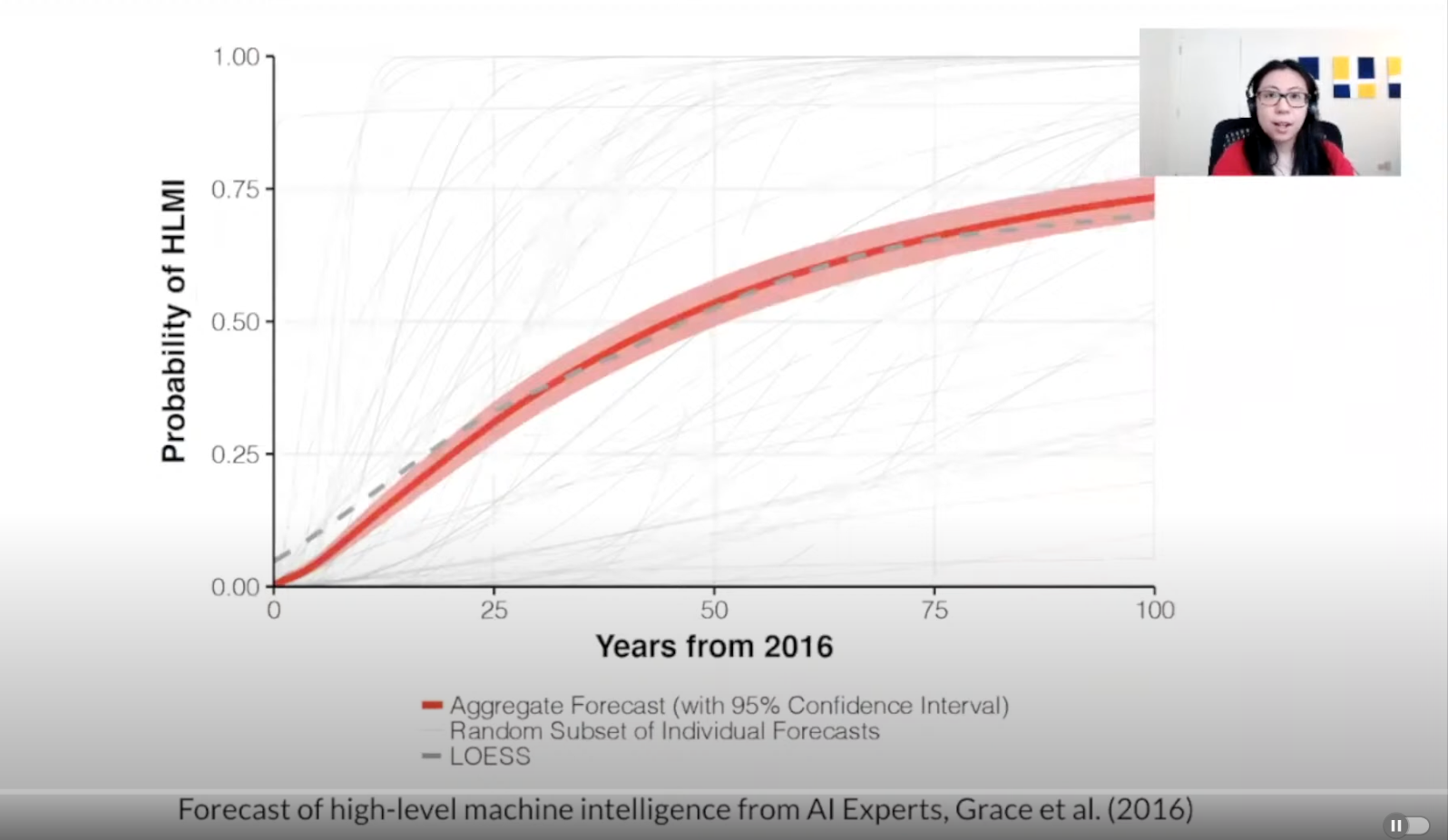

Looking ahead, ML researchers estimate, in aggregate, a 50% chance that AI will outperform humans in all tasks by 2061. This [estimate] is based on a survey that my team and I conducted in 2016.

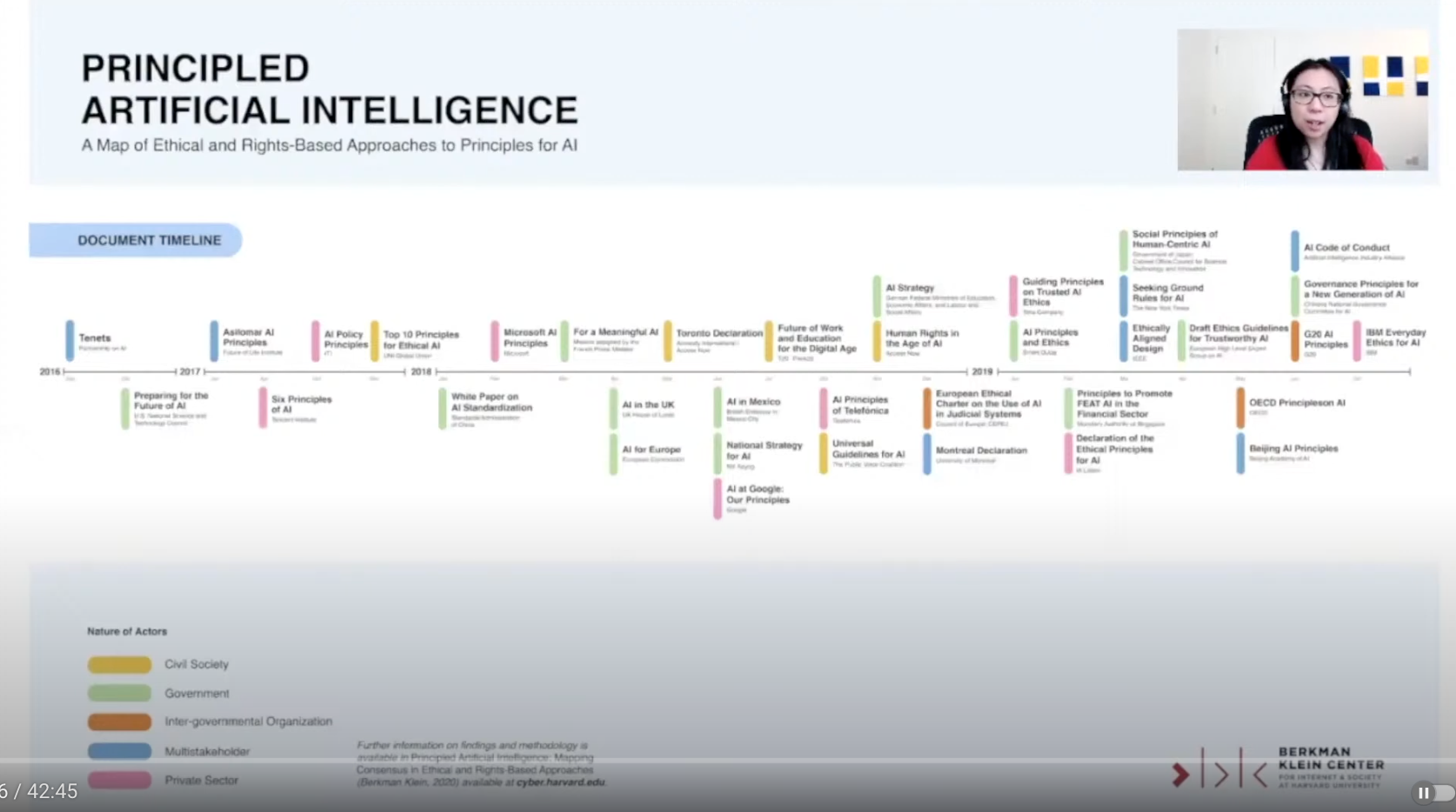

The EA community has recognized the potential risks, even existential risks, that unaligned AI systems pose to humans. Tech companies, governments, and civil society have started to take notice as well. Many organizations have published AI ethics principles to guide the development and deployment of the technology.

A report by the Berkman Klein Center counted 36 prominent sets of AI principles.

Now we're entering a phase where tech companies and governments are starting to translate these principles into policy and practice.

At the Centre for the Governance of AI (GovAI), we think that social science research — whether it’s in political science, international relations, law, economics, or psychology — can inform decision-making around AI governance.

For more information about our research agenda, please see “AI Governance: A Research Agenda” by Allan Dafoe. It's also a good starting place if you're curious about the topic and are new to it.

Here's a roadmap for my talk. I’ll cover:

- My research on public opinion toward AI

- EA social science research highlights on AI governance

- Research questions that I've been thinking about a lot lately

- How one can be impactful as a social scientist in this space

Why study the public’s opinion of AI?

From a normative perspective, we need to consider the voices of those who will be impacted by AI. In addition, public opinion has shaped policy in many other domains, including climate change and immigration; therefore, studying public opinion could help us anticipate how electoral politics may impact AI governance.

The research I'm about to present comes from this report.

It's based on a nationally representative survey of 2,000 Americans that Allan Dafoe and I conducted in the summer of 2018.

Here are the main takeaways from the survey:

1. An overwhelming majority of Americans think that AI should be carefully managed.

2. They consider all 13 governance challenges that we presented to them to be important.

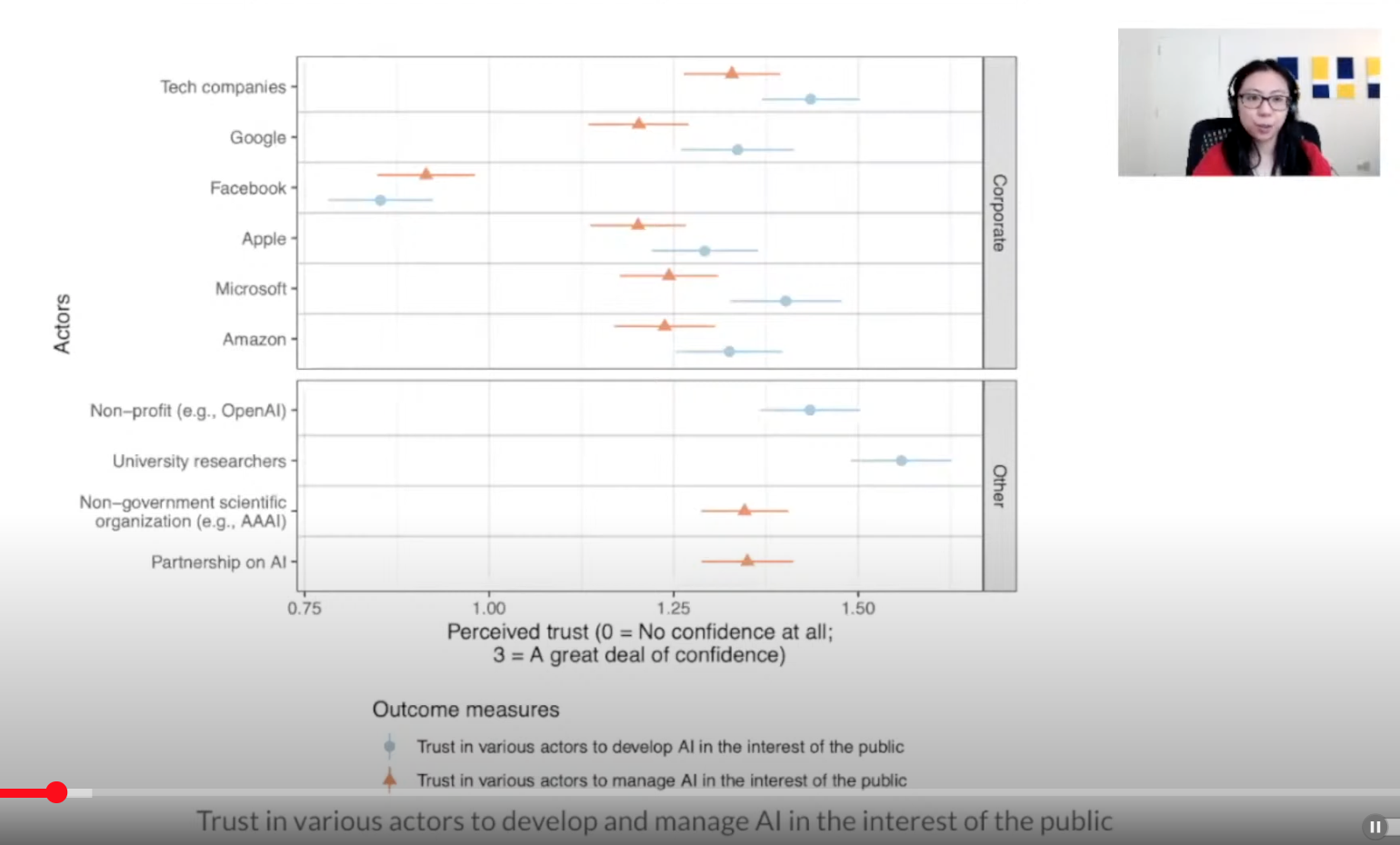

3. However, they have only low-to-moderate levels of trust in the actors who are developing and managing AI.

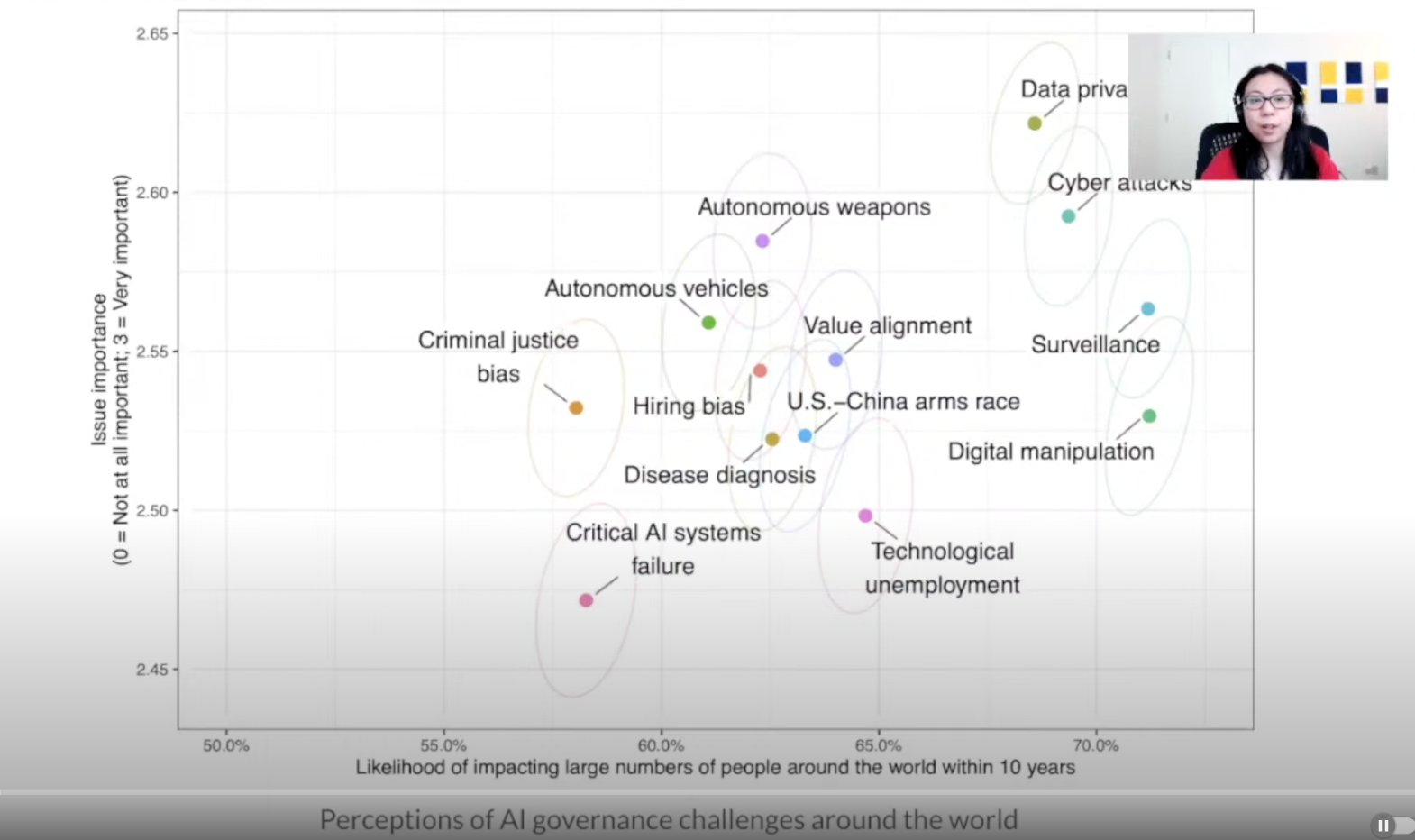

Now, on to some results. Here's a graph of Americans' views of AI governance challenges. Each respondent was asked to consider five challenges randomly selected from the 13. The x-axis shows the respondents’ perceived likelihood that the governance challenge would impact large numbers of people around the world. The y-axis shows the perceived importance of the issue.

Those perceived to be high in both dimensions include protecting data privacy, preventing AI-enhanced cyber attacks, preventing mass surveillance, and preventing digital manipulation — all of which are highly salient topics in the news.
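The random assignment described above, in which each respondent rated five of the 13 challenges, can be sketched in a few lines of Python. This is an illustrative sketch only, not the survey's actual code: it assumes each subset is drawn uniformly at random without replacement, and apart from the four challenges named in the talk, the challenge labels are hypothetical placeholders.

```python
import random
from collections import Counter

# Only the first four labels come from the talk; challenge_5 .. challenge_13
# are hypothetical placeholders, not the survey's actual wording.
CHALLENGES = [
    "protecting data privacy",
    "preventing AI-enhanced cyber attacks",
    "preventing mass surveillance",
    "preventing digital manipulation",
] + [f"challenge_{i}" for i in range(5, 14)]


def assign_challenges(n_respondents, k=5, seed=0):
    """Give each respondent k distinct challenges drawn uniformly at random."""
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    return [rng.sample(CHALLENGES, k) for _ in range(n_respondents)]


assignments = assign_challenges(2000)

# Count how many respondents rated each challenge.
counts = Counter(c for subset in assignments for c in subset)
for challenge, n in sorted(counts.items(), key=lambda kv: -kv[1]):
    print(f"{n:4d}  {challenge}")
```

Under these assumptions, each challenge is rated by roughly 2000 × 5 / 13 ≈ 769 respondents on average, so no single respondent has to evaluate all 13.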

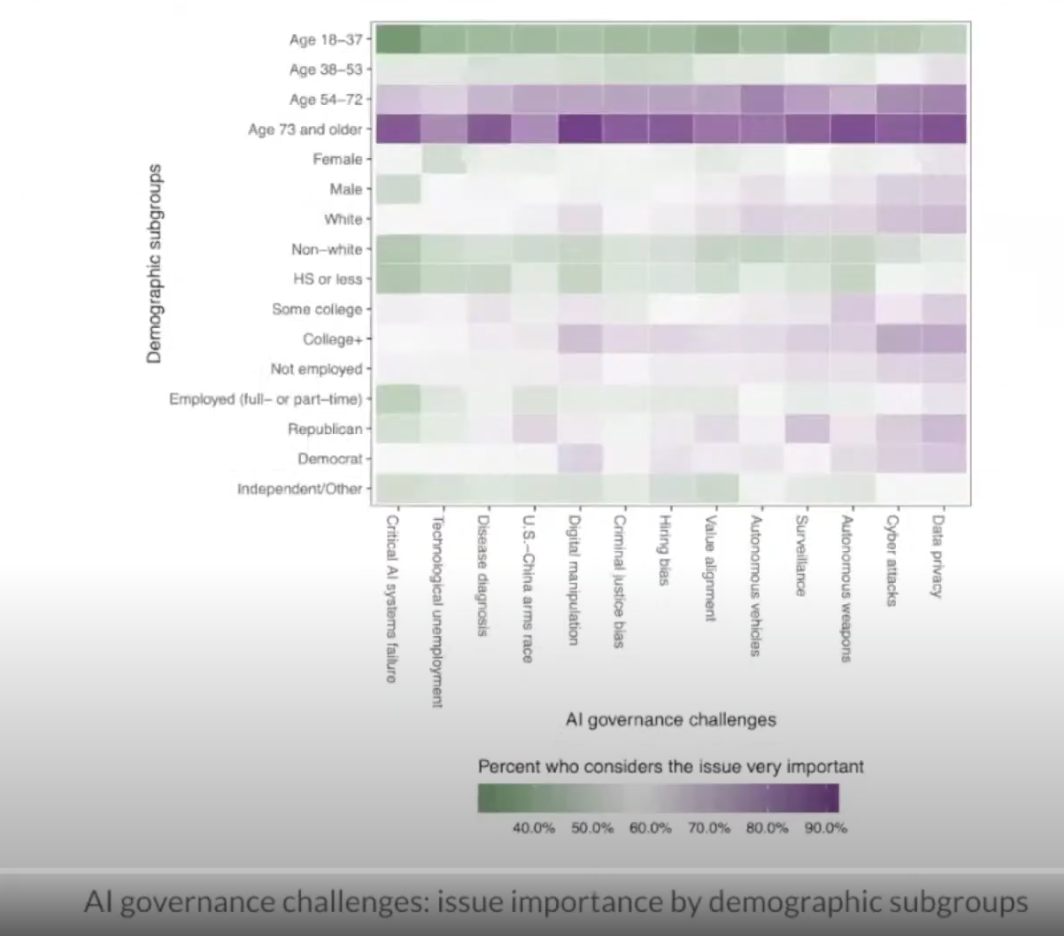

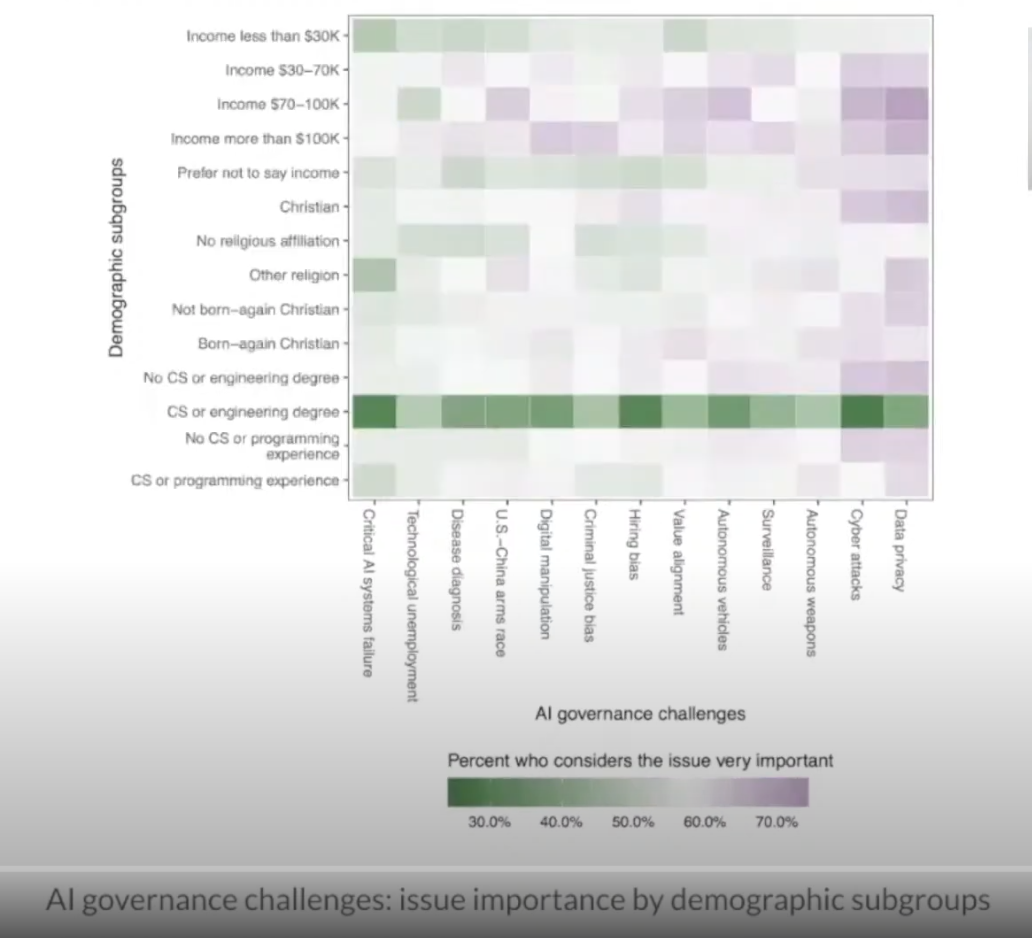

I’ll point out that the respondents consider all of these AI governance challenges to be important for tech companies and governments to manage. But we do see some variations between respondents when we break them down by subgroups.

Here we've broken it down by age, gender, race, level of education, partisanship, etc. We're looking at the issue’s perceived importance in these graphs. Purple means greater perceived issue importance. Green means lesser perceived issue importance.

I'll highlight some differences that really popped out. In this slide, you see that older Americans, in contrast to younger Americans, perceive the governance challenges presented to them to be more important.

Interestingly, those who have CS [computer science] or engineering degrees, in contrast to those who don't, perceive all of the governance challenges to be less important. We also observed this techno-optimism among those with CS or engineering degrees in other survey questions.

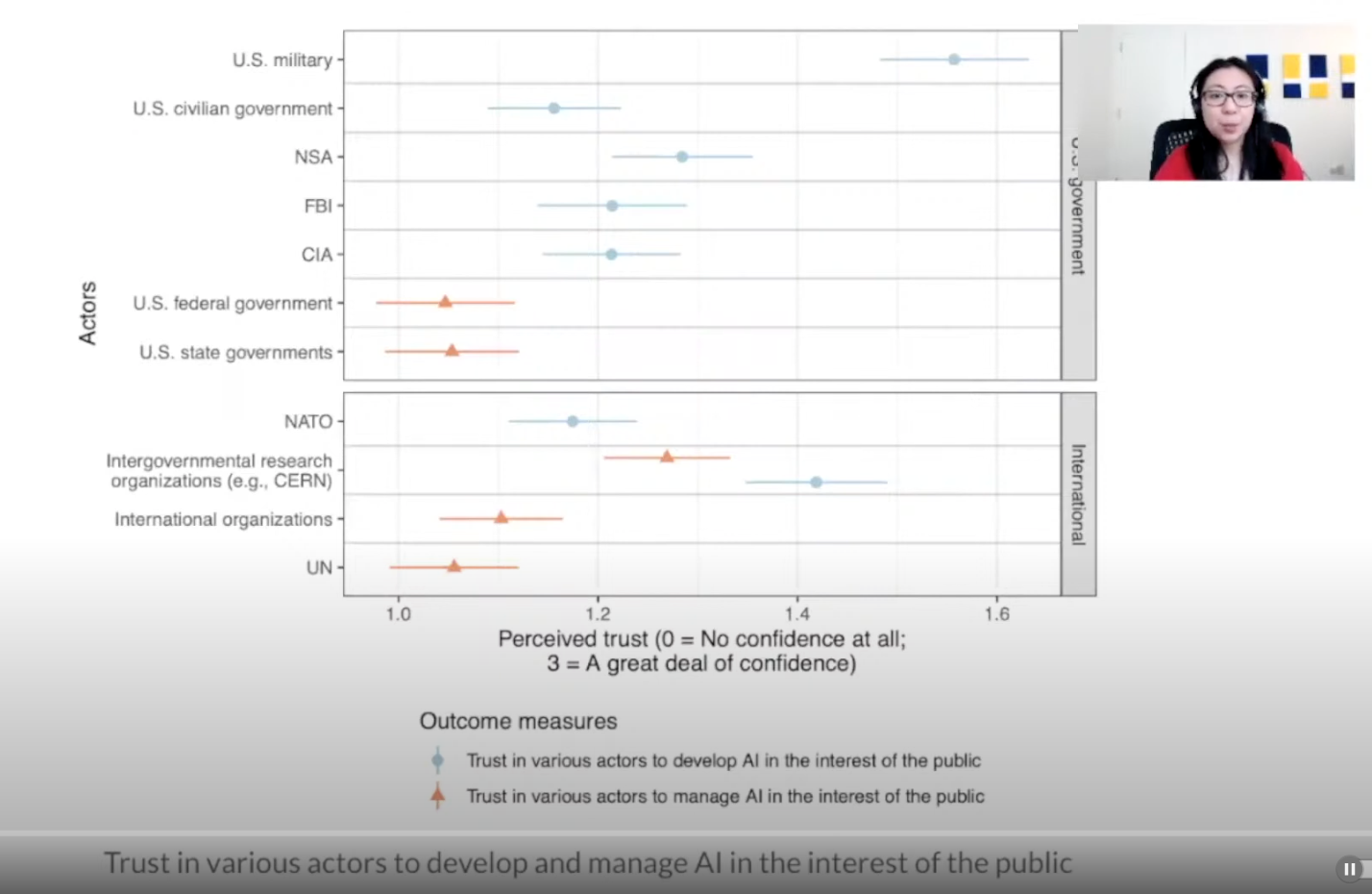

Despite Americans perceiving that these AI governance challenges are important, they have low to moderate levels of trust in the actors who are in a position to shape the development and deployment of AI systems.

There are a few interesting observations to point out in these slides:

- While trust in institutions has declined across the board, the American public still seems to have relatively high levels of trust in the military. This is in contrast to the ML community, whose members would rather not work with the US military. I think we get this seemingly strange result because the public relies on heuristics when answering this question.

- The American public seems to have great distrust of Facebook. Part of it could be the fallout from the Cambridge Analytica scandal. But when we ran a previous survey before the scandal broke, we observed similarly low levels of trust in Facebook.

I'm sharing just some of the results from our report. I encourage you to read it. We're currently working on a new iteration of the survey. And we're hoping to launch it concurrently in the US, EU, and China, [which will allow us] to make some interesting cross-country comparisons.

AI governance research by others in the effective altruism community

I would like to highlight two works by my colleagues in the EA community who are also working in AI governance.

First, there’s “Toward Trustworthy AI Development.” This paper came out recently. It's a massive collaboration among researchers in different sectors and fields, [with the goal of determining] how to verify claims made by AI developers who say that their algorithms are safe, robust, and fair. This is not merely a technical question. Suggestions in the report include creating new institutional mechanisms like bounties for detecting bias and safety issues in AI systems, and creating AI incident reports.

Second, there’s “The Windfall Clause” by my colleagues at GovAI. Here, the team considers this idea of a “windfall clause” as a way to redistribute the benefits from transformative AI. This is an ex-ante agreement, where tech companies — in the event that they make large profits from their AI systems — would donate a massive portion of those profits. The report combines a lot of research from economic history and legal analysis to come up with an inventive policy proposal.

New research questions

There are a lot of new and interesting research questions that keep me up at night. I’ll share a few of them with you, and let's definitely have a discussion about them during the Q&A.

- How do we build incentives for developing safe, robust, and fair AI systems — and avoid a race to the bottom? I think a lot of us are rather concerned about the rhetoric of an AI arms race. But it's also true that even the EU is pushing for competitiveness in AI research and development. I think the “Toward Trustworthy AI Development” paper gives some good recommendations on the R&D [research and development] front. But what will the market and policy incentives be for businesses and the public sector to choose safer AI products? That's still a question that I and many of my colleagues are interested in.

- How can we transition to an economic system where AI can perform many of the tasks currently done by humans? I've been studying perceptions of automation. Unfortunately, a lot of workers underestimate the likelihood that their jobs will be automated. They actually have an optimism bias. Even correcting workers’ [false] beliefs about the future of work in my studies has failed to make them more supportive of redistribution. And it doesn't seem to decrease their hostility toward globalization. So certainly there's a lot more work to be done on the political economy around the future of work.

- How do other geopolitical risks make AI governance more difficult? I think about these a lot. In Toby Ord's book, The Precipice, he talks about the risk factors that could increase the probability of existential risk. And one of these risk factors is great-power war. We're not at [that point], but there has certainly been a rise in aggressive nationalism from some of these great powers. Instead of coming together to combat the COVID pandemic, many countries are pointing fingers at each other. And I think these trends don't bode well for international governance. Therefore, thinking about how these trends might shape international cooperation around AI governance is definitely a topic that my colleagues and I are working on.

How to be impactful as a social scientist

I’ll conclude this presentation by talking about how one can be impactful as a social scientist. I have the great luxury of working in academia, where I have plenty of time to think and carry out long-term research projects. At the same time, I have to constantly remind myself to engage with the world outside of academia (the tech industry and the policy world) by writing op-eds, doing consulting, and communicating with the media.

Fortunately, social scientists with expertise in AI and AI policy are also in demand in other settings. Increasingly, tech companies have sought to hire people to conduct research on how individual humans interact with AI systems, or what the impact of AI systems may be on society. Geoffrey Irving and Amanda Askell have published a paper called “AI Safety Needs Social Scientists.” I encourage you to read it if you're interested in this topic.

To give a more concrete example, some of my colleagues have worked with OpenAI to test whether their GPT-2 language model can generate news articles that fool human readers.

Governments are also looking for social scientists with expertise in AI. Policymakers in both the civilian government and in the military have an AI literacy gap. They don't really have a clear understanding of the limits and the potentials of the technology. But advising policymakers does not necessarily mean that you have to work in government. Many of my colleagues have joined think tanks in Washington, DC, where they apply their research skills to generate policy reports, briefs, and expert testimony. I recommend checking out the Center for Security and Emerging Technology, or CSET, based at Georgetown University. They were founded about a year ago, but they have already put out a vast collection of research on AI and US international relations.

Thank you for listening to my presentation. I look forward to your questions during the Q&A session.

Melinda: Thank you for that talk, Baobao. The audience has already submitted a number of questions. We're going to get started with the first one: [Generally speaking], what concrete advice would you give to a fresh college graduate with a degree in a social science discipline?

Baobao: That's a very good question. Thank you for coming to my talk.

One piece of general advice that may be unexpected is to develop strong writing skills. At the end of the day, you need to translate all of the research that you do for different audiences: readers of academic journals, policymakers, tech companies [that want you to produce] policy reports.

Besides that, I think [you may need to learn certain skills depending on] the particular area in which you specialize. For me, learning data science and statistics is really important for the type of research that I do. For other folks it might be game theory, or for folks doing qualitative research, it might be how to do elite interviews and ethnographies.

But overall, I think having strong writing skills is quite critical.

Melinda: Great. [Audience member] Ryan asks, “What are the current talent gaps in AI safety right now?”

Baobao: That's a good question. I must confess: I'm not an AI safety expert, although I did talk about a piece that folks at OpenAI wrote called “AI Safety Needs Social Scientists.” And I definitely agree with the sentiment, given that the people who are working on AI safety want to run experiments. You can think of them as psychology experiments. And a lot of computer scientists are not necessarily trained to do that. So if you have skills in running surveys or psych experiments, that’s a skill set that I hope tech companies will acknowledge and recognize as important.

Melinda: That's really interesting. Would you consider psychology to be within the realm of social sciences, [in terms of how] people generally perceive it? Or do you mean fields related to STEM [science, technology, engineering, math]?

Baobao: I think psychology is quite interesting. People who are more on the neuroscience side might be [considered to be in] STEM. I work with some experimental psychologists, particularly social psychologists, and they read a lot of the literature in economics, political science, and communications studies. I do think that there's a bit of overlap.

Melinda: How important do you consider interdisciplinary studies to be, whether that's constrained within the realm of social science, or social science within STEM, etc.?

Baobao: I think it's important to work with both other social scientists and with computer scientists.

This is more [along the lines of] career advice and not related to AI governance, but one of the realizations I had in doing recent work on several COVID projects is that it’s important to have more than just an “armchair” [level of understanding] of public health. I try to get my team to talk with those who are either in vaccine development, epidemiology, or public health. And I'd like to see more of that type of collaboration in the AI governance space. We do that quite well, I think, at GovAI, where we can talk to in-house computer scientists at the Future of Humanity Institute.

Melinda: Yes. That's an interesting point and relates to one of the questions that just came in: How can we more effectively promote international cooperation? Do you have any concrete strategies that you can recommend?

Baobao: Yes. That's a really good question. I work a bit with folks in the European Union, and bring my AI governance expertise to the table. The team that I'm working with just submitted a response to a European Commission consultation. That type of work is definitely necessary.

I also think that collaboration with folks who work on AI policy in China is fruitful. I worry about the decoupling between the US and China. There's a bit of tension. But if you're in Europe and you want to collaborate with Chinese researchers, I encourage it. I think this is an area that more folks should look into.

Melinda: Yes, that's a really good point.

In relation to the last EAGxVirtual talk on biosecurity, can you think of any information hazards within the realm of AI governance?

Baobao: That's a good question. We do think a lot about all of our publications. At GovAI, we talk about being careful in our writing so that we don't necessarily escalate tensions between countries. I think that's definitely something that we think about.

At the same time, there is the open science movement in the social sciences. It’s tricky to [find a good] balance. But we certainly want to make sure our work is accurate and speaks to our overall mission at GovAI of promoting beneficial AI and not doing harm.

Melinda: Yes. A more specific question someone has asked is “Do you think this concept of info hazards — if it's a big problem in AI governance — would prohibit one from spreading ideas within the [discipline]?”

Baobao: That's a good question. I think the EA community is quite careful about not spreading info hazards. We're quite deliberate in our communication. But I do worry about a lot of the rhetoric that other folks who are in this AI governance space use. There are people who want to drum up a potential AI arms race — people who say, “Competition is the only thing that matters.” And I think that's the type of dangerous rhetoric that we want to avoid. We don't want a race to the bottom where, whether it's the US, China, or the EU, researchers only care about [winning, and fail to consider] the potential risks of deploying AI systems that are not safe.

Melinda: Yes. Great. So we're going to shift gears a little bit into more of the nitty-gritty of your talk. One question that an audience member asked is “How do you expect public attitudes toward AI to differ by nationality?”

Baobao: That's a good question. In the talk, I mentioned that at GovAI, we're hoping to do a big survey in the future that we run concurrently in different countries.

Judging from what I've seen of the literature, Eurobarometer has done a lot of good surveys in the EU. As you would expect, folks living in Europe, where there are tougher privacy laws, tend to be more concerned about privacy. But that doesn't necessarily mean that Chinese respondents are totally okay with a lack of privacy.

That’s why we're hoping to do a survey in which we ask [people in different countries] the same questions, around the same time. I think it’s really important to make these cross-national comparisons. With many questions, you get different responses because of how the questions are framed or worded, so [ensuring a] rigorous approach will yield a better answer to this question.

Melinda: Yes. I'd like you to unpack that a bit more. In what concrete ways can we be more culturally sensitive in stratifying these risks when it comes to international collaboration?

Baobao: I think speaking the [appropriate] language is really important. I can provide a concrete example. As my team worked on the EU consultation that I mentioned, we talked to folks who work at the European Commission in order to understand their particular concerns and “speak their language.”

They're concerned about AI competition and the potential for an arms race, but they don't want to use that language. They also care a lot about human rights and privacy. And so when we make recommendations, since we’ve read the [relevant] reports and spoken to the people involved in the decision-making, those are the two things that we try to balance.

And in terms of the survey research that we're hoping to do, we're consulting with folks on the ground so that our translation work is localized and [reflects] cultural nuances.

Melinda: Yes, that's a really good point about using the right language. It also [reminds] me of how people in the social sciences often think differently, and may use different [terminology] than people from the STEM realm, for example. The clash of those two cultures can sometimes result in conflicts. Do you have any advice on how to mitigate those kinds of conflicts?

Baobao: That's a good question. Recently, GovAI published a guide to [writing the] impact statements required for the NeurIPS conference. One of the suggestions was that computer scientists trying to [identify] the societal impact of their research talk with social scientists who can help with this translational work. Again, I think it’s quite important to take an interdisciplinary approach when you conduct research.

Melinda: Yes. Do you think there's a general literacy gap between these different domains? And if so, how should these gaps be filled?

Baobao: Yes, good question. My colleagues, Mike Horowitz and [Lauren] Kahn at the University of Pennsylvania, have written a piece about the AI literacy gap in government. They acknowledge that it's a real problem. Offering crash courses to train policymakers or social scientists who are interested in advising policymakers is one way to [address this issue]. But I do think that if you want to work in this space, doing a deep dive — not just taking a crash course — can be really valuable. Then, you can be the person offering the instruction. You can be the one writing the guides.

So yes, I do think there's a need to increase the average level of AI literacy, but it’s also important for the social science master's degree and PhD programs to train people.

Melinda: Yes, that's interesting. It brings up a question from [audience member] Chase: “Has there been more research done on the source of technical optimism from computer scientists and engineers? [Is this related to] overconfidence in their own education or in fellow developers?”

Baobao: That's a good question. We [work with] machine learning researchers at GovAI, and [they’ve done] research that we hope to share later this year. I can't directly address that research, but there may be a U-shaped curve. If the x-axis represents your level of expertise in AI, and the y-axis represents your level of concern about AI safety or risks from AI, those who don't have a lot of expertise are somewhat concerned. But those who have CS or engineering degrees are perhaps not very worried.

But then, if you talk to the machine learning researchers themselves, many are concerned. I think they're recognizing that what they work on can have huge societal impacts. You have folks who work in AI safety who are very concerned about this. But in general, I think that the machine learning field is waking up to these potential risks, given the proliferation of AI ethics principles coming from a lot of different organizations.

Melinda: Yes, it’s interesting how the public is, for once, aligned with the experts in ML. I suppose in this instance, the public is pretty enlightened.

I'm not sure if this question was asked already, but are organizations working on AI governance more funding-constrained or talent-constrained?

Baobao: That's a good question. May I [mention] that at GovAI, we're looking to hire folks in the upcoming months? We're looking for someone to help us on survey projects. And we're also looking for a project manager and other researchers. So, in some sense, we have the funding — and that's great. And now we just need folks who can do the research. So that's my plug.

I can't speak for all organizations, but I do think that there is a gap in terms of training people to do this type of work. I'm going to be a faculty member at a public policy school, and they're just beginning to offer AI governance as a course. Certainly, a lot of self-study is helpful. But hopefully, we'll be able to get these courses into the classrooms at law schools, public policy schools, and different PhD or master's degree programs.

Melinda: Yes. I think perhaps the motivation behind that question was more about the current general trend around a lot of organizations in EA being extremely competitive to get into — especially EA-specific job posts. I'm wondering whether you can provide a realistic figure for how talent-constrained AI governance is, as opposed to being funding-constrained.

Baobao: I can't speak to the funding side. But in terms of the research and the human-resource side, I think we're beginning to finally [arrive at] some concrete research questions. At the same time, we're building out new questions. And it's hard to predict what type of skill set you will need.

As I mentioned in my talk, GovAI has realized that social science skills are really important — whether you can do survey research, legal analysis, elite interviews on the sociology side, or translational work. So, although I don't have a good answer to that question, I think getting some sort of expertise in one of the social sciences and having expertise in AI policy or AI safety are the types of skills that we're looking for.

Melinda: Okay. Given that we have six minutes left, I'm going to shift gears a little bit and try to get through as many questions as possible. Here’s one: “What insights from nuclear weapons governance can inform AI governance?”

Baobao: I think that's a really good question. And I think you've caught me here. I have some insights into the dual-use nature of nuclear technology. People talk about AI as a “general-purpose technology.” It could be both beneficial and harmful. And speaking from my own expertise in public opinion research, one of the interesting findings in this space is that people tend to reject nuclear energy because of its association with nuclear weapons. There's a wasted opportunity, because nuclear energy is quite cheap and not as harmful as, say, burning fossil fuels. But because of this negative association, we’ve largely rejected nuclear energy.

[Turning to] trust in AI systems, we don't want a situation where people's association of AI systems is so negative that they reject applications that could be beneficial to society. Does that make sense?

Melinda: Yes.

Baobao: I recommend [following the work of] some of my colleagues in international relations, at organizations like CSET who have written about this. I'm sorry — it's not my area of expertise.

Melinda: Okay. The next question is “Is there a way we can compare public trust in different institutions, specifically regarding AI, compared to a general baseline trust in that institution — for example, in the case of the public generally having greater trust in the US military?”

Baobao: That's a good question. I think that, because AI is so new, the public relies on their heuristics rather than what they know. They're just going to rely on what they think of as a trustworthy institution. And one thing that I've noticed, and that I wrote about in my Brookings report, is how some areas of political polarization around AI governance map to what you would expect to find in other domains. It’s concerning, in the context of the US at least, that if we can't agree on the right policy solution, we might just [rely on] partisan rhetoric about AI governance.

For example, acceptance of facial recognition software maps to race and partisanship. African Americans really distrust facial recognition. Democrats tend to distrust facial recognition. Republicans, on the other hand, tend to have a greater level of acceptance. So you see this attitude towards policing being mapped to facial recognition.

You also see it in terms of regulating algorithmically curated social media content. There seems to be a bipartisan backlash against tech, but when you dig more deeply into it, as we've seen recently, Republicans tend to think about content moderation as censorship and [taking away] a right, whereas Democrats tend to see it as combating misinformation. So unfortunately, I do think that partisanship will creep into the AI governance space. And that's something that I'm actively studying.

Melinda: Yes. We only have one minute left. I'm going to go through the last questions really quickly. With regard to the specific data that you presented, you mentioned that the public considers all AI governance challenges important. Did you consider including a made-up governance challenge to check for response bias?

Baobao: Oh, that's a really good suggestion. We haven't, but we certainly can do that in the next round.

Melinda: Okay, wonderful. And that concludes the Q&A part of this session. [...] Thanks for watching.