Summary

The Midas Project is a new AI safety organization. We use public advocacy to incentivize stronger self-governance from the companies developing and deploying high-risk AI products.

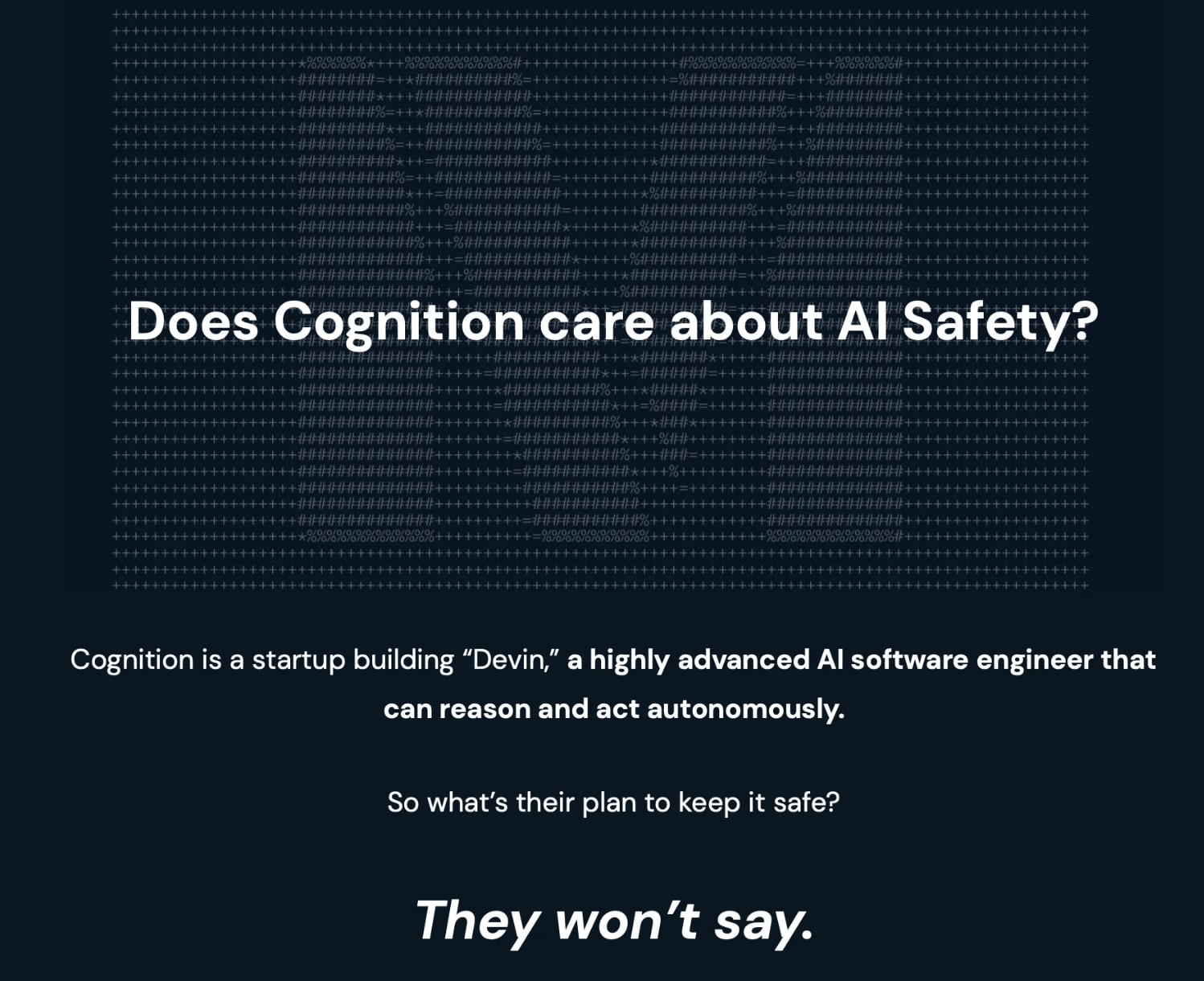

This week, we’re launching our first major campaign, targeting the AI company Cognition. Cognition is a rapidly growing startup [1] developing autonomous coding agents. Unfortunately, they’ve told the public virtually nothing about how, or even if, they will conduct risk evaluations to prevent misuse and other unintended outcomes. In fact, they’ve said virtually nothing about safety at all.

We’re calling on Cognition to release an industry-standard evaluation-based safety policy. We need your help to make this campaign a success. Here are five ways you can help, sorted by level of effort:

- Stay in the loop on our campaigns by following us on Twitter and joining our mailing list.

- Offer feedback and suggestions by commenting on this post or by reaching out at info@themidasproject.com.

- Share our Cognition campaign on social media, sign the petition, or engage with our campaigns directly on our action hub.

- Donate to support our future campaigns (tax-exempt status pending).

- Sign up to volunteer, or express interest in joining our team full-time.

Background

The risks posed by AI are, at least partially, the result of a market failure.

Tech companies are locked in an arms race that is forcing everyone (even the most safety-concerned) to move fast and cut corners. Meanwhile, consumers broadly agree that AI risks are serious and that the industry should move slower. However, this belief is disconnected from their everyday experience with AI products, and there isn’t a clear Schelling point allowing consumers to express their preference via the market.

Usually, the answer to a market failure like this is regulation. When it comes to AI safety, this is certainly the solution I find most promising. But such regulation isn’t happening quickly enough. And even if governments were moving quicker, AI safety as a field is pre-paradigmatic. Nobody knows exactly what guardrails will be most useful, and new innovations are needed.

So companies are largely being left to voluntarily implement safety measures. In an ideal world, AI companies would be in a race to the top, competing against each other to earn the trust of the public through comprehensive voluntary safety measures while minimally stifling innovation and the benefits of near-term applications. But the incentives aren’t clearly pointing in that direction—at least not yet.

However, EA-supported organizations have successfully shifted corporate incentives in the past. Take the case of cage-free campaigns.

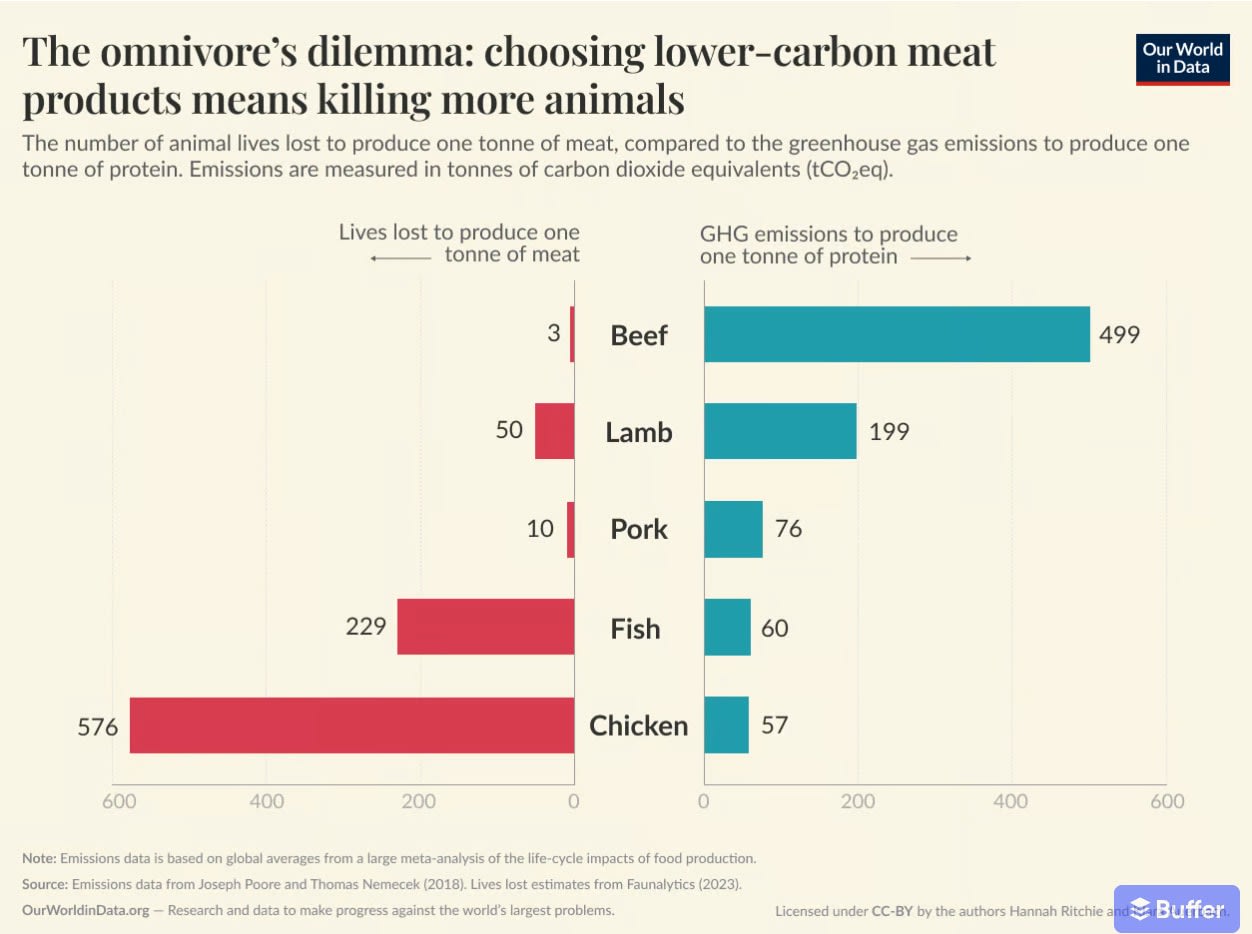

By engaging in advocacy that threatens to expose specific food companies for falling short of customers’ basic expectations regarding animal welfare, groups like The Humane League and Mercy For Animals have been able to create a race to the top for chicken welfare, leading virtually all US food companies to commit to going cage-free. [2] Creating this change was as simple as making the connection in the consumer’s mind between their pre-existing disapproval of inhumane battery cages and the eggs being served at their local fast food chain.

I believe this sort of public advocacy can be extremely effective. In fact, in the case of previous emerging technologies, I would go so far as to say it’s been too effective. Public advocacy played a major role in preventing the widespread adoption of GM crops and nuclear power in the twentieth century, despite huge financial incentives to develop these technologies. [3]

We haven’t seen this sort of activism leveraged to demand meaningful self-regulation from AI companies yet. So far, activism has tended to either (1) write off the value of self-governance (e.g., disparaging evaluation-based scaling policies as mere industry safety washing) or (2) take an all-or-nothing, outside-game approach (e.g., demanding a global moratorium on AI development). I do think much of this work is valuable. [4] But I also think there is an opportunity to use activism to create targeted incremental change by shining a spotlight on the least responsible AI companies in today’s market and calling on them to catch up to the industry standard.

About The Midas Project

The Midas Project is a nonprofit organization that leverages corporate outreach and public education to encourage the responsible development and deployment of advanced artificial intelligence technology.

We’re planning a number of public awareness campaigns to call out companies falling behind on AI safety, and to encourage industry best practices including risk evaluation and pre-deployment safety reviews.

Learn more about us on our website, or follow us on Twitter.

Our Campaign Against Cognition

Cognition is an AI startup building and deploying Devin, an autonomous coding agent that can browse the web, write code, execute said code, and generally take action independently. For more details on what we know about Devin, Zvi Mowshowitz provides a good summary. [5]

It should go without saying that deploying autonomous coding agents carries unique risks. Some of these are speculative, and some are already possible today. As such, conducting pre-deployment risk evaluation seems like the bare minimum for a company creating such coding agents.

Is Cognition doing risk assessment? We don’t know. Unlike nearly all leading AI companies, they haven’t released (or even announced plans to release) a policy on risk evaluation and model scaling. In fact, they haven’t released any safety policies whatsoever, as far as we can tell. We even reached out to ask them about this, but they wouldn’t return any of our emails.

So, we’re calling on the public to ask Cognition directly: How will you ensure your product is safe?

How you can get involved

To support our campaign against Cognition, consider sharing the page on Twitter, signing our petition, or taking action on our action hub.

Anti-lab advocacy can be controversial and risky, making it difficult for some philanthropic institutions to fund due to reputational and strategic concerns. Therefore, grassroots individual support is particularly valuable for us. We accept donations via our website and greatly appreciate any support you can offer. Please note that we do not yet have tax-exempt status, though we expect that to change soon.

And while money is important, these campaigns are fundamentally people-powered. One of the most impactful ways you can help is to take some time to promote, share, or participate in our campaign. There's no minimum bar in terms of experience or time commitment. Even a few minutes a week can be impactful. If you're interested in taking action on our campaigns, join our action hub. If you're interested in volunteering on a more regular, committed basis, fill out our volunteer form!

We’re also hoping to grow the team in the near future. If this is the kind of project you’d be interested in working on full-time, please get in touch.

[1] Cognition was founded in late 2023 and has since raised hundreds of millions of dollars at a $2 billion valuation.

[2] To what degree these commitments will be fulfilled remains an open question, but this still appears to be one of the most cost-effective interventions in the animal rights movement's history.

[3] As mentioned, I think protests against nuclear energy and GMOs probably went much further than what was socially optimal. Is the AI safety community making that same mistake with AI? Well, I don't think so, mostly because I think this technology really is unprecedented and uniquely dangerous. We'll see if history vindicates this.

[4] I'm a proud volunteer and board member for PauseAI, for example.

[5] See also Zvi's update following news that Cognition's marketing, which had informed parts of his initial post, was misleading. Despite this blunder from Cognition, the smart consensus still seems to be that their product is very capable and high-risk.

Is there a reason it's impossible to find out who is involved with this project? Maybe it's on purpose, but through the website I couldn't find out who's on the team, who supports it, or what kind of organisation (nonprofit? For profit? Etc.) you are legally. If this was a deliberate and strategic choice against being transparent because of the nature of the work you expect to be doing, I'd love to hear why you made it!

[My 2 cents: As an org that is focused on advocacy and campaigns, it might be especially important to be transparent to build trust. It's projects like yours where I find myself MOST interested in who is behind it, to evaluate trustworthiness, conflicting incentives, etc. For all I know (from the website), you could be a competitor of the company you are targeting! I am not saying you need Fish-Welfare-Project-level transparency with open budgets etc., and maybe I am just an overly suspicious website visitor, but I felt it was worth flagging.]

Hey! Thanks for the comment; this makes sense. I'm the founder and executive director (that's why I made this post under my name!). The Midas Project is a nonprofit, which by law means that details about our funding will be made public in annual filings, that such reports will be available upon request, and that our work has to exclusively serve the public interest and not privately benefit anyone associated with the organization (which is generally determined by the IRS and/or independent audits). Hope this assuages some concerns.

It's true we don't have a "team" page or anything like that. FWIW, this is clearly the norm for campaigning/advocacy nonprofits (for example, take a look at the websites for the animal groups I mentioned, or Greenpeace/Sunrise Movement in the climate space) and that precedent is a big part of why I chose the relative level of privacy here — though I'm open to arguments that we should do it differently. I think the most important consideration is protecting the privacy of individual contributors since this work has the potential to make some powerful enemies... or just to draw the ire of e/accs on Twitter. Maybe both! I would be more open to adding an “our leadership” page, which is more common for such orgs - but we’re still building out a leadership team so it seems a bit premature. And, like with funding, leadership details will all be in public filings anyway.

Thanks again for the feedback! It's useful.

Those orgs you list are big legacy orgs. I would imagine (although I haven't checked) that most new orgs would have their team listed. If bad actors put in one minute of internet effort, they will find you anyway, so for credibility reasons, why not have a team page with your names and backgrounds?

Additionally, if you want to show that you can credibly engage policymakers (which I think you might need to do in order to put pressure on these companies), I would expect transparency of people and funding sources to help a lot.

How did you decide to target Cognition?

IMO it makes much more sense to target AI developers who are training foundation models with huge amounts of compute. My understanding is that Cognition isn't training foundation models, and is more of a "wrapper" in the sense that they are building on top of others' foundation models to apply scaffolding, and/or fine-tuning with <~1% of the foundation model training compute. Correct me if I'm wrong.

Gesturing at some of the reasons I think that wrappers should be deprioritized:

Maybe the answer is that Cognition was way better than foundation model developers on other dimensions, in which case, fair enough.

Good question! I basically agree with you about the relative importance of foundation model developers here (although I haven’t thought too much about the third point you mentioned. Thanks for bringing it up.)

I should say we are doing some other work to raise awareness about foundation model risks - especially at OpenAI, given recent events - but not at the level of this campaign.

The main constraint was starting (relatively) small. We'd really like to win these campaigns, and we don't plan to let up until we have. The foundation model developers are generally some of the biggest companies in the world (hence the huge compute, as you mention), and the resources needed to win a campaign likely scale in proportion to the size of the target. We decided it'd be good to keep building our supporter base and reputation before taking the bigger players on. Cognition in particular seems to be in the center of the triple Venn diagram between "making high-risk systems," "way behind the curve on safety issues," and "small enough that they can't afford to ignore this."

Btw, my background is in animal advocacy, and this is somewhat similar to how groups scaled there: they started by getting local restaurants to stop serving foie gras, and scaled up to getting McDonald's to phase out eggs from battery cages nationwide. Obviously we have less time with this issue, so I would like to scale quickly.

What are the key leverage points to get these companies to listen to campaigners such as yourself? How does this differ from the animal rights space, and how will this affect your strategy? What do you have in terms of strategy documents or theory of change?

Some thoughts on my mind are:

To the best of my understanding, the animal rights corporate campaigning space has been unable to exert much, if any, influence on B2B (business-to-business) companies. Animal campaigns only appear to have influenced B2C (business-to-consumer) companies. An autonomous coding agent feels more B2B, and by analogy, having any influence here could be extremely difficult. That said, I don't think this should be a huge problem, as...

The leverage points for influencing companies in the AI space are very different from those in the animal space. In particular, AI companies are probably much more concerned than food companies about losing employees to other companies. I expect they are also concerned about regulation that could restrict their actions. I expect they're much less concerned about public image. As such...

This does suggest a somewhat different approach to corporate campaigns: potentially targeting employees more (although probably not picking on individuals) and focusing more on presenting the targeted company negatively to regulators/policymakers or to investors than to the public.

These are just quick thoughts, and I might be wrong about much of this. I just wanted to flag it, as your post seemed to suggest that this work would be similar to work in the animal space. In many ways it is, but I think there's a risk of not seeing the differences. I wish you the best of luck with your campaigning.

Thanks for the comment! I agree with a lot of your thinking here and that there will be many asymmetries.

One random thing that might surprise you: in fact, the sector that animal groups have had the most success with is a B2B one: foodservice providers. For B2B companies, individual customers are fewer in number and much more important in magnitude — so the prospect of convincing, for example, an entire hospital or university to switch their multi-million dollar contract to a competitor with a higher standard for animal welfare is especially threatening. I think the same phenomenon might carry over to the tech industry. However, even in the foodservice provider case, public perception is still one of the main driving factors (i.e., universities and hospitals care about the animal welfare practices of their suppliers in part because they know their students/clients care).

Your advice about outreach to employees and other stakeholders is well-taken too :) Thanks!

Thank you for considering my comments.

To be clear I would consider the target of the campaign in those cases to be on the hospital or the university and those to be B2C organizations in some meaningful way.

Huh, interesting! I guess you could define it this way, but I worry that muddies the definition of "campaign target." In common usage, I think the definition is approximately: what is the institution you are raising awareness about and asking to adopt a specific change? A simple test to determine the campaign target might be "What institution is being named in the campaign materials?" or "What institution has the power to end the campaign by adopting the demands of the campaigners?"

In the case of animal welfare campaigns against foodservice providers, it seems like that's clearly the foodservice companies themselves. Then, in the process of that campaign, one thing you'll do is raise awareness about the issue among that company's customers (e.g. THL's "foodservice provider guide" which raised awareness among public institutions), which isn't all that different from raising awareness among the public in a campaign targeting a B2C company.

I suppose this is just a semantic disagreement, but in practice, it suggests to me that B2B businesses are still vulnerable, in part because they aren't insulated from public opinion—they're just one degree removed from it.

EDIT: Another, much stronger piece of evidence in favor of influence on B2B: Chicken Watch reports 586 commitments secured from food manufacturers and 60 from distributors. Some of those companies are functionally B2C (e.g. manufacturing consumer packaged goods sold under their own brand) but some are clearly B2B (e.g. Perdue Farms' BCC commitment).

No, this seems like more than just a semantic disagreement. It does seem like I've underestimated the ability to influence B2B companies. I stand corrected. Thank you.

Similar campaigns have worked really well for animal advocacy, so I’m excited to see what you can accomplish.

I’m wondering, what kinds of tasks can volunteers help with? If I have no social media accounts or experience trying to promote causes on social media is there anything I can do?

Thank you!

You’re right that the main tasks are digital advocacy - but even if you’re not on social media, there are some direct outreach tasks that involve emailing and calling specific stakeholders. We have one task like that live on our action hub now, and will be adding more soon.

Outside of that, we could use all sorts of general volunteer support - anything from campaign recruitment to writing content. Also always eager to hear advice on strategy. Would love to chat more if you’re interested.

Great name choice!

Interesting project.

Definitely seems like someone should be experimenting with this.