About the EA Archive

The EA Archive is a project to preserve resources related to effective altruism in case of a sub-existential catastrophe such as nuclear war.

More specifically, the motivating aim is to increase the likelihood that a movement akin to EA survives, reemerges, and/or flourishes without having to reinvent the wheel, so to speak. Such a movement might go by a different name and be essentially discontinuous with the current one, while sharing the broad goal of using evidence and reason to do good.

It is a work in progress, and some of the subfolders at the referenced Google Drive are already slightly out of date.

Theory of Change

The theory of change is simple, if not very cheerful to describe: if copies of this information exist in many places around the world, on devices owned by many different people, it is more likely that at least one copy will remain accessible after, say, a war that kills most of the world's population.
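To make the intuition concrete, here is a minimal sketch of the arithmetic. It assumes each copy survives independently with the same probability, which is a simplification: in a real catastrophe, copies in the same region would likely be lost together, which is why geographic spread matters. The function name and all the numbers are hypothetical, chosen only for illustration.

```python
def prob_at_least_one_survives(n_copies: int, p_survive: float) -> float:
    """Chance that at least one of n_copies remains accessible.

    Assumes (unrealistically) that each copy survives independently
    with the same probability p_survive.
    """
    if n_copies < 0:
        raise ValueError("n_copies must be non-negative")
    if not 0.0 <= p_survive <= 1.0:
        raise ValueError("p_survive must be between 0 and 1")
    # All copies are lost with probability (1 - p)^n; take the complement.
    return 1.0 - (1.0 - p_survive) ** n_copies


if __name__ == "__main__":
    # Hypothetical inputs: a 1% per-copy survival chance.
    for n in (10, 100, 500):
        print(n, round(prob_at_least_one_survives(n, 0.01), 3))
```

Under these made-up numbers, even a 1% per-copy survival chance gives roughly a 63% chance that at least one of 100 copies survives, so each additional download helps, and downloads in places unlikely to share the same fate help most.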

Structure

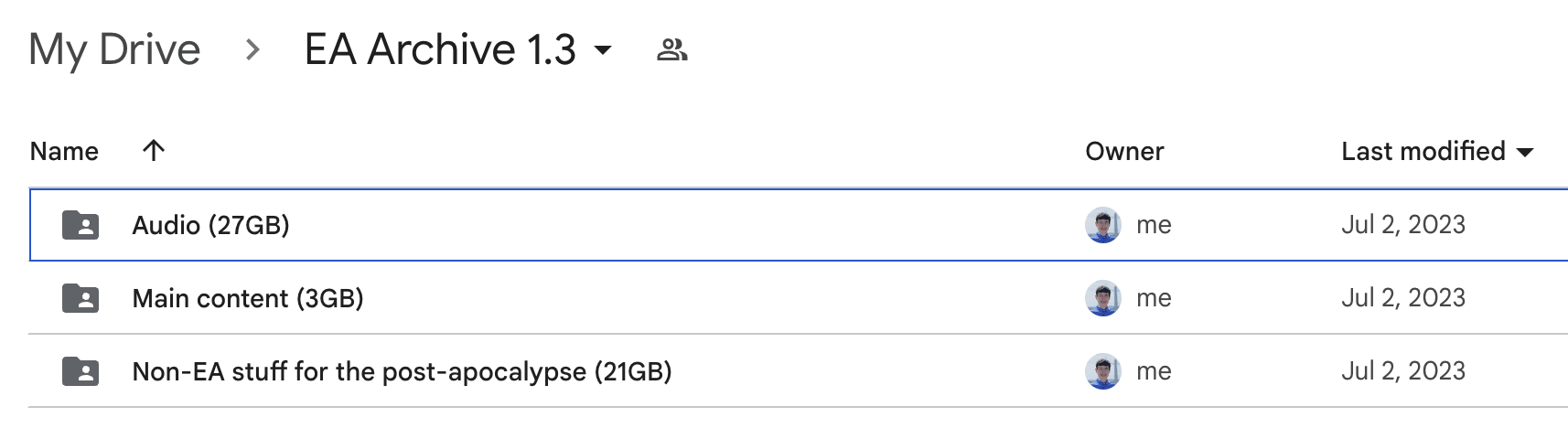

As shown in the screenshot, there are three folders. The smallest one, "Main content," contains html, pdf, and other static, text-based files. It is by far the most important to download.

If for whatever reason space isn't an issue and you'd like to download the larger folders too, that would be great.

I will post a shortform quick take (at least) when there's been a major enough revision to warrant me asking for people to download a new version.

How you can help

1) Download!

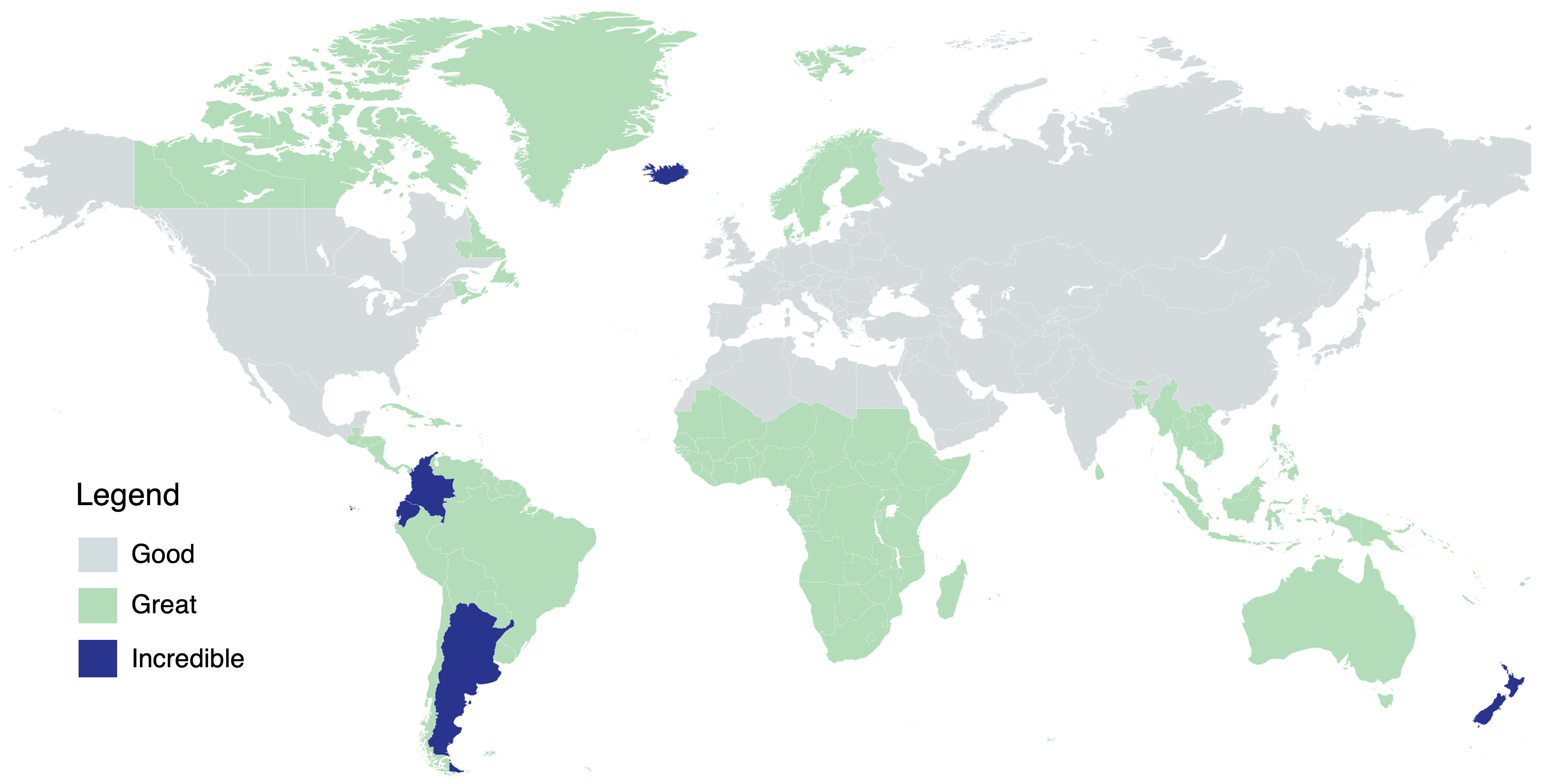

This project depends on people like you downloading and storing the Archive on a computer or flash drive that you personally have physical access to, especially if you live in any of the following:

- Southeast Asia + Pacific (esp. New Zealand)

- South and Central Africa

- Northern Europe (esp. Iceland)

- Latin America, Mexico City and south (esp. Ecuador, Colombia, and Argentina)

- Any very rural area, anywhere

If you live in one of the shaded areas (green or blue), I would love to buy you a flash drive to make this less annoying and/or enable you to store copies in multiple locations, so please get in touch via the Google Form, DM, or any other method.

2) Suggest/submit, and provide feedback

Currently, the limiting factor on the Archive's contents is my ability and willingness to find and identify relevant resources and then scrape or download them (i.e., not the cost or feasibility of storage). If you notice something that ought to be in there but isn't, please use this Google Form to do any of the following:

- Let me know what it is, broadly (good)

- Send me a list of URLs containing the info (better)

- Send me a Google Drive link with the files you'd like added (best)

- Provide any general feedback or suggestions

I may have to be somewhat judicious about large video and audio files, but virtually any relevant and appropriate pdf/text/web/spreadsheet content should be fine.[1]

3) Share

Send this post to friends, especially other EAs who do not regularly use the Forum!

FAQ

How sure are you that this is at all necessary/helpful?

Not super sure! All things considered I think there's like a 70% chance I'd have done at least this much if I had done a lot more research.

Wasn't there a tweet?

Yeah, from an embarrassingly long time ago.

Wasn't there a website?

Yeah, but it proved more trouble than it was worth.

Contact info

- Email: aaronb50@gmail.com

- Twitter (DM): @AaronBergman18

- Also DM or comment here, on the Forum!

[1] Fun fact: the plain text of all EA Forum posts takes up about 100MB (as of a few weeks ago), equivalent to about 2 hours of decent quality audio.

Note also that the Internet Archive (Wayback Machine) is working on an offline archive (which, if I understand correctly, is intended to be installable as a local server to have a copy of some parts of the web which you could "load" into a browser and navigate pages ordinarily).

I think it'd be cool to have a collection for effective altruism-related resources, which might then be picked up by some of the people saving offline copies.