Since Giving What We Can was formally founded in 2009 with just 23 founding members, the community has grown significantly, and we’re approaching 10,000 people who have taken the 10% Pledge (hopefully within the next couple of months)! Our community has now donated over $250 million and is predicted to donate over $1 billion across our members’ lifetimes.

If you’re a pledger, join us in sharing stories this week:

To celebrate our community’s impact over the last 15 years, we’re hoping to light up the internet this coming week with your stories and quotes. We’re inviting all pledgers to share (or re-share) their stories about pledging, photos from the early days, what the pledge has meant to them, or even their hopes for Giving What We Can and the 10% Pledge in the future. We’ll then compile a selection of these stories and photos (and anything else we receive) to highlight how powerful it can be to give significantly and effectively.

This is a great way not only to reflect on your giving and the community, but also to show your friends and networks what the Pledge is all about. Hopefully it will also encourage some of them to join us during next month’s Pledge Week (December 16th–22nd) as we near 10,000 lifetime pledges.

How to get involved:

- Post on social media tagging Giving What We Can and share your thoughts or story about pledging (anything from what motivated you to take the pledge, to how you found out about it, to how it has affected your life so far!), along with your hopes for the future of Giving What We Can. Bonus points if you include a photo of yourself holding your pledge and/or wearing your pin: you could take a new one or share one from the past! If you prefer, you can use one of these customisable images to accompany your story instead, or even post it separately later in the week.

- If you don’t use social media, submit a quote and photo to this form so we can share your story on our blog and social media.

- You can also add your story to our EA Forum thread.

You can find some example post ideas and more information here.

Don’t forget to add a diamond to your LinkedIn or X profile to show you’re a pledger! Several new pledgers have mentioned the diamonds as one of the reasons they took the pledge.

And most importantly, we hope you enjoy seeing memories and reflections from across the community during this exciting anniversary.

The last 15 years have shown that thousands of people are willing to take giving to the most effective charities seriously, and to give a significant share of their income in pursuit of a better world, both for people alive today and for those in the future.

We hope the next 15 years will bring significant growth and show that giving effectively and significantly can truly become a cultural norm.

Thank you for your continued giving, and for your continued support of Giving What We Can.

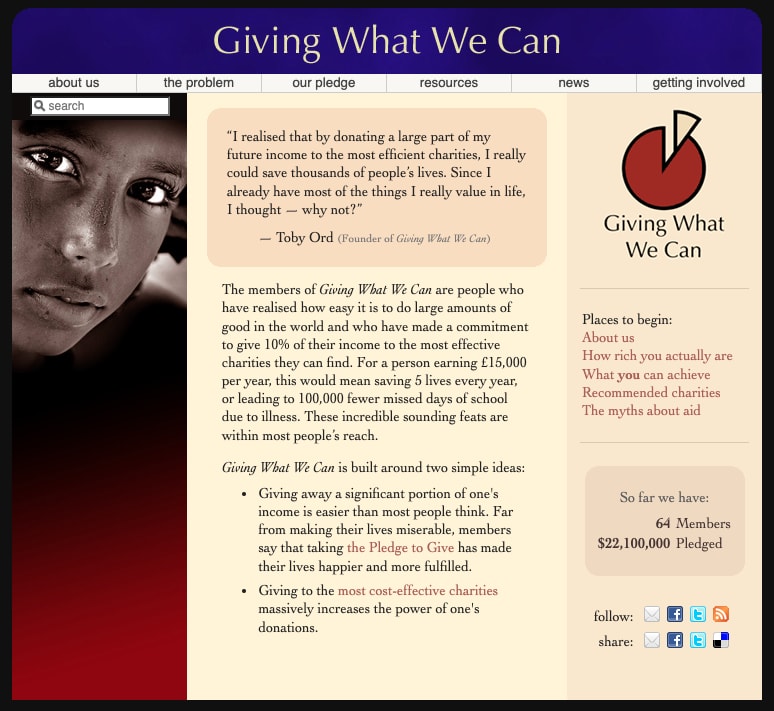

P.S. Here’s a throwback to the 2010 GWWC website, which Toby Ord coded himself!

£300/$450 (~£450/$650 inflation-adjusted) per life back then... unfathomably low.

https://old.reddit.com/r/EffectiveAltruism/comments/1gmtdrm/has_average_cost_to_save_a_life_increased_or/