(Poster's note: Given the subject matter, I am posting an additional copy here in the EA Forum. The theoretically canonical copy of this post is on my Substack and I also post to Wordpress and LessWrong.)

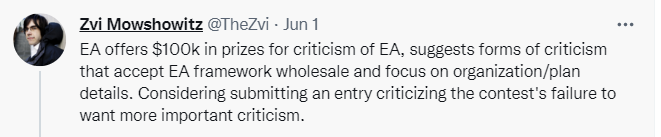

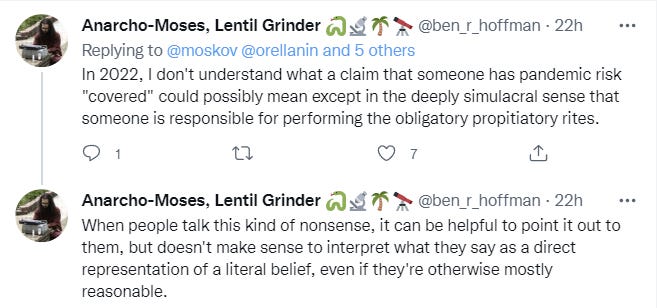

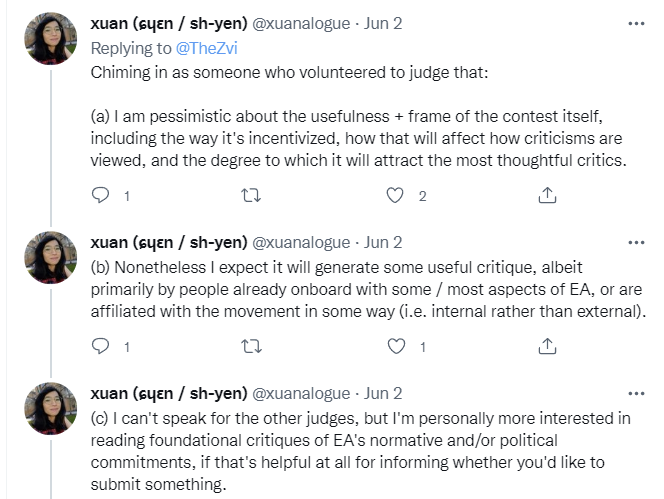

Recently on Twitter, in response to seeing a contest announcement asking for criticism of EA, I offered some criticism of that contest’s announcement.

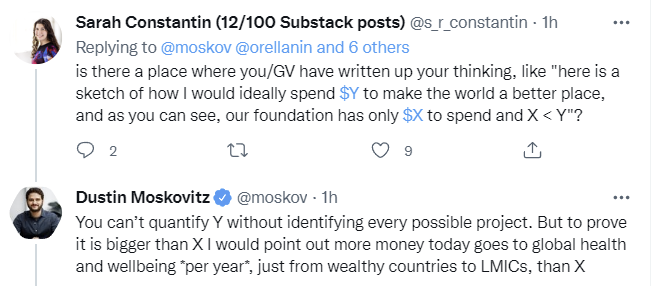

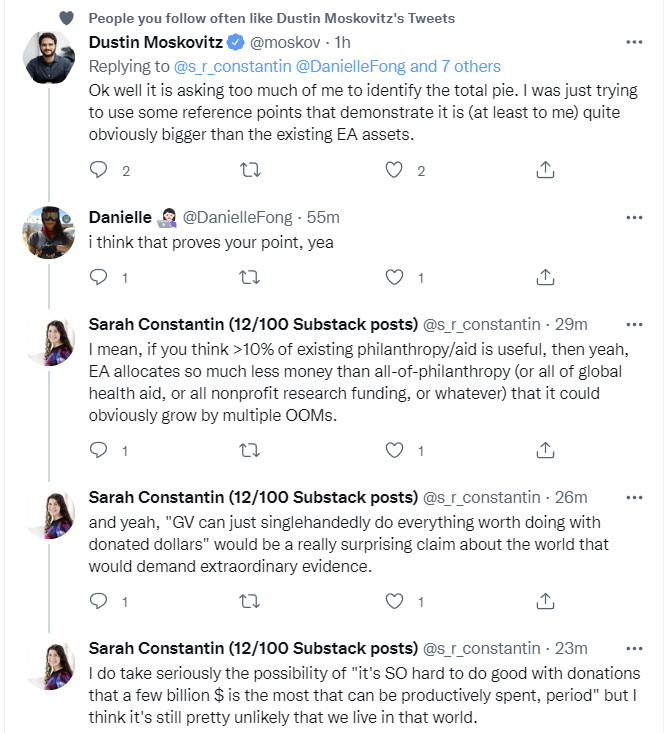

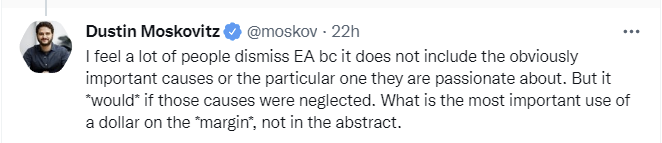

That sparked a bunch of discussion about central concepts in Effective Altruism. Those discussions ended up including Dustin Moskovitz, who showed an excellent willingness to engage and make clear how his models worked. The whole thing seems valuable enough to preserve in a form that one can navigate, hence this post.

This compiles what I consider the most important and interesting parts of that discussion into post form, so it can be more easily seen and referenced, including in the medium-to-long term.

There are a lot of offshoots and threads involved, so I’m using some editorial discretion to organize and filter.

To create as even-handed and useful a resource as possible, I am intentionally not going to interject commentary into the conversation here beyond the bare minimum.

As usual, I use screenshots for most tweets to guard against potential future deletions or suspensions, with links to key points in the threads.

(As Kevin says, I did indeed mean should there.)

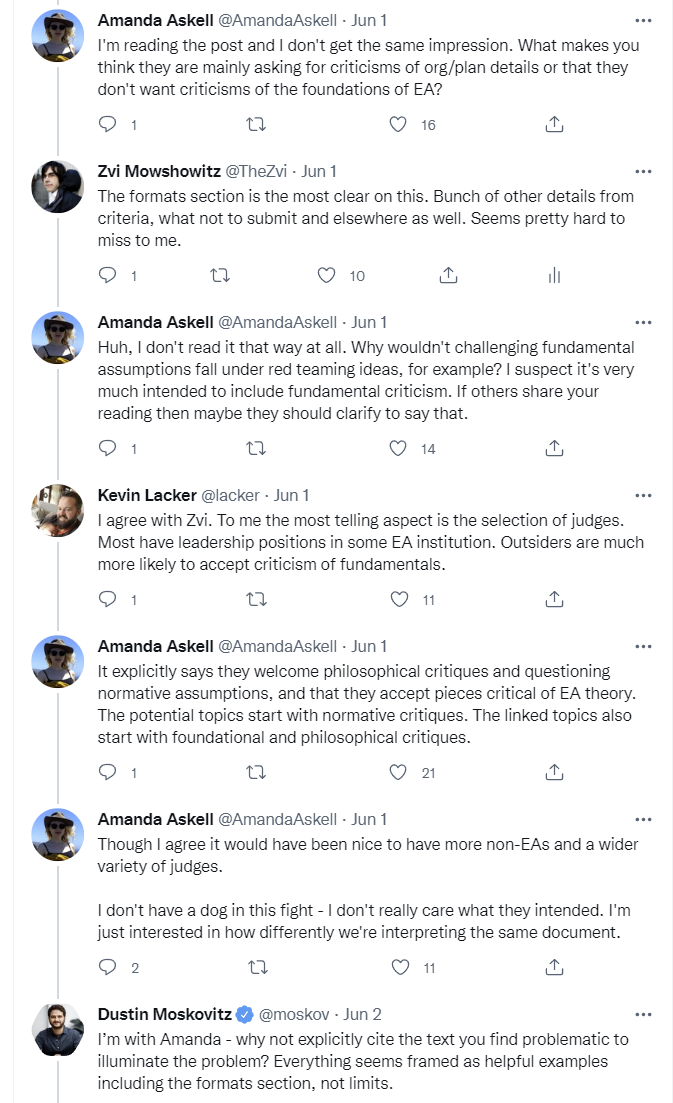

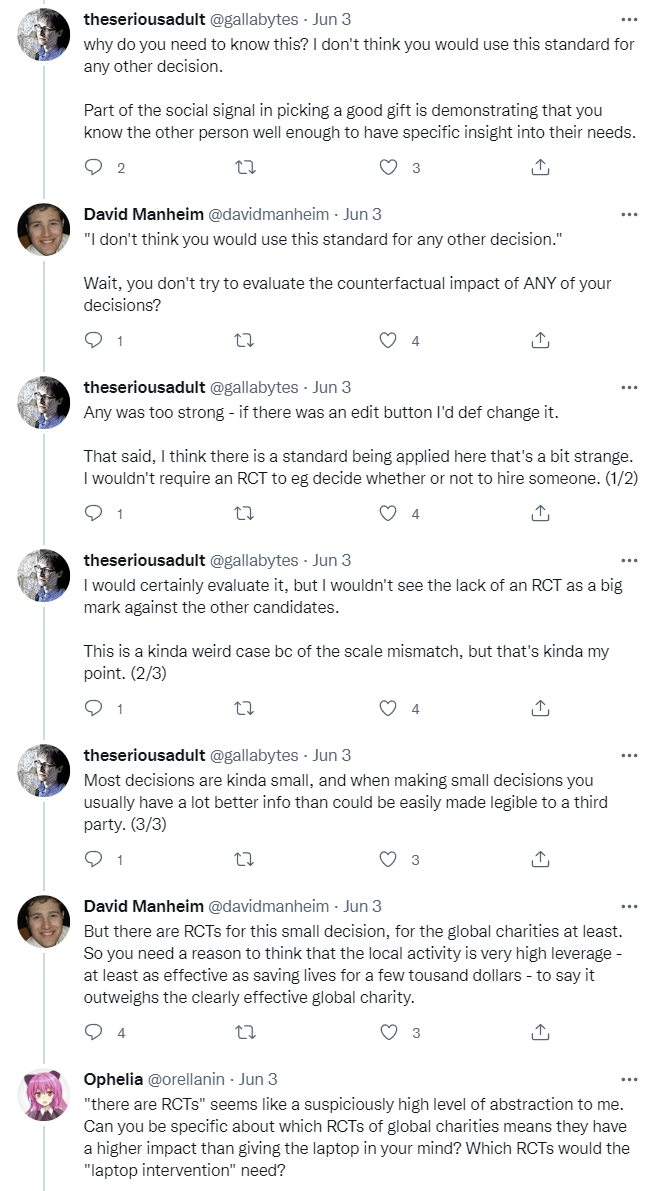

At this point there are two important threads that follow, and one additional reply of note.

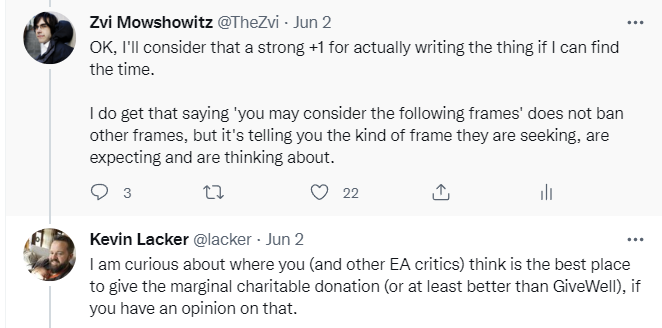

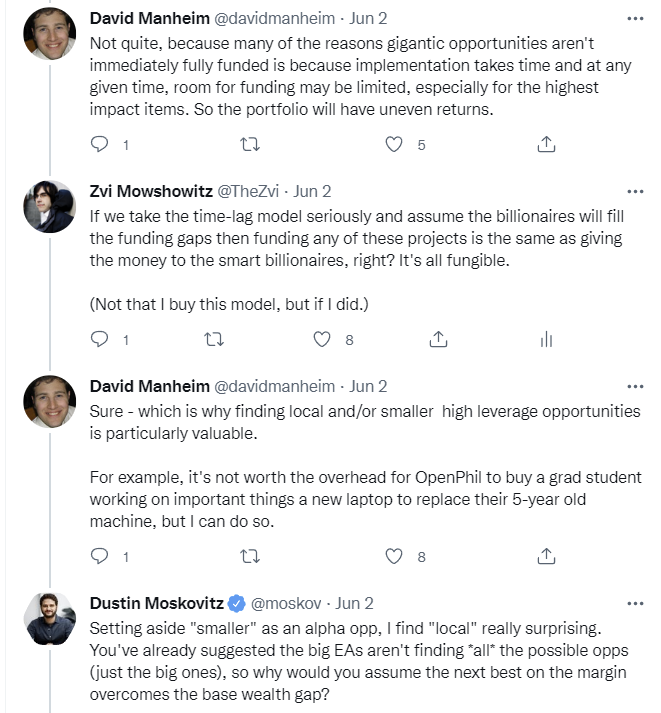

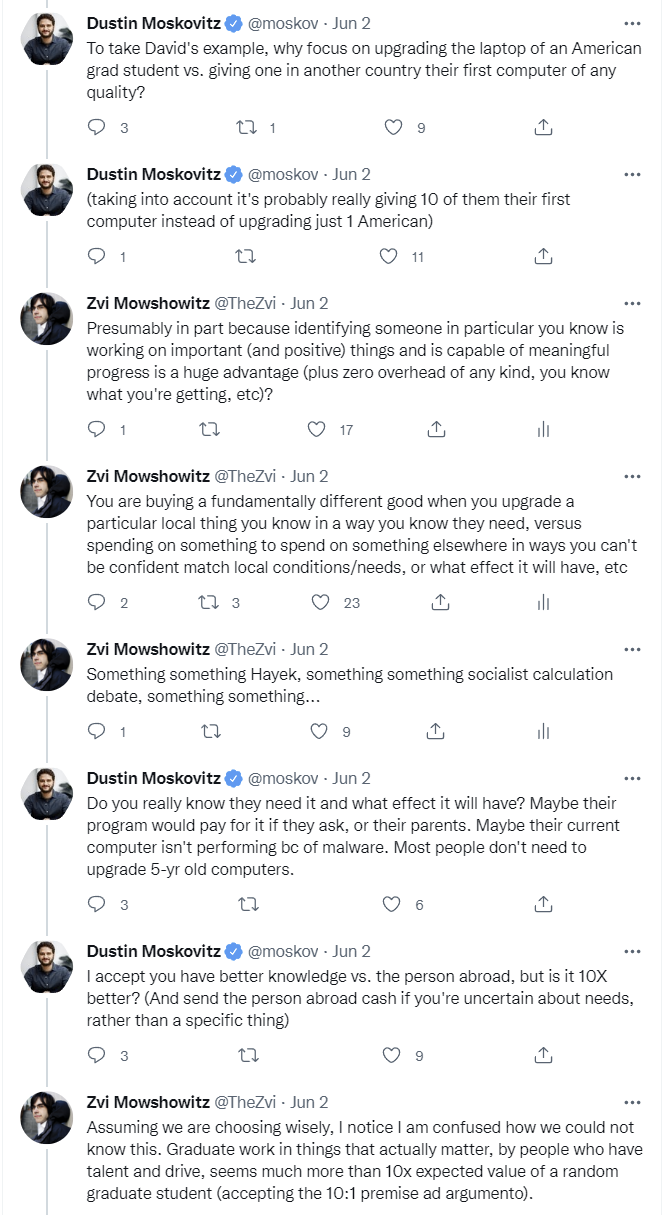

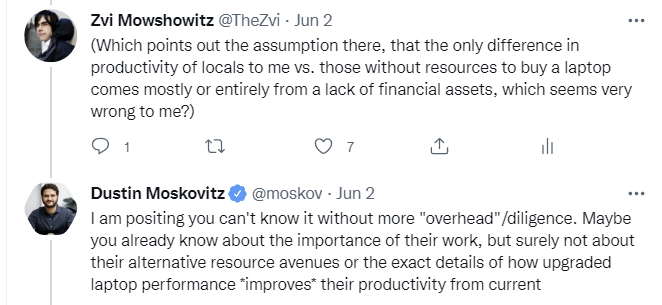

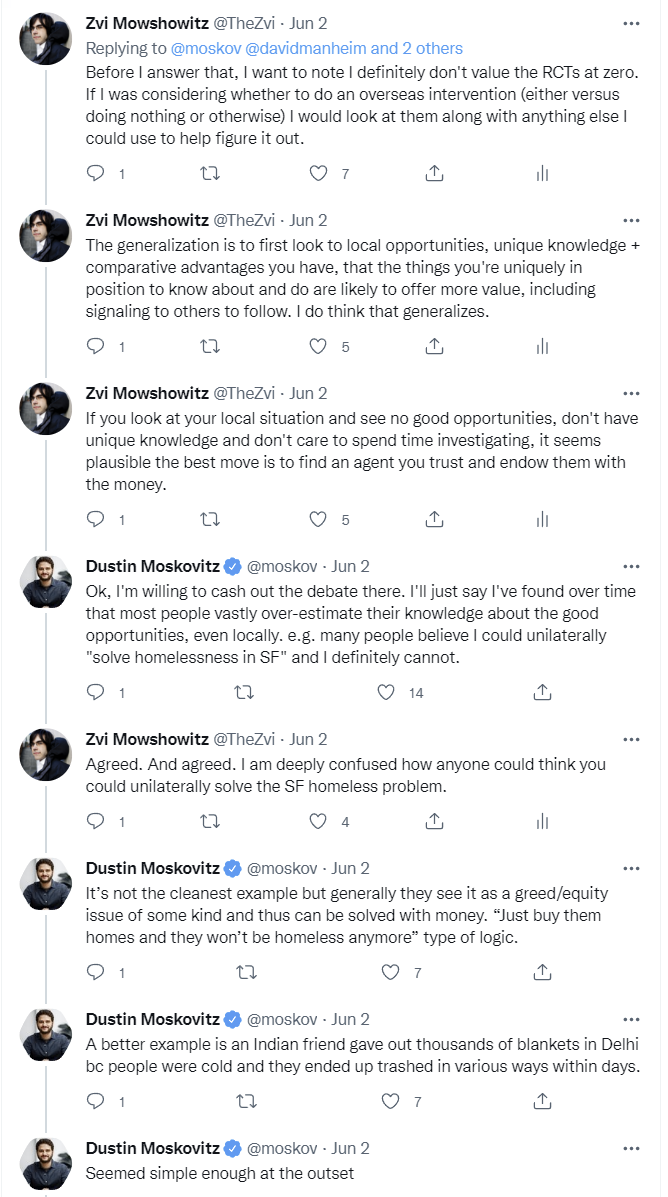

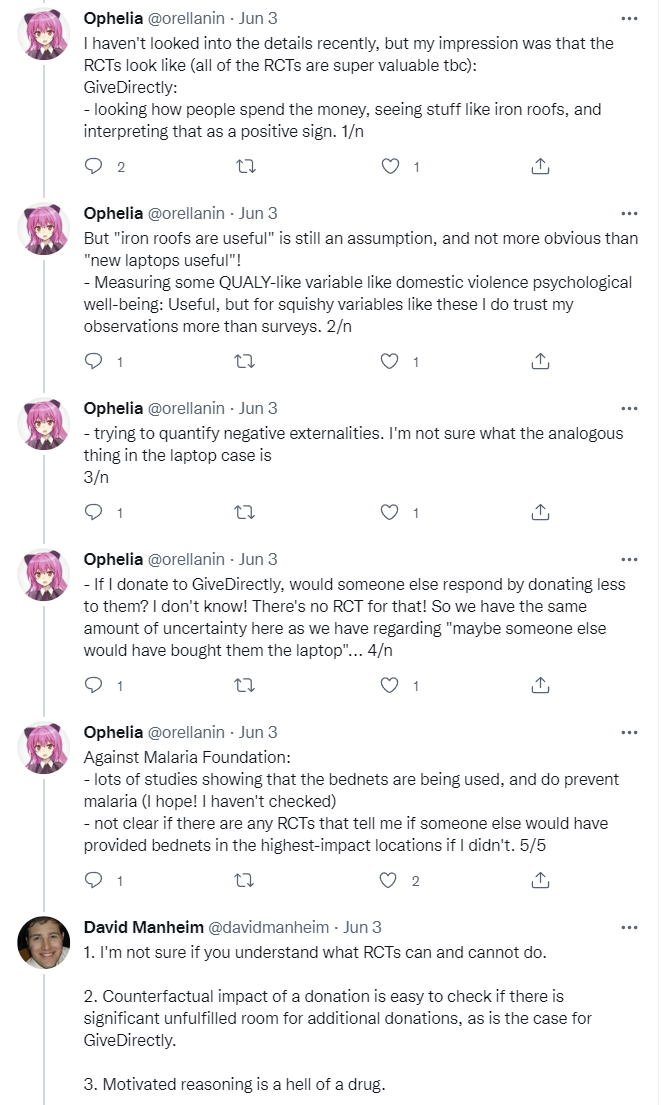

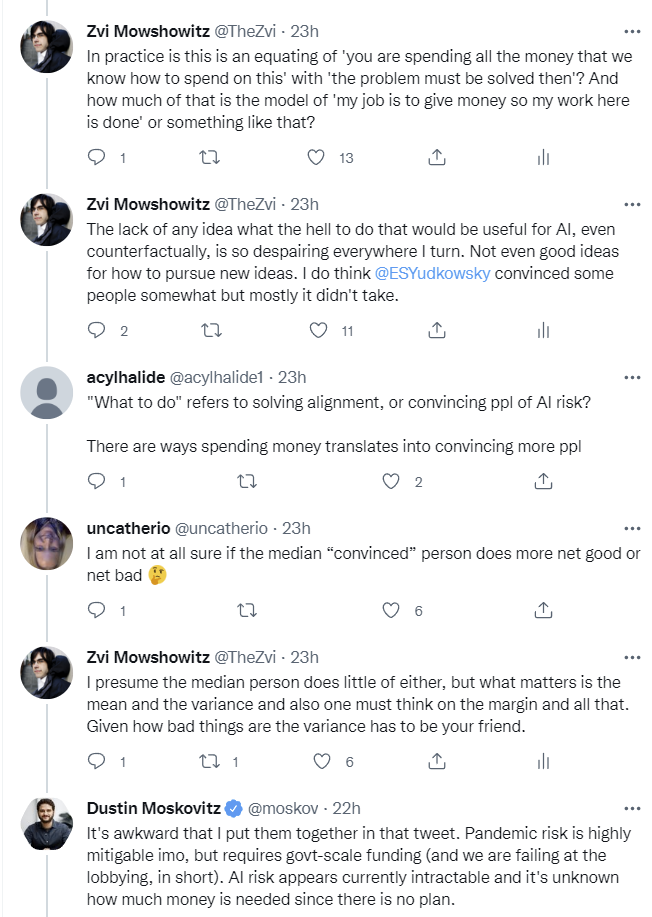

Thread one, which got a bit tangled at the beginning but makes sense as one thread:

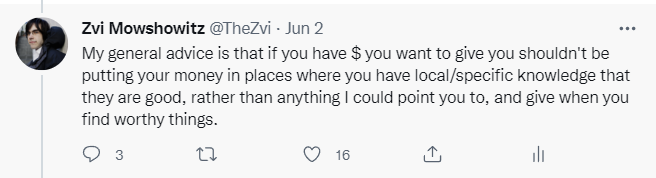

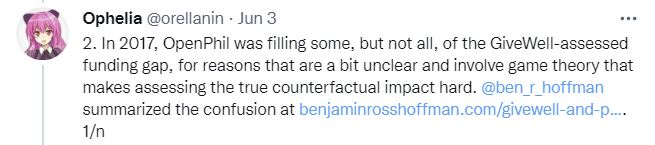

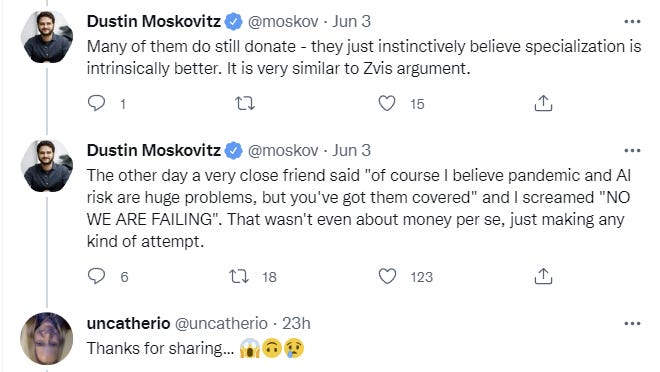

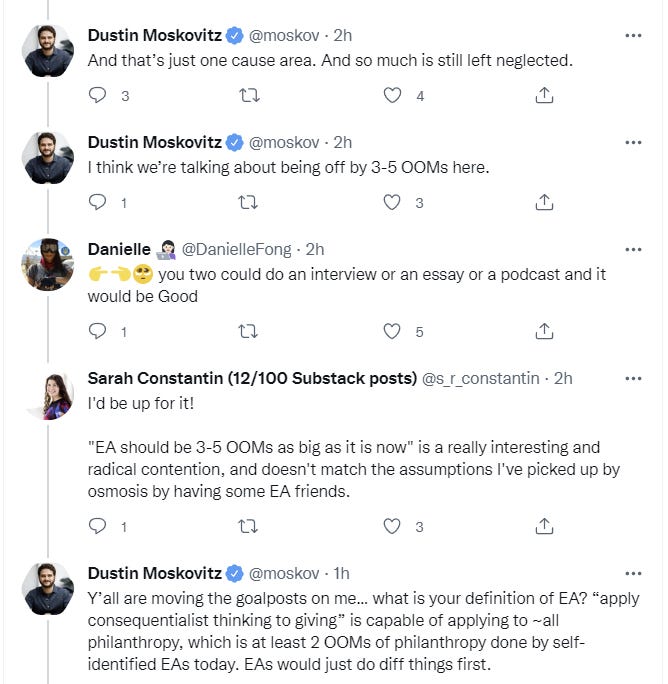

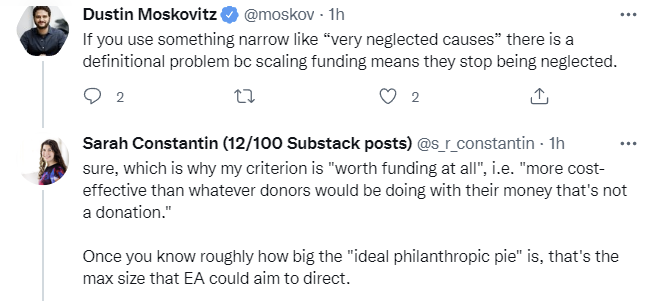

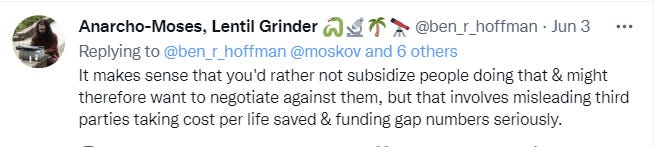

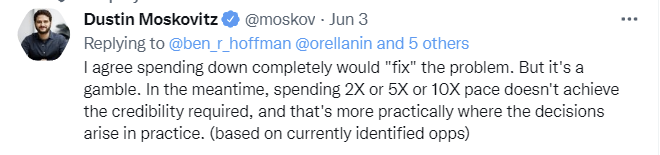

Thread two, which took place the next day and went in a different direction.

Link here to Ben’s post, GiveWell and the problem of partial funding.

Link to GiveWell blog post on giving now versus later.

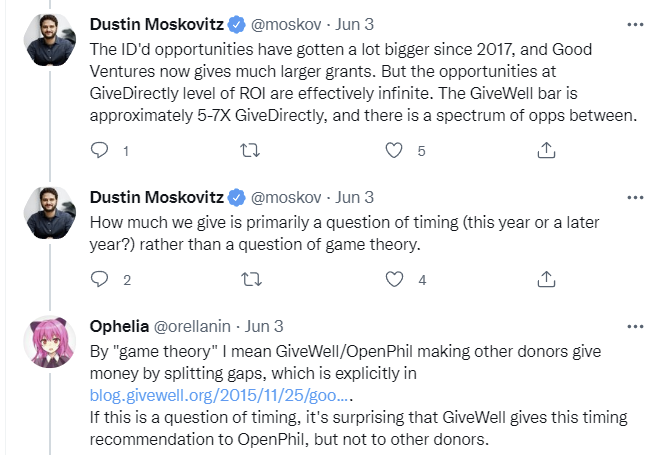

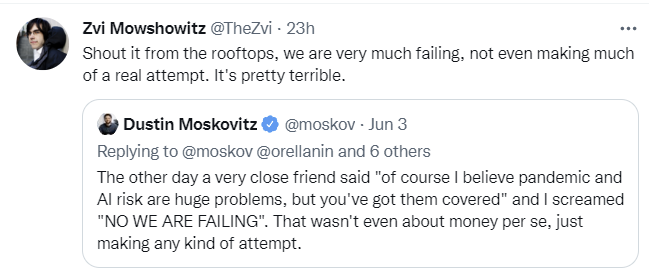

Dustin’s “NO WE ARE FAILING” point seemed important so I highlighted it.

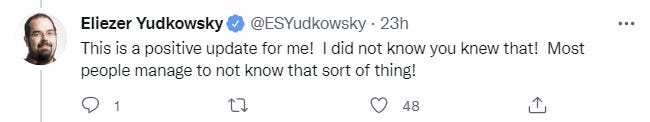

There was also a reply from Eliezer.

And this on pandemics in particular.

Sarah asked about the general failure to convince Dustin’s friends.

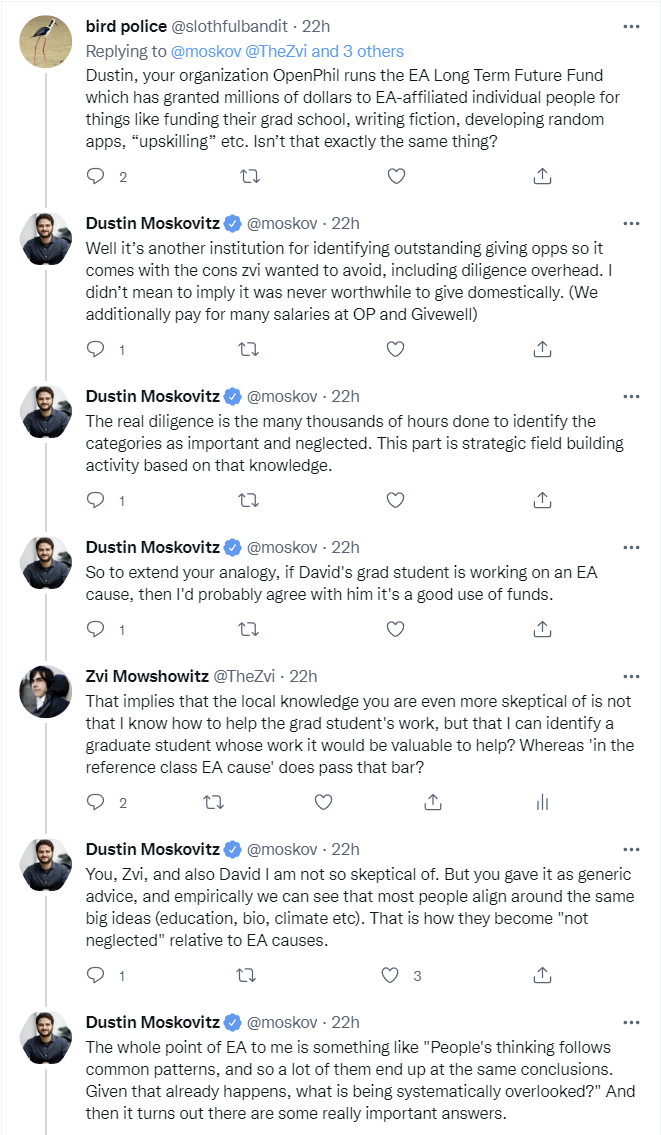

These two notes branch off of Ben’s comment that covers-all-of-EA didn’t make sense.

Ben also disagreed with the math that there was lots of opportunity, linking to his post A Drowning Child is Hard to Find.

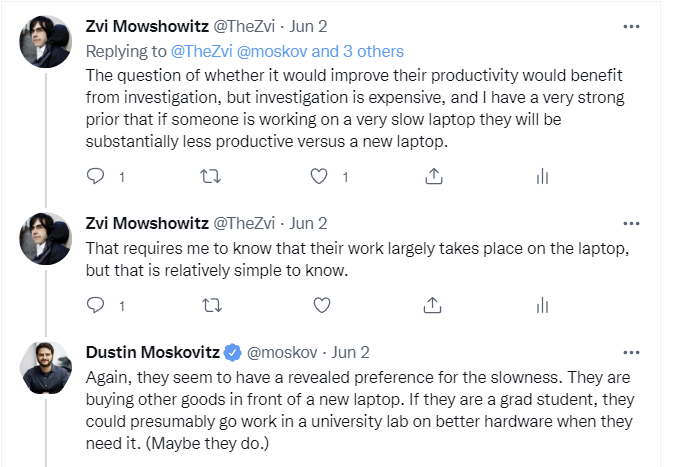

This thread responds to Dustin’s claim, further up the main thread, that you need to know details about the upgrade to the laptop. I found it worthwhile but did not include it directly for reasons of length.

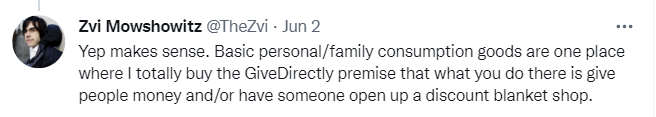

This came in response to Dustin’s challenge on whether info was 10x better.

After the main part of thread two, there was a different discussion about pressures perhaps being placed on students to be performative, which I found interesting but am not including for length.

This response to the original Tweet is worth noting as well.

Again, thanks to everyone involved and sorry if I missed your contribution.

I saw many bits of this discussion on Twitter and found them interesting, but missed more than one of the threads posted here. It was a great idea to collect them in one place, thanks for doing so!

I know this isn't the point of the thread but I feel the need to say that if people think a better laptop will increase their productivity they should apply to the EAIF.

https://funds.effectivealtruism.org/funds/ea-community

(If you work at an EA org, I think that your organisation normally should pay unless they aren't able to for legal/bureaucratic reasons)

I think the Aumann/outside-view argument for 'giving friends money' is very strong. Imagine your friend is about as capable and altruistic as you. But you have way more money. It just seems rational and efficient to make the distribution of resources more even? This argument does not at all endorse giving semi-random people money.

I've also had this thought (though wouldn't necessarily have thought of it as an outside view argument). I'm not convinced by counterarguments here in the thread so far.

Quoting from a reply below that argues for deferring to grantmakers (and thereby increasing their overhead with them getting more applications):

>You may also be unaware of ways it would backfire, and the reason something doesn't get funded is because others judge it to be net negative.

I mean, that's true in theory, but giving people who you know well (so have a comparative advantage at evaluating their character and competence) some extra resources isn't usually a high-variance decision. Sure, if one of your friends had a grand plan for having impact in the category of "tread carefully," then you probably want to consult experts to make sure it doesn't backfire. But you also want to talk to your friend/acquaintance to slow down in general, in that case, so it isn't a concept that only or particularly applies to whether to give them resources. And for many or even most people who work on EA topics, their work/activities don't come with high backfiring risks (at least I tentatively think so, even though I might agree with the statement "probably >10% of people in EA have predictably negative impact." Most people who have negative impact have low negative impact.)

>This would be like the opposite of the donor lottery, which exists to incentivize fewer deeper independent investigations over more shallow investigations.

I think both things are valuable. You can focus on comparative advantages and reducing overhead, or you can focus on benefits from scale and deep immersion.

One more thought on this: If someone is inexperienced with EA and feels unsuited for any grantmaking decisions, even in areas where they have local information that grantmakers lack, it makes more sense for them to defer. However, it gets tricky. They'll also tend to be bad at deciding who to defer to. So, yeah, they can reduce variance and go with something broadly accepted within the community. But that still covers a lot of things – it applies to longtermism as well as neartermism. Many funds in the community rely on quite specific normative views (and empirical ones, but deference makes sense there more straightforwardly), and the person we're now talking about will be more poorly positioned to decide on this. So they're generally in a tricky situation and probably benefit from gaining a better understanding of several things. To summarize, I think if someone knows where and when to defer, they're probably also in a good enough position to decide that there's a particular person in their social environment who'd do good things if they had more money. (And the idea/proposal here is to only give money to people locally if you actually feel convinced by it, rather than doing it as a general policy. The original comment could maybe be interpreted as supporting a general policy of giving out money to less affluent acquaintances, whereas my stance is more like "Do it if it seems compellingly impactful to you!")

This would be like the opposite of the donor lottery, which exists to incentivize fewer deeper independent investigations over more shallow investigations.

You could also give it to someone far better informed about and a better judge of giving opportunities. I think grantmakers are in this position relative to the majority of EAs, but you would be increasing funding/decision-making concentration.

That doesn't really engage with the argument. If some other agent is values-aligned and approximately equally capable, why would you keep all the resources? It doesn't really make sense to value 'you being you' so much.

I don't find donor lotteries compelling. I think resources in EA are way too concentrated. 'Deeper investigations' is not enough compensation for making power imbalances even worse.

I'm not suggesting you keep all the resources, I'm suggesting you give them to someone even more capable (and better informed) than someone equally capable, to increase the probability that they'll be directly allocated by someone more capable.

Keep in mind that many things you might want to fund are in scope of an existing fund, including even small grants for things like laptops. You can just recommend they apply to these funds. If they don't get any money, I'd guess there were better options you would have missed but should have funded first. You may also be unaware of ways it would backfire, and the reason something doesn't get funded is because others judge it to be net negative. We get into unilateralist curse territory. There are of course cases where you might have better info about an opportunity, but this should be balanced against having worse info about other opportunities.

Of course, if you are very capable, then plausibly you should join a fund as a grantmaker or start your own or just make your own direct donations, but you'd want to see what other grantmakers are and aren't funding and why, or where their bar for cost-effectiveness is and what red flags they use, at least.

This level of support for centralization and deferral is really unusual. I actually don't know of any community besides EA that endorses it. I'm aware it's a common position in effective altruism. But the arguments for it haven't been worked out in detail anywhere I know.

"Keep in mind that many things you might want to fund are in scope of an existing fund, including even small grants for things like laptops. You can just recommend they apply to these funds. If they don't get any money, I'd guess there were better options you would have missed but should have funded first. You may also be unaware of ways it would backfire, and the reason something doesn't get funded is because others judge it to be net negative."

I genuinely don't think there is any evidence (besides some theory-crafting around the unilateralist's curse) to think this level of second-guessing yourself and deferring is effective. Please keep in mind the history of the EA funds. Several funds basically never disbursed the funds. And the fund managers explicitly said they didn't have time. Of course things can improve, but this level of deferral is really extreme given the community's history.

Suffice it to say, I don't think further centralizing resources is good, nor is making things more bureaucratic. I'm also not sure there is actually very much risk of the 'unilateralist's curse' unless you are being extremely careless. I trust most EAs to be at least as careful as the leadership. Probably the most dangerous thing you could possibly fund is AI capabilities. Open Phil gave 30M to OpenAI and the community has been pretty accepting of AI capabilities. This is way more dangerous than anything I would consider funding!

Ya, I guess I wouldn't have funded them myself in Open Phil's position, but I'm probably missing a lot of context. I think they did this to try to influence OpenAI to take safety more seriously, getting Holden on their board. Pretty expensive for a board seat, though, and lots of potential downside with unrestricted funding. From their grant writeup:

FWIW, I trust the judgement of Open Phil in animal welfare and the EA Animal Welfare Fund a lot. See my long comment here.

Luke from Open Phil on net negative interventions in AI safety (maybe AI governance specifically): https://forum.effectivealtruism.org/posts/pxALB46SEkwNbfiNS/the-motivated-reasoning-critique-of-effective-altruism#6yFEBSgDiAfGHHKTD

If I had to guess I would predict Luke is more careful than various other EA leaders (mostly because of Luke's ties to Eliezer). But you can look at the observed behavior of Open Phil/80K/etc. and I don't think they are behaving as carefully as I would endorse with respect to the most dangerous possible topic (besides maybe gain-of-function research, which EA would not fund). It doesn't make sense to write leadership a blank check. But it also doesn't make sense to worry about the 'unilateralist's curse' when deciding if you should buy your friend a laptop!

This seems divorced from the empirical reality of centralized funding. Maybe you should be more specific about what orgs I should trust to direct my funds? The actual situation is that we have a huge amount of unspent money bottlenecked by evaluation. I can't find it right now, but you can look up the post-mortems of EA Funds and other CEA projects on here, which spent IIRC under 50%? of donations explicitly due to bottlenecks on evaluation.

This is why I was very glad to see that FTX set up 20? re-granters for their Future Fund. Under your theory of giving, such emphasis on re-granters should be really surprising.

Relatedly, a huge amount of the information used by granters is just network capital. It seems inefficient for everyone else to discard their network capital. It doesn't seem like a stretch to think that my #1 best opportunity that I become aware of over a few years would be better than a grantmaker's ~100th best given that my network isn't a 100th of the size.
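The arithmetic behind that last claim can be sketched in a few lines. This is only a rough illustration under strong assumptions that are not in the original comment: everyone draws opportunities independently from the same continuous quality distribution, and everyone ranks what they see equally well. Under those assumptions the expected quantile of the k-th best of N draws is (N - k + 1) / (N + 1). The function names and the pool sizes below are hypothetical.

```python
from fractions import Fraction


def expected_quantile_of_kth_best(pool_size: int, k: int) -> Fraction:
    """Expected quantile (0 to 1) of the k-th best of pool_size i.i.d. draws.

    Assumption: draws are independent and come from one continuous
    distribution, so their quantiles are uniform order statistics.
    """
    if not 1 <= k <= pool_size:
        raise ValueError("k must be between 1 and pool_size")
    return Fraction(pool_size - k + 1, pool_size + 1)


def own_best_beats_kth_best(own_pool: int, grantmaker_pool: int, k: int) -> bool:
    """True if an individual's single best opportunity is expected to rank
    above the grantmaker's k-th best (both pool sizes are hypothetical)."""
    own_best = expected_quantile_of_kth_best(own_pool, 1)
    marginal_grant = expected_quantile_of_kth_best(grantmaker_pool, k)
    return own_best > marginal_grant


if __name__ == "__main__":
    # Hypothetical numbers: a grantmaker sees 1000 opportunities and funds 100.
    for own_pool in (5, 10, 50):
        print(own_pool, own_best_beats_kth_best(own_pool, 1000, 100))
```

With these made-up numbers the comparison flips at about ten opportunities, i.e. roughly a 100th of the grantmaker's pool. The sketch says nothing about the counterargument raised elsewhere in the thread, that grantmakers may simply be better judges of what they see.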

Maybe things are different in animal welfare, which is my focus, because of funding constraints?

All the things I come across in animal welfare are already in scope for Open Phil, Founders Pledge, Farmed Animal Funders, ACE or the EA Animal Welfare Fund, and they fund basically all the things I'd be inclined to fund, so if they don't fund something I'd be inclined to fund, I would want to know why before I did, since there's a good chance they had a good reason for not funding it. Some large EAA orgs like THL can also apparently absorb more funding.

My main disagreements I might continue to endorse after discussion would be that I suspect some things they fund are net negative or not net positive in expectation. So maybe rather than giving directly to a fund, I would top up some of their grants/recommendations. Also, I might give less weight than ACE to some things they view as red flags.

It does seem like the "not even spending the money" problem doesn't extend to spending on animal welfare as much, at least in the case of EA Funds that I know about.

ACE seems like a great example for the case against deference.

Do you also have a low opinion of Rethink? They spent a long time not getting funded.

What about direct action?

I know that in Sapphire's case, the two projects she is highest on, afaik, are Rethink and DxE / direct action generally. Neither has had an easy time with funders, so empirically it seems like she shouldn't expect so much alignment with funders in future either.

RP was getting funding, including from Open Phil in 2019 and EA Funds in 2018, the year it was founded, just not a lot. I donated to RP in late 2019/early 2020 (my biggest donation so far), work there now and think they should continue to scale (at least for animal welfare, I don't see or pay a lot of attention to what's going on in the other cause areas, so won't comment on them).

I think DxE is (and in particular their confrontational/disruptive tactics are) plausibly net negative, but I'm really not sure, and would like to see more research before we try to scale it aggressively, although we might need to fund it in order to collect data. I have also seen some thoughtful EAAs become less optimistic about or leave DxE over the years. I think their undercover investigations are plausibly very good and rescuing clearly injured or sick animals from factory farms (not small farms) is good in expectation, but we can fund those elsewhere without the riskier stuff.

I wouldn't rely exclusively on them, anyway. There are other funders/evaluators, too.

https://forum.effectivealtruism.org/posts/3c8dLtNyMzS9WkfgA/what-are-some-high-ev-but-failed-ea-projects?commentId=7htva3Xc9snLSvAkB

"Few people know that we tried to start something pretty similar to Rethink Priorities in 2016 (our actual founding was in 2018). We (Marcus and me, the RP co-founders, plus some others) did some initial work but failed to get sustained funding and traction so we gave up for >1 year before trying again. Given that RP-2018 seems to have turned out to be quite successful, I think RP-2016 could be an example of a failed project?"

Seems somewhat misleading to leave this out.

Interesting. I hadn't heard about this.

I think EA Funds, Founders Pledge and Farmed Animal Funders didn't exist back then, and this would have been out of scope for ACE (too small, too little room for more funding, no track record) and GiveWell (they don't grant to research) at the time, so among major funders/evaluators, it pretty much would have been on Open Phil. But Open Phil didn't get into farm animal welfare until late 2015/early 2016:

https://www.openphilanthropy.org/research/incoming-program-officer-lewis-bollard/

https://www.openphilanthropy.org/grants/page/12/?q&focus-area=farm-animal-welfare&view-list=false

So seeing and catching RP this early on for a new farm animal welfare team at Open Phil was plausibly a lot to ask then.

The story is more complicated but I can't really get into it in public. Since you work at Rethink you can maybe get the story from Peter. I've maybe suggested too simplistic a narrative before. But you should chat with Peter or Marcus about what happened with Rethink and EA funding.

Thanks for the stuff about RP, that is not as bad as I had thought.

If you are aware of a major instance where your judgement differed from that of funds, why advocate such strong priors about the efficacy of funds?

I agree the investigations seem really good / plausibly highest impact (and should be important even just to EAs who want to assess priorities, much less for the sake of public awareness). And you can fund them elsewhere / fund individuals to do this -- yourself! Not via funds.

(This is a long comment, but hopefully pretty informative.)

I didn't fund anything that wasn't getting funding from major funders, I just didn't defer totally to the funders and so overweighted some things and struck out others.

I think I had little trust in funders/evaluators in EAA early on, and part of the reason I donated to RP was because I thought there wasn't enough good research in EAA, even supporting existing EAA priorities, and I was impressed with RP's. I trust Open Phil and the EA Funds much more now, though, since

My main remaining disagreement is that I think we should be researching the wild animal effects of farmed animal interventions and thinking about how to incorporate them in our decisions, since they can plausibly shift priorities substantially. This largely comes down to a normative/decision-theoretic disagreement about what to do under deep uncertainty/with complex cluelessness, though, not a disagreement about what would actually happen.

Yes, but I expect funders/evaluators to be more informed about which undercover investigators would be best to fund, since I won't personally have the time or interest to look into their particular average cost-effectiveness, room for more funding, track record, etc., on my own, although I can read others' research if it's published and come to my own conclusions on its basis. Knowing that one opportunity is really good doesn't mean there aren't far better ones doing similar work. I might give directly to charities doing undercover investigations (or whatever other intervention) rather than through a fund, but I'd prefer to top up orgs that are already getting funded or recommended, since they seem more marginally cost-effective in expectation.

Generally, if there's a bar for cost-effectiveness, some things aren't meeting the bar, and there are things above the bar (e.g. being funded by major funders or recommended by evaluators) with room for more funding, I think you should just top up things above the bar with room for more funding, but you can select among them. If there's an opportunity you're excited about, but not being funded by major funders, I think you should recommend they apply to EA Funds or others (and maybe post about them on the EA Forum), because

There may be exceptions to this, but I think this is the guide most EAs (or at least most EAAs) should follow unless they're grantmakers themselves, are confident they'd be selected as grantmakers if they applied, or have major normative disagreements with the grantmakers.

Since we are talking about funding people within your network that you personally know, not randos, the idea is that you already know this stuff about some set of people. Like, explicitly, the case for self-funding norms is the case for utilizing informational capital that already exists rather than discarding it.

I think it is not that hard to keep up with what last year's best opportunities looked like and get a good sense of where the bar will be this year. Compiling the top 5 opportunities or whatever is a lot more labor-intensive than reviewing the top 5, and you already state being informed enough to know about and agree with the decisions of funders. So I disagree with the level at which we should think we are flying blind.

Yes I think this will be the most common source of disagreement at least in your case, my case, sapphire's case. With respect to the things I know about being rejected this was the case.

All of that said, I think I have updated from your posts to be more encouraging of applying for EA funding and/or making forum posts. I will not do this in a deferential manner and to me it seems harmful to do so -- I think people should feel discouraged if you explicitly discard what you personally know about their competence etc.

How does this work? Do they get to see applicants? Or do they decide what to fund without seeing a bunch of applicants?

Still, the re-granters are grantmakers, and they've been vetted. They're probably much better informed than the average EA.

Wdym by "do they get to see the applicants"? (for context I am a regrant recipient) The Future Fund does one final review and possible veto over the grant, but I was told this was just to veto any major reputational risks / did not really involve effectiveness evaluation. My regranter did not seem to think it's a major filter and I'd be surprised to learn that this veto has ever been exercised (or that it had been in a year's time).

--

I mean, you made pretty specific arguments about the information theory of centralized grants. Once you break up across even 20 regranters, these effects you are arguing for -- the effects of also knowing about all the other applications -- become turbo diminished.

As far as I can tell none of your arguments are especially targeted at the average EA at all. You and sapphire are both personally much better informed than the average EA.

Are re-granters vetting applicants to the fund (or at least getting to see them), or do they just reach out to individuals/projects they've come across elsewhere?

Yes, that's true. Still, grantmakers, including re-granters with experience or who get to see a lot of applicants (not necessarily all applicants), should - in expectation -

I'd say this is true even compared to a highly engaged EA who doesn't individually vet a lot of opportunities, let alone get real world feedback on many grants. Grantmakers are highly engaged EAs, anyway, so the difference comes from experience with grantmaking and seeing more opportunities to compare.

I think re-granters make more sense when an area is really vetting-constrained or taking a number of applicants that would be unmanageable without re-granters, and I agree my argument is weaker in that case. I would guess animal welfare is not that vetting-constrained. The FTX Future Fund is a new fund aiming to grant a lot of money, the scope of "improve the far future" seems wider than near-term animal welfare, and because of less feedback on the outcomes of interest, priors for types of interventions should be much weaker than for animal welfare. Animal advocates (including outside EA) have been trying lots of things with little success and a few types of things with substantial success, so the track record for a type of intervention can be used as a pretty strong prior.

I don't think that their process is so defined. Some of them may solicit applications, I have no idea. In my case, we were writing an application for the main fund, solicited notes from somebody who happened to be a re-granter without us knowing (or at least without me knowing), and he ended up opting to fund it directly.

--

No need to restate

--

It's definitely true that in a pre-paradigmatic context vetting is at its least valuable. Animal welfare does seem a bit pre-paradigmatic to me as well, relative to for example global health. But not as much as longtermism.

--

concretely:

It seems relevant whether regranters would echo your advice, as applied to a highly engaged EA aware of a great-seeming opportunity to disburse a small amount of funds (for example, a laptop's worth of funds). I highly doubt that they would. This post by Linch https://forum.effectivealtruism.org/posts/vPMo5dRrgubTQGj9g/some-unfun-lessons-i-learned-as-a-junior-grantmaker does not strike me as writing by somebody who would like to be asked to micromanage <20k sums of money more than the status quo.

Ah, was looking forward to listening to this using the Nonlinear Library podcast, but Twitter screenshots don't work well with that. If someone made a version of this with the screenshots converted to normal text, that would be helpful for me + maybe others.

Why not take it a step further and ask funders if you should buy yourself a laptop?

Would it be possible to have a written summary of this? Perhaps the flow of the discussions/argument could be summarized in a paragraph or two?