(Poster's note: Given the subject matter, I am posting an additional copy here on the EA Forum. The theoretically canonical copy of this post is on my Substack, and I also post to WordPress and LessWrong.)

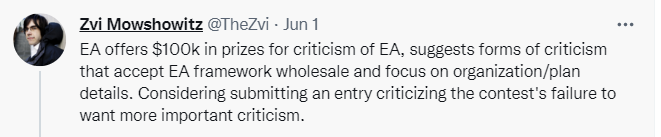

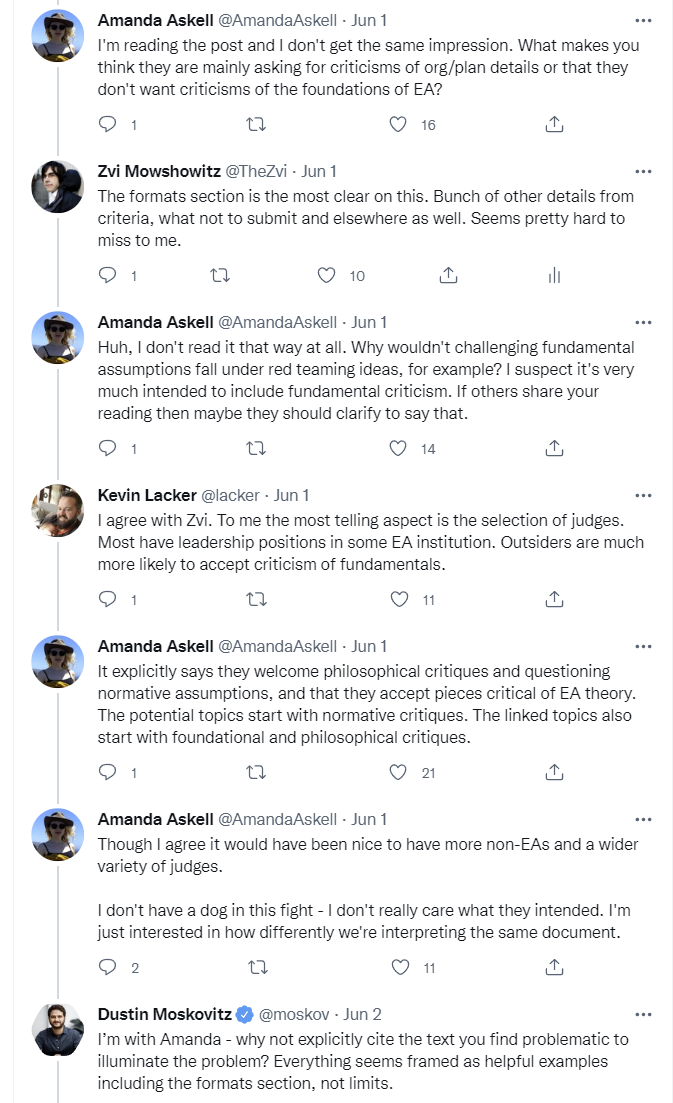

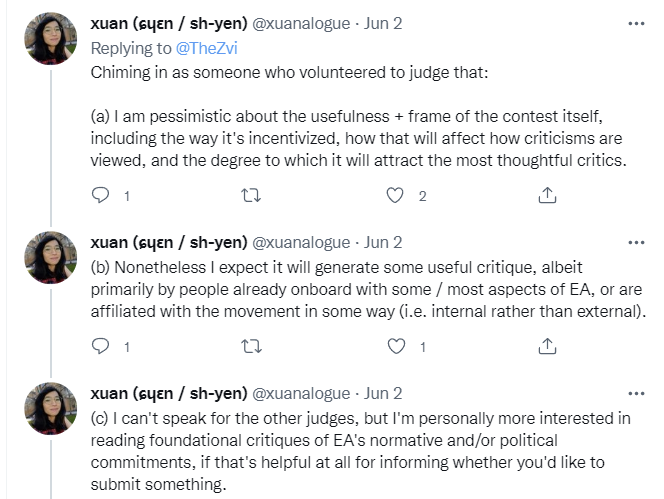

Recently on Twitter, in response to seeing a contest announcement asking for criticism of EA, I offered some criticism of that contest’s announcement.

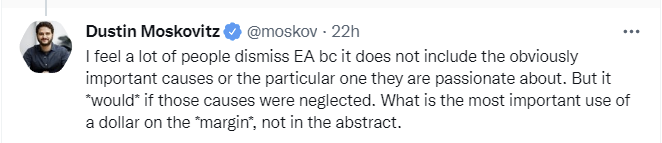

That sparked a bunch of discussion about central concepts in Effective Altruism. Those discussions ended up including Dustin Moskovitz, who showed an excellent willingness to engage and make clear how his models worked. The whole thing seems valuable enough to preserve in a form that one can navigate, hence this post.

This compiles what I consider the most important and interesting parts of that discussion into post form, so it can be more easily seen and referenced, including in the medium-to-long term.

There are a lot of offshoots and threads involved, so I’m using some editorial discretion to organize and filter.

To create as even-handed and useful a resource as possible, I am intentionally not going to interject commentary into the conversation here beyond the bare minimum.

As usual, I use screenshots for most tweets to guard against potential future deletions or suspensions, with links to key points in the threads.

(As Kevin says, I did indeed mean “should” there.)

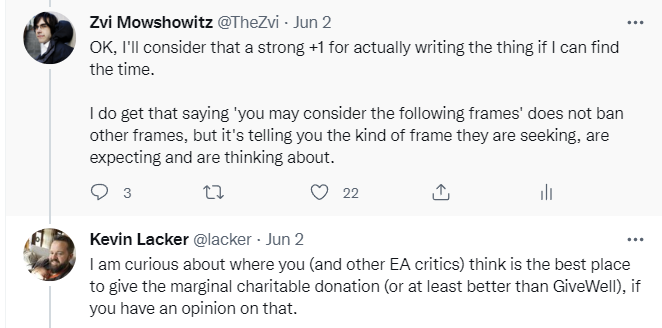

At this point there are two important threads that follow, and one additional reply of note.

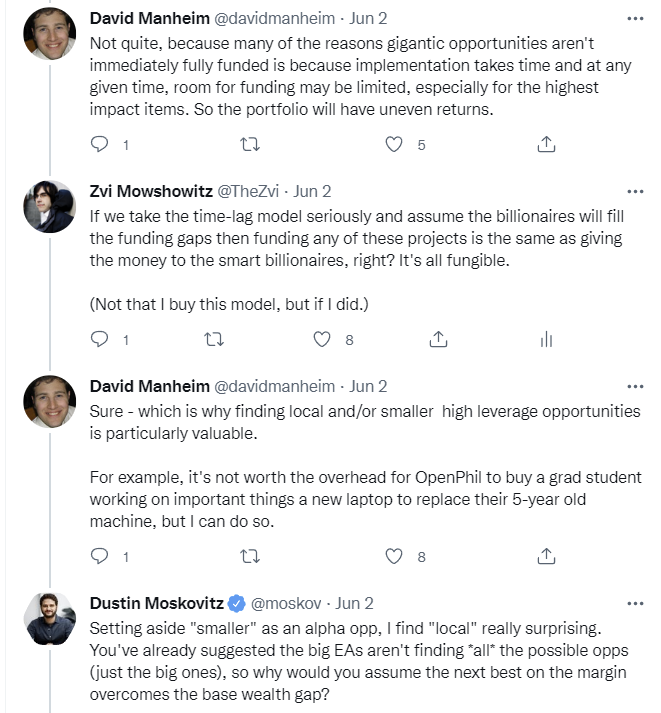

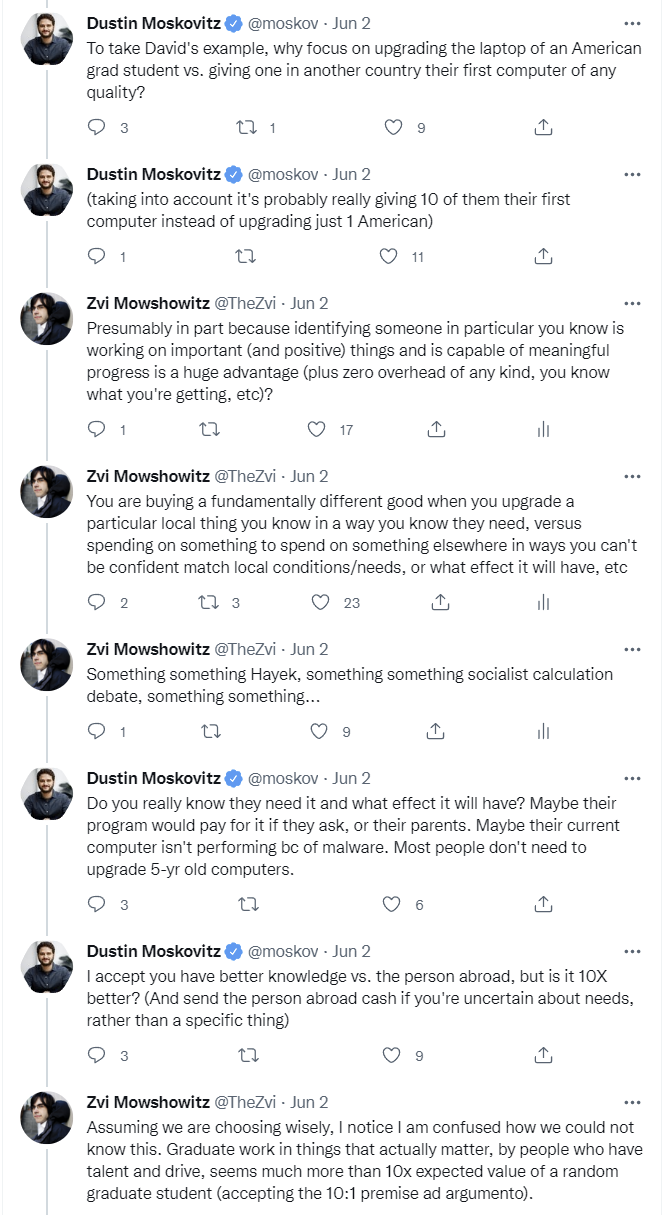

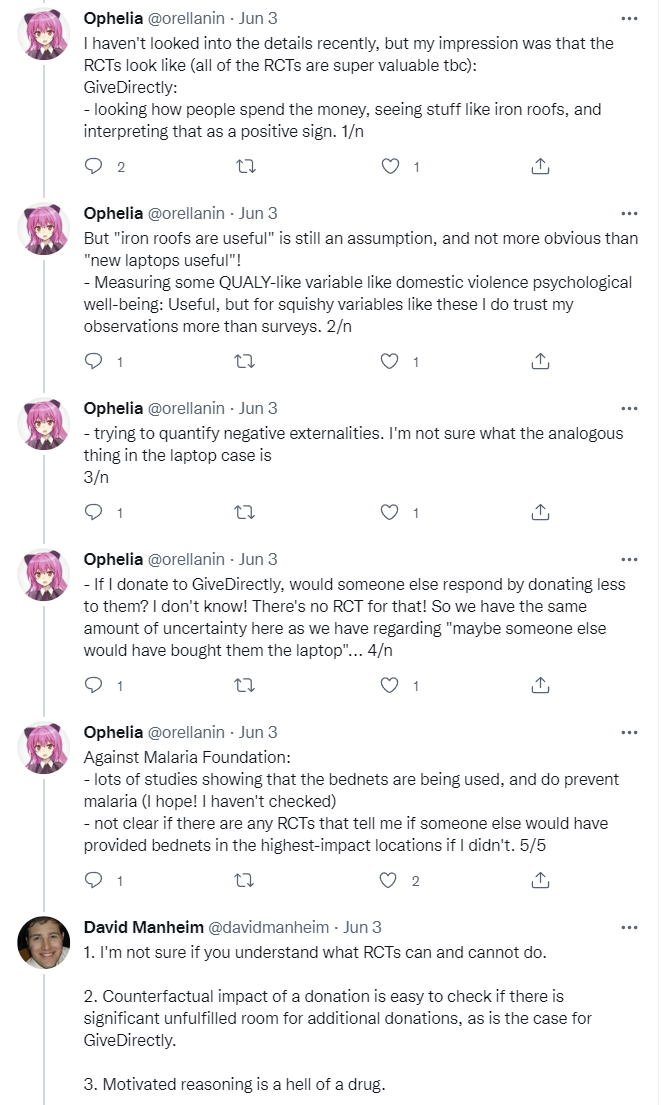

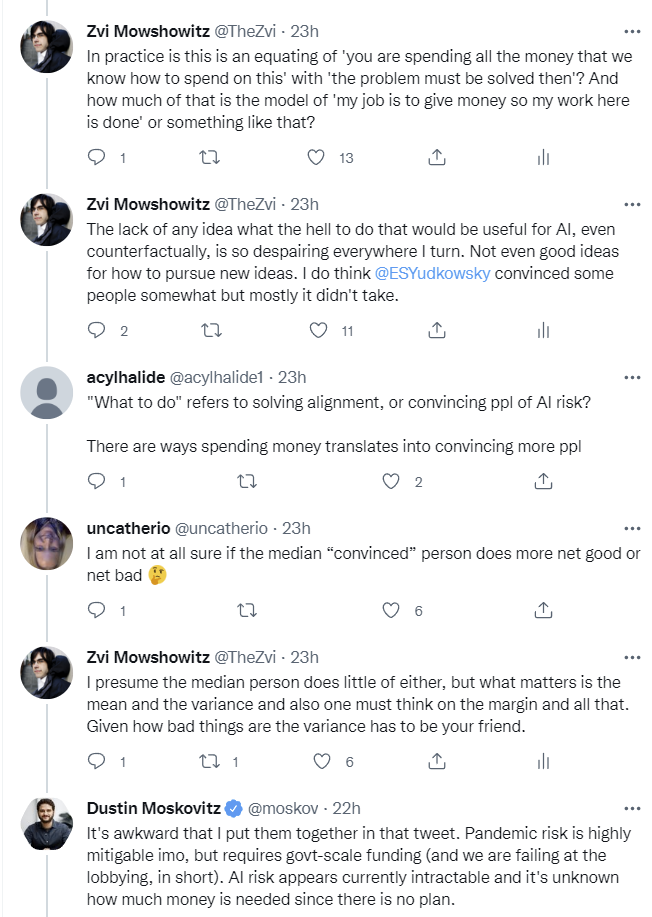

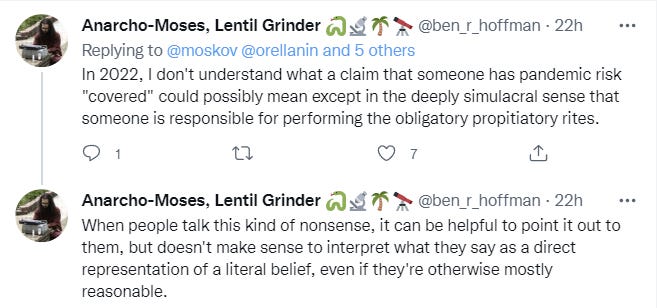

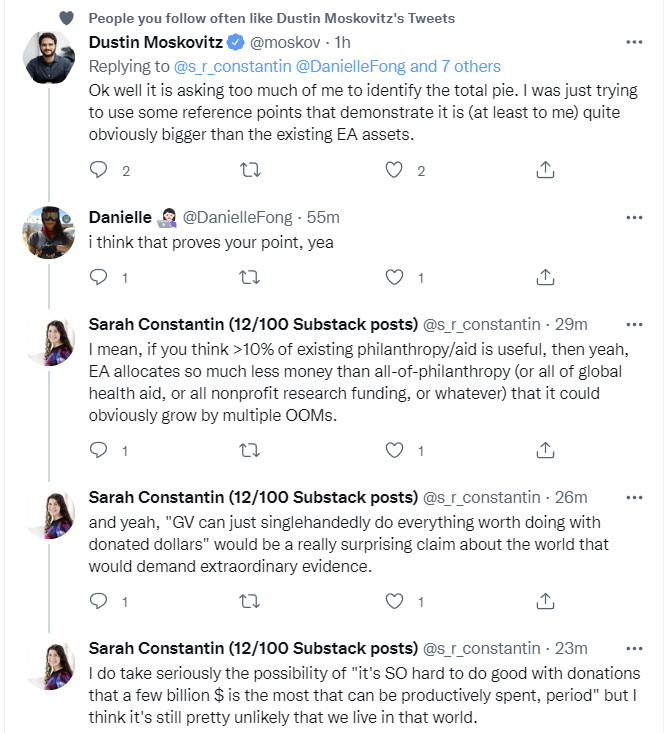

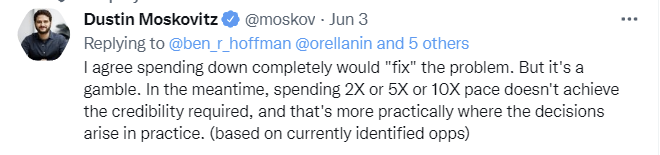

Thread one, which got a bit tangled at the beginning but makes sense as one thread:

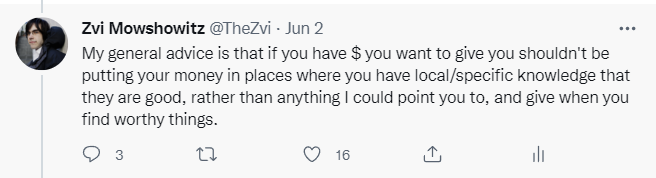

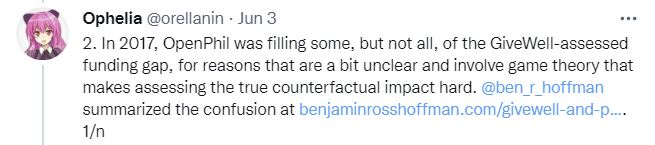

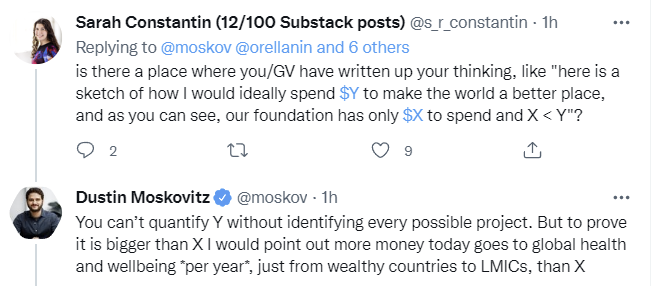

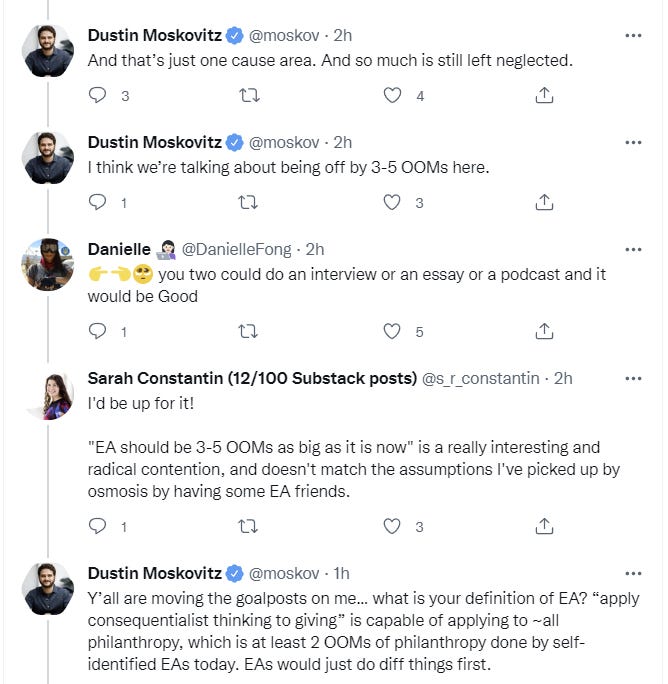

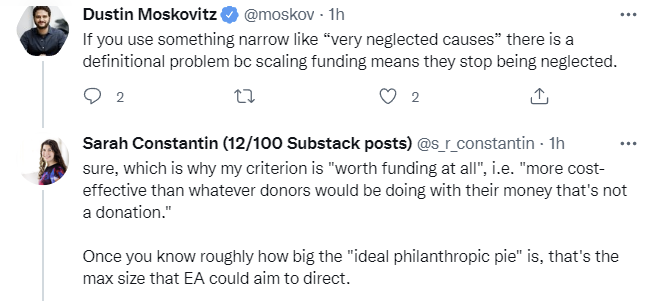

Thread two, which took place the next day and went in a different direction.

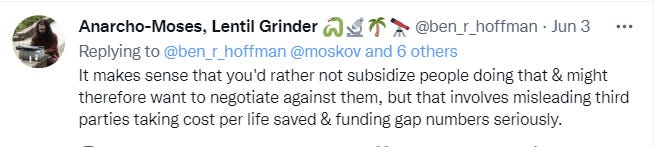

Link here to Ben’s post, “GiveWell and the problem of partial funding.”

Link to the GiveWell blog post on giving now versus later.

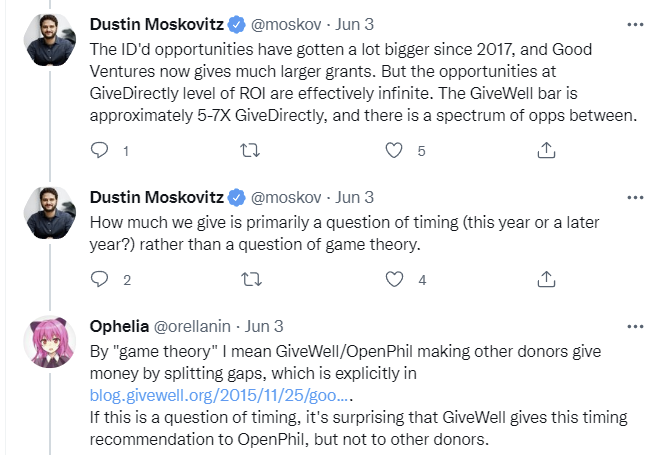

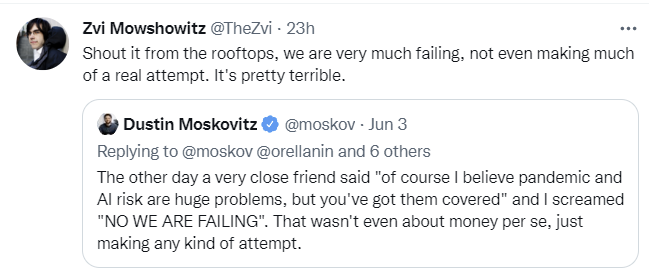

Dustin’s “NO WE ARE FAILING” point seemed important so I highlighted it.

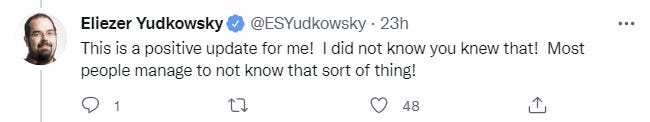

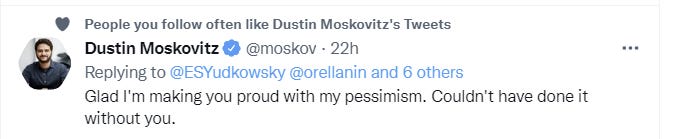

There was also a reply from Eliezer.

And this on pandemics in particular.

Sarah asked about the general failure to convince Dustin’s friends.

These two notes branch off of Ben’s comment that covers-all-of-EA didn’t make sense.

Ben also disagreed with the math that there was lots of opportunity, linking to his post “A Drowning Child is Hard to Find.”

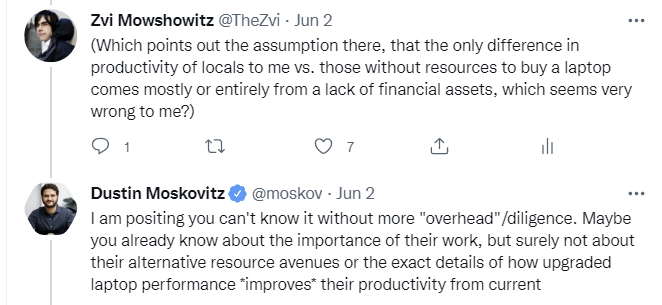

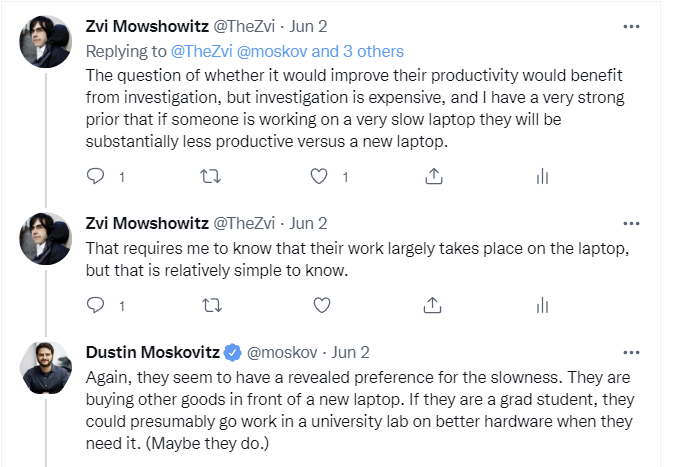

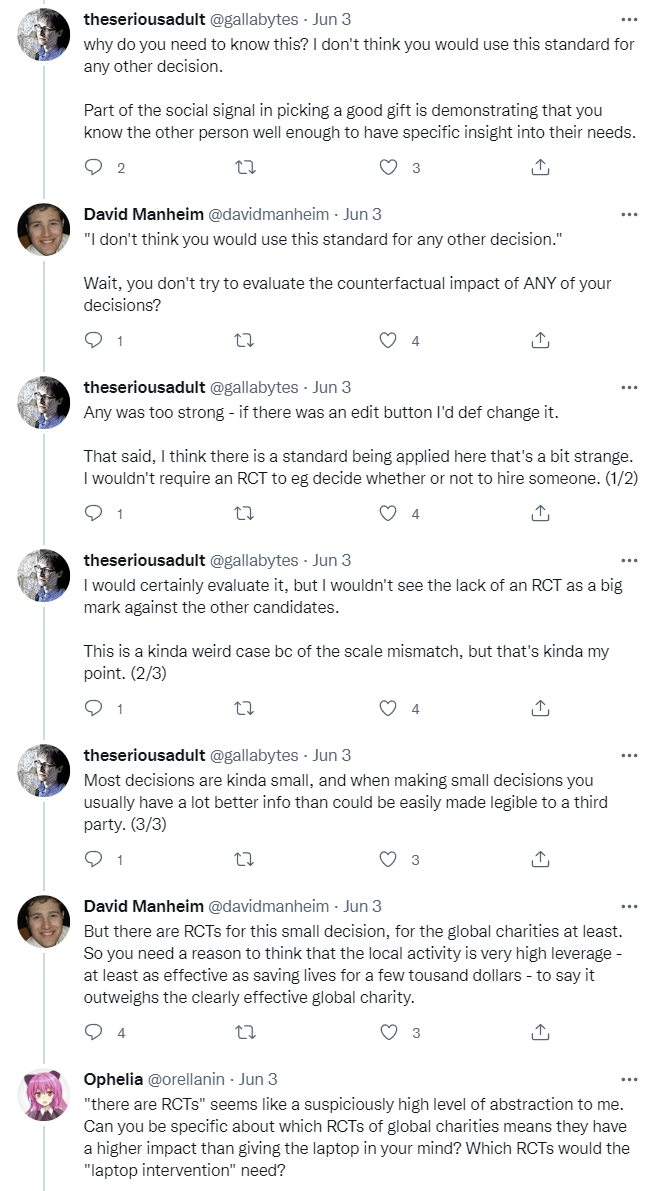

This thread responds to Dustin’s claim, further up the main thread, that you need to know details about the laptop upgrade. I found it worthwhile but did not include it directly for reasons of length.

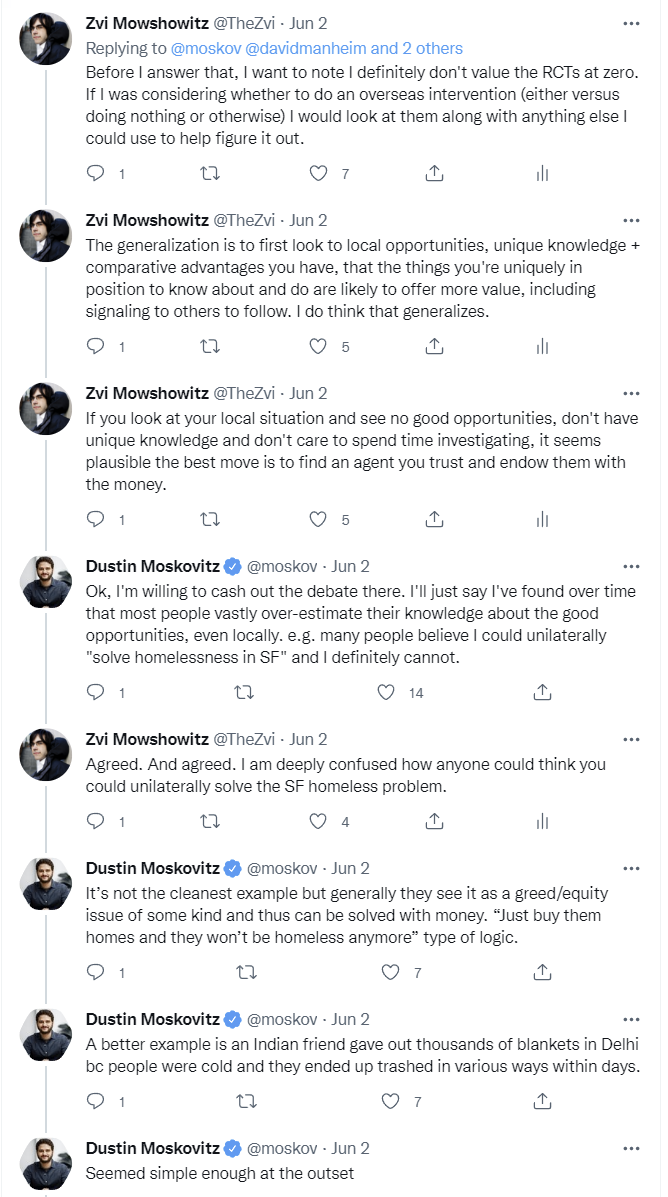

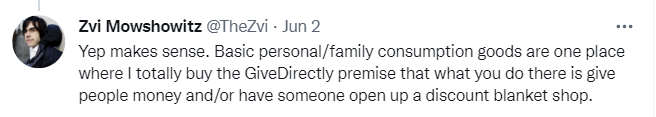

This came in response to Dustin’s challenge on whether the info was 10x better.

After the main part of thread two, there was a different discussion about pressures perhaps being placed on students to be performative, which I found interesting but am not including for length.

This response to the original Tweet is worth noting as well.

Again, thanks to everyone involved and sorry if I missed your contribution.

This level of support for centralization and deferral is really unusual. I don't actually know of any community besides EA that endorses it. I'm aware it's a common position in effective altruism, but the arguments for it haven't been worked out in detail anywhere I know of.

"Keep in mind that many things you might want to fund are in scope of an existing fund, including even small grants for things like laptops. You can just recommend they apply to these funds. If they don't get any money, I'd guess there were better options you would have missed but should have funded first. You may also be unaware of ways it would backfire, and the reason something doesn't get funded is because others judge it to be net negative."

I genuinely don't think there is any evidence (besides some theory-crafting around the unilateralist's curse) that this level of second-guessing yourself and deferring is effective. Please keep in mind the history of the EA Funds. Several funds basically never disbursed their money, and the fund managers explicitly said they didn't have time. Of course things can improve, but this level of deferral is really extreme given the community's history.

Suffice it to say, I don't think further centralizing resources is good, nor is making things more bureaucratic. I'm also not sure there is actually very much risk of the 'unilateralist's curse' unless you are being extremely careless. I trust most EAs to be at least as careful as the leadership. Probably the most dangerous thing you could possibly fund is AI capabilities. Open Phil gave $30M to OpenAI, and the community has been pretty accepting of AI capabilities work. This is way more dangerous than anything I would consider funding!