The following is a short supplement to an upcoming post on the implications for conscious significance (a framework for understanding the free will and determinism debate) but is a more general observation.

Paradigm Shifts vs Cultural Evolution

The world has undergone many paradigm shifts, where a profound truth has been revealed about the nature of the universe and our place within it. Individuals also go through their own personal paradigm shifts when they change their beliefs, which can be a frightening prospect. But I would argue it doesn’t need to be, because profound paradigm shifts seldom change as much as we expect.

This is because, if there is a significant practical benefit to behaviour in accordance with a fact about nature, cultural evolution will often find this behaviour before we discover the fact. A few examples without leaving the letter ‘G’:

Gravity

Discovering gravity did not inform us about how we could move around by applying pressure with our limbs to the large gravitational body upon which we’re hurtling through space. We’d already worked out how to react to gravity without knowing exactly what it was (in fact, we still don’t know exactly what it is). It’s even feasible that we could have learned to fly while still maintaining a flat-earth perspective, and even with our incomplete understanding of gravity today, we are capable of space travel.

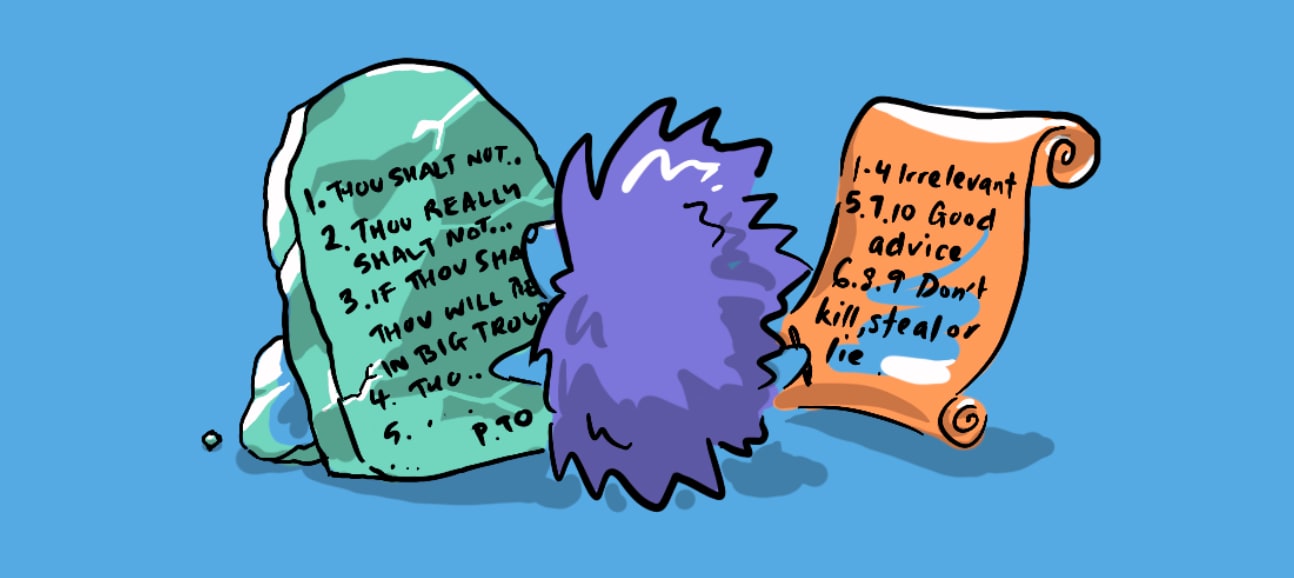

God is Dead

The 19th Century saw an increase in scientific discoveries such as Darwin’s theory of Evolution as well as increasingly secular forms of government born out of the Enlightenment. The associated atrophy of religious belief during this period in western philosophy was encapsulated in Nietzsche’s phrase…

“God is dead, God remains dead, and we have killed him”

… leading philosophers to grapple with Dostoevsky’s assertion that…

“If God is dead, all is permitted.”

Both Dostoevsky and Nietzsche independently assumed that God’s death leaves a moral vacuum.

In reality, a materialist worldview demands interpersonal ethics similar to those of a religious one. So, when belief declined, moral behaviour persisted, not by divine coincidence, but because many religious morals had differentially survived, primarily due to their facility for social cohesion.

Germs

From a modern perspective, the germ theory of disease seems a perfect counter-example of a profound truth that made a tremendous difference to everyday people; the imperative to wash one’s hands has itself saved billions of lives. However, even this theory, a version of which was proposed by Girolamo Fracastoro in 1546, failed to make a splash, partly because pseudoscientific theories had lucked upon some practices that were effective. The prevailing Miasma (or “Bad Air”) Theory at the time, at least, warned people away from rotting food and flesh, despite having no sound scientific explanation for why they should.

Even the paradigm of spiritual possession and witchcraft had developed some practices that informed behaviour consistent with the germ theory: the idea of quarantine, animistic gods providing treatment via plant leaves, and concepts of impurity. This is not to say there was any merit to these beliefs; they are better viewed as rationalisations to justify practices born of utility. But over time, practices evolved such that germaphobic tendencies were in full swing before the likes of Louis Pasteur led to the Germ Theory of Disease being fully accepted in the late 19th Century.

Genes

Genes are a recent paradigm shift, and the most profound. The discovery of DNA and the genetic code has revolutionised our understanding of life itself. But even this discovery has not changed as much as we might expect. The idea of heredity was already well established, and the idea of selective breeding was already in practice. And how much does the fact that you’re made of genes change your day-to-day life? Not much, unless you’re a geneticist.

But…

Over time, paradigm shifts do change everything, in the sense that they make what was previously impossible possible. Science and technology have enabled us to fly to the moon, build universal ethical frameworks, save lives and even edit genes, feats that would not have been possible without gaining an accurate picture of the world. But the discoveries that enabled these feats were not bolts from the blue; before we knew about them, we had already developed practices that were consistent with them. Importantly, the anticipated consequences of these profound discoveries didn’t eventuate, and the feats they enabled did not arrive immediately, but required a continued process of cultural evolution to reveal their utility.

So…

The lesson I take from this is not to be afraid of paradigm shifts, and to recognise that new ideas don’t destroy the world to make it anew, but rather they reframe our understanding of the world to reveal new possibilities. Humans have an (often maligned) capacity to rationalise their behaviour, to understand new information in terms of information they already have, sometimes with bizarre results. However, I believe this capacity is applicable in the case of paradigm shifts, enabling us to accept new information without abandoning all the hard-won lessons of our personal and civilisational history. This approach is key to understanding the implications of ‘conscious significance’.

Related Material

- This post is a supplement to Implications, where determinism is a profound paradigm shift, which is somewhat rationalised in my concept of conscious significance.

- Another related post is It’s Subjective ~ the end of the conversation?, which tackles the idea of the centrality of conscious experience in ethical considerations, while distinguishing it from subjective relativism.

- On the topic of rationalising beliefs, my contagious beliefs simulation uses the mechanism of belief adoption via alignment with prior beliefs as a core principle, essentially enshrining cognitive bias as our primary conduit for learning.
