There has lately been conflict between different EAs over the relative priority of something like "truth-seeking" and something like "influence-seeking".

This has mostly been discussed in connection with controversy over Manifest's guest list, in the comments to these two posts. Here I'd like for us to discuss the question in general, and for it to be a better discussion than we sometimes have. To try a format that might help, I'll give us a selection of highly upvoted comments from those posts, and a set of prompts for discussion.

Highly upvoted comments about conflicts between truth-seeking and influencing:

From My experience at the controversial Manifest 2024:

Anna Salamon: 44 karma, 20 agree, 6 disagree.

“I want to be in a movement or community where people hold their heads up, say what they think is true, speak and listen freely, and bother to act on principles worth defending / to attend to aspects of reputation they actually care about, but not to worry about PR as such.”

huw: 102 karma, 41 agree, 16 disagree.

“EA needs to recognise that even associating with scientific racists and eugenicists turns away many of the kinds of bright, kind, ambitious people the movement needs. I am exhausted at having to tell people I am an EA ‘but not one of those ones’.”

David Mathers: 33 karma, 16 agree, 12 disagree

“I don't think we should play down what we believe to be popular, but I do think we should reject/eject people for believing stuff that is both wrong and bigoted and reputationally toxic.”

ThomasAquinus: 26 karma, 9 agree, 10 disagree

“The wisest among us know to reserve judgment [sic] and engage intellectually even with ideas we don't believe in. Have some humility -- you might not be right about everything! I think EA is getting worse precisely because it is more normie and not accepting of true intellectual diversity.”

From Why so many “racists” at Manifest?:

Richard Ngo: 116 karma, 42 upvotes, 22 downvotes.

“I've also updated over the last few years that having a truth-seeking community is more important than I previously thought - basically because the power dynamics around AI will become very complicated and messy, in a way that requires more skill to navigate successfully than the EA community has. Therefore our comparative advantage will need to be truth-seeking.”

Peter Wildeford: 32 karma, 21 upvotes, 10 downvotes.

“Platforming racist / sexist / antisemetic / transphobic / etc. views -- what you call "bad" or "kooky" with scare quotes -- doesn't do anything to help other out-there ideas, like RCTs. It does the exact opposite! It associates good ideas with terrible ones.”

And this from an older post, [Linkpost] An update from Good Ventures:

Dustin Moskovitz: 52 karma, 15 upvotes, 2 downvotes.

“Over time, it seemed to become a kind of purity test to me, inviting the most fringe of opinion holders into the fold so long as they had at least one true+contrarian view; I am not pure enough to follow where you want to go, and prefer to focus on the true+contrarian views that I believe are most important.”

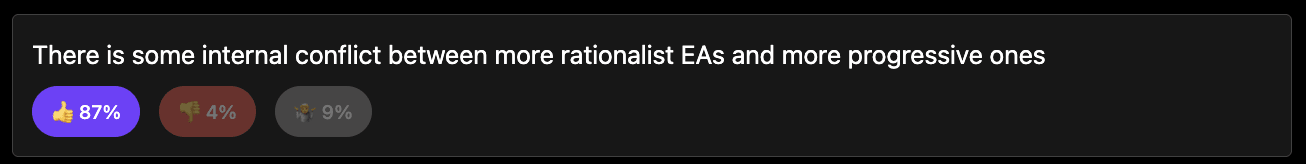

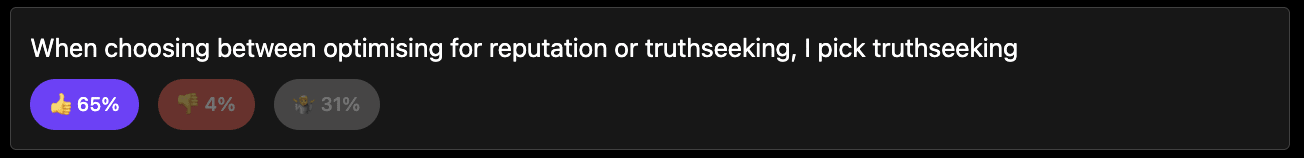

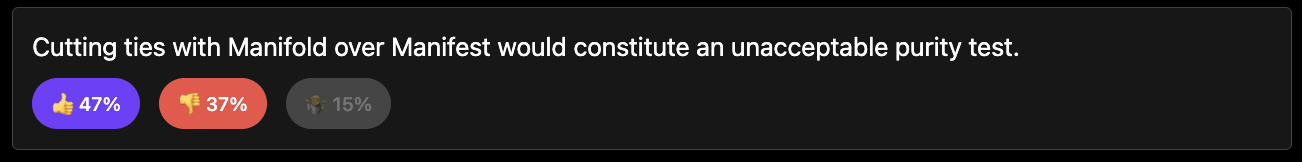

Likewise in the polls I ran, whether you trust that or not (112 respondents) (results here):

How this discussion should go:

The aim is to focus this discussion on the interaction between some notion of "truth-seeking" and some notion of "influence-seeking" and avoid many other things. That way we can have a narrower, more productive discussion.

I have added some discussion prompts, but you can use your own.

Please let's avoid points that are centrally about whether Manifest invited bad guests, about Richard Hanania, or about other conflicts between EAs and rationalists, though these can be used as examples.

I feel like the controversy over the conference has become a catalyst for tensions in the involved communities at large (EA and rationality).

It has been surprisingly common for me to make what I perceive to be a totally sensible point that isn't even particularly demanding (about, e.g., maybe not tolerating actual racism) and then the "pro truth-seeking faction" seem to lump me together with social justice warriors and present analogies that make no sense whatsoever. It's obviously not the case that if you want to take a principled stance against racism, you're logically compelled to have also objected to things that were important to EA (like work by Singer, Bostrom/Savulescu human enhancement stuff, AI risk, animal risk [I really didn't understand why the latter two were mentioned], etc.). One of these things is not like the others. Racism is against universal compassion and equal consideration of interests (also, it typically involves hateful sentiments). By contrast, none of the other topics are like that.

To summarize, it seems concerning if the truth-seeking faction seems to be unable to understand the difference between, say, my comments, and how a social justice warrior would react to this controversy. (This isn't to say that none of the people who criticized aspects of Manifest were motivated by further-reaching social justice concerns; I readily admit that I've seen many comments that in my view go too far in the direction of cancelling/censorship/outrage.)

Ironically, I think this is very much an epistemic problem. I feel like a few people have acted a bit dumb in the discussions I've had here recently, at least if we consider it "dumb" when someone repeatedly fails at passing Ideological Turing Tests or if they seemingly have a bit of black-and-white thinking about a topic. I get the impression that the rationality community has suffered quite a lot defending itself against cancel culture, to the point that they're now a bit (low-t) traumatized. This is understandable, but that doesn't change that it's a suboptimal state of affairs.

If it bothers me, I can assume that some others will react similarly.

You don't have to be a member of the specific group in question to find it uncomfortable when people in your environment say things that rile up negative sentiments against that group. For instance, twelve-year-old children are unlikely to attend EA or rationality events, but if someone there talked about how they think twelve-year-olds aren't really people and their suffering matters less, I'd be pissed off too.

All of that said, I'm overall grateful for LW's existence; I think habryka did an amazing job reviving the site, and I do think LW has overall better epistemic norms than the EA forum (even though I think most of the people who I intellectually admire the most are more EAs than rationalists, if I had to pick only one label, but they're often people who seem to fit into both communities).