There has lately been conflict between different EAs over the relative priority of something like "truth-seeking" and something like "influence-seeking".

This has mostly been discussed in connection with controversy over Manifest's guest list, in the comments to these two posts. Here I'd like for us to discuss the question in general, and for it to be a better discussion than we sometimes have. To try a format that might help, I'll give us a selection of highly upvoted comments from those posts, and a set of prompts for discussion.

Highly upvoted comments about conflicts between truth-seeking and influencing:

From My experience at the controversial Manifest 2024:

Anna Salamon: 44 karma, 20 agree, 6 disagree.

“I want to be in a movement or community where people hold their heads up, say what they think is true, speak and listen freely, and bother to act on principles worth defending / to attend to aspects of reputation they actually care about, but not to worry about PR as such.”

huw: 102 karma, 41 agree, 16 disagree.

“EA needs to recognise that even associating with scientific racists and eugenicists turns away many of the kinds of bright, kind, ambitious people the movement needs. I am exhausted at having to tell people I am an EA ‘but not one of those ones’.”

David Mathers: 33 karma, 16 agree, 12 disagree.

“I don't think we should play down what we believe to be popular, but I do think we should reject/eject people for believing stuff that is both wrong and bigoted and reputationally toxic.”

ThomasAquinus: 26 karma, 9 agree, 10 disagree.

“The wisest among us know to reserve judgment [sic] and engage intellectually even with ideas we don't believe in. Have some humility -- you might not be right about everything! I think EA is getting worse precisely because it is more normie and not accepting of true intellectual diversity.”

In Why so many “racists” at Manifest?

Richard Ngo: 116 karma, 42 upvotes, 22 downvotes.

“I've also updated over the last few years that having a truth-seeking community is more important than I previously thought - basically because the power dynamics around AI will become very complicated and messy, in a way that requires more skill to navigate successfully than the EA community has. Therefore our comparative advantage will need to be truth-seeking.”

Peter Wildeford: 32 karma, 21 upvotes, 10 downvotes.

“Platforming racist / sexist / antisemetic / transphobic / etc. views -- what you call "bad" or "kooky" with scare quotes -- doesn't do anything to help other out-there ideas, like RCTs. It does the exact opposite! It associates good ideas with terrible ones.”

And this from an older post, [Linkpost] An update from Good Ventures:

Dustin Moskovitz: 52 karma, 15 upvotes, 2 downvotes.

“Over time, it seemed to become a kind of purity test to me, inviting the most fringe of opinion holders into the fold so long as they had at least one true+contrarian view; I am not pure enough to follow where you want to go, and prefer to focus on the true+contrarian views that I believe are most important.”

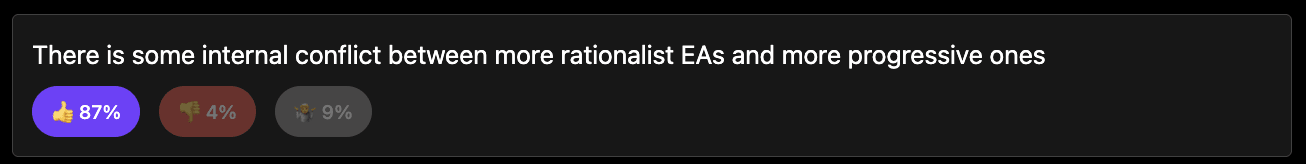

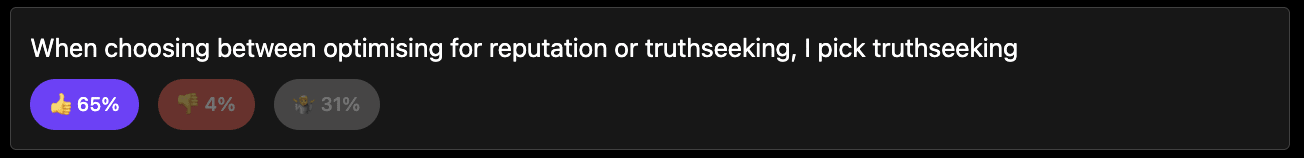

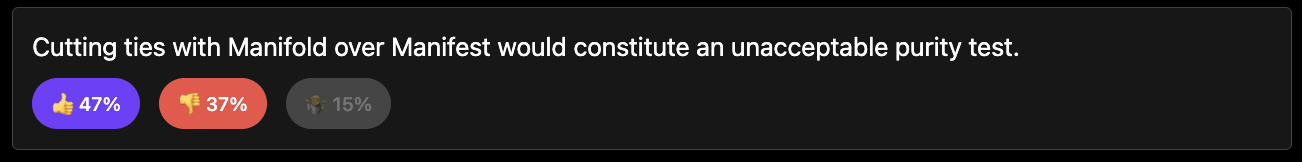

Likewise in the polls I ran, whether you trust that or not (112 respondents) (results here):

How this discussion should go:

The aim is to focus this discussion on the interaction between some notion of "truth-seeking" and some notion of "influence-seeking" and avoid many other things. That way we can have a narrow, more-productive discussion.

I have added some discussion prompts, but you can use your own.

Please let's avoid points that are centrally about whether Manifest invited bad guests, Richard Hanania, or other conflicts between EAs and rationalists, though these can be used as examples.

"Influence-seeking" doesn't quite resonate with me as a description of the virtue on the other end of "truth-seeking."

What's central in my mind when I speak out against putting "truth-seeking" above everything else is mostly a sentiment of "I really like considerate people and I think you're driving out many people who are considerate, and a community full of disagreeable people is incredibly off-putting."

Also, I think the considerateness axis is not the same as the decoupling axis. I think one can be very considerate and also great at decoupling; you just have to be able to couple things back together as well.

Let's try this again.

Off-putting to whom? The vast majority of people arguing here are people who would never attend Manifest. I'm not super worried if they are put off.

I imagine the view that many people have of the event is not how it was at all.

I feel like the controversy over the conference has become a catalyst for tensions in the involved communities at large (EA and rationality).

It has been surprisingly common for me to make what I perceive to be a totally sensible point that isn't even particularly demanding (about, e.g., maybe not tolerating actual racism) and then the "pro truth-seeking faction" seems to lump me together with social justice warriors and present analogies that make no sense whatsoever. It's obviously not the case that if you want to take a principled stance against racism, you're logically compelled to have also objected to things that were important to EA (like work by Singer, Bostrom/Savulescu human enhancement stuff, AI risk, animal risk [I really didn't understand why the latter two were mentioned], etc.). One of these things is not like the others. Racism is against universal compassion and equal consideration of interests (also, it typically involves hateful sentiments). By contrast, none of the other topics are like that.

To summarize, it seems concerning if the truth-seeking faction seems to be unable to understand the difference between, say, my comments, and how a social justice warrior would react to this controversy. (This isn't to say that none of the people who criticized aspects of Manifest were motivated by further-reaching social justice concerns; I readily admit that I've seen many comments that in my view go too far in the direction of cancelling/censorship/outrage.)

Ironically, I think this is very much an epistemic problem. I feel like a few people have acted a bit dumb in the discussions I've had here recently, at least if we consider it "dumb" when someone repeatedly fails at passing Ideological Turing Tests or if they seemingly have a bit of black-and-white thinking about a topic. I get the impression that the rationality community has suffered quite a lot defending itself against cancel culture, to the point that they're now a bit (low-t) traumatized. This is understandable, but that doesn't change that it's a suboptimal state of affairs.

If it bothers me, I can assume that some others will react similarly.

You don't have to be a member of the specific group in question to find it uncomfortable when people in your environment say things that are riling up negative sentiments against that group. For instance, twelve-year-old children are unlikely to attend EA or rationality events, but if someone there talked about how they think twelve-year-olds aren't really people and their suffering matters less, I'd be pissed off too.

All of that said, I'm overall grateful for LW's existence; I think habryka did an amazing job reviving the site, and I do think LW has overall better epistemic norms than the EA forum (even though I think most of the people who I intellectually admire the most are more EAs than rationalists, if I had to pick only one label, but they're often people who seem to fit into both communities).

Edited to be about 1 thing.

Well we agree that it doesn’t feel great to feel misunderstood.

Okay, what does not tolerating actual racism look like to you? What is the specific thing you're asking for here?

Up until recently, whenever someone criticized rationality or EA for being racist or for supporting racists, I could say something like the following:

"I don't actually know of anyone in these communities who is racist or supports racism. From what I hear, some people in the rationality community occasionally discuss group differences in intelligence, because this was discussed in writings by Scott Alexander, which a lot of people have read and so it gives them shared context. But I think this doesn't come from a bad place. I'm pretty sure people who are central to these communities (EA and rationality) would pretty much without exception speak up strongly against actual racists."

It would be nice if I could still say something like that, but it no longer seems like I can, because a surprising number of people have said things like "person x is quite racist, but [...] interesting ideas."

What do you think a community ought to value more than truth-seeking? What might you call the value you think trades off?

I don't really think it's this. I think it is "I don't want people associating me with people or ideas like that so I'd like you to stop please".

But let's take your case, that means you think that on the margin some notion of considerateness/kindness/agreeableness is more important than truth-seeking. Is that right?

And if so, why should EA be pushing for that at the margin? I get why people would push for influence over truth and I get why considerateness is valuable. But on the margin I would pick more truth. It feels like in the past, more considerateness might have led to fewer hard discussions about AI or even animal welfare. It seems those discussions have generally led us to positions we agree with in hindsight.

Could you say more about why you feel that way?

Certainly lots of people would have concluded that WAW and AI as subjects of inquiry and action were weird, pointless, stupid, etc. But that's quite different from the reactions to scientific racism.

Well, had the consensus around WAW and AI taken longer to appear, we might endorse the situation we are in less than we currently do.

Completely agree with Jason. I don't think those two discussions you are mentioning, Nathan, actually carry much risk of influence loss, and with AI maybe there has even been net influence gain. Things that 95 percent of people consider "weird, pointless and stupid" don't actually have a serious risk of reputational loss. I think looking at most past wins and saying "oh we might not have talked about that if we had been worried about influence" can be a bit of a strawman.

It can't be a strawman if I am arguing it. I am the man.

We can disagree, but I think a move away from truthseeking in the past would probably have led to a less-endorsed present (unless it turned off SBF somehow).

You're the man Nathan :D love it!

I might just not understand the strawman concept properly then, I thought someone could hold a position which was a "strawman" while they were arguing it but uncertain...

Anyway, regardless, my point is I don't think we have an example of truth seeking which led to a serious cause area which then turned out to cause major influence loss for the community.

I think it's just really hard to say "don't discuss that" without nixing a lot of other useful discussion too. I wish there were a way to stop just racism, but already it's been implied that guests associated with genetics (Steve Hsu, the Collinses, etc) are also trafficking in unacceptable ideas. Should that be banned as well?

And if we'd taken this view 20 years ago we could be in a different place today. Singer advocates for disabled children to be killed, Bostrom partly advocates for a global police state, Yudkowsky doesn't think that babies are conscious. If we ignored all ideas that came from or near people with awful ideas, we would have lost a lot of ideas we now value.

If you want me to say "I don't like Hanania" I will say it. But if you want me to say "Manifest is bad because they invited 40 people who could conceivably be racist" then no, I don't endorse that.

It might be what you say for some people, but that doesn't ring true for my case (at all). (But also, compared to all the people who complained about stuff at Manifest or voiced negative opinions from the sidelines as forum users, I'm pretty sure I'm in the 33% that felt the least strongly and had fewer items to pick at.)

I don't like this framing/way of thinking about it.

For one thing, I'm not sure if I want to concede the point that it is the "maximally truth-seeking" thing to risk that a community evaporatively cools itself along the lines we're discussing.

Secondly, I think the issues around Manifest I objected to weren't directly about "what topics are people allowed to talk about?"

If some person with a history of considerateness and thoughtfulness wanted to do a presentation on HBD at Manifest, or (to give an absurd example) if Sam Harris (who I think is better than average at handling delicate conversations like that) wanted to interview Douglas Murray again in the context of Manifest, I'd be like "ehh, not sure that's a good idea, but okay..." And maybe also "Well, if you're going to do this, at least think very carefully about how to communicate about why you're talking about this/what the goal of the session is." (It makes a big difference whether the framing of the session is "We know this isn't a topic most people are interested in, but we've had some people who are worried that if we cannot discuss every topic there is, we might lose what's valuable about this community, so this year, we decided to host a session on this; we took steps x, y, and z to make sure this won't become a recruiting ground for racists;" or whether the framing is "this is just like every other session.") [But maybe this example is beside the point, I'm not actually sure whether the objectionable issue was sessions on HBD/intelligence differences among groups, or whether it was more just people talking about it during group conversations.]

By contrast, if people with a history of racism or with close ties to racists attend the conference and it's them who want to talk about HBD, I'm against it. Not directly because of what's being discussed, but because of how and by whom. (But again, it's not my call to make and I'm just stating what I would do/what I think would lead to better outcomes.)

(I also thought people who aren't gay using the word "fag" sounded pretty problematic. Of course, this stuff can be moderated case-by-case and maybe a warning makes more sense than an immediate ban. Also, in fairness, it seems like the conference organizers would've agreed with that and they simply didn't hear the alleged incident when it allegedly happened.)

Another way to frame it is through the concept of collective intelligence. What is good for developing individual intelligence may not be good for developing collective intelligence.

Think, for example, of schools that pit students against each other and place a heavy emphasis on high-stakes testing to measure individual student performance. This certainly motivates people to personally develop their intellectual skills; just look at how much time, e.g. Chinese children are spending on school. But is this better for the collective intelligence?

High-stakes testing often leads to a curriculum that is narrowly focused on intelligence-focused skills that are easily measurable by tests. This can limit the development of broader, harder-to-measure social skills that are vital for collective intelligence, such as communication, group brainstorming, deescalation, keeping your ego in check, empathy...

And such a testing-focused environment can discourage collaborative learning experiences because the focus is on individual performance. This reduction in group learning opportunities and collaboration limits overall knowledge growth.

It can exacerbate educational inequalities by disproportionately disadvantaging students from lower socio-economic backgrounds, who may have less access to test preparation resources or supportive learning environments. This can lead to a segmented education system where collective intelligence is stifled because not all members have equal opportunities to contribute and develop.

And what about all the work that needs to be done that is not associated with high intelligence? Students who might not excel in what a given culture considers high-intelligence (such as the arts, practical skills, or caretaking work) may feel undervalued and disengage from contributing their unique perspectives. Worse, if they continue to pursue individual intelligence, you might end up with a workforce that has a bad division of labor, despite having people that theoretically could have taken up those niches. Like what's happening in the US:

If you want to have more truth-seeking, you first have to make sure that your society functions. (E.g., if everyone is a college professor, who's making the food?)

To have a collective be maximally truth-seeking in the long run, you have to not solely focus on truth-seeking.

I would guess that the Manifest crowd meaningfully adds to the decision-making capabilities of EA by sometimes coming up with very valuable ideas, so I think I disagree with the conclusion here.

I think factory farmed animals is the better example here. It can be pretty hurtful to tell someone you think a core facet of their life (meat eating) has been a horrendous moral error, just as was slavery or genocide. It seems we all feel fine putting aside the considerateness consideration when the stakes are high enough.

It seems to me that being considerate of others is still valuable even (especially!) if you're trying to convince them that they're making a horrendous moral error. Almost all attempts to change someone's mind in this way don't work, and failed attempts often contribute to memetic immunity. History is full of disagreeable-but-morally-correct people being tragically unpersuasive.

I agree with Lukas that "influence seeking", although important, doesn't quite resonate with me as a positive virtue on the other side of "truth seeking".

That said I'll comment on the topic anyway :D

To do the most good we can, we need both truth and influence. They are both critically important, without one the other can become useless. If we could maximize both that might be best, but obviously we can't so at some point there will be tradeoffs.

I think this tradeoff might not actually be as critical as some seem to think (but won't get into this here). I don't think it's that hard to sacrifice a little truth right on the margins of what is socially acceptable to avoid a lot of influence loss.

I don't think most "weird" ideas and thought trains actually carry much risk of reputational loss. As much as people have said there has been "reputational risk" in working on invertebrate welfare and wild animal welfare, I struggle to see any actual evidence of influence lost through these. Influence may (unsure) have been lost through the longtermism focus, but there also may have been influence gains there through attracting a wider range of people to EA and also donors who are interested in longtermism. I don't think we lose much truth-seeking ability by just avoiding a few relatively unimportant topics which carry a high risk of influence loss (see the Hanania/Bostrom scandals).

I also think more reputational loss and loss of influence may have actually come through what I see as unrelated mistakes (close association with SBF, Abbey purchase and handling etc.) which are at best peripherally associated with truth seeking.

Truth seeking seems to me worthless without influence. For example we could design the perfect AI alignment strategy, but if we have no influence to implement it then what have we achieved? Or we could figure out that protecting digital mind welfare was the most important thing we could do to reduce suffering, but if we have zero influence and no one is prepared to fund the work that needs doing to fix the problem then what's the point?

And if we are extremely influential without truth, then the influence is meaningless. We will just revert to societal norms and do no good on the margins. As a halfway example if we decided to optimize mostly for influence, then we might completely ditch all (or most) longtermist work for a while in the wake of SBF, which might be dangerous.

Those aren't the best examples but I hope the idea comes through.

So you think the right tradeoff is like 90% truthseeking? Is that fair?

That might technically be correct, but saying that appears to minimize the importance of influence.

I would frame it more like this: we might only need to trade off 10 percent of truth seeking to have minimal impact on influence.

How do you think we could maintain that equilibrium?

To me it seems that once topics move beyond discussion it's very expensive to get them back. It doesn't feel like we'll have an honest community conversation about the Bostrom/Time article stuff for a while yet. Sometimes it feels like we still aren't capable of having an honest community conversation about Leverage.

If people only want to engage with this discussion on high stakes posts, then it feels likely we’ll only discuss this in stressful ways. Seems bad.

edit:

If this pretty inflammatory post (https://forum.effectivealtruism.org/posts/8YmFFFyys2YSmmrNd/in-defense-of-standards-a-fecal-thought-experiment) gets more upvotes than my deliberately boring and consensus attempt then I'm going to update towards this forum wanting controversy and heartache rather than to discuss things soberly.

Yeah, I agree I probably didn't get a good sense of where you were coming from. It's interesting because, before you made the comments in this post and in the discussion here underneath, I thought you and I probably had pretty similar views. (And I still suspect that – seems like we may have talked past each other!) You said elsewhere that last year you spoke against having Hanania as a speaker. This suggested to me that even though you value truth-seeking a lot, you also seem to think there should be some other kinds of standards. I don't think my position is that different from "truth-seeking matters a ton, but there should be some other kinds of standards." That's probably the primary reason I spent a bunch of time commenting on these topics: the impression that the "pro truth-seeking" faction in my view seemed to be failing to make even some pretty small/cheap concessions. (And it seemed like you were one of the few people who did make such concessions, so, I don't know why/if it feels like we're disagreeing a lot.)

(This is unrelated, but it's probably good for me to separate timeless discussion about norms from an empirical discussion of "How likely is it that Hanania changed a lot compared to his former self?" I do have pessimistic-leaning intuitions about the latter, but they're not very robust because I really haven't looked into this topic much, and maybe I'm just prejudiced. I understand that, if someone is more informed than me and believes confidently that Hanania's current views and personality are morally unobjectionable, it obviously wouldn't be a "small concession" for them to disinvite or not platform someone they think is totally unobjectionable! I think that can be a defensible view depending on whether they have good reasons to be confident in these things. At the same time, the reason I thought that there were small/cheap concessions that people could make that they weirdly enough didn't make, was that a bunch of people explicitly said things like "yeah he's pretty racist" or "yeah he recently said things that are pretty racist" and then still proceeded to talk as though this is just normal and that excluding racists would be like excluding Peter Singer. That's where they really lost me.)

Just as a heads-up, I'm planning to get off the EA forum for a while to avoid the time-sink issues, so I may not leave more comments here anytime soon.

On our interactions:

I imagine we do, though here I am sort of specifically trying to find a crux. Probably I'm being a bit grumpy about it, but all in all, I think I agree with you a lot.

I agree. I'm pretty moderately of generativeness without any kinds of incentives towards kindness.

I am less sure that shaming events with unkindness is the kind of incentive we want.

I can't speak for anyone else, but I am pretty willing to make trades here. And I have done so. Though I want to know what the trades are beforehand.

I don't really consider the "concessions" so far to be trades as such, I just think that a norm against racism is really valuable and we should allow people to break it only at much greater cost than we've seen. Though I take up that issue with Manifest internally rather than on here.

I am trying to figure out the underlying disagreement, which I think causes me to cut in a different direction than I normally would.

Fair play.

Discussion prompt:

What exactly is the relationship between truth and influence? What is the conflict?

Discussion prompt:

We generally believe that the top 1% of philanthropic opportunities are often 100x better than the median. This seems to imply that the marginal better decision has big impacts. Isn’t this likely to be true on a macro scale also (suggesting truth-seeking is very valuable)? Likewise many influence-seeking orgs make trivial mistakes that vastly reduce their positive impact.

Frankly, the uneven distribution of philanthropic opportunities also suggests that influence seeking can be very valuable if EA wants to diversify its funding base away from Moskovitz/Tuna. There are a lot of people giving away a lot of money to causes that may be 100x worse than causes they could be giving to who could be appealed to: it's hard to impute comparably high value to "not worrying about PR" or high decoupling which is pretty orthogonal to truth seeking anyway.

Evidence that something might be exceptionally high value might be a good reason to pursue it to find out whether it is true despite it being unpopular, but even when the controversies on here concern the award of grants (which they usually don't), they tend not to be the ones the funders thought were 100x better than alternatives.

So I'm not sure if I agree or don't, but this seems surprisingly like arguing that EA should bend its values to whoever is willing to give resources. I see you argue in favour of flexing away from "ugly" views. Would you argue in favour of flexing towards them if there was a donor in that direction?

I think this is a good question, but probably a separate one from the one originally asked. From a utilitarian/consequentialist POV (which most EAs seem to use for most prioritization) you probably would care enough about the impact on the funding base to flex towards "ugly" rhetoric if "ugly" rhetoric was the most effective way of attracting more money to 100x causes, but this doesn't appear to be the world we actually live in.

But on the original point, I don't think much of the influence-undermining stuff that keeps coming up on here (some of which concerns "ugly" comments and some of which doesn't, but all of which seems to get the blanket "we shouldn't care about optics" defence) really has anything to do with 100x better returns. If EA was getting widely panned because of the uncoolness of shrimp welfare then shrimp welfare advocates could argue that based on certain welfare assumptions the impact of pursuing what they're doing is 100x better than doing something with "better optics". I'm not sure such highly multiplicative returns are easily applied to promoting 'edgy' politicos, short-circuiting process in a way which appears to create conflicts of interest, or funding marginal projects which look like conspicuous consumption to altruistically-inclined outsiders.

It seems as plausible to me that we live in this world as the world you suggest. It seems far easier to try and bend towards Elon or Thiel bucks than unnamed philanthropists I haven't heard of.

Seems untrue. FTX and the Time article sexual harassment stuff seem like the two biggest reputational factors and neither of those got the "we shouldn't care about optics" defence.

Even if Elon and Thiel's philanthropic priorities were driven mainly by whether people associated with the organization offended enough people (which seems unlikely, looking at what they do spend most of their money on, which incidentally isn't EA despite Elon at least being very aware, and somewhat aligned on AI safety), it seems unlikely their willingness and ability to fund it exceeds everybody else's.

I'd agree those were bigger overall than the more regular drama on here I was referring to, but they're also actions people generally didn't try to defend or dismiss at all. Whereas the stuff that comes up here about Person X saying offensive stuff or Organization Y having alleged conflicts of interest or Grant Z looking frivolous frequently does get dismissed on the basis that optics shouldn't be a consideration.

Most truths have ~0 effect magnitude concerning any action plausibly within EA's purview. This could be because knowing that X is true, and Y is not true (as opposed to uncertainty or even error regarding X or Y) just doesn't change any important decision. It also can be because the important action that a truth would influence/enable is outside of EA's competency for some reason. E.g., if no one with enough money will throw it at a campaign for Joe Smith, finding out that he would be the candidate for President who would usher in the Age of Aquarius actually isn't valuable.

As relevant to the scientific racism discussion, I don't see the existence or non-existence of the alleged genetic differences in IQ distributions by racial group as relevant to any action that EA might plausibly take. If some being told us the answers to these disputes tomorrow (in a way that no one could plausibly controvert), I don't think the course of EA would be different in any meaningful way.

More broadly, I'd note that we can (ordinarily) find a truth later if we did not expend the resources (time, money, reputation, etc.) to find it today. The benefit of EA devoting resources to finding truth X will generally be that truth X was discovered sooner, and that we got to start using it to improve our decisions sooner. That's not small potatoes, but it generally isn't appropriate to weigh the entire value of the candidate truth for all time when deciding how many resources (if any) to throw at it. Moreover, it's probably cheaper to produce scientific truth Z twenty years in the future than it is now. In contrast, global-health work is probably most cost-effective in the here and now, because in a wealthier world the low-hanging fruit will be plucked by other actors anyway.

What I currently take from this is that you think that if we start some work which seems unpopular or controversial, we should stop because we can discover it later?

If not, how much work should we do before we decide it’s not worth the reputation cost to discuss it carefully?

No, I think that extends beyond what I'm saying. I am not proposing a categorical rule here.

However, the usual considerations of neglectedness and counterfactual analysis certainly apply. If someone outside of EA is likely to do the work at some future time, then the cost of an "error" is the utility loss caused by the delay between when we would have done it and when it was done by the non-EA. If developments outside EA convince us to change our minds, the utility loss is measured between now and the time we change our minds. I've seen at least one comment suggesting "HBD" is in the same ballpark as AI safety . . . but we likely only get one shot at the AGI revolution for the rest of human history. Even if one assumes p(doom) = 0, the effects of messing up AGI are much more likely to be permanent or extremely costly to reverse/mitigate.

From a longtermist perspective, [1] I would assume that "we are delayed by 20-50 years in unlocking whatever benefit accepting scientific racism would bring" is a flash in the pan over a timespan of millions of years. In fact, those costs may be minimal, as I don't think there would be a whole lot for EA to do even if it came to accept this conclusion. (I should emphasize that this is definitely not implying that scientific racism is true or that accepting it as true would unlock benefits.)

[1] I do not identify as a longtermist, but I think it's even harder to come up with a theory of impact for scientific racism on neartermist grounds.

Discussion prompt:

Truth-seekers don’t seem to search for the truth about how many grains of sand are on the beaches. Why are divisive truths specifically worth seeking?

Discussion prompt:

For you personally, what is the correct tradeoff between truthseeking and influence for EA?