There has lately been conflict between different EAs over the relative priority of something like "truth-seeking" and something like "influence-seeking".

This has mostly been discussed in connection with controversy over Manifest's guest list, in the comments to these two posts. Here I'd like for us to discuss the question in general, and for it to be a better discussion than we sometimes have. To try a format that might help, I'll give us a selection of highly upvoted comments from those posts, and a set of prompts for discussion.

Highly upvoted comments about conflicts between truth-seeking and influencing:

From My experience at the controversial Manifest 2024:

Anna Salamon: 44 karma, 20 agree, 6 disagree.

“I want to be in a movement or community where people hold their heads up, say what they think is true, speak and listen freely, and bother to act on principles worth defending / to attend to aspects of reputation they actually care about, but not to worry about PR as such.”

huw: 102 karma, 41 agree, 16 disagree.

“EA needs to recognise that even associating with scientific racists and eugenicists turns away many of the kinds of bright, kind, ambitious people the movement needs. I am exhausted at having to tell people I am an EA ‘but not one of those ones’.”

David Mathers: 33 karma, 16 agree, 12 disagree.

“I don't think we should play down what we believe to be popular, but I do think we should reject/eject people for believing stuff that is both wrong and bigoted and reputationally toxic.”

ThomasAquinus: 26 karma, 9 agree, 10 disagree.

“The wisest among us know to reserve judgment [sic] and engage intellectually even with ideas we don't believe in. Have some humility -- you might not be right about everything! I think EA is getting worse precisely because it is more normie and not accepting of true intellectual diversity.”

In Why so many “racists” at Manifest?

Richard Ngo: 116 karma, 42 upvotes, 22 downvotes.

“I've also updated over the last few years that having a truth-seeking community is more important than I previously thought - basically because the power dynamics around AI will become very complicated and messy, in a way that requires more skill to navigate successfully than the EA community has. Therefore our comparative advantage will need to be truth-seeking.”

Peter Wildeford: 32 karma, 21 upvotes, 10 downvotes.

“Platforming racist / sexist / antisemetic / transphobic / etc. views -- what you call "bad" or "kooky" with scare quotes -- doesn't do anything to help other out-there ideas, like RCTs. It does the exact opposite! It associates good ideas with terrible ones.”

And this from an older post, [Linkpost] An update from Good Ventures:

Dustin Moskovitz: 52 karma, 15 upvotes, 2 downvotes.

“Over time, it seemed to become a kind of purity test to me, inviting the most fringe of opinion holders into the fold so long as they had at least one true+contrarian view; I am not pure enough to follow where you want to go, and prefer to focus on the true+contrarian views that I believe are most important.”

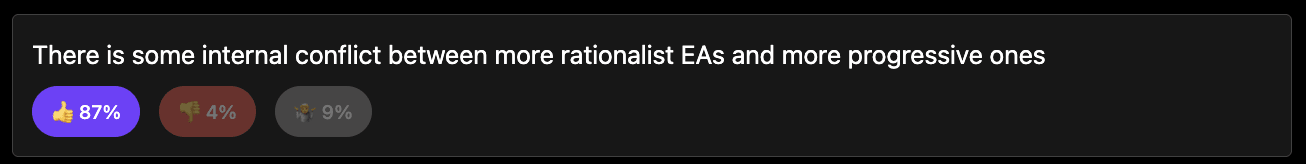

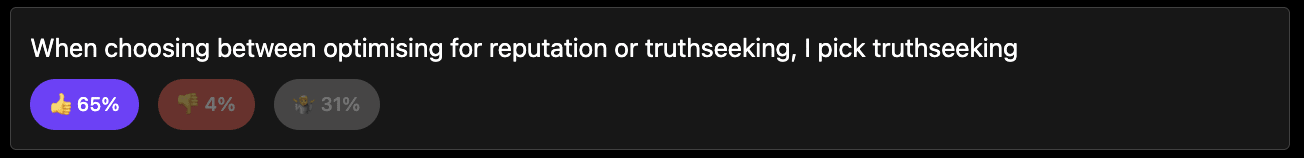

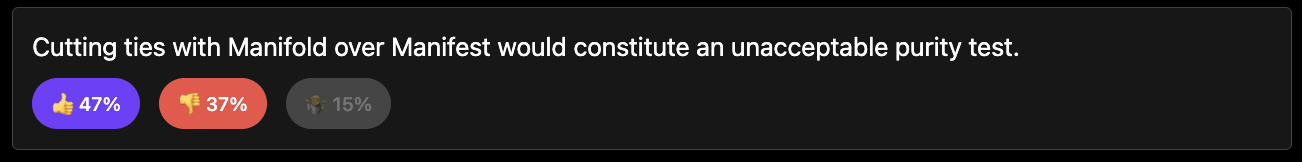

Likewise in the polls I ran (112 respondents), whether or not you trust them (results here):

How this discussion should go:

The aim is to focus this discussion on the interaction between some notion of "truth-seeking" and some notion of "influence-seeking" and to avoid many other things. That way we can have a narrower, more productive discussion.

I have included some discussion prompts, but feel free to use your own.

Please let's avoid points that are centrally about whether Manifest invited bad guests, Richard Hanania, or other conflicts between EAs and rationalists, though these can be used as examples.

I agree with Lukas that "influence-seeking", although important, doesn't quite resonate with me as a positive virtue on the other side of "truth-seeking".

That said I'll comment on the topic anyway :D

To do the most good we can, we need both truth and influence. They are both critically important; without one, the other can become useless. If we could maximize both, that might be best, but obviously we can't, so at some point there will be tradeoffs.

I think this tradeoff might not actually be as critical as some seem to think (but I won't get into that here). I don't think it's that hard to sacrifice a little truth right on the margins of what is socially acceptable to avoid a lot of influence loss.

I don't think most "weird" ideas and trains of thought actually carry much risk of reputational loss. As much as people have said there has been "reputational risk" in working on invertebrate welfare and wild animal welfare, I struggle to see any actual evidence of influence lost through these. Influence may (I'm unsure) have been lost through the focus on longtermism, but there may also have been influence gains there, through attracting a wider range of people to EA as well as donors who are interested in longtermism. I don't think we lose much truth-seeking ability by just avoiding a few relatively unimportant topics which carry a high risk of influence loss (see the Hanania/Bostrom scandals).

I also think more reputational loss and loss of influence may actually have come through what I see as unrelated mistakes (close association with SBF, the Abbey purchase and its handling, etc.), which are at best peripherally associated with truth-seeking.

Truth-seeking seems to me worthless without influence. For example, we could design the perfect AI alignment strategy, but if we have no influence to implement it, then what have we achieved? Or we could figure out that protecting digital mind welfare was the most important thing we could do to reduce suffering, but if we have zero influence and no one is prepared to fund the work that needs doing to fix the problem, then what's the point?

And if we are extremely influential without truth, then the influence is meaningless. We will just revert to societal norms and do no good on the margin. As a halfway example, if we decided to optimize mostly for influence, then we might completely ditch all (or most) longtermist work for a while in the wake of SBF, which might be dangerous.

Those aren't the best examples, but I hope the idea comes through.

How do you think we could maintain that equilibrium?

To me it seems that once topics move beyond discussion, it's very expensive to get them back. It doesn't feel like we'll have an honest community conversation about the Bostrom/Time article stuff for a while yet. Sometimes it feels like we still aren't capable of having an honest community conversation about Leverage.