Confidence level: I’m a computational physicist working on nanoscale simulations, so I have some understanding of most of the things discussed here, but I am not specifically an expert on the topics covered, so I can’t promise perfect accuracy.

I want to give a huge thanks to Professor Philip Moriarty of the University of Nottingham for answering my questions about the experimental side of mechanosynthesis research.

Introduction:

A lot of people are highly concerned that a malevolent AI or insane human will, in the near future, set out to destroy humanity. If such an entity wanted to be absolutely sure they would succeed, what method would they use? Nuclear war? Pandemics?

According to some in the x-risk community, the answer is this: The AI will invent molecular nanotechnology, and then kill us all with diamondoid bacteria nanobots.

This is the “lower bound” scenario posited by Yudkowsky in his post “AGI Ruin: A List of Lethalities”:

The nanomachinery builds diamondoid bacteria, that replicate with solar power and atmospheric CHON, maybe aggregate into some miniature rockets or jets so they can ride the jetstream to spread across the Earth's atmosphere, get into human bloodstreams and hide, strike on a timer.

The phrase “diamondoid bacteria” really stood out to me, and I’m not the only one. In this post by Carlsmith (which I found very interesting), he refers to diamondoid bacteria as an example of future tech that feels unreal, but may still happen:

Whirling knives? Diamondoid bacteria? Relentless references to paper-clips, or “tiny molecular squiggles”? I’ve written, elsewhere, about the “unreality” of futurism. AI risk had a lot of that for me.

Meanwhile, the controversial anti-EA crusader Émile Torres cites the term “diamondoid bacteria” as a reason to dismiss AI risk, calling it “patently ridiculous”.

I was interested to know more. What is diamondoid bacteria? How far along is molecular nanotech research? What are the challenges that we (or an AI) will need to overcome to create this technology?

If you want, you can stop here and try and guess the answers to these questions.

It is my hope that by trying to answer these questions, I can give you a taste of what nanoscale research actually looks like. It ended up being the tale of a group of scientists who had a dream of revolutionary nanotechnology, and tried to answer the difficult question: How do I actually build that?

What is “diamondoid bacteria”?

The literal phrase “diamondoid bacteria” appears to have been invented by Eliezer Yudkowsky about two years ago. If you search the exact phrase in Google Scholar, there are no matches.

If you search the phrase in regular Google, you will get a very small number of matches, all of which are from Yudkowsky or directly/indirectly quoting Yudkowsky. The very first use of the phrase on the internet appears to be this Twitter post from September 15, 2021. (I suppose there’s a chance someone else used the phrase in person.)

I speculate here that Eliezer invented the term as a bit of poetic licence, a way of making nanobots seem more viscerally real. It does not seem likely that the hypothetical nanobots would fit the scientific definition of bacteria, unless you really stretched the definition of terms like “single-celled” and “binary fission”. That said, bacteria are very impressive micro-machines, so I wouldn’t be surprised if future nanotech bore at least some resemblance to them.

Frankly, I think inventing new terms is an extremely unwise move (I think that Eliezer has stopped using the term since I started writing this, but others still use it). “Diamondoid bacteria” sounds science-y enough that a lot of people would assume it was already a scientific term coined by an actual nanotech expert (even in a speculative sense). If they then google it and find nothing, they are going to assume that you’re just making shit up.

But diamondoid nanomachinery has been a subject of inquiry, by actual scientific experts, in a research topic called “diamondoid mechanosynthesis”.

What is “diamondoid mechanosynthesis”?

Molecular nanotech (MNT) is an idea, first championed by Eric Drexler, that the same principles of mass manufacturing used in today’s factories could one day be miniaturized to the nanoscale, assembling complex materials molecule by molecule from the ground up, with nanoscale belts, gears, and manipulators. You can read the thesis here. It’s an impressive first theoretical pass at the nanotech problem, considering the limited computational tools available in 1991, and it helped inspire many in the current field of nanotechnology (which mostly does not focus on molecular assembly).

However, Drexler’s actual designs for how a molecular assembler would be built have been viewed with extreme skepticism by the wider scientific community. And while some of the criticisms have been unfair (such as accusations of pseudoscience), there are undeniably extreme engineering challenges. The laws of physics are felt very differently at different scales, presenting obstacles that have never been encountered before in the history of manufacturing, and that may turn out to be entirely insurmountable in practice. How would you actually make such a device?

Well, a few teams were brave enough to try and tackle the problem head on. The nanofactory collaboration, with a website here, was an attempt to directly build a molecular assembler. It was started in the early 2000s, with the chief players being Freitas and Merkle, two theoretical/computational physicists following on from the work of Drexler. The method they were researching to make this a reality was diamondoid mechanosynthesis (DMS).

So, what is DMS? Let’s start with mechanosynthesis. Right now, if you want to produce molecules from constituent molecules or elements, you place reactive elements in a liquid or gas and jumble them around so they bump into each other randomly. If the reaction is thermodynamically favorable under the conditions you’ve put together (temperature, pressure, etc.), then mass quantities of the desired products are created.

This is all a little chaotic. What if we wanted to do something more controlled? The goal of mechanosynthesis is to use mechanical force to precisely position the reactive elements we wish to put together. In this way, the hope is that extremely complex structures could be assembled atom by atom or molecule by molecule.

The dream, as expressed in the molecular assembler project, was that mechanosynthesis can be mastered to such a degree that “nano-factories” could be built, capable of building many different things from the ground up, including another nanofactory. If this could be achieved, then as soon as one nanofactory is built, a vast army of them would immediately follow through the power of exponential growth. These could then build nanomachines that move around, manipulate objects, and build pretty much anything from the ground up, like a real life version of the Star Trek matter replicator.
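To make the “power of exponential growth” concrete, here is a minimal Python sketch under an idealized and purely hypothetical assumption: every nanofactory builds exactly one copy of itself per replication cycle, and none ever fail. The function name and the one-copy-per-cycle rate are illustrative. They are not taken from the nanofactory proposals.

```python
def cycles_to_reach(target_count: int, copies_per_cycle: int = 1) -> int:
    """Replication cycles needed for one seed factory to reach `target_count` factories.

    Hypothetical idealization: each factory builds `copies_per_cycle` new factories
    per cycle and none ever fail, so the population is multiplied by
    (1 + copies_per_cycle) every cycle.
    """
    population, cycles = 1, 0
    while population < target_count:
        population *= 1 + copies_per_cycle
        cycles += 1
    return cycles


print(cycles_to_reach(10**9))  # one billion factories -> prints 30
```

Under these assumptions, 30 doubling cycles turn one factory into a billion. That is the intuition behind “as soon as one nanofactory is built, a vast army of them would immediately follow”. Real replication would, of course, be limited by raw materials, energy, and error rates.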

If you want to convert a dream into a reality, you have to start thinking about engineering. If you could make such a nanofactory, what would it be made out of? There are a truly gargantuan number of materials out there we could try out, but almost none of them are strong enough to support the kind of mechanical structures envisaged by the nanofactory researchers. The most promising candidate was “diamondoid”.

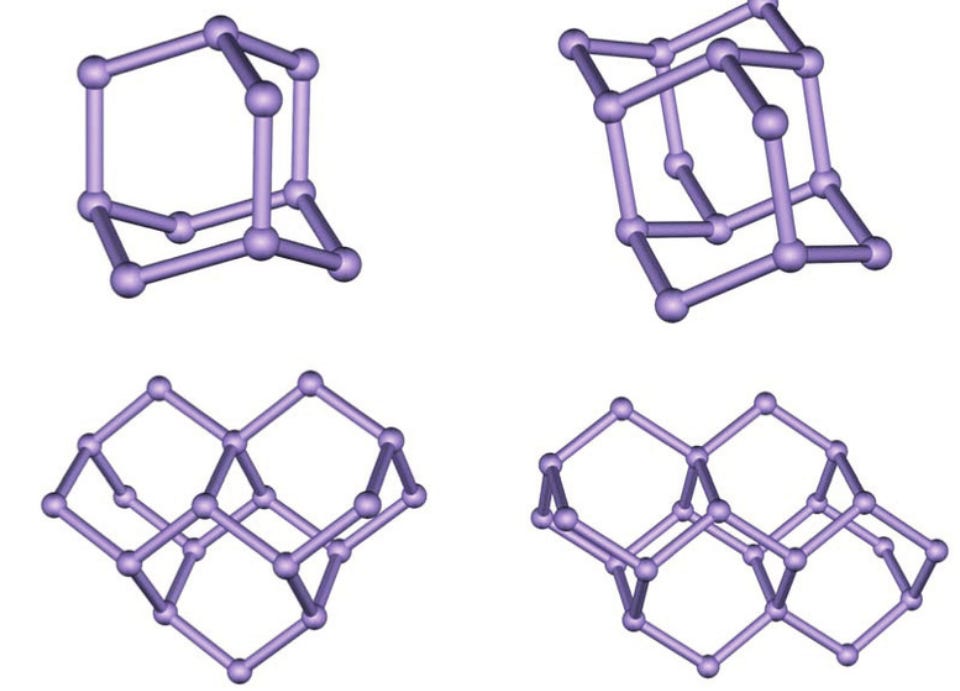

Now, what is “diamondoid”? You’d expect this to be an easy question to answer, but it’s actually a little thorny. The more common definition, the one used on Wikipedia and in most journal papers, is that diamondoid refers to a specific family of hydrocarbons like the ones shown below. The simplest one is “adamantane”, which has a strong, cage-like structure, and the others are formed by joining together multiple cages.

Image taken from here

These cages are incredibly strong and stable, which makes them a promising candidate material for building up large structures, and keeping them stable for assembly purposes.

The other definition, which seems to be used mainly by the small community of MNT proponents, is that “diamondoid” just means “any sufficiently strong and stiff nanoscale material”. See this passage from the “molecular assembler” website:

Diamondoid materials also may include any stiff covalent solid that is similar to diamond in strength, chemical inertness, or other important material properties, and possesses a dense three-dimensional network of bonds. Examples of such materials are carbon nanotubes (illustrated at right) or fullerenes, several strong covalent ceramics such as silicon carbide, silicon nitride, and boron nitride, and a few very stiff ionic ceramics such as sapphire (monocrystalline aluminum oxide) that can be covalently bonded to pure covalent structures such as diamond.

This passage is very out of line with mainstream definitions. I couldn’t find a mention of “diamondoid” in any top carbon nanotube article. I’ve done a little research on aluminium oxide, and I have never in my life heard it called “diamondoid”, considering it neither contains the same elements as diamond, nor does it take the same structure as diamond or diamondoid hydrocarbons. This kind of feels like the “radical sandwich anarchy” section of this chart.

I really don’t want to get sidetracked into semantic debates here. But just know that the MNT definition is non-standard, might annoy material scientists, and could easily be used against you by someone with a dictionary.

In any case, it’s not a huge deal, because the molecular assembler team was focused on carbon-based diamond and diamondoid structures anyway.

The plan was to engage in both theoretical and experimental research to develop nanotech in several stages. Step 1 was to achieve working prototypes of diamond mechanosynthesis. Step 2 was to build on this to actually assemble complex molecular structures in a programmable mechanical manner. Step 3 was to find a way to parallelize the process, so that huge amounts of assembly could be done at once. Step 4 was to use that assembly to build a nanofactory, capable of building a huge number of things, including a copy of itself. The proposed timeline for this project is shown below:

They thought they would have the first three steps finished by 2023, and have working commercialized nanofactories by 2030. Obviously, this is not on track. I’m not holding this against them, as extremely ambitious projects rarely finish on schedule. They were also underfunded compared to what they wanted, further hampering progress.

How far did the project go, in the end?

DMS research: The theoretical side

The nanofactory collaboration put forward a list of publications, and as far as I can tell, every single one is theoretical or computational in nature. There are a few book chapters and patent applications, as well as about a dozen peer-reviewed scientific articles, mostly in non-prestigious journals.[1]

Skimming through the papers, they seem fine. A lot of time and effort has gone into them, I don’t see any obvious problems with their methodology, and the reasoning and conclusions seem reasonable. Going over all of them would take way too long, so I’ll pick one that is representative and relatively easy to explain: “Theoretical Analysis of Diamond Mechanosynthesis. Part II. C2 Mediated Growth of Diamond C(110) Surface via Si/Ge-Triadamantane Dimer Placement Tools”.

Please don’t leave, I promise you this is interesting!

The goal of this paper is simple: we want to use a tooltip to pick up a pair of carbon atoms (referred to as a “dimer”), place the dimer on a carbon surface (diamond), and remove the tooltip, leaving the dimer on the surface.

In our large world, this type of task is pretty easy: you pick up a brick, you place it where you want, and then you let it go. But all the forces present at our scale are radically different at the nanoscale. For example, we used friction to pick the brick up, but “friction” does not really exist at the single atom scale. Instead, we have to bond the cargo element to our tool, and then break that bond at the right moment. It’s like if the only way to lay bricks was to glue your hand to a brick, glue the brick to the foundation, and then rip your hand away.

Below is the design for the tooltip they were investigating here. We have our diamondoid cages from earlier, but a pair of corner atoms is replaced with germanium (or silicon) atoms, and the cargo dimer is bonded to these corners, in the hope that this will make the dimer easier to detach:

The first computational result is a check of this structure’s stability using DFT simulations. I have described DFT and its strengths and shortcomings in this previous post. They find that the structure is stable in isolation.

Okay, great, it’s stable on its own, but the eventual plan is to have a whole ton of these working in parallel. So the next question they ask is this: if I have a whole bunch of these together, are they going to react with each other and ruin the tooltip? The answer, they find, is yes, in two different ways. Firstly, if two of these meet dimer-to-dimer, it’s thermodynamically favorable for them to fuse together into one big, useless tooltip. Secondly, if one encounters the hydrogen atoms on the surface of another, it would tear them out to sit on the end of its cargo dimer, again rendering it useless. They don’t mention it explicitly, but I assume the same thing would happen if it encountered stray hydrogen in the air.

This is a blow to the design, and would make it very difficult to actually use the thing at large scale. In theory, you could still pull it off by keeping the tools isolated from each other.

They check the positional stability of the tooltip itself using molecular dynamics calculations, and find that it’s stable enough for the purpose, with positional fluctuations smaller than the chemical bond distances involved.

And now for the big question: can it actually deposit the dimer on the surface? The following graph summarizes the DFT results:

On the left side, we have the initial state. The tooltip is carrying the cargo dimer. At this step, and at every other, a DFT calculation is carried out to compute the total energy of the system.

In the middle, we have the intermediate state. The tooltip has been lowered, carrying the dimer to the surface, where the carbon dimer is now bonded both to the tooltip and to the diamond surface.

On the right, we have the desired final state. The tooltip has been retracted and raised, but the carbon is left behind on the surface.

All three states have been simulated using DFT to predict their energy, and so have a number of intermediate steps in between. From this, we can see that the middle state is predicted to be 3 eV lower in energy than the left state, meaning that there will be no problem progressing from left to middle.

The first difficulty they find is in going from the middle state to the right state. There is an energy barrier of about 5 eV to climb in order to remove the tooltip. This is not a game ender, as we can supply that energy mechanically by pulling on the tooltip (I did a back-of-the-envelope calculation and the energy cost didn’t seem prohibitive[2]).

No, the real problem is that when you pull on the tooltip, there is no way to tell it to leave the dimer behind on the surface. In fact, it’s lower energy to rip up the carbon dimer as well, going right back to the left state, where you started.

They ran a molecular dynamics simulation, and found that with the germanium tip, deposition failed 4 out of 5 times (with silicon, it failed every time). They state this makes sense because the extra 1 eV barrier is small enough to be overcome, at least some of the time, by 17 eV of internal (potential + kinetic) energy. If I were reviewing this paper, I would definitely ask for more elaboration on these simulations, and on where exactly the 17 eV figure comes from. They conclude that while this would not be good enough for actual manufacturing, it’s good enough for a proof of concept.

In a later paper, it is claimed that the analysis above was too simplistic, and that a more advanced molecular dynamics simulation shows the Ge tool reliably deposits the dimer on the surface every time. It seems very weird and unlikely to me that the system would go to the higher energy state 100% of the time, but I don’t know enough about how mechanical force is treated in molecular dynamics to properly assess the claim.
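The following rough Python sketch gives a feel for the energy scales in this section. It does not reproduce the paper’s calculations or my footnoted estimate. Three inputs are assumptions. The 0.2 nm pulling distance is roughly the length over which a bond is stretched and broken. The ~1 eV gap between the two possible outcomes takes the 1 eV figure above at face value. Finally, the Boltzmann ratio only applies at thermal equilibrium, whereas a tooltip being yanked away is a driven, far-from-equilibrium process. So the second number quantifies the intuition behind my skepticism rather than refuting the simulation.

```python
import math

EV_TO_J = 1.602176634e-19   # joules per electronvolt (exact SI value)
K_B_EV = 8.617333262e-5     # Boltzmann constant in eV/K


def average_force_nn(energy_ev: float, distance_nm: float) -> float:
    """Average force (nN) that supplies `energy_ev` of work over `distance_nm` (W = F * d)."""
    return (energy_ev * EV_TO_J) / (distance_nm * 1e-9) * 1e9


def boltzmann_ratio(delta_e_ev: float, temperature_k: float = 300.0) -> float:
    """Equilibrium population of a state lying `delta_e_ev` above another: exp(-dE / kT)."""
    return math.exp(-delta_e_ev / (K_B_EV * temperature_k))


# ~5 eV barrier to retract the tip, assumed (hypothetically) to be overcome over ~0.2 nm.
print(f"average pulling force ~ {average_force_nn(5.0, 0.2):.1f} nN")

# Assumed ~1 eV gap between "dimer left behind" and "dimer ripped back up", at 300 K.
print(f"equilibrium population ratio ~ {boltzmann_ratio(1.0):.1e}")
```

The first number comes out at about 4 nN, a force scale that atomic force microscopy works with, which fits the conclusion that pulling the tip off is feasible. The second is of order 10^-17. It shows why an outcome that lands in the higher-energy state every time looks strange from an equilibrium standpoint.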

I hope that this analysis has given you a taste of the type of problem that is tackled in computational physics, and how it is tackled. From here, they looked at a few other challenges, such as investigating more tip designs, looking at the stability of large diamondoid structures, and a proposed tool to remove hydrogen from a surface in order to make it reactive, a necessary step in the process.

Experimental diamondoid research

Recall that the goal of this theoretical research was to set the stage for experimental results, with the eventual goal of actually building diamondoid. But if you look at the collaborators of the project, almost everyone was working on theory. Exactly one experimentalist team worked on the project.

The experimentalist in question was University of Nottingham professor Philip Moriarty, of Sixty Symbols fame (he has a blog too). Interestingly enough, the collaboration was prompted by a 2004 debate with an MNT proponent, in which Moriarty presented a detailed skeptical critique of DMS proposals and of Drexler-style nanotech in general. A sample of his concerns:

While I am open to the idea of attempting to consider routes towards the development of an implementation pathway for Mann et al.’s Si/Ge-triadamantane dimer placement reaction, even this most basic reaction in mechanochemistry is practically near-impossible. For example, how does one locate one tool with the other to carry out the dehydrogenation step which is so fundamental to Mann et al.’s reaction sequence?

….

Achieving a tip that is capable of both good atomic resolution and reliable single molecule positioning (note that the Nottingham group works with buckyballs on surfaces of covalently bound materials (Si(111) and Si(100)) at room temperature) requires a lot of time and patience. Even when a good tip is achieved, I’ve lost count of the number of experiments which went ‘down the pan’ because instead of a molecule being pushed/pulled across a surface it “decided” to irreversibly stick to the tip.

Despite his overall skepticism, he approved of the research efforts by Freitas et al., and the correspondence between them led to Moriarty signing on to the nanofactory project. Details on what happened next are scarce on the website.

Rather than try and guess what happened, I emailed Moriarty directly. The full transcripts are shown here.

Describing what happened, Moriarty explained that the work on diamond mechanosynthesis was abandoned after ten months:

Diamond is a very, very difficult surface to work with. We spent ten months and got no more than a few, poorly resolved atomic force microscopy (AFM) images. We’re not alone. This paper -- https://journals.aps.org/prb/cited-by/10.1103/PhysRevB.81.201403 (also attached)-- was the first to show atomic resolution AFM of the diamond surface. (There’d previously been scanning tunnelling microscopy (STM) images and spectroscopy of the diamond (100) surface but given that the focus was on mechanical force-driven chemistry (mechanosynthesis), AFM is a prerequisite.) So we switched after about a year of that project (which started in 2008) to mechanochemistry on silicon surfaces – this was much more successful, as described in the attached review chapter.

Inquiring as to why diamond was so hard to work with, he replied:

A key issue with diamond is that tip preparation is tricky. On silicon, it’s possible to recover atomic resolution relatively straight-forwardly via the application of voltage pulses or by pushing the tip gently (or not so gently!) into the surface – the tip becomes silicon terminated. Diamond is rather harder than silicon and so once the atomistic structure at the end is lost, it needs to be moved to a metal sample, recovered, and then moved back to the diamond sample. This can be a frustratingly slow process.

Moreover, it takes quite a bit of work to prepare high quality diamond surfaces. With silicon, it’s much easier: pass a DC current through the sample, heat it up to ~ 1200 C, and cool it down to room temperature again. This process routinely produces large atomically flat terraces.

So it turns out that mechanosynthesis experiments on diamond are hard. Like, ridiculously hard. Apparently only one group has ever managed to image the diamond surface in question at atomic resolution with AFM. This renders attempts to do mechanosynthesis on diamond impractical, as you can’t tell whether or not you’ve pulled it off.

This is a great example of the type of low-level practical problem that is easy to miss if you are a theoretician (and pretty much impossible to predict if you aren’t a domain expert).

So all of those calculations about the best tooltip design for depositing carbon on diamond ended up being completely useless for the problem of actually building a nanofactory, at least until imaging technology or techniques improve.

But there wasn’t zero output. The experimental team switched materials, and was able to achieve some form of mechanosynthesis. It wasn’t on diamond but on silicon, which is much easier to work with. And it wasn’t deposition of atoms; it was a mechanical switch operated with a tooltip, summarized in this YouTube video. Not a direct step toward molecular assembly, but still pretty cool.

As far as I can tell, that’s the end of the story when it comes to DMS. The collaboration appears to have ended in the early 2010s, and I can barely find any mention of the topic in the literature past 2013. They didn’t reach the dream of a personal nanofactory: they didn’t even reach the dream of depositing a few carbon atoms on a diamond surface.

A brief defense of dead research directions

I would say that DMS research is fairly dead at the moment. But I really want to stress that that doesn’t mean it was bad research, or pseudoscience, or a waste of money.

They had a research plan, some theoretical underpinnings, and explored a possible path to converting theory into experimental results. I can quibble with their definitions, and some of their conclusions seem overly optimistic, but overall they appear to be good faith researchers making a genuine attempt to expand knowledge and tackle a devilishly difficult problem with the aim of making the world a better place. That they apparently failed to do so is not an indictment, it’s just a fact of science, that even great ideas mostly don’t pan out into practical applications.

Most research topics that sound good in theory don’t work in practice, when tested and confronted with real world conditions. This is completely fine, as the rare times when something works, a real advancement is made that improves the lives of everyone. The plan for diamondoid nanofactories realistically had a fairly small chance of working out, but if it had, the potential societal benefits could have been extraordinary. And the research, expertise, and knowledge that comes out of failed attempts are not necessarily wasted, as they provide lessons and techniques that help with the next attempt.

And while DMS research is somewhat dead now, that doesn’t mean it won’t get revived. Perhaps a new technique will be invented that allows for reliable imaging of diamondoid, and DMS ends up being successful eventually. Or perhaps after a new burst of research, it will prove impractical again, and the research will go to sleep again. Such is life, in the uncertain realms of advanced science.

Don’t worry, nanotech is still cool as hell

At this point in my research, I was doubting whether even basic nanomachines or rudimentary mechanosynthesis were possible. But this was an overcorrection. Nanoscience is still chugging along fine. Here, I’m just going to give a non-exhaustive list of some cool shit we have been able to do experimentally. (Most of these examples were taken from “Nanotechnology: A Very Short Introduction”, written by Philip Moriarty, the same one as before.)

First, I’ll note that traditional chemistry can achieve some incredible feats of engineering without the need for mechanochemistry at all. For example, in 2003 the NanoPutian project successfully built a nanoscale model of a person out of organic molecules. They used one cleverly chosen reaction pathway to produce the upper body and another to produce the lower body, and then found exactly the right conditions under which mixing the two would bond them together.

Similarly, traditional chemistry has been used to build “nanocars”, nanoscale structures that contain four buckyball wheels connected to a molecular “axle”, allowing them to roll across a surface. Initially, these had to be pushed directly by a tooltip. In later versions, such as those in the Nanocar Race, the cars are driven by electron injection or electric fields from the tooltip, reaching top speeds of 300 nm per hour. Of course, at this speed a nanocar would take about 8 years to cross the width of a human finger, but it’s the principle that counts.
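The finger arithmetic is easy to check. The following minimal sketch assumes a finger width of about 2 cm, which is my own assumption. The 300 nm per hour top speed is the figure quoted above.

```python
SPEED_NM_PER_HOUR = 300.0   # nanocar top speed quoted in the text
FINGER_WIDTH_CM = 2.0       # assumption: a typical finger is ~2 cm wide
NM_PER_CM = 1e7
HOURS_PER_YEAR = 365.25 * 24

hours = FINGER_WIDTH_CM * NM_PER_CM / SPEED_NM_PER_HOUR
years = hours / HOURS_PER_YEAR
print(f"{hours:,.0f} hours, or about {years:.1f} years")
```

This prints “66,667 hours, or about 7.6 years”, consistent with the “about 8 years” figure.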

The 2016 Nobel Prize in Chemistry was awarded for the design and synthesis of molecular machines, including molecular lifts, muscles, and axles.

I’ll note that using a tooltip to slide atoms around has been a thing since 1990, when IBM wrote their initials using xenon atoms. A team achieved a similar feat for selected silicon atoms on silicon surfaces in 2003, using purely mechanical force.

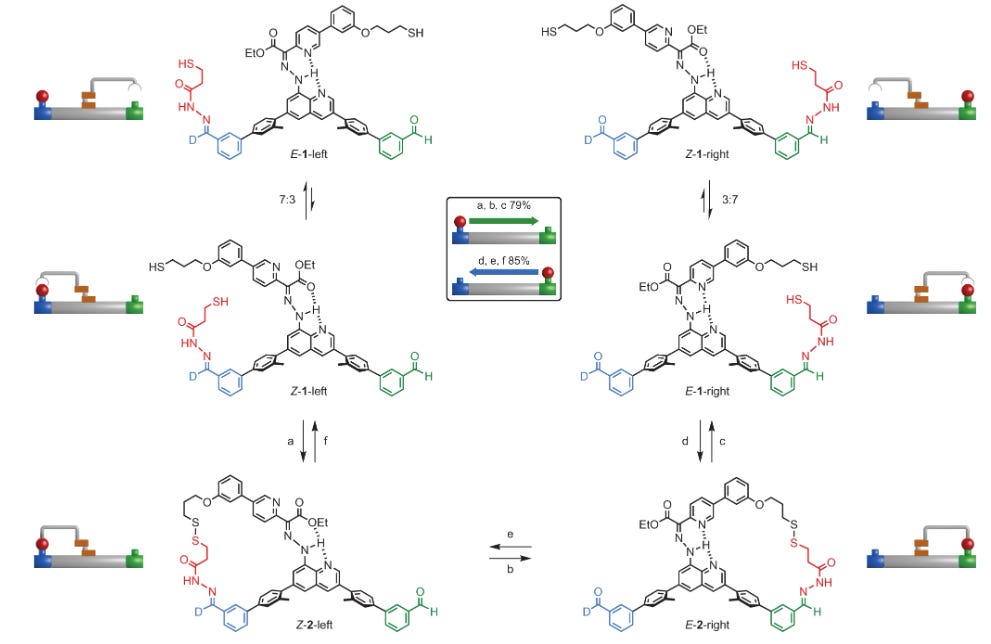

As for the dream of molecular assembly, the goal of picking atoms up and placing them down has been achieved by a UK team, who were able to use a chemical arm to pick up a cargo molecule bonded on one side, transfer it to another side, and drop it there, leaving it in place.

This is not mechanosynthesis as it is not powered by direct mechanical force, but from chemical inputs, such as varying the acidity of the solution. It is also based on more complex organic molecules, rather than diamondoid structures.

This brings us to what seems the most interesting and promising area: DNA-based nanotech. This makes sense: over billions of years, evolution already figured out a way to build extremely complex self-replicating machines, which can also build little bots as small as 20 nm across. Actual bacteria are larger and more fragile than hypothetical nanofactories, but have the distinct advantage of actually existing. Why reinvent the wheel?

I have very little background in biology, so I won’t venture too deeply into the topic (which deserves a whole post of its own), but there have been a number of highly impressive achievements in DNA-based nanotech. The techniques of DNA origami allow DNA strands to fold up among themselves into a variety of structures, such as spheres, cubes, and nanoflasks. One team used one such DNA nanorobot to target tumour growth in mice. The research is still some way from practical human applications (and many such promising medical technologies end up being impractical anyway). Nonetheless, I’m impressed, and will be watching this space closely.

So are diamondoid bots a threat?

It’s very hard to prove that a technology won’t pan out, if it doesn’t inherently break the laws of physics. But a tech being “not proven to be impossible” does not mean the tech is “inevitable”.

With regards to diamondoid specifically, the number of materials that are not diamondoid outnumbers the number of materials that are by a truly ridiculously large margin. And although diamondoid has a lot going for it in terms of stiffness and strength, we saw that it also has shortcomings that make it difficult to work with, and potential minefields like the tooltips theoretically sticking to each other. So my guess is that if Drexler-style nanofactories are possible, they will not be built up of diamondoid.

How about nanofactories made of other materials? Well, again, there are a truly gargantuan number of materials available, which does give some hope. But this is also a ridiculously hard problem. We haven’t even scratched the surface of the difficulties awaiting such a project. Depositing one measly dimer on a surface turned out to be too hard, but even once that is achieved, you have to figure out how to place the next one, and the next one, and build a proper complex structure without getting your tooltip stuck. You need a way to harvest carbon sources to build things up with. If you want to be truly self-sufficient and self-replicating, you need a source of energy for the mechanical force needed to rip atoms away, and a means of propulsion to move your entire nanofactory around.

Designs have been proposed for a lot of these problems (like in Drexler’s thesis), but each step is going to be beset with endless issues and engineering challenges that would have to be trudged through, one step at a time. We’ve barely gotten to step 1.

Fusion power is often (accurately) mocked for having been “20 years away” for over three decades. It had proofs of concept and was understood; it seemed that all that was left was the engineering, which ended up being ridiculously hard. To me, molecular nanotech looks about 20 years away from being “20 years away”. At the current rate of research, I would guess it won’t happen for at least 60 years, if it happens at all. I would be happy to be proven wrong.

I asked Professor Moriarty whether he thought the scenario proposed by Yudkowsky was plausible:

We are a long, long, loooong way from the scenario Yudkowsky describes. For example, despite it being 33 years since the first example of single atom manipulation with the STM (the classic Eigler and Schweizer Nature paper where they wrote the IBM logo in xenon atoms), there’s yet to be a demonstration of the assembly of even a rudimentary 3D structure with scanning probes: the focus is on the assembly of structures by pushing, pulling, and/or sliding atoms/molecules across a surface. Being able to routinely pick up, and then drop, an atom from a tip is a much more complicated problem.

Marauding swarms of nanobots won’t be with us anytime soon.

This seems like a good place to note that MNT proponents have a record of extremely over-optimistic predictions. See this estimation of MNT arrival from Yudkowsky in 1999:

As of '95, Drexler was giving the ballpark figure of 2015 (11). I suspect the timetable has been accelerated a bit since then. My own guess would be no later than 2010.

Could the rate of research accelerate?

Now, I can’t leave without addressing the most likely objection. I said at least 60 years at the current rate of research. But what if the rate of research speeds up?

One idea is that DNA- or bio-based robots will be used to build a Drexler-style nanofactory. This is the “first stage nanofactory” that Yudkowsky mentions in “A List of Lethalities”, and it was the first step proposed by Drexler as well. I can see how this could enable better tools and more progress, but I’m not sure how it would address the fundamental chemistry issues that need to be overcome to build a non-protein-based machine. How will the biobot stop two tooltips from sticking together? If you want to molecularly assemble something, would it really be better for a tooltip to be held by a wiggly, biologically based bot instead of a precisely computer-controlled one?

The more common objection is that artificial intelligence will speed this research up. Well, now we’re working with two highly uncertain, speculative technologies. To keep this simple, I’ll restrict this analysis to the short term (the next decade or so), and assume no intelligence explosion occurs. I might revisit the subject in more depth later on.

First, forget the dream of advances in theory rendering experiment unnecessary. As I explained in a previous post, the quantum equations are just way too hard to solve with 100% accuracy, so approximations are necessary, which themselves do not scale particularly well.

Machine learning in quantum chemistry has been investigated for some time now, and there are promising techniques that could somewhat speed up a subset of calculations and make some larger-scale calculations feasible that were not before. For my research, the primary speedups from AI come from using ChatGPT to speed up coding a bit and to help write bureaucratic applications.

I think if the DMS project were run today, the faster codes would allow for slightly more accurate results and more calculations per paper, allowing more materials to be investigated, and the time saved on writing and coding could potentially allow another few papers to be squeezed out. For example, if they had used the extra time to look at silicon DMS as well as carbon DMS, they might have gotten something that could actually be experimentally useful.

I’m not super familiar with the experimental side of things. In his book, Moriarty suggests that machine learning could be applied to:

image and spectral classification in various forms of microscopy, automation of time-consuming tasks such as optimization of the tip of an STM or AFM, and the positioning of individual atoms and molecules.

So I think this could definitely speed up parts of the experimental process. However, there are still going to be a lot of human-scale bottlenecks to keep a damper on things, such as sample preparation. And as always with practical engineering, a large part of the process will be figuring out what the hell went wrong with your last experiment. There still is no AI capable of figuring out that your idiot labmate Bob has been contaminating your samples by accident.

What about super-advanced AGI? Well, now we’re guessing about two different speculative technologies at once, so take my words (and everyone else’s) with a double grain of salt. Obviously, an AGI would speed up research, but I’m not sure the results would be as spectacular as singularity theorists expect.

An AI learning, say, Go, can play a hundred thousand games a minute with little difficulty. In science, there are likely to be human-scale bottlenecks that render experimentation glacial in comparison. High-quality quantum chemistry simulations can take days or weeks to run, even on supercomputing clusters. On the experimental side, humans have to order parts, deliver them, prepare the samples, maintain the physical equipment, etc. It’s possible that this can be overcome with some sort of massively automated robotic experimentation system… but then you have to build that system, which is a massive undertaking in itself. Remember, the AI would not be able to use MNT to build any of this. And of course, this is all assuming the AI is actually competent, and that MNT is even possible in practice.

Overall, I do not think trying to build Drexler-style nanomachinery would be an effective plan for the adversaries of humanity, at least as things currently stand. If they try, I think we stand a very good chance of detecting and stopping them, if we bother to look instead of admitting premature defeat.

Summary

- “Diamondoid bacteria” is a phrase that was invented 2 years ago, referring obliquely to diamondoid mechanosynthesis (DMS)

- DMS refers to a proposed technology where small cage-like structures are used to position reactive molecules together to assemble complex structures.

- DMS research was pursued by a nanofactory collaboration of scientists starting in the early 2000’s.

- The theoretical side of the research found some potentially promising designs for the very initial stages of carbon deposition but also identified some major challenges and shortcomings.

- The experimental side was unsuccessful due to the inability to reliably image the diamond surface.

- Dead/stalled projects are not particularly unusual and should not reflect badly on the researchers.

- There are still many interesting advances and technologies in the field of nanoscience, including promising advances in DNA based robotics.

- At the current rate of research, DMS style nanofactories still seem many, many decades away at the minimum.

- This research could be sped up somewhat by emerging AI tech, but it is unclear to what extent human-scale bottlenecks can be overcome in the near future.

Thanks for this thoughtful and detailed deep dive!

I think it misses the main cruxes though. Yes, some people (Drexler and young Yudkowsky) thought that ordinary human science would get us all the way to atomically precise manufacturing in our lifetimes. For the reasons you mention, that seems probably wrong.

But the question I'm interested in is whether a million superintelligences could figure it out in a few years or less, since that's the situation we'll actually be facing. (If it takes them, say, 10 years or longer, then they'll probably have better ways of taking over the world.)

To answer that question, we need to ask questions like

(1) Is it even in principle possible? Is there some configuration of atoms that would be a general-purpose nanofactory, capable of making more of itself, that uses diamondoid instead of some other material? Or is there no such configuration?

Seems like the answer is "Probably, though not necessarily; it might turn out that the obstacles discussed are truly insurmountable. Maybe 80% credence." If we remove the diamondoid criterion and allow it to be built of any material (but still require it to be dramatically more impressive and general-purpose / programmable than ordinary life forms), then I feel like the credence shoots up to 95%, the remaining 5% being model uncertainty.

(2) Is it practical for an entire galactic empire of superintelligences to build in a million years? (Conditional on 1, I think the answer to 2 is 'of course.')

(3) OK, conditional on the above, the question becomes what the limiting factor is -- is it genius insights about clever binding processes or mini-robo-arm-designs exploiting quantum physics to solve the stickiness problems mentioned in this post? Is it mucking around in a laboratory performing experiments to collect data to refine our simulations? Is it compute & sim-algorithms, to run the simulations and predict what designs should in theory work? Genius insights will probably be pretty cheap to come by for a million superintelligences. I'm torn about whether the main constraint will be empirical data to fit the simulations, or compute to run the simulations.

(4) What's our credence distribution over orders of magnitude of the following inputs: Genius, experiments, and compute, in each case assuming that it's the bottleneck? Not sure how to think about genius, but it's OK because I don't think it'll be the bottleneck. Our distributions should range over many orders of magnitude, and should update on our observation so far that however many experiments and simulations humans have done didn't seem close to being enough.

I wildly guess something like 50% that we'll see some sort of super powerful nanofactory-like thing. I'm more like 5% that it consists of diamondoid in particular: there are so many different material designs, and even if diamondoid is viable and in some sense theoretically the best, the theoretical best probably takes several OOMs more inputs to achieve than something else that is merely good enough.

Hey, thanks for engaging. I saved the AGI theorizing for last because it's the most inherently speculative: I am highly uncertain about it, and everyone else should be too.

I would dispute that "a million superintelligences exist and cooperate with each other to invent MNT" is a likely scenario, but even given that, my guess would still be no. The usual disclaimer applies: the following is all my personal guesses as a non-experimentalist and non-future-knower.

If we restrict to diamondoid, my credence would be very low, somewhere in the 0 to 10% range. The "diamondoid massively parallel builds diamondoid and everything else" process is intensely challenging: we only need one step to be unworkable for the whole thing to be kaput, and we've already identified some potential problems (tips sticking together, hydrogen hitting, etc.). With all materials available, I assign very high credence (above 95%) to something self-replicating and more impressive than bacteria and viruses being possible, but I have no idea how impressive the limits of possibility are.
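The "only one step needs to be unworkable" point is a conjunctive-probability argument. As a minimal sketch, assuming (hypothetically) that the steps succeed or fail independently, the chance that the whole chain works is the product of the per-step chances. The step count and the 80% figure below are made-up illustrations, not estimates from this thread.

```python
from math import prod


def chain_success(step_probs: list[float]) -> float:
    """Probability that every step in a chain is workable, assuming the
    steps are independent (a simplifying, hypothetical assumption)."""
    return prod(step_probs)


# Hypothetical: ten steps, each judged 80% likely to be workable.
ten_steps = [0.8] * 10
print(f"P(whole chain works) = {chain_success(ten_steps):.3f}")
```

Even fairly confident per-step estimates compound to roughly 0.11 here. Correlated steps would change the numbers, which is one reason to treat this only as an illustration of the shape of the argument.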

I'd agree that this is almost certain conditional on 1.

To be clear, all forms of bonds are "exploiting quantum physics", in that they are low-energy configurations of electrons interacting with each other according to quantum rules. The answer to the sticky fingers problem, if there is one, will almost certainly involve the bonds we already know about, such as using weaker van der Waals forces to stick and unstick atoms (as, I think, is done in biology).

As for the limiting factor: in the case of the million years of superintelligences, it would probably be a long search over a gargantuan set of materials and a gargantuan set of possible designs and approaches: identifying ones that are theoretically promising, testing them with computational simulations to whittle them down, and then experimentally creating each material and each approach and testing them all in turn. The galaxy cluster would be able to optimize each step and calculate what balance will be fastest overall.

The balance will be different in the galaxy than in the human scale, because they would have orders of magnitude more compute available (including quantum computing), would have a galaxy worth of materials available, wouldn't have to hide from people, etc. So you really have to ask about the actual scenario, not the galaxy.

In the actual scenario of super-AI trying to covertly build nanotech, the bottleneck would likely be experimental. The problem is a twin dilemma: If you have to rely on employing humans in a lab, then they go at human pace, and hence will not get the job done in a few years. If you try to eliminate the humans from the production process, you need to build a specialized automated lab... which also requires humans, and would probably take more than a few years.

What part of the scenario would you dispute? A million superintelligences will probably exist by 2030, IMO; the hard part is getting to superintelligence at all, not getting to a million of them (since you'll probably have enough compute to make a million copies).

I agree that the question is about the actual scenario, not the galaxy. The galaxy is a helpful thought experiment though; it seems to have succeeded in establishing the right foundations: How many OOMs of various inputs (compute, experiments, genius insights) will be needed? Presumably a galaxy's worth would be enough. What about a solar system? What about a planet? What about a million superintelligences and a few years? Asking these questions helps us form a credence distribution over OOMs.

And my point is that our credence distribution should be spread out over many OOMs, but since a million superintelligences would be capable of many more OOMs of nanotech research in various relevant dimensions than all humanity has been able to achieve thus far, it's plausible that this would be enough. How plausible? Idk I'm guessing 50% or so. I just pulled that number out of my ass, but as far as I can tell you are doing the same with your numbers.

I didn't say they'd covertly be building it. It would probably be significantly harder if covert, they wouldn't be able to get as many OOMs. But they'd still get some probably.

I don't think using humans would mean going at a human pace. The humans would just be used as actuators. I also think making a specialized automated lab might take less than a year, or else a couple of years, not more than a few years. (For a million superintelligences with an obedient human nation of servants, that is.)

This is a very wild claim to throw out with no argument to back it up. Cotra puts a 15% chance on transformative AI by 2036, and I find his assumptions incredibly optimistic about AI arrival. (It's also worth noting that transformative AI and superintelligence are not the same thing.) The other thing I dispute is that a million superintelligences would cooperate. They would presumably have different goals and interests: surely at least some of them would betray the others' plan for a leg up from humanity.

You don't think some of the people of the "obedient nation" are gonna tip anyone off about the nanotech plan? Unless you think the AIs have some sort of mind-control powers, in which case why the hell would they need nanotech?

I said IMO. In context it was unnecessary for me to justify the claim, because I was asking whether or not you agreed with it.

I take it that not only do you disagree, you agree it's the crux? Or don't you? If you agree it's the crux (i.e. you agree that probably a million cooperating superintelligences with an obedient nation of humans would be able to make some pretty awesome self-replicating nanotech within a few years), then I can turn to the task of justifying the claim that such a scenario is plausible. If you don't agree, and think that even such a superintelligent nation would be unable to make such things (say, with >75% credence), then I want to talk about that instead.

(Re: people tipping off, etc.: I'm happy to say more on this but I'm going to hold off for now since I don't want to lose the main thread of the conversation.)

Btw Ajeya Cotra is a woman and uses she/her pronouns :)

Much of the (purported) advantage of diamondoid mechanisms is that they're (meant to be) stiff enough to operate deterministically with atomic precision. Without that, you're likely to end up much closer to biological systems—transport is more diffusive, the success of any step is probabilistic, and you need a whole ecosystem of mechanisms for repair and recycling (meaning the design problem isn't necessarily easier). For anything that doesn't specifically need self-replication for some reason, it'll be hard to beat (e.g.) flow reactors.

I broadly endorse this reply and have mostly shifted to trying to talk about "covalently bonded" bacteria, since using the term "diamondoid" (tightly covalently bonded CHON) causes people to panic about the lack of currently known mechanosynthesis pathways for tetrahedral carbon lattices.

This terminology is actually significantly worse, because it makes it almost impossible for anyone to follow up on your claims. Covalent bonds are the most common type of bond in organic chemistry, and thus all existing bacteria have them in ridiculous abundance. So claiming the new technology will be "covalently bonded" does not distinguish it from existing bacteria in the slightest.

To correct some other weird definitions you made in your very short reply: "tetrahedral carbon lattice" is the literal exact same thing as diamond. The scientific definition of diamondoid is also not "tightly covalently bonded CHON", it specifically refers to hydrocarbon variants of adamantane, which are not tetrahedral (I discussed this in the post). Also, the new technology probably would not fit the definition of "bacteria", except in a metaphorical sense.

Now, I'm assuming you mean something like "in places where existing bacteria use weak van der Waals forces to stick together, the new tech will use stronger covalent bonds instead". If you have specific research you are referring to, I would be interested in reading it, because, again, you have made googling the subject impossible.

My problem here would be that in a lot of cases, you actually want the forces to be weak. If you want to assemble and reassemble things, and stick them and break them, having too strong forces will make life significantly harder (this was the subject of the theoretical study I looked at). There is a reason bricklayers don't coat their gloves in superglue when working with bricks.

As for the "tetrahedral carbon": if you are aware of other dedicated research efforts for mechanosynthesis that I have missed in my post, I would be genuinely interested in reading up on them. I did my best to look, and did my best to highlight the ones I could find in my extensively researched article, which I'm unsure you actually read.

What if he just said "Some sort of super-powerful nanofactory-like thing?"

He's not citing some existing literature that shows how to do it, but rather citing some existing literature which should make it plausible to a reasonable judge that a million superintelligences working for a year could figure out how to do it. (If you dispute the plausibility of this, what's your argument? We have an unfinished exchange on this point elsewhere in this comment section. Seems you agree that a galaxy full of superintelligences could do it; I feel like it's pretty plausible that if a galaxy of superintelligences could do it, a mere million also could do it.)

I would vastly prefer this phrasing, because it would be an accurate relaying of his beliefs, and would not involve the use of scientific terms that are at best misleading and at worst active misinformation.

As for the "millions of superintelligences", one of my main cruxes is that I do not think we will have millions of superintelligences in my lifetime. We may have lots of AGI, but I do not believe that AGI=superintelligence. Also, I think that if a few superintelligences come into existence they may prevent others from being built out of self-preservation. These points are probably out of scope here though.

I don't think a million superintelligences could invent nanotech in a year with only the resources available on earth. Unlike the galaxy, there is limited computational power available on earth, and limited everything else as well. I do not think the sheer scale of experimentation required could be assembled in a year without having already invented nanotech. The galaxy situation is fundamentally misleading.

Lastly, I think even if nanotech is invented, it will probably end up being disappointing or limited in some way. This tends to be the case with all technologies: did anyone predict that we would build an AI that could easily pass a simple Turing test but be unable to multiply large numbers together? Hypothetical technologies get to be perfect in our minds, but as something actually gets built, it accumulates shortcomings and weaknesses from the inevitable brushes with engineering.

Cool. Seems you and I are mostly agreed on terminology then.

Yeah we definitely disagree about that crux. You'll see. Happy to talk about it more sometime if you like.

Re: galaxy vs. earth: The difference is one of degree, not kind. In both cases we have a finite amount of resources and a finite amount of time with which to do experiments. The proper way to handle this, I think, is to smear out our uncertainty over many orders of magnitude. E.g. the first OOM gets 5% of our probability mass, the second OOM gets 5% of the remaining probability mass, and so forth. Then we look at how many OOMs of extra research and testing (compared to what humans have done) a million ASIs would be able to do in a year, and compare it to how many OOMs extra (beyond that level) a galaxy worth of ASI would be able to do in many years. And crunch the numbers.
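The "smear over OOMs" rule above can be made concrete. A minimal sketch, assuming exactly the 5%-per-OOM rule stated in the comment (each successive order of magnitude of extra research effort gets 5% of the probability mass not yet assigned), so the chance that the requirement lies within the first k OOMs is 1 - 0.95^k. The OOM counts for the million ASIs and for the galaxy are hypothetical placeholders, not estimates from either commenter.

```python
def prob_within(ooms: int, share_per_oom: float = 0.05) -> float:
    """Cumulative probability that the required research effort lies within
    the first `ooms` orders of magnitude beyond what humans have done, when
    each successive OOM receives `share_per_oom` of the mass still left."""
    remaining = 1.0
    for _ in range(ooms):
        remaining -= share_per_oom * remaining
    return 1.0 - remaining


def prob_enough_given_galaxy(asi_ooms: int, galaxy_ooms: int,
                             share_per_oom: float = 0.05) -> float:
    """P(a million ASIs in a year suffice | a galaxy of ASIs suffices)."""
    if galaxy_ooms < 1 or asi_ooms > galaxy_ooms:
        raise ValueError("need galaxy_ooms >= 1 and asi_ooms <= galaxy_ooms")
    return prob_within(asi_ooms, share_per_oom) / prob_within(galaxy_ooms, share_per_oom)


# Hypothetical inputs: 3 extra OOMs for the million ASIs, 20 for the galaxy.
print(f"P(within 3 OOMs)  = {prob_within(3):.3f}")
print(f"P(within 20 OOMs) = {prob_within(20):.3f}")
print(f"Conditional       = {prob_enough_given_galaxy(3, 20):.3f}")
```

The answer is driven almost entirely by the two OOM counts and the per-OOM share, which is where the disagreement in this thread actually lives.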

It really seems to me like the galaxy thing is just going to mislead, rather than elucidate. I can make my judgements about a system where one planet is converted into computronium, one planet contains a store of every available element, one planet is tiled completely with experimental labs doing automated experiments, etc. But the results of that hypothetical won't scale down to what we actually care about. For example, it wouldn't account for the infrastructure that needs to be built to assemble any of those components in bulk.

If someone wants to try their hand at modelling a more earthly scenario, I'd be happy to offer my insights. Remember, this development of nanotech has to predate the AI taking over the world, or else the whole exercise is pointless. You could look at something like "AI blackmails the dictator of a small country into starting a research program" as a starting point.

Personally, I don't think there is very much you can be certain about, beyond: "this problem is extremely fucking hard", and "humans aren't cracking this anytime soon". I think building the physical infrastructure required to properly do the research in bulk could easily take more than a year on its own.

I agree with the claims "this problem is extremely fucking hard" and "humans aren't cracking this anytime soon" and I suspect Yudkowsky does too these days.

I disagree that nanotech has to predate taking over the world; that wasn't an assumption I was making or a conclusion I was arguing for at any rate. I agree it is less likely that ASIs will make nanotech before takeover than that they will make nanotech while still on earth.

I like your suggestion to model a more earthly scenario but I lack the energy and interest to do so right now.

My closing statement is that I think your kind of reasoning would have been consistently wrong had it been used in the past -- e.g. in 1600 you would have declared so many things to be impossible on the grounds that you didn't see a way for the natural philosophers and engineers of your time to build them. Things like automobiles, flying machines, moving pictures, thinking machines, etc. It was indeed super difficult to build those things, it turns out -- 'impossible' relative to the R&D capabilities of 1600 -- but R&D capabilities improved by many OOMs, and the impossible became possible.

Sorry, to be clear, I wasn't actually making a prediction as to whether nanotech predates AI takeover. My point is that these discussions are in the context of the question "can nanotech be used to defeat humanity?". If AI can only invent nanotech after defeating humanity, that's interesting but has no bearing on the question.

I also lack the energy or interest to do the modelling, so we'll have to leave it there.

My closing rebuttal: I have never stated that I am certain that nanotech is impossible. I have only stated that it could be impossible, impractical, or disappointing, and that the timelines for development are large, and would remain so even with the advent of AGI.

If I had stated in 1600 that flying machines, moving pictures, thinking machines, etc were at least 100 years off, I would have been entirely correct and accurate. And for every great technological change that turned out to be true and transformative, there are a hundred great ideas that turned out to be prohibitively expensive, or impractical, or just plain not workable. And as for the ones that did work out, and did transform the world: it almost always took a long time to build them, once we had the ability to. And even then they started out shitty as hell, and took a long, long time to become as flawless as they are today.

I'm not saying new tech can't change the world, I'm just saying it can't do it instantly.

Thanks for discussing with me!

(I forgot to mention an important part of my argument, oops: you wouldn't have said "at least 100 years off", you would have said "at least 5000 years off", because you are anchoring to recent-past rates of progress rather than looking at how rates of progress increase over time and extrapolating. (This is just an analogy / data point, not the key part of my argument, but look at GWP growth rates as a proxy for tech progress rates: according to this, GWP doubling time was something like 600 years back then, whereas it's more like 20 years now. So 1.5 OOMs faster.) Saying "at least a hundred years off" in 1600 would be like saying "at least 3 years off" today, which I think is quite reasonable.)
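The rescaling in this comment is simple arithmetic. A sketch reproducing it, using only the two doubling times quoted (about 600 years around 1600, about 20 years now) and the commenter's assumption that GWP growth is a reasonable proxy for the rate of technological progress; the function name is just for illustration.

```python
import math


def equivalent_horizon(years_then: float, doubling_then: float,
                       doubling_now: float) -> float:
    """Rescale a forecast horizon by the ratio of GWP doubling times, using
    GWP growth as a rough proxy for the rate of technological progress."""
    return years_then * doubling_now / doubling_then


# Doubling times quoted in the comment: ~600 years around 1600, ~20 years now.
speedup_ooms = math.log10(600 / 20)  # log10(30)
print(f"Speed-up: {speedup_ooms:.2f} orders of magnitude")
print(f"100 years in 1600 ~ {equivalent_horizon(100, 600, 20):.1f} years today")
```

This gives about 1.48 OOMs (the "1.5 OOMs" above) and about 3.3 years, matching the "at least 3 years off" figure.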

That argument does make more sense, although it still doesn't apply to me, as I would never confidently state a 5000 year forecast due to the inherent uncertainty of long term predictions. (My estimates for nanotech are also high uncertainty, for the record).

no worries, I enjoyed the debate!

As someone who studied materials science, I enjoyed this post and appreciated the effort you spent on making technical work legible for laypeople.

As a general comment, I would like to see a technical/mechanistic breakdown of other threat models for how AI could cause doom very soon – I would be surprised if this was the only example of a theoretical threat that is practically very unlikely/bottlenecked due to engineering reasons.

I also would like to see such breakdowns, but I think you are drawing the wrong conclusions from this example.

Just because Yudkowsky's first guess about how to make nanotech, as an amateur, didn't pan out, doesn't mean that nanotech is impossible for a million superintelligences working for a year. In fact it's very little evidence. When there are a million superintelligences they will surely be able to produce many technological marvels very quickly, and for each such marvel, if you had asked Yudkowsky to speculate about how to build it, he would have failed.

(Similarly, the technological marvels produced in the 20th century would not have been correctly guessed-how-to-build by people in the 19th century, yet they still happened, and someone in the 19th century could have predicted that many of them would happen despite not being able to guess how. E.g. heavier-than-air flight.)

Really well written post, thanks. I know how hard it is to explain this kind of difficult stuff, and I now understand a LOT more about nano-stuff in general than I did 20 minutes ago.

And I know this isn't a very EA comment, but I can't believe that one of the main guys in this story is

Professor Moriarty

...

Thanks, I found this helpful to read. I added it to my database of resources relevant for thinking about extreme risks from advanced nanotechnology.

I do agree that MNT seems very hard, and because of that it seems likely that, if it's developed in an AGI/ASI hyper-tech-accelerated world, it would be developed relatively late on (though if tech development is hugely accelerated, maybe it would still be developed pretty fast in absolute terms).

Great post, titotal!

I definitely would have preferred a TLDR or summary at the top, not the bottom. However, I definitely appreciate your investigation into this, as I have long loathed Eliezer’s use of the term once I realized he just made it up.

Thanks for the write up and I think I learned a lot of stuff. But at the same time I think you're getting a bit hung up on the particular word "diamondoid". It's normal in language for words to have different meanings in a base-level language versus in particular specialized jargons. For instance "speed" and "velocity" are synonyms in normal English but in physics jargon they're distinct. As Kuhn famously noted whether a lone helium atom counts as a "molecule" or not depends on whether one is speaking chemistry jargon or physics jargon (with general English not being precise enough to give an answer). And very few people would consider carbon a "metal" but that's just what it, along with every element heavier than helium, is called in astronomy jargon.

In general, in English, if you have a base word you can append the suffix "-oid" to create a new word that means "something similar to the base word without being it". So you might properly call a bean bag a "chairoid", something with many important chair properties without being a chair. And often these constructions get taken up and given precise meanings in different formal jargons, for words like "spheroid", "planetoid", or apparently "diamondoid".

As general practice I think it's good to avoid stepping on precise meanings in jargon when you're aware of them and there's another word that works as well. Use "speed" rather than "velocity" and call a rhombohedron "cube-like" rather than "cuboid". But at the same time I don't think it's a very bad mistake to use a word that makes sense in non-jargon English in a way that conflicts with established jargon. And if someone uses a word in a way that doesn't make sense in jargon terms but does in ordinary English, it's usually best to go for the non-jargon interpretation.

I think you've entirely missed my actual complaint here. There would have been nothing wrong with inventing a new term and using it to describe a wide class of structures. The problem is that the term already existed, and had had an accepted scientific definition since the 1960s (adamantane family materials). If a term already has an accepted jargon definition in a scientific field, using the same term to mean something else is just sloppy and confusing.

I think it's wrong to think of using a construction obeying the normal English rules of word construction as creating a new term. If I said that a person was "unwelcomable" that wouldn't really be inventing a new term despite the fact that it doesn't appear in a dictionary. It's still a normal English word because it's a normal English construction.

Yes, diamondoid as referring to the adamantane family might go back to the 1960s, but in practice how many people understand it that way, 100,000? In theory everyone who has taken high school physics should understand the difference between speed and velocity, but as people use it, velocity is still most commonly used as a synonym for speed, and I think it's useless to try to police that. And likewise for "-oid" constructions that happen to collide with some field's technical usage, if the construction isn't used in the formal context of that field.