After reading this post, I found myself puzzling over the following question: why is Tetlock-style judgmental forecasting so popular within EA, but not that popular outside of it?

From what I know about forecasting (admittedly not much), these techniques should be applicable to a wide range of cases, and so they should be valuable to many actors. Financial institutions, the corporate sector in general, media outlets, governments, think tanks, and non-EA philanthropists all seem to face a large number of questions which could get better answers through this type of forecasting. In practice, however, forecasting is not that popular among these actors, and I couldn't think of or find a good reason why.[1]

The most relevant piece on the topic that I could find was Prediction Markets in The Corporate Setting. As the title suggests, it focuses on prediction markets (whose lack of success is similarly intriguing), but it also discusses forecasting tournaments to some extent. Although it does a great job of highlighting some important applicability issues for judgmental forecasting and prediction markets, it doesn't explain why these tools would be particularly useful for EAs. Nor do any of the reasons given there explain why financial institutions don't seem to be making widespread use of forecasting to get better answers to particularly decision-relevant questions, either internally or through consultancies like Good Judgment.

Answers to this question could be that this type of forecasting is:

- Useful for EA, but not (much) for other actors. This solution has some support if we think that EAs and non-EAs are efficiently pursuing their goals. If this is true, then it suggests that EAs should continue supporting research on forecasting and development of forecasting platforms, but should perhaps focus less on getting other actors to use it.[2] My best guess is that this is not true in general, though it is more likely to be true for some domains, such as long-run forecasting.

- Useful for EA and other actors. I think that this is the most likely solution to my question. However, as mentioned above, I don't have a good explanation for the situation that we observe in the world right now. Such an explanation could point us to the key bottlenecks to widespread adoption. Trying to overcome those bottlenecks might be a great opportunity for EA, as it might (among other benefits) substantially increase forecasting R&D.

- Not useful. This is the least likely solution, but it is still worth considering. Assessing the counterfactual value of forecasting for EA decisionmaking seems hard, and it could be the case that the decisions we would make without using this type of forecasting would be as good as (or maybe even better than) those we currently make.

It could be that I'm missing something obvious here, and if so, please let me know! Otherwise, I don't know if anyone has a good answer to this question, but I'd also really appreciate pieces of evidence that support/oppose any of the potential answers outlined above. For example, I would expect that by this point we have a number of relatively convincing examples where forecasting has led to decisionmaking that's considerably better than the counterfactual.

[1] This is not to say that forecasting isn't used at all. For example, it is used by the UK government's Cosmic Bazaar, The Economist runs a tournament at GJO, and Good Judgment has worked for a number of important clients. However, given how popular Superforecasting was, I would expect these techniques to be much more widely used now if they are as useful as they appear to be.

[2] Open Philanthropy has funded the Forecasting Research Institute (research), Metaculus (forecasting platform) and INFER (a program to support the use of forecasting by US policymakers).

My understanding is that the empirical basis for this type of forecasting comes from the academic research of Philip Tetlock, summarised in the book Superforecasting (I read the book recently; it's pretty good).

Essentially, the research signed people up to make large numbers of forecasts about world events and scored them on their accuracy. The research found that certain people were able to consistently outperform even top intelligence experts. These people used the sort of techniques familiar to EA: analysing problems dispassionately, breaking them down into pieces, putting percentage estimates on them, and doing frequent pseudo-Bayesian "updates". I say pseudo-Bayesian because a lot of them weren't actually applying Bayes' theorem; they were just bumping the percentages up and down, guided by the intuition they had developed, which apparently still worked.
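To make "scored on accuracy" and "updates" a bit more concrete, here is a rough sketch in Python. It assumes a simple binary Brier score (mean squared error between the stated probability and the 0/1 outcome, lower is better) and shows what a formal Bayes update looks like in odds form. The function names and the numbers are mine, purely for illustration; the exact scoring variant used in the research may differ from this simplified version.

```python
def brier_score(forecasts, outcomes):
    """Mean squared error between probability forecasts and 0/1 outcomes.

    Simplified binary form (an assumption for illustration): 0 is perfect,
    0.25 is what you get by always saying 50%.
    """
    if len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must have the same length")
    return sum((p - o) ** 2 for p, o in zip(forecasts, outcomes)) / len(forecasts)


def bayes_update(prior, likelihood_ratio):
    """Odds-form Bayes' theorem: posterior odds = prior odds * likelihood ratio.

    likelihood_ratio = P(evidence | event) / P(evidence | no event).
    """
    prior_odds = prior / (1 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1 + posterior_odds)


# Hypothetical example: a forecaster starts at 20% and sees evidence
# they judge to be three times as likely if the event will happen.
updated = bayes_update(0.20, 3.0)

# Hypothetical track record: three forecasts and how the questions resolved.
score = brier_score([0.9, 0.3, 0.6], [1, 0, 1])
```

The "bumping up and down" approach skips the explicit likelihood ratio and moves the number by feel, but the scoring step stays the same, which is what provides the feedback.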

One theory as to why this type of forecasting works so well is that it makes forecasting a skill with useful feedback: if a prediction fails, you can look at why, and adjust your biases and assumptions accordingly.

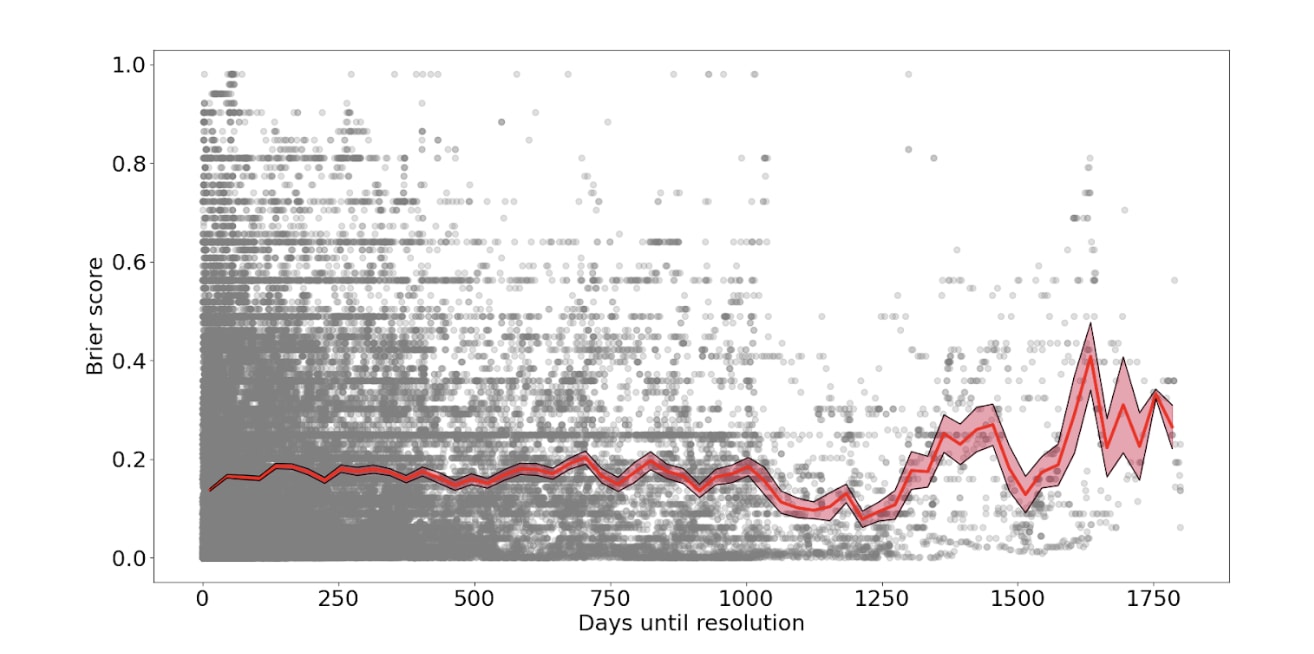

Two important caveats about this research are often overlooked. First, all these predictions were of bounded probability: the question-makers' estimated probability was in the range between 5% and 95%. So there were no million-to-one shots, because you'd have to make a million of them to check whether the predictions were correct. Second, they were all short-term predictions. Tetlock states multiple times in his book that he thinks forecasts beyond a few years will be fairly useless.
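The point about million-to-one shots can be illustrated with a quick back-of-the-envelope calculation. This is only a sketch under the assumption that the questions are independent; the function name and the specific numbers are mine. It computes the chance of seeing zero events across n questions when each has true probability p.

```python
def prob_no_events(p, n):
    """Chance that none of n independent events occurs, each with probability p."""
    return (1 - p) ** n


# With 1,000 resolved questions, a forecaster who says "one in a million"
# and one who says "one in ten thousand" would both almost certainly see
# zero events, so the track record cannot tell them apart.
one_in_a_million = prob_no_events(1e-6, 1_000)
one_in_ten_thousand = prob_no_events(1e-4, 1_000)

# In the bounded range it is different: at 5%, twenty questions already
# give a good chance of seeing at least one event.
at_least_one_at_5_percent = 1 - prob_no_events(0.05, 20)
```

Even with a million questions at one in a million each, there is still roughly a 37% chance of seeing no events at all, which is why calibration on very rare events is so hard to check.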

So, if the research holds up, the methods used by EA are the gold standard in short-term, bounded-probability forecasting. It makes sense to use them for that purpose. But I don't think this means that expertise in these problems will transfer to unbounded, long-term forecasts like "will AGI kill us all in 80 years". It's still useful to estimate those probabilities to more easily discuss the problem, but there is no reason to expect these estimates to have much actual predictive power.