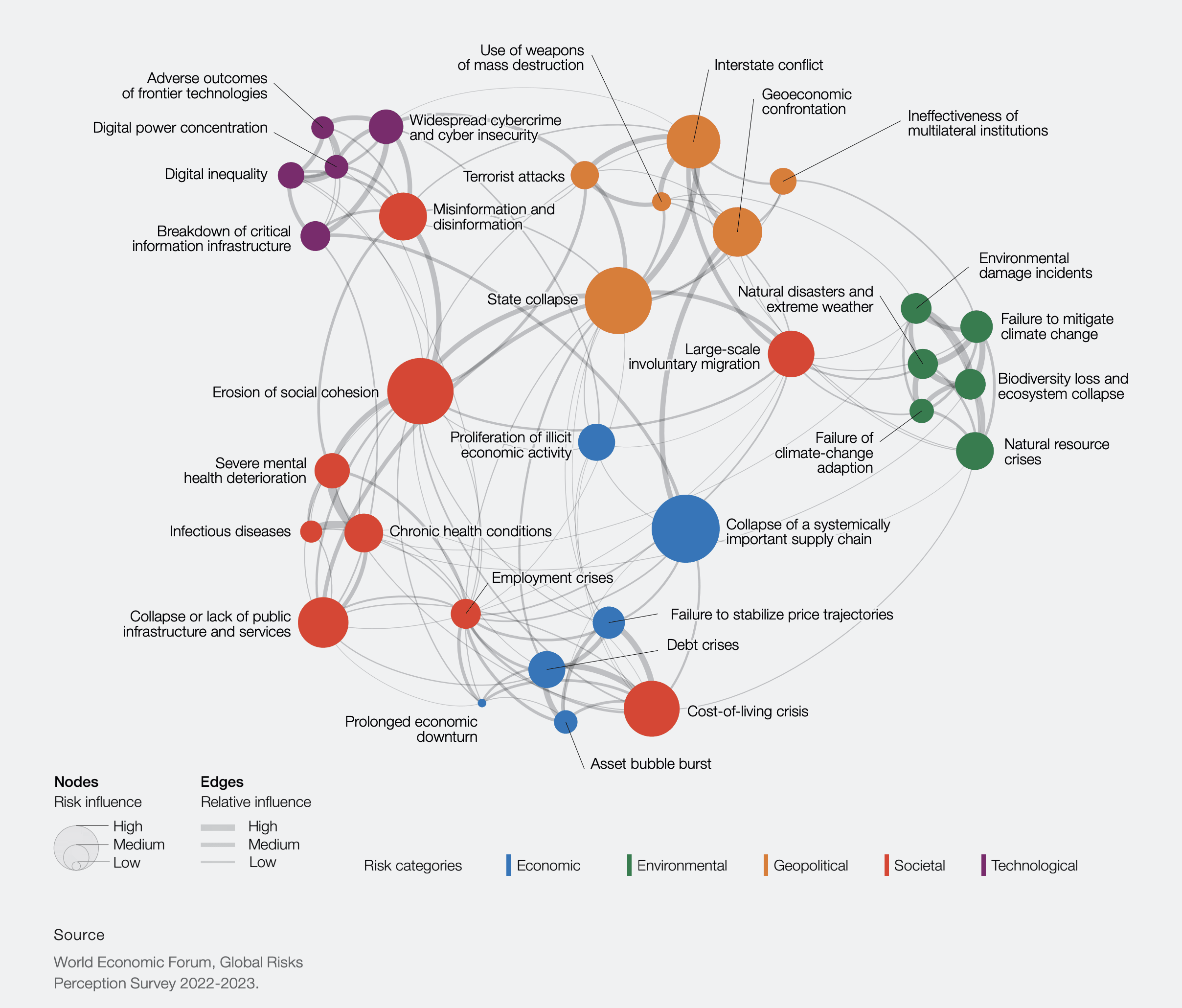

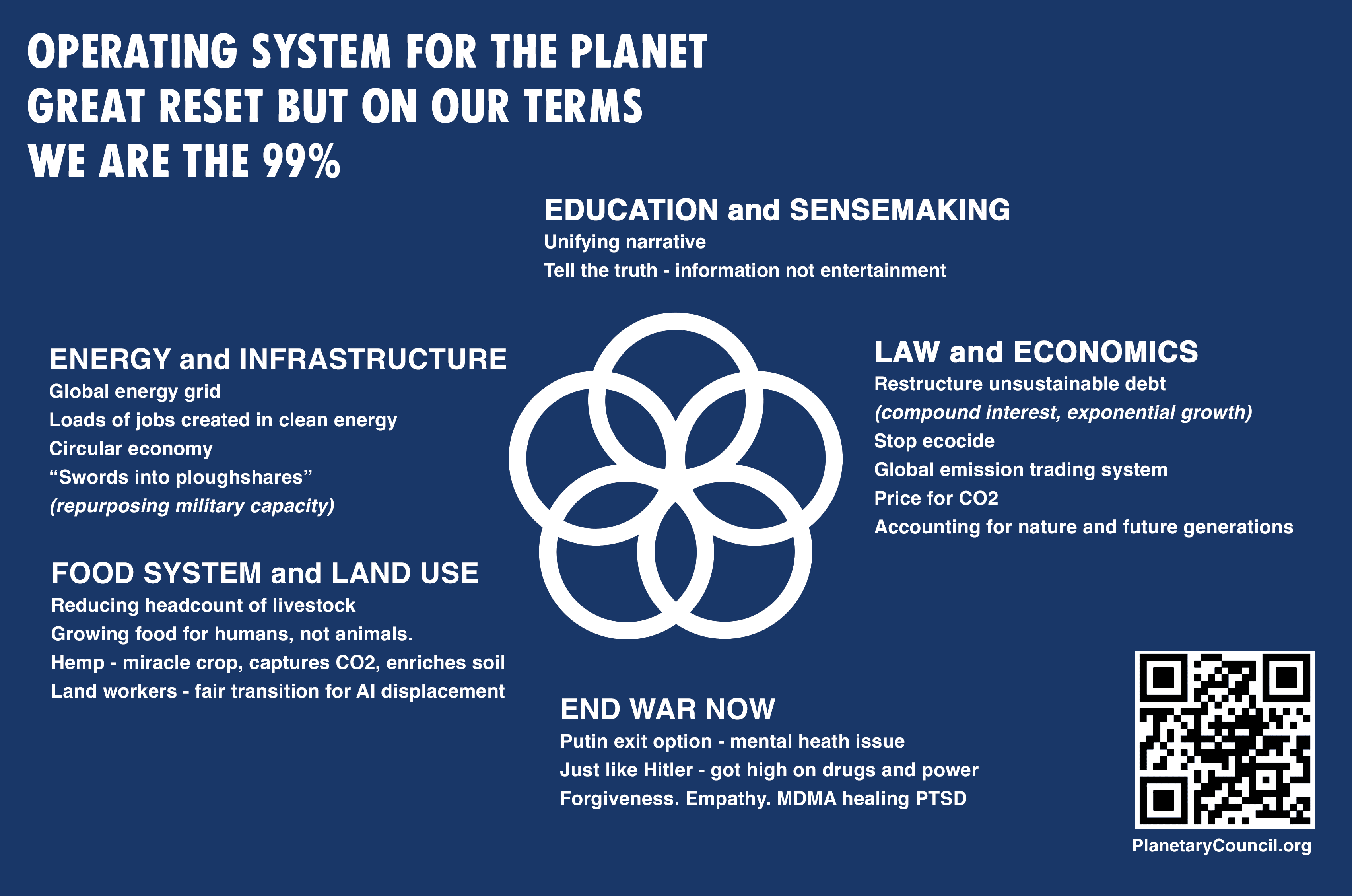

I have recently been engaging more with the content produced by Daniel Schmachtenberger and the Consilience Project, and I wonder why the EA community is not really engaging with this kind of work on the metacrisis, a term that alludes to the overlapping and interconnected nature of the multiple global crises that our nascent planetary culture faces. The core proposition is that we cannot get to a resilient civilization if we do not understand and address the underlying drivers that lead to global crises emerging in the first place. This work is overtly focused on addressing existential risk, and Daniel Schmachtenberger has become quite a popular figure in the YouTube and podcast sphere (e.g., see him speak at Norrsken). Thus, I would expect people to have come across this work. Still, I find no discussion, or only marginally related discussion, of this work in this forum (see results of some searches below), which surprises me.

What is your best explanation of why this is the case? Are the arguments so flawed that it is not worth engaging with this content? Do we expect "them" to come to "us" before we engage with the content openly? Does the content not resonate well enough with the "techno utopian approach" that some say is the mainstream EA way of thinking, so that other perspectives are simply neglected? Or am I simply the first to notice, be confused, and care enough about this to start investigating it?

Bonus Question: Do you think that we should engage more with the ongoing work around the metacrisis?

Related content on the EA Forum

- Systemic Cascading Risks: Relevance in Longtermism & Value Lock-In

- Interrelatedness of x-risks and systemic fragilities

- Defining Meta Existential Risk

- An entire category of risks is undervalued by EA

- Corporate Global Catastrophic Risks (C-GCRs)

- Effective Altruism Risks Perpetuating a Harmful Worldview

This would be surprising to me, since so much of tech progress is the creation of social coordination technologies (internet and social media platforms, cell phones and computers, new modes of transport, cheaper food and safer water that simplifies logistics of human movement, new institutions, new language...

Thank you for engaging with the content in a meaningful way and also taking the time to write up your experience. This answer was particularly helpful for me: it gave me a) the sense that there is a productive way to have more discussion on this topic and b) some ideas for how this might be framed. So thank you very much!

I intensively skimmed the first suggested article, "Technology is not Values Neutral. Ending the reign of nihilistic design", and found the analysis mostly lucid and free of political buzzwords. There's definitely a lot worth engaging with there. Similar to what you write, however, I got a sense of unjustified optimism in the proposed solution, which centers on analyzing second- and third-order effects of technologies during their development. Unfortunately, the article does not appear to acknowledge that predicting such societal effects seems really hard...