I’m annoyed by the way some Effective Altruists use the expression “value drift”. I will avoid giving examples so as not to "point fingers"; this isn't personal or about anyone specific :)

Note that I do overall appreciate the research that's been done on member dropout! My goals here are mostly to a) critique the way "value drift" has been used, and at the same time b) suggest other avenues to study value drift. I hope this doesn't come off in a bad way; I know it can be easy to critique and make research suggestions without doing it myself.

Here’s the way I’ve seen EAs use the term "value drift", and why it bothers me.

1. A focus on one value category

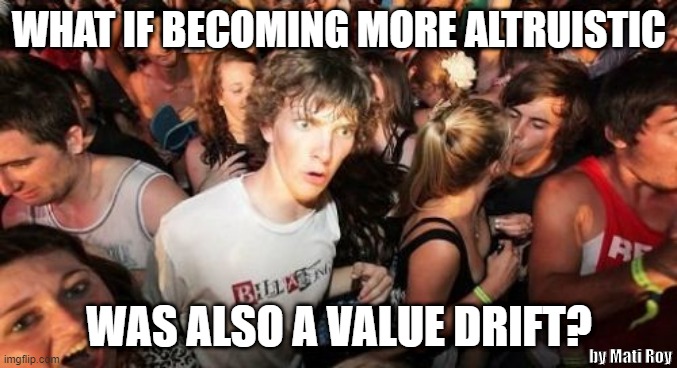

They mean something much more narrow, which is “someone becoming less of an effective altruist”. They never mention drifts from some altruistic values to other altruistic values or from some non-altruistic values to other non-altruistic values (ex.: going from a positive hedonist to a negative hedonist). And they especially never frame becoming more altruistic as a value drift, even though I have the impression a bunch of Effective Altruists approach outreach like that (but not everyone; ex.: Against moral advocacy). I feel it's missing perspective to frame 'value' in such a narrow idiosyncratic way.

2. A focus on the community, not the values

I think they often intrinsically care about “quitting the community of Effective Altruism” as opposed to becoming less of an effective altruist. Maybe this is fine, but don't call it a value.

a) Checking when it's desirable

I would be interested in seeing when quitting the community is desirable, even without a value drift. Some examples might include:

- if you're focusing on a specific intervention with its own community,

- if you're not interested in the community (which doesn't mean you can't do effective altruism) and just want to donate to some prizes,

- if you're not an altruist and were just in the community to hang out with cool people,

- if you have personal issues that need to be prioritized.

b) Checking when it's not actually value drift

A lot of the data are naturally just proxies or correlational, and they're likely good enough to be used the way they are. But I would be curious to know how much value-drift-like behavior is not actually caused by a value drift. For example:

- someone can stop donating because they took a pay cut to do direct work,

- someone can stop donating because they had planned on giving more early in their career given they thought there would be more opportunities early on.

Someone can also introspect better on their values and change their behavior accordingly, even though their fundamental values haven't changed.

Toon Alfrink argues that most member dropouts aren't caused by value drift, but rather incentive drift; see zir argument in Against value drift. That seems overall plausible to me, except that I still put higher credence on intrinsic altruism in humans.

3. A focus on involvement in the community, not on other interventions

The main intervention they care about to reduce drifting towards non-altruism is increasing involvement with the Effective Altruism community. I’ve not seen any justification for focusing (exclusively) on that. Other areas that seem-to-me worth exploring include:

1. Age-related changes, such as early brain development, puberty, senescence, and menopause

2. Change in marital status, level of physical intimacy, pregnancy, and raising children

3. Change in abundance / poverty / slack

4. Change in level of political / social power

5. Change in general societal trust, faith in humanity, altruistic opportunities

6. Exposure to contaminants

7. Consumption of recreational drugs, medicinal drugs, or hormones

8. Change in nutrition

9. Brain injuries

I haven’t really done any research on this. But 1, 2, 3, 4, and 5 seem to have plausible evolutionary mechanisms to support them. I think I’ve seen some research providing evidence on 1, 2, 3, 4, 5, and 7, as well as some evidence against 7. 9 seems like a straightforward plausible mechanism. And 6 and 8 seem to often have an impact on that sort of thing.

I do think community involvement also has a plausible evolutionary mechanism, as well as a psychological one. And there are reasons why some of the areas I proposed are less interesting to study: some have a Chesterton's fence around them, some seem to already be taken care of, some seem hard to influence, etc.

Additional motivation to care about value drift

I'm also interested in this from a survival perspective. I identify with my values, so having them changed would correspond to microdeaths. To the extent you disvalue death (yours and others'), you might also care about value drift for that reason. But that's a different angle: seeing value drift as an inherent moral harm instead of an instrumental one.

Summary of the proposal

If you're interested in studying member retention / member dropout, that's fine, but I would rather you call it just that.

Consider also checking when a member dropout is fine, and when a behavior that looks like a dropout isn't.

It also seems to me that it would be valuable to research drifts in other values, as well as other types of interventions to reduce value drift. Admittedly, part of my interest comes from disvaluing value drift intrinsically.

Notification of further research

I'm aware of two people who have written on other interventions. If/once they publish their work, I will likely post it in the comment section. I might also post other related pieces in the comment section as I see them. So if you're interested, you can subscribe to the comment section (below the title: "..." > "Subscribe to comments").

Related work

- A Qualitative Analysis of Value Drift in EA

- Concrete Ways to Reduce Risks of Value Drift and Lifestyle Drift

Search the forum for "value drift" for more.

The first point I'd make here is that I'd guess people writing on these topics aren't necessarily primarily focused on a single dimension from more to less altruism. Instead, I'd guess they see the package of values and ideas associated with EA as being particularly useful and particularly likely to increase how much positive impact someone has. To illustrate, I'd rather have someone donating 5% of their income to any of the EA Funds than donating 10% of their income to a guide dog charity, even if the latter person may be "more altruistic".

I don't think EA is the only package of values and ideas that can cause someone to be quite impactful, but it does seem an unusually good and reliable package.

Relatedly, I care a lot less about drifts away from altruism among people who aren't likely to be very effectively altruistic anyway than among people who are.

So if I'm more concerned about drift away from an EA-ish package of values than just away from altruism, and if I'm more concerned about drift away from altruism among relatively effectiveness-minded people than less effectiveness-minded people, I think it makes sense to pay a lot of attention to levels of involvement in the EA community. (See also.) Of course, someone can keep the values and behaviours without being involved in the community, as you note, but being in the community likely helps a lot of people retain and effectively act on those values.

And then, as discussed in Todd's post, we do have evidence that higher levels of engagement with the EA community reduce drop-out rates. (Drop-out is not identical to value drift, and that's worth noting, but it still seems important and correlated.)

Finally, I think most of the other 9 areas you mention seem like they already receive substantial non-EA attention and would be hard to cost-effectively influence. That said, this doesn't mean EAs shouldn't think about such things at all.

The post Reducing long-term risks from malevolent actors is arguably one example of EAs considering efforts that would have that sort of scope and difficulty and that would potentially, in effect, increase altruism (though that's not the primary focus/framing). And I'm currently doing some related research myself. But it does seem like things in this area will be less tractable and neglected than many things EAs think about.

I see, thanks!