LessWrong cross-post

This article is the script of the YT video linked above. It is an animated introduction to the idea of longtermism. The video also briefly covers "The Case for Strong Longtermism" by William MacAskill and then goes over Nick Bostrom's "Astronomical Waste: The Opportunity Cost of Delayed Technological Development". If you would like a refresher on longtermism, you can read this script; if you are curious about the animations, head to the video. This time the narrator is not me, but Robert Miles.

Consider this: scientific progress and the collective well-being of humanity have been on an upward trajectory for centuries, and if it continues, then humanity has a potentially vast future ahead in which we might inhabit countless star systems and create trillions upon trillions of worthwhile lives.

This is an observation that has profound ethical implications, because the actions we take today have the potential to impact our vast future, and therefore influence an astronomically large number of future lives.

Hilary Greaves and William MacAskill, in their paper “The Case for Strong Longtermism” define strong longtermism as the thesis that says, simplifying a little bit: “in a wide class of decision situations, the best action to take is the one that has the most positive effects on the far future”. It’s easy to guess why in light of what I just said. This is a consequence of the fact that the far future contains an astronomically large number of lives.

The question is: what are the actions that have the most positive effect on the far future? There are a few positions one could take, and if you want a deep dive into all the details I suggest reading the paper that I mentioned [note: see section 3 of "The Case for Strong Longtermism"].

In this video, I will consider two main ways in which we could most positively affect the far future. They have been brought forward by Nick Bostrom in his paper “Astronomical Waste: The Opportunity Cost of Delayed Technological Development”.

Bostrom writes: “suppose that 10 billion humans could be sustained around an average star. The Virgo supercluster could contain 10^23 humans”.

For reference, 10^23 is 10 billion humans multiplied by 10 trillion stars! And remember that one trillion is one thousand times a billion.
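The multiplication can be checked in a few lines. This is only a sanity check of the round numbers used in the narration; the constant names are illustrative and not taken from Bostrom's paper.

```python
# Sanity check of the 10^23 figure, using the round numbers from the script.
HUMANS_PER_STAR = 10 * 10**9          # 10 billion humans sustained per star
STARS_IN_SUPERCLUSTER = 10 * 10**12   # 10 trillion stars (1 trillion = 1,000 billion)

total_humans = HUMANS_PER_STAR * STARS_IN_SUPERCLUSTER
print(f"{total_humans:.0e}")  # prints 1e+23
```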

In addition, you need to also consider that future humans would lead vastly better lives than today’s humans due to the enormous amount of technological progress that humanity would have reached by then.

All things considered, the assumptions made are somewhat conservative - estimating 10 billion humans per star is pretty low considering that Earth already contains almost 8 billion humans, and who knows what may be possible with future technology?

I guess you might be skeptical that humanity has the potential to reach this level of expansion, but that’s a topic for another video. And the fact that it is not a completely guaranteed scenario doesn’t harm the argument (back to this later).

Anyway, considering the vast amount of human life that the far future may contain, it follows that delaying technological progress has an enormous opportunity cost. Bostrom estimates that just one second of delayed colonization equals 100 trillion human lives lost. Therefore, taking action today to accelerate humanity’s expansion into the universe yields an impact of 100 trillion human lives saved for every second that it is brought closer to the present.
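A rough way to see where a per-second figure of this size could come from is sketched below. It is a back-of-the-envelope reconstruction, not Bostrom's own derivation: the 100-year lifespan is an assumption that the script does not state, and the result only needs to land within an order of magnitude of the quoted number.

```python
# Back-of-the-envelope estimate of potential lives lost per second of delay.
# ASSUMPTION (not stated in the script): one "life" lasts about 100 years, so a
# standing population of 10^23 corresponds to 10^23 lives per century.
TOTAL_HUMANS = 10**23
YEARS_PER_LIFE = 100                       # assumed lifespan
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60   # about 3.16e7 seconds

lives_per_second = TOTAL_HUMANS / (YEARS_PER_LIFE * SECONDS_PER_YEAR)
print(f"{lives_per_second:.1e}")  # tens of trillions of lives per second
```

Under these assumptions the result is in the tens of trillions, the same ballpark as the 100 trillion quoted above; a shorter assumed lifespan would push it higher.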

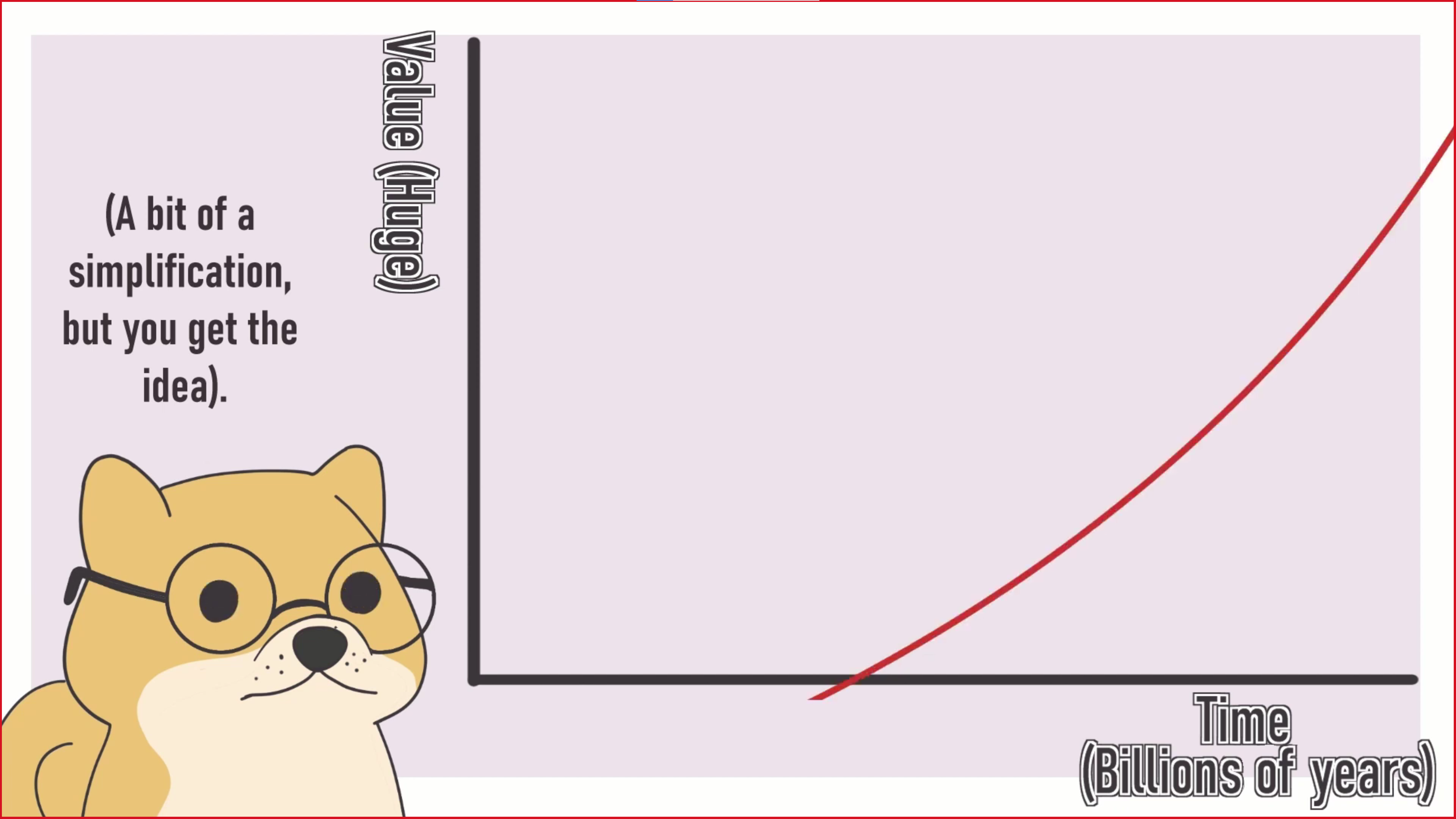

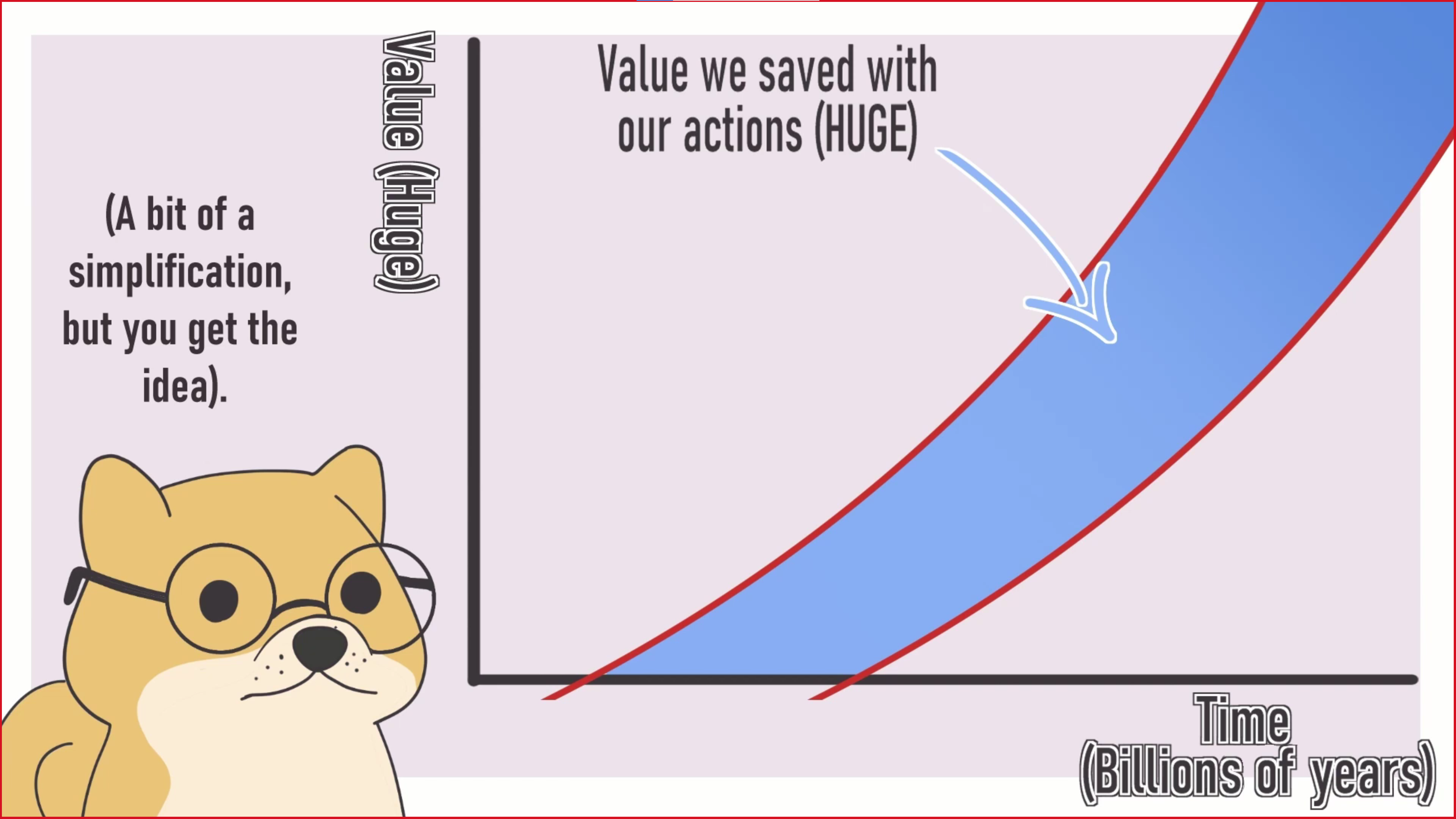

You can picture this argument like this. Here’s a graph with time on the horizontal axis and value (human lives, for example) on the vertical axis. We assume progress to be positive over time. If we accelerate key technological discoveries early on, then we are shifting all of this line to the left. See this area between the two lines? This is all the value we save.
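The area between the two lines can also be computed in a toy model. Everything in this sketch is an illustrative assumption (a linear ramp that plateaus, arbitrary units, a 10-year speed-up); it is not a model from Bostrom's paper, only a way to make the picture concrete.

```python
# Toy model of the graph: value per year rises linearly, then plateaus.
# All numbers are illustrative assumptions in arbitrary units.
def value_per_year(t, ramp_years=100, plateau=1.0):
    """Value realised in year t: zero up to t=0, a linear ramp, then flat."""
    if t <= 0:
        return 0.0
    return min(t / ramp_years, 1.0) * plateau


def total_value(shift_years, start=-50, end=1000):
    """Total value over the window when progress arrives `shift_years` earlier."""
    return sum(value_per_year(t + shift_years) for t in range(start, end))


baseline = total_value(shift_years=0)
accelerated = total_value(shift_years=10)
gained = accelerated - baseline  # the area between the two lines
print(round(gained, 6))  # close to plateau * shift, i.e. about 10
```

In this toy setup the gain is roughly the plateau value multiplied by the number of years gained, which is why the size of the eventual plateau matters so much to the argument.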

But. Don’t get the idea that this is even close to the most impactful thing we could do today.

Consider this: what if humanity suffers a catastrophe so large as to either wipe it out or curtail its potential forever? This would annihilate all of humanity’s future value in one go, which potentially means billions of years of lost value. Since we realistically measure the impact of accelerating scientific progress in just years or decades of accelerated progress, from an impact perspective, reducing the risk of an existential catastrophe trumps hastening technological development by many orders of magnitude. Bostrom estimates that a single percentage point of existential risk reduction yields the same expected value as advancing technological development by 10 million years.
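One plausible way to arrive at a figure like 10 million years is sketched below. It is a guess at the reasoning rather than Bostrom's stated derivation, and it rests on two labeled assumptions: that the future lasts about one billion years, and that value per year is roughly constant over that period.

```python
# How many years of speed-up match a one-percentage-point cut in existential risk?
# ASSUMPTIONS: the future lasts about one billion years, and value per year is
# roughly constant, so "years of future" can stand in for "value".
FUTURE_DURATION_YEARS = 1_000_000_000
RISK_REDUCTION = 0.01  # one percentage point

# Expected years of future gained by making survival 1 point more likely.
equivalent_years = RISK_REDUCTION * FUTURE_DURATION_YEARS
print(f"{equivalent_years:,.0f} years")  # prints 10,000,000 years
```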

Now, I mentioned “expected value”. What is that? Well, it’s an idea that’s at the heart of decision theory and game theory, and here we’re using it to convert risk prevention into impact. Basically, you multiply an impact by its probability of occurring in order to estimate the impact in the average case. For example: if there is a 16% probability of human extinction, as estimated by Toby Ord, then to get a number for how much value we are losing in expectation we multiply 0.16 by the number of future human lives. Note that when measuring the impact of accelerating technological progress, or even of distributing malaria nets in Africa, we must also calculate the expected value of our impact; we can’t use the raw impact without weighting it by a probability. No intervention has a 100% probability of working.
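The multiplication described above looks like this in code. The function name is illustrative, and the inputs are simply the figures already quoted in this script (0.16 and 10^23), not independent estimates.

```python
def expected_value(probability, impact):
    """Weight an impact by the probability that it actually occurs."""
    return probability * impact


P_CATASTROPHE = 0.16    # the probability used in the script
FUTURE_LIVES = 10**23   # the number of future lives quoted earlier

expected_loss = expected_value(P_CATASTROPHE, FUTURE_LIVES)
print(f"{expected_loss:.1e}")  # prints 1.6e+22
```

The same function applies to any intervention: its raw impact is multiplied by the probability that it works.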

In his paper, Bostrom also makes the case that trying to prevent existential risk is probably the most impactful thing that someone who cared about future human lives (and lives, in general) could do. But there is another ethical point of view taken into consideration, which is called the “person affecting view”. If you don’t care about giving life to future humans who wouldn’t have existed otherwise, but you only care about present humans, and humans that will come to exist, then preventing existential risk and advancing technological progress have a similar impact. Here is the reasoning:

All the impact would derive from increasing the chance that current humans will get to experience extremely long and fulfilling lives as a result of hastening transformative technological development, potentially enabling virtually unlimited lifespans and enormously improving the quality of life for many people alive today or in the relatively near future.

If we increase this chance, either by reducing existential risk or by hastening technological progress, our impact will be more or less the same, and someone holding a person affecting view ought to balance the two.

Note: this is mostly about your earlier videos. I think this one was better done, so maybe my points are redundant. Posting this here because the writer has expressed some unhappiness with reception so far. I've watched the other videos some weeks ago and didn't rewatch them for this comment. I also didn't watch the bitcoin one.

First off, I think trying out EA content on youtube is really cool (in the sense of potentially high value), really scary, and because of this really cool (in the sense of "of you to do this"). Kudos for that. I think this could be really good and valuable if you incorporate feedback and improve over time.

Some reasons why I was/am skeptical of the channel when I watched the videos:

In general, the kind of questions I would ask myself, and the reason why I think all of the above are a concern are:

I'm somewhat concerned that the answer for too many people would be "no" for 3, and "yes" for 6. Obviously there will always be some "no" for 3 and some "yes" for 6, especially for such a broad medium like youtube, and balancing this is really difficult. (And it's always easier to take the skeptical stance.) But I think I would like to see more to tip the balance a bit.

Maybe one thing that's both a good indicator but also important in its own right is the kind of community that forms in the comment section. I've so far been moderately positively surprised by the comment section on the longtermism video and how you're handling it, so maybe this is evidence that my concerns are misplaced. It still seems like something worth paying attention to. (Not claiming I'm telling you anything new.)

I'm not sure what your plans and goals are, but I would probably prioritise getting the overall tone and community of the channel right before trying to scale your audience.

Some comments on this video:

Again, I think it is really cool and potentially highly valuable that you're doing this, and I have a lot of respect for how you've handled feedback so far. I don't want to discourage you from producing further videos, just want to give an idea of what some people might be concerned about/why there's not more enthusiasm for your channel so far. As I said, I think this video is definitely, IMO, a step in the right direction, and I find this encouraging.

edit: Just seen the comment you left on Aaron Gertler's comment about engagement. Maybe this is a crux.

Update: as a result of feedback here and in other comments (and some independent thinking), we made a few updates to the channel.

I have also read CEA's models of community building, which were suggested in some comments.

The future direction the channel will take is more important than previous videos, but still, I wanted to let people know that I made these changes. I wanted to make a post to explain both these changes and future directions in detail, but I don't know if I'll manage to finish it, so in the meanwhile, I figured that it would probably be helpful to comment here.

I feel like this is the most central criticism I had so far. Which means it is also the most useful. I think it's very likely that what you said is also the sentiments of other people here.

I think you're right about what you say and that I botched the presentation of the first videos. I'll defend them a little bit here on a couple of points, but not more. I will not say much in this comment other than this, but know that I'm listening and updating.

1. The halo effect video argues in part that the evolution of that meme has been caused by the halo effect. It is certainly not an endorsement.

2. The truth is cringe video is not rigorous and was not meant to be rigorous. It was mostly stuff from my intuitions and pre-existing knowledge. The example I used made total sense to me (and I considered it interesting because it was somewhat original), but heh apparently only to me.

Note: I'm not going to do only core EA content (edit: not even close actually). I'm trying to also do rationality and some rationality-adjacent and science stuff. Yes, currently the previous thumbnails are wrong. I fixed the titles more recently. I'm not fond of modifying previous content too hard, but I might make more edits.

Edit to your edit: yes.

Great work! I'm really excited about seeing more quality EA/longtermism-related content on YouTube (in the mold of e.g. Kurzgesagt and Rob Miles) and this was a fun and engaging example. Especially enjoyed the cartoon depictions of Nick Bostrom and Toby Ord :)

Quick note: the link in the video description for 'The Case for Strong Longtermism' currently links to an old version. It has since been significantly revised, so you might consider linking to that instead.

Agreed!

I was initially a little worried when I saw that one of the first videos this channel put up had "longtermism" right in the title, since explicitly using an EA/longtermism branding increases the potential downsides from giving a lot of people a bad first impression or something. (See also the awareness/inclination model of movement growth.) But:

Some caveats to that positive view (which I'm writing here because Writer indicated elsewhere an interest in feedback):

Agreed. I don't think this video got anything badly wrong, but do be aware that there are plenty of EA types on this forum and elsewhere who would be happy to read over and comment on scripts.

(But again, I do think the video is good and I'm glad it exists and is getting viewed!)

This makes me think that I could definitely use more feedback. It makes me kind of sad that I could have added this point myself (together with making Toby Ord's estimate more precise) and didn't because of...? I don't know exactly.

Edit: one easy thing I could do is to post scripts in advance here or on LW and request feedback (other than messaging people directly and waiting for their answers, although this is often slow and fatiguing).

Edit 2: Oh, and the Slack group. Surely more ideas will come to me, I'll stop adding edits now.

Hey- Don't be sad. It's really brave to do work like this and make it public - thank you :) I'd definitely recommend this to newcomers (Indeed, I just did! Edited to add: The animations are so cool here!). You might consider sending future drafts through to CEA's press team- they have a large network of people and I have always found the quality of their feedback to be really high and helpful! Thanks again for this!

Hey, thanks a lot for this comment. It did brighten my mood.

I think I'll definitely want to send scripts to CEA's press team, especially if they are heavily EA related like this one. Do you know how I can contact them? (I'm not sure I know what CEA's press team is. Do you mean that I should just send an e-mail to CEA via their website?)

Absolutely :) Sky Mayhew <sky@centreforeffectivealtruism.org> and <media@centreforeffectivealtruism.org>. Sky is incredibly kind and has always given me brilliant feedback when I've had to do external-facing stuff before (which I find intensely nerve-wracking and have to do quite a lot of). I can't speak more highly of their help. The people who have given me feedback on my sharing your video have mainly done so in the form of WOW face reactions on Slack FWIW- I'll let you know if I get anything else. My husband also loves this type of stuff and was psyched to watch your video too. :)

Thanks a lot! This is definitely going to be helpful :)

FWIW, I think it's almost inevitable that a script of roughly the length you had will initially contain some minor errors. I don't think you should feel bad about that or like it's specific to you or something. I think it just means it's good to get feedback :)

(And I think these minor errors in this case didn't, like, create notable downside risk or something, and it is still a good video.)

Thank you a lot for this feedback. I was perceiving that the main worry people here were having about Rational Animations was potential downsides, and that they were having this feeling mainly due to our artistic choices. (Because I was getting few upvotes, some downvotes, and feedback that didn't feel very "central", I figured that there was probably something about the whole thing that was putting off some people but that they weren't able to put their finger on. Am I correct in this assessment?) I feel like I want to kind of update the direction of the channel because of this, but I need some time to reflect and maybe write a post to understand if what I'm thinking is what people here are also thinking.

Also, stepping back, I think you mainly need to answer two questions, which suggest different types of required data, neither of which is karma on the Forum/LessWrong:

I think Q1 is best answered through actively soliciting feedback on video ideas, scripts, rough cuts, etc. from specific people or groups who are unusually likely to have good judgement on such things. This could be people who've done somewhat similar projects like Rob Miles, could be engaged EAs who know about whatever topic you're covering in a given vid, could be non-EAs who know about whatever topic you're covering in a vid, or groups in which some of those types of people can be found (e.g., the Slack I made).

I think Q2 is best answered by the number of views your videos to date have gotten, the likes vs dislikes, the comments on YouTube, etc.

I think Forum/LessWrong karma does serve as weak evidence on both questions, but only weak evidence. Karma is a very noisy and coarse-grained metric. (So I don't think getting low karma is a very bad sign, and I think there are better things to be looking at.)

This is very very helpful feedback, thank you for taking the time to give it (here and on the other post). Also, I'm way less anxious getting feedback like this than trying to hopelessly gauge things by upvotes and downvotes. I think I need to talk more to individual EAs and engage more with comments/express my doubts more like I'm doing now. My initial instinct was to run away (post/interact less), but this feels much better, besides being more helpful.

Yeah, I think it is worth often posting, but that this is only partly because you get feedback and more so for other reasons (e.g., making it more likely that people will find your post/videos if it's relevant to them, via seeing it on the home page, finding it in a search, or tags). And comments are generally more useful as feedback than karma, and comments from specific people asked in places outside the Forum are generally more useful as feedback than Forum comments.

(See also Reasons for and against posting on the EA Forum.)

I think maybe it's more like:

I think that this makes not upvoting a pretty reasonable choice for someone who just sees the post/video and doesn't take the time to think about the pros and cons; maybe they want to neither encourage nor discourage something that's unfamiliar to them and that they don't know the implications of. I wouldn't endorse the downvotes myself, but I guess maybe they're based on something similar but with a larger degree of worry.

Or maybe some people just personally don't enjoy the style/videos, separate from their beliefs about whether the channel should exist.

I wouldn't guess that lots of people are actively opposed to specific artistic choices. Though I could of course be wrong.

Thanks! Just updated the link :)

I should also mention that Toby Ord's 1/6 (17ish%) figure is for the chance of extinction this century, which isn't made totally clear in the video (although I appreciate not much can be done about that)!

Updated the description with "Clarification: Toby Ord estimates that the chance of human extinction this century (and not in general) is around 1/6, which is 16.66...%"

Actually, that's his estimate of existential catastrophe this century, which includes both extinction and unrecoverable collapse or unrecoverable dystopia. (I seem to recall that Ord also happens to think extinction is substantially more likely this century than the other two types of existential catastrophe, but I can't remember for sure, and in any case his 1/6 estimate is stated as being about existential catastrophe.)

Maybe you could say: "Clarification: Toby Ord estimates that the chance of an existential catastrophe (not just extinction) this century (not across all time) is around 1/6; his estimate for extinction only might be lower, and his estimate across all time is higher. For more on the concept of existential catastrophe, see https://www.existential-risk.org/concept.pdf"

(Or you could use a more accessible link, e.g. https://forum.effectivealtruism.org/tag/existential-catastrophe-1 )

Copy-pasted your text and included both links with an "and". Also... any feedback on the video? This post's performance seems a little disappointing to me, as always.

Edit: oh I just see now that you replied above

(Thanks for being receptive to and acting on this and Fin's feedback!)

Thanks, good catch! Possibly should have read over my reply...

Thanks for making both those updates :)

I'm not excited about the astronomical waste theory as part of introductory longtermist content. I think much smaller/more conservative numbers get exactly the same point across without causing as many people to judge the content as hyperbolic/trying to pull one over on them.

That said, I was impressed by the quality level of the video, and the level of engagement you've achieved already! If every EA-related video or channel had to be perfect right away, no one would ever produce videos, and that's much worse than having channels that are trying to produce good content and seeking feedback as they go.

*****

One really easy win for videos like this: I counted 12 distinct characters (not counting Bostrom, Ord, or the monk character who shows up in several videos). All of them were animated in the style I think of as "white by default", including the citizens of 10,000 BC (which may predate white skin entirely).

This isn't some kind of horrible mistake, but it seems suboptimal for trying to reach a broad audience (which I figure is a big reason to make animated video content vs. other types of content).

My favorite example of effortless diversity (color, age, gender, etc.) in rationalist-adjacent cartoon sci-fi is Saturday Morning Breakfast Cereal.

On (1), all fair questions.

To sum it up, I'm critiquing this small section of an (overall quite good) video based on my guess that it wasn't great for engagement (compared to other options*), rather than because I think it was unreasonable. The "not great for engagement" is some combination of "people sometimes think gigantic numbers are sketchy" and "people sometimes think gigantic numbers are boring", alongside "more conservative numbers make your point just as well".

*Of course, this is easy for me to say as a random critic and not the person who had to write a script about a fairly technical paper!

I left two other comments with some feedback, but want to note:

I strong-upvoted this EA Forum post because I really like that you are sharing the script and video with the community to gather feedback

I refrained from liking the video on YouTube and don't expect to share it with people not familiar with longtermism as a means of introducing them to it because I don't think the video is high enough quality for it to be a good thing for more people to see it. I'd like to see you gather more feedback on the scripts of future videos related to EA before creating the videos.

I think your feedback in the other comment is mostly correct, but... aren't those relatively minor concerns? Do you think the video actually has net negative impact in its present form? Some of your criticism is actually about Bostrom's paper, and that seems like it had a fairly positive impact.

Assuming this is what your comment is in reference to: I looked at Bostrom's paper after and I think his sentence about 1% reduction in x-risk being like a 10M+ year delay before growth is actually intuitive given his context (he mentioned that galaxies exist for billions of years just before), so I actually think the version of this you put in the script is significantly less intuitive. The video viewer also only has the context of the video up to that point whereas the paper reader has a lot more context from the paper. Also videos should be a lot more comprehensible to laypeople than Bostrom papers.

I think the question of whether the video will be net negative on the margin is complicated. A more relevant question that is easier to answer is "is it reasonable to think that a higher quality video could be made for a reasonable additional amount of effort and would that be clearly better on net to have given the costs and benefits?"

I think the answer to this is "yes" and if we use that as the counterfactual rather than no video at all, it seems clear that you should target producing that video, even if your existing video is positive on net relative to no video.

Oh sure, without a doubt, if there is a better video to be made with little additional effort, making that video is obviously better, no denying that.

I asked that question because you said:

And that's way more worrying than "this video could be significantly improved with little effort". At least I would like to start with a "do no harm" policy. Like, if the channel does harm then the channel ought to be nuked if the harm is large enough. If the channel has just room for improvement that's a different kettle of fish entirely.

Gotcha. I don't actually have a strong opinion on the net negative question. I worded my comment poorly.

Thanks for posting here for feedback. In general I think the video introduced too many ideas and didn't explain them enough.

Some point by point feedback:

It seems inappropriate to include "And remember that one trillion is one thousand times a billion" much later after saying "humanity has a potentially vast future ahead in which we might inhabit countless star systems and create trillions upon trillions of worthwhile lives" in the first sentence. If people didn't know what the word "trillion" meant later, then you already lost them at the first sentence.

Additionally, since I think that essentially all the viewers you care about do already know what "trillion" means, including "remember that one trillion is one thousand times a billion" will likely make some of them think that the video is created for a much less educated audience than them.

"I guess you might be skeptical that humanity has the potential to reach this level of expansion, but that’s a topic for another video." Isn't this super relevant to the topic of this video? The video is essentially saying that future civilization can be huge, but if you're skeptical of that we'll address that in another video. Shouldn't you be making the case now? If not, then why not just start the video with "Civilization can be astronomically large in the future. We'll address this claim in a future video, but in this video let's talk about the implications of that claim if true. [Proceed to talk about the question of whether we can tractably affect the size.]"

"But there is another ethical point of view taken into consideration, which is called the “person affecting view”." I don't think you should have included this, at least not without saying more about it. I don't think someone who isn't already familiar with person affecting views would gain anything from this, but it could very plausibly just be more noise to distract them from the core message of the video.

"If you don’t care about giving life to future humans who wouldn’t have existed otherwise, but you only care about present humans, and humans that will come to exist, then preventing existential risk and advancing technological progress have a similar impact." I think I disagree with this and the reasoning you provide in support of it doesn't at all seem to justify it. For example, you write "If we increase this chance, either by reducing existential risk or by hastening technological progress, our impact will be more or less the same" but don't consider the tractability or neglectedness of advancing technological progress or reducing existential risk.

For the video animation, when large numbers are written out on the screen, include commas after every three digits so the viewers can actually tell at a glance what number is written. Rob narrates "ten to the twenty-three humans" and we see 100000000000000000000000 appear on the screen with 0's continuously being added and it gives me the impression that the numbers are just made-up (even though I know they're not).

"100 billion trillion lives" is a rather precise number. I'd like for you to use more careful language to communicate if things like this are upper or lower bounds (e.g. "at least" 100 billion trillion lives) or the outputs of specific numerical estimates (in which case, show us what numbers lead to that output).

I didn't recall this from Bostrom's work and had to pause the video after hearing it to try to understand it better. I wish you had either explained the estimate or provided a footnote citation to the exact place viewers could find where Bostrom explains this estimate.

After thinking about it for a minute, my guess is that Bostrom's estimate comes from a few assumptions: (a) Civilization reaches its full scale (value realized per year) in a very short amount of time relative to 10 million years and (b) Civilization lasts a billion years. Neither of these assumptions seems like a given to me, so if this is indeed where Bostrom's (rough) estimate came from it would seem that you really ought to have explained it in the video or something. I imagine a lot of viewers might just gloss over it and not accept it.