This post summarizes the new paper *Current and Near-Term AI as a Potential Existential Risk Factor*, authored by Benjamin Bucknall and Shiri Dori-Hacohen. The paper diverges from the traditional focus on the potential risks of artificial general intelligence (AGI), instead examining how existing AI systems may pose an existential threat. The authors underscore the urgency of mitigating the harms from these systems by detailing how they might contribute to catastrophic outcomes.

Key points:

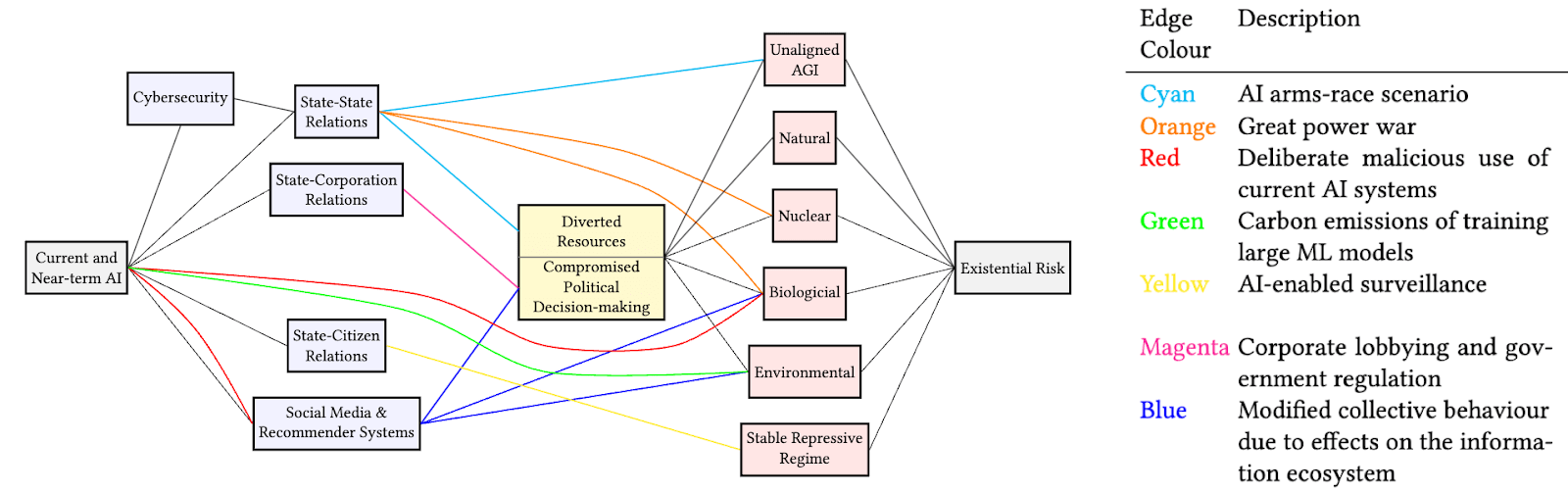

- Current and near-term AI systems can contribute to existential risk not only through misaligned artificial general intelligence, but also by amplifying other sources of existential risk (e.g., AI may help design dangerous pathogens).

- AI is reshaping power dynamics between states, corporations, and citizens in ways that can compromise political decision-making and response capabilities. These shifts include:

  - An AI arms race between states could lead to nuclear conflict and divert resources away from mitigating existential risks.

  - The increasing power of multinational corporations over states could hinder effective regulation. AI technology is already outpacing ethical and legal frameworks.

  - Increased state surveillance through AI raises the possibility of repressive regimes.

- AI is affecting information transfer through social media and recommendation systems, potentially spreading false narratives, deepening polarization, eroding trust in institutions, and impeding effective collective action.

- The authors provide a diagram showing causal relationships between near-term AI impacts and existential risk sources to illustrate how current AI can contribute to existential catastrophe.

In summary, current and near-term AI poses risks not only through potential future AGI, but also by significantly impacting society, politics, and information ecosystems in ways that amplify other existential risks.

Acknowledgment: The idea for this summary and its commission came from Michael Chen, whom I would like to thank for his support.
