Epistemic status: Some fundamental errors but worth leaving up

I think the main error in this piece is that it is a hammer looking for a nail rather than the reverse. Austin makes this point well in his comment below. Rather than asking "how do we institute Futarchy?", I should have asked "how do we solve problems people already have?"

Summary

- I would like there to be a regrantor that uses prediction markets, and I want your thoughts on how that process should work

- Manifund, a new granting org, is connected to the charity prediction market Manifold, so it is a uniquely good candidate

- Create a prediction market for each proposed project, scoring it against some metric both if it happens and if it doesn't

- Rank all projects by how much more value they offer if they happen than if they don't

- Fund projects in that order until the Futarchy regrantor has used its allocated budget for that period

- A discussion of different metrics

- A discussion of how this might be different from current grantmaking orgs

- Please correct any errors (even grammatical ones; I think I'd publish here more if I didn't have to send posts round to 10 people to check the grammar first)

What is Futarchy and why is this a good opportunity?

Futarchy is a decision-making system in which prediction markets are used to decide which actions to take. You agree on some metric you care about, and then predict how each decision will affect that metric. Futarchy generally implies a deterministic system, though this proposal is more about ironing the kinks out of the system, so it could be advisory at first[1].

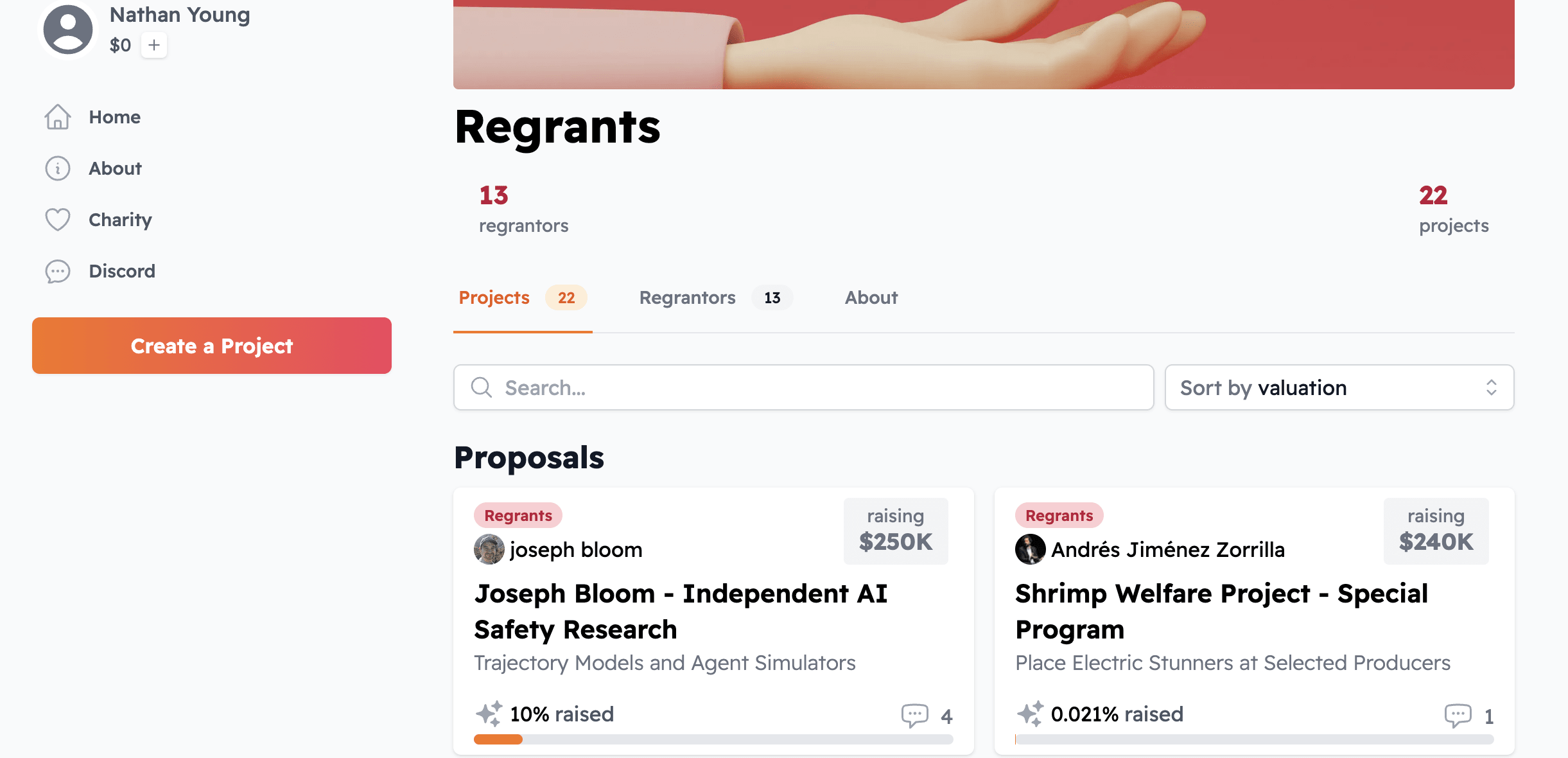

We can imagine running decision markets for a Manifund regrantor. Manifund is a new regranting org: individuals are given regranting budgets and then allocate them publicly. I guess that if they use their budgets well, they can argue to be given more.

Manifund is a good test bed for Futarchy for three reasons:

- They already run an impact market system, so one could try running some kind of futures market on the impact certificates. I don't know how legal or technically feasible this is.

- It's associated with a play-money prediction market, Manifold: Austin Chen is a cofounder of both Manifold (the prediction market) and Manifund (the regranting org), hence the shared part of the name. I think it'd be much easier to implement features here than at any comparable org.

- The Manifund team ship things very quickly and are into mechanism design in general, so it just seems more likely to work here than in other places.

How might it work?

It occurred to me that this would be a cool thing to exist, so this is my rough attempt at designing it. I don't pretend I'm going to do a good job here. I'll lay out how I would do it; please correct me.

For each grant proposal, you want a prediction market with two sets of options (either 4 discrete options or 2 continuous options) in a single market[2]:

- The metric (perhaps binary) if the grant is fulfilled

- The metric (perhaps binary) if the grant is not fulfilled

The regrantor needs to prioritise grant opportunities that clear some funding bar. The key question is "what's the value add?" Here, the answer is the difference between the metric if the grant happens and the metric if it doesn't. Perhaps divide this by the size of the grant.
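The ranking step above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not something Manifund or Manifold actually runs: the metric is binary ("the project succeeds"), each proposal has one four-outcome market whose prices are read as joint probabilities (funded & succeeds, funded & fails, not funded & succeeds, not funded & fails), and `Proposal`, `allocate` and `funding_bar` are hypothetical names. The conditional probabilities for each branch are recovered from the joint prices, which is one reason a single four-outcome market is convenient.

```python
from dataclasses import dataclass
import math


@dataclass
class Proposal:
    """A grant proposal plus prices from its hypothetical four-outcome market.

    Joint outcomes: funded & succeeds, funded & fails,
    not funded & succeeds, not funded & fails (binary metric assumed).
    """
    name: str
    cost: float
    p_funded_success: float
    p_funded_fail: float
    p_unfunded_success: float
    p_unfunded_fail: float

    def __post_init__(self) -> None:
        total = (self.p_funded_success + self.p_funded_fail
                 + self.p_unfunded_success + self.p_unfunded_fail)
        if not math.isclose(total, 1.0, abs_tol=1e-9):
            raise ValueError(f"{self.name}: outcome prices sum to {total}, not 1")

    def conditional_success(self) -> tuple[float, float]:
        """P(success | funded) and P(success | not funded), from the joint prices."""
        p_funded = self.p_funded_success + self.p_funded_fail
        p_unfunded = self.p_unfunded_success + self.p_unfunded_fail
        if p_funded == 0 or p_unfunded == 0:
            raise ValueError(f"{self.name}: a branch has zero probability")
        return self.p_funded_success / p_funded, self.p_unfunded_success / p_unfunded

    def value_add_per_dollar(self) -> float:
        """Difference in the metric between the two branches, divided by grant size."""
        if_funded, if_not = self.conditional_success()
        return (if_funded - if_not) / self.cost


def allocate(proposals: list[Proposal], budget: float,
             funding_bar: float = 0.0) -> tuple[list[str], float]:
    """Greedily fund the highest value-add-per-dollar proposals within the budget."""
    ranked = sorted(proposals, key=lambda p: p.value_add_per_dollar(), reverse=True)
    funded: list[str] = []
    remaining = budget
    for proposal in ranked:
        if proposal.value_add_per_dollar() <= funding_bar:
            break  # the list is sorted, so everything after this is also below the bar
        if proposal.cost <= remaining:
            funded.append(proposal.name)
            remaining -= proposal.cost
    return funded, remaining


if __name__ == "__main__":
    proposals = [
        Proposal("A", 10_000, 0.30, 0.10, 0.10, 0.50),
        Proposal("B", 50_000, 0.20, 0.20, 0.15, 0.45),
        Proposal("C", 5_000, 0.05, 0.05, 0.20, 0.70),
    ]
    print(allocate(proposals, budget=20_000))  # (['A', 'C'], 5000)
```

In this made-up example, A and C are funded; B is ranked last and does not fit in what is left of the budget. Skipping a proposal that doesn't fit and continuing down the list is just one simple choice; a knapsack-style optimiser would sometimes do better. Note also that these are conditional rather than causal estimates, which is exactly the causality issue discussed under "Issues with futarchy".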

Suggested metrics

This requires a clear metric, which is going to be a problem. Here are three suggested metrics:

- Impact markets. We could see whether Manifund wants to run a futures market (is this even legal? are they already doing it?), or we could run our own prediction markets (charity prediction markets, sure) on the future value of the grant. I don't really know what the time horizon should be. 5 years? 10?[3] I also don't quite understand how the non-funding side of this works. I guess you choose a funding bar and only fund things with a good enough return on investment.

  - Pros

    - It is a financial mechanism that already exists

    - They may already have this process

  - Cons

    - If the markets are on Manifold, there isn't a way for many investors to get their money back, so they may not invest properly

    - It may be illegal somehow

    - I have some vague foreboding that this won't actually work


- Community/expert assessment. The community, or a small group, scores grants 5-10 years later on how much value they created or destroyed, and the prediction markets then pay out based on who was correct. This is similar to an impact market, but doesn't require huge, liquid impact markets. My intuition, from the accuracy of prediction markets compared with Metaculus, is that if you gatekeep the voting well (say, to LessWrong/EA Forum users), this is as accurate as a liquid market.

  - Pros

    - Doesn't need lots of liquidity

  - Cons

    - Have to decide who votes and who doesn't

    - Not real money


- A vote. We could vote in 5-10 years on whether the grant should have been funded. This seems the easiest to implement, but I'm not sure it can account for second-order effects. If there were secret or complex costs or benefits, I'd expect a market or an expert assessment to figure that out; a straight vote might come down much more to vibes.

  - Pros

    - Easy to implement

  - Cons

    - Worse incentives


- Can you think of a better one?

Issues with futarchy

I reserve the right to edit these.

Issues I buy

- Issues of causality (h/t Lizka). Prediction markets are markets, so they allow for hedging (where your stated probability is skewed by other assets you hold) and for strange correlations: the worlds where an asset is more valuable may cause the decision rather than result from it.

  - In this case, an example might be a grant for Ajeya Cotra to work on global health. The worlds where she is willing to do this are ones where alignment has been solved and her talents are better used elsewhere. In those worlds we would consider it valuable work, but that doesn't mean it's a good idea in this world. Markets are capable of this sleight of hand, and if we don't notice, we can take the wrong signal from them.

- The additional complexity isn't worth it. I am a mechanism design guy. I love the idea that our world is run by a series of beautiful systems. But I am a pragmatist too. While such systems reduce friction (you can dispute a grant by buying some shares in a prediction market rather than having to talk to the grant funder, if you even know who they are), they increase complexity. In my experience, this tradeoff is often quite poor.

- There is some chance it will seem too weird. I am open to the idea that this process would seem weird, and so be harmful if it ever allocated a lot of money, especially in a surprising way.

- This is a hammer in search of a nail. While it's interesting to think about how Futarchy would work, it may not solve the real problems grantmakers have, and the effort might be better spent solving those. Austin (who co-runs Manifund) gives an example: "The problem that seems most important to solve: 'finding projects that turn out to be orders of magnitude more successful/impactful than the rest'." To the extent that this doesn't solve the top problems, it's not the best idea.

- People are better at predicting 5 years ahead than at betting 5 years ahead. Lizka makes this point and I am unsure about it. Getting people to bet 5-10 years ahead seems like a stretch, but equally, that's what OpenPhil does. That said, perhaps the question is one of incentives. Grantmakers are well incentivised to think long term. Bettors, especially those betting in fiat (as opposed to a currency that appreciates over time, or betting on impact), are explicitly not incentivised to take longer-term bets, because of discount rates.
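To put rough numbers on the discount-rate point, here is a minimal Python sketch. It assumes (hypothetically) a risk-neutral bettor whose stake is locked up until the market resolves, no fees, and an outside investment growing at a fixed annual rate; `no_trade_band` is an illustrative name, not a Manifold feature. It computes the range of prices that such a bettor would have no financial reason to correct.

```python
def no_trade_band(true_p: float, years: float, annual_rate: float) -> tuple[float, float]:
    """Price range a patient, risk-neutral bettor would leave uncorrected.

    Illustrative assumptions: stakes are locked until resolution, no fees,
    and the bettor's alternative investment grows at `annual_rate` per year.
    """
    growth = (1 + annual_rate) ** years  # what $1 in the outside option becomes by resolution
    # Buying YES at price q pays 1 with probability true_p, so it beats the
    # outside option only if true_p >= q * growth, i.e. q <= true_p / growth.
    low = true_p / growth
    # Buying NO at price (1 - q) beats the outside option only if
    # (1 - true_p) >= (1 - q) * growth, i.e. q >= 1 - (1 - true_p) / growth.
    high = 1 - (1 - true_p) / growth
    return low, high


if __name__ == "__main__":
    for years in (0.25, 5, 10):
        low, high = no_trade_band(true_p=0.5, years=years, annual_rate=0.05)
        print(f"{years:>5} years: price could sit anywhere from {low:.2f} to {high:.2f}")
    # 0.25 years: 0.49 to 0.51; 5 years: 0.39 to 0.61; 10 years: 0.31 to 0.69
```

Under these assumptions, with a 5% outside option and a true probability of 50%, a 3-month market stays close to the truth, while a 5-year market could drift anywhere between roughly 39% and 61% without anyone being paid to fix it. Anything that lowers the effective cost of locking up capital (a smaller `annual_rate`) narrows the band.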

The issues I don't buy

- People will manipulate the markets. I don't buy that someone can jump on the markets and mess with them. Manifold markets (or, better, real-money markets) have strong incentives against this. I am unaware of any case where this has happened in a live prediction market in the way people worry it would here.

Comparison to current grantmaking orgs

To me, it seems more like GiveDirectly than GiveWell: something less effective (because it gives up the benefits of both secrecy and an org that manages decisions) but that can be scaled much more. I'm doubtful that a Futarchy regrantor would scale soon, but equally, I sense that one day all regranting will happen like this.

It will be attractive to a certain kind of donor. I sense that some donors (often in crypto) will find the idea very attractive, and since large crypto donors are comparatively prevalent (and will be even more so in a bull run), that seems like a reason to try it.

It would be good to test Futarchy. I would like to understand why we haven't seen more of it. What are the kinks that need ironing out? I think there is value in testing it in a real-world situation, and there may be lessons transferable to other orgs.

This was written quickly; let me know what you think

I dictated this and spent about an hour editing it. Please correct errors or make suggestions. I don't currently intend to put this into place, so if you want to, do! Please let me know.

[1] It doesn't seem hard to remove the downside by saying there will be a committee that will veto markets if they seem obviously manipulated.

[2] People's intuition seems to be that there should be 2 separate markets, "If A" and "If not A". This seems wrong to me. If you make a profit on such a market, you need a way to take the money out of it. I can go into the details in the comments, but this requires a "Will A happen?" market, so you might as well have all 4 options in a single market.

[3] A criticism here is "5 years? That's ages," but normal grantmaking seems to operate on this timescale too.

Thanks for the writeup, Nathan; I am indeed excited about the possibility of making better grants through forecasting/futarchic mechanisms. So I'll start from the other direction: instead of reaching for futarchy as a hammer, start with the question of what major problems grantmakers currently face.

The problem that seems most important to solve: "finding projects that turn out to be orders of magnitude more successful/impactful than the rest". Paul Graham describes funding seed-stage startups as "farming black swans", which rings true to me. To look at two example rounds from ACX Grants, which I've been involved in:

So right now, I'm most interested in mechanisms that help us find such founders/projects. Just daydreaming here, is there any kind of prediction mechanism that can turn out a report as informative as the ACX Grants 1-year project update? The information value in most prediction markets is "% chance given by the market", which misses out on the valuable qualitative sketches given by a retroactive writeup.

Other promising things:

Do you think there was a sense that this might be the case?

I guess you could encourage anyone to make markets, not just the funders. Then have some way to select the 10 most interesting markets. If you wanted you could try and run an LLM to generate text for some kind of premortem. Seems a bit galaxy brained though.

Some notes (written quickly!):

Relatedly, 2 years ago I wrote two posts about futarchy — I haven't read them in forever, they probably have mistakes, and my opinions have probably shifted since then, but linking them here anyway just in case:

Thanks for the thoughts (and your posts on Futarchy years ago, I found them to be a helpful review of the literature!)

I am too, though perhaps for different reasons. Long-term forecasting has slow feedback loops, and fast feedback loops are important for designing good mechanisms. Getting futarchy to be useful probably involves a lot of trial-and-error, which is hard when it takes you 5 years to assess "was this thing any good?"

Fwiw I think this is an issue with grantmaking too.

I thought "Causality might diverge from conditionality" is a big problem for "classic futarchy" too? E.g. if we're betting on welfare conditional on policy A and on welfare conditional on policy B, the market price for welfare conditional on policy A = expected welfare conditional on [policy A has the highest expected welfare at market close and thus is implemented] (or something) > expected welfare counterfactually conditional on policy A. I guess the ">" approaches "=" near market closing time, under certain reasonable assumptions? Huh.

I feel slightly attacked, which I don't think was your intention. I probably would have written a blunter response to someone else's post, so I guess I'm a hypocrite. I wonder what the best forum responder in the world would look like.

On your first point, I think you are right. Causation and prediction markets are tricky. I guess I sort of think that there are bigger bottlenecks to figure out first. But maybe that's lax of me.

On your second point, "I'm a bit suspicious of metrics that depend on a vote 5 years from now": how do you reconcile this with OpenPhil's grantmaking, which probably often takes 5+ years to resolve? Do you criticise them for this? If you don't, it feels like not an apples-to-apples comparison.

"me to believe that this is not a bad idea": I mean, I think it presumably wouldn't be a bad idea if it were a $50k grantmaker with a stopgap in case it seemed to be funding obviously crazy or corrupt stuff. The bar of Lizka thinking it's actively good feels too high. If I managed that in a quick blog post, I think we'd be really impressed.

"I'm also honestly not sure why you seem so skeptical that manipulation wouldn't happen": has there ever been any prediction market manipulation? There seem to be quite a lot of markets running all the time. Other than Keynesian beauty contests and markets where you can literally change the criteria[1], has it ever happened? @austin, do you know of any? I can't recall any. And if we think of examples of actual market manipulation, they would probably fool grantmakers too, right? And Manifold's betting is all public, so you can't pump the market. I guess my question is: "what's your story for how this has ever happened in a way it might happen here?"

I guess, maybe pick one of these as your biggest criticism and I might spend 30 minutes figuring out my response to it?

Also, I wish the Futarchy entry on the Forum wiki were your post on futarchy. Then we could argue some of this there, in what I'd suggest is the "best" place for it. I have edited the LessWrong tag in that rough direction.

I seem to recall someone bought a load of cinema tickets to rig a market, but in our case the way to do that would be to change the final metric, which would likely be really good.

Manifold Markets hasn't solved long-term market incentives yet:

https://manifold.markets/Nu%C3%B1oSempere/this-question-will-resolve-positive-4a418ad86de3#jdUj58EdDXBbF8ZNyXaT

There are even accounts dedicated to messing with Manifold markets:

https://manifold.markets/ButtocksCocktoasten?tab=questions

Manifold doubled mana loan amounts this month to try to mitigate the long-term market incentive issues on the platform.