What is this post?

This post is a companion piece to recent posts on evidential cooperation in large worlds (ECL). We’ve noticed that in conversations about ECL, the same few initial confusions and objections tend to get brought up: we hope that this post will be useful as the place that lists and discusses these common objections. We also invite the reader to advance additional questions or objections of their own.

This FAQ does not need to be read in order. The reader is encouraged to look through the section headings and jump to those they find most interesting.

ECL seems very weird. Are you sure you haven’t, like, taken a wrong turn somewhere?

We don’t think so.

ECL, at its core, takes two reasonable ideas that by themselves are considered quite plausible by many—albeit not completely uncontroversial—and notices that when you combine them, you get something quite interesting and novel. Specifically, ECL combines “large world” with “noncausal decision theory.” Many people believe the universe/multiverse is large, but that it might as well be small because we can only causally influence, or be influenced by, a small, finite part of it. Meanwhile, many people think you should cooperate in a near twin prisoners’ dilemma, but that this is mostly a philosophical issue because near twin prisoners’ dilemmas rarely, if ever, happen in real life. Putting the two ideas together: once you consider the noncausal effects of your actions, the world being large is potentially a very big deal.[1]

Do I need to buy evidential decision theory for this to work?

Several ways of thinking take acausal influence into account, each explaining it differently. These include evidential decision theory and functional decision theory, as mentioned in our “ECL explainer” post. Updatelessness and superrationality are two other concepts that might get you all or part of the way to this kind of acausal cooperation.

- Evidential decision theory says that what matters is whether your choice gives you evidence about what the other agent will do.

  - For example, if you are interacting with a near-copy, then the similarity between the two of you is evidence that the two of you make the same choice.

- Functional decision theory says that what matters is whether there is a logical connection between you and the other agent’s choices.

  - For example, if you are interacting with a copy, then the similarity between the two of you is reason to believe there is a strong logical connection.

  - That said, functional decision theory does not have a clear formalization, so it is not clear if and how this logical connection generalizes to dealing with merely near-copies (as opposed to full copies). Our best guess is that proponents of functional decision theory at least want the theory to recommend cooperating in the near twin prisoner’s dilemma.[2]

- Updatelessness strengthens the case for cooperation. This is because updatelessness arguably increases the game-theoretic symmetry of many kinds of interactions, which is helpful to get agents employing some types of decision procedures (including evidential decision theory) to cooperate.[3]

- Superrationality says that two rational thinkers considering the same problem will arrive at the same correct answer. So, what matters is common rationality.

  - In game theory situations like the prisoner’s dilemma, knowing that the two answers, or choices, will be the same might change the answer itself (e.g., cooperate-cooperate rather than defect-defect).

  - ECL was in fact originally named “multiverse-wide superrationality”.

We don’t take a stance in our “ECL explainer” piece on which of these decision theories, concepts, or others we do not list, is better overall. What matters is that they all (arguably) say that there is reason to cooperate in the near-twin prisoner’s dilemma and generalize from there to similar cases. Arguably, you might even be able to recover some behavior similar to ECL from causal decision theory, at least for ECL with different Everett branches (see Oesterheld, 2017, sec. 6.8).
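To make the near-twin prisoner’s dilemma concrete, here is a minimal sketch of how an evidential and a causal calculation can come apart. The payoff numbers and the `match_prob` parameter (the probability that the twin ends up choosing the same action as you) are our own illustrative assumptions, not values from the ECL literature.

```python
# Hypothetical prisoner's dilemma payoffs for "me", keyed by (my action, twin's action).
# The numbers are illustrative assumptions; only their ordering matters.
PAYOFF = {
    ("C", "C"): 3,  # both cooperate
    ("C", "D"): 0,  # I cooperate, twin defects
    ("D", "C"): 5,  # I defect, twin cooperates
    ("D", "D"): 1,  # both defect
}


def edt_value(my_action: str, match_prob: float) -> float:
    """Expected payoff conditional on my action, when the twin
    picks the same action as me with probability match_prob."""
    other_action = "D" if my_action == "C" else "C"
    return (match_prob * PAYOFF[(my_action, my_action)]
            + (1 - match_prob) * PAYOFF[(my_action, other_action)])


def edt_choice(match_prob: float) -> str:
    """Action with the higher conditional expected payoff."""
    return max(("C", "D"), key=lambda a: edt_value(a, match_prob))


def cdt_choice(p_twin_cooperates: float) -> str:
    """Action with the higher expected payoff when the twin's
    action is treated as fixed, independent of my own choice."""
    def value(action: str) -> float:
        return (p_twin_cooperates * PAYOFF[(action, "C")]
                + (1 - p_twin_cooperates) * PAYOFF[(action, "D")])
    return max(("C", "D"), key=value)


if __name__ == "__main__":
    for q in (0.5, 0.9):
        print(f"match_prob={q}: EDT picks {edt_choice(q)}, CDT picks {cdt_choice(q)}")
```

With these particular payoffs, the evidential calculation favors cooperation once the match probability exceeds 5/7, while the causal calculation favors defection whatever the twin is expected to do.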

Okay, I see the intuitive appeal of cooperating in the near twin prisoners’ dilemma, but I am still skeptical of noncausal decision theories. Is there any theoretical reason to take noncausal decision theories seriously?

In brief: Causal decision theory (CDT) falls short in multiple thought experiments.[4] This seems like reason enough to at least take noncausal decision theories seriously. It is worth mentioning, however, that in the academic literature, causal decision theory has a lot of support, and some academics actually take one-boxing in Newcomb’s problem as a decisive point against evidential decision theory.[5]

Details: One objection people could have is that we should not trust our intuitions in the near twin prisoner’s dilemma where causal decision theory arguably falls short. Because this thought experiment is highly contrived and unrealistic, our intuitions might be poorly trained for judging it. In contrast, we have a lot of experience with causation that tells us that whether something causes another thing is extremely relevant for decision-making. In fact, causation seems fundamental to the world: It seems like there is a matter of fact about what causes which other thing, and it seems silly to ignore that when making decisions.

While the debate between causal and noncausal decision theories is not settled, there are many arguments that point against causal decision theory and towards alternatives. We will sketch some prominent arguments against causal decision theory, but mostly advise readers to consult the sources below. Overall, we think the considerations at play do not justify high confidence in causal decision theory. There are of course many arguments in favor of causal decision theory and against any noncausal decision theory, which we don’t focus on here and which we advise readers to seek out if they want to engage with decision theory in detail.

(Note: The remainder of this section was kindly written by Sylvester Kollin.)

Why ain'cha rich?

In Newcomb’s problem, the act of two-boxing has lower average return than one-boxing, even from the causalist’s perspective. And therefore, it seems like the causalist is committed to a foreseeably worse option, which is arguably irrational.

See Lewis (1981) for the canonical reference; Ahmed and Price (2012, §1) and Ahmed (2014, §7.3.1) for a detailed characterization of the argument; and Joyce (1999, §5.1), Bales (2016), Wells (2017) and Oesterheld (2022) for counterarguments.
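The “average return” claim can be illustrated with a short calculation. The sketch below assumes the payoffs commonly used when presenting Newcomb’s problem ($1,000,000 in the opaque box, $1,000 in the transparent one) and a hypothetical predictor accuracy; none of these numbers come from this post.

```python
# Commonly used Newcomb payoffs; treated here as assumptions for illustration.
OPAQUE_PRIZE = 1_000_000   # placed in the opaque box only if one-boxing was predicted
TRANSPARENT_PRIZE = 1_000  # always in the transparent box


def average_return_one_box(accuracy: float) -> float:
    """Average winnings of one-boxers when the predictor is right
    with probability `accuracy`."""
    return accuracy * OPAQUE_PRIZE


def average_return_two_box(accuracy: float) -> float:
    """Average winnings of two-boxers: they always get the transparent
    prize, plus the opaque prize when the predictor was wrong."""
    return (1 - accuracy) * OPAQUE_PRIZE + TRANSPARENT_PRIZE


if __name__ == "__main__":
    for accuracy in (0.5, 0.9, 0.99):
        print(accuracy,
              average_return_one_box(accuracy),
              average_return_two_box(accuracy))
```

Under these assumptions, one-boxers come out ahead on average whenever the predictor is even slightly better than chance (accuracy above 0.5005).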

CDT is exploitable.

Causal decision theory (CDT) relies on some notion of a “causal probability” when making decisions.[6] However, looking ahead, causal decision theorists think about their own future choices as they would about any other proposition, and this yields an inconsistency in diachronic or sequential cases. Oesterheld and Conitzer (2021) use this fact to set up a (diachronic) Dutch book against CDT.[7]

See Spencer (2021), Ahmed (MS) and this post by Abram Demski for additional perspectives and formulations of what is essentially the same argument; Joyce (MS) for a discussion of how convincing the Dutch book argument is; and Rothfus (2021) for a formulation of “plan-based CDT” which avoids some of these problems. Also note that an updateless, cohesive (Mecham, 2010) or resolute (McClennen, 1990; Gauthier, 1997) version of CDT is not exploitable in this way.

CDT offers counterintuitive advice.

While opinions are split regarding what is rational in Newcomb’s problem[8], there are some other decision problems in which CDT clearly recommends the incorrect option. Consider the following decision problem from Ahmed (2014, p. 120)[9]:

Betting on the past. In my pocket (says Bob) I have a slip of paper on which is written a proposition P. You must choose between two bets. Bet 1 is a bet on P at 10:1 for a stake of one dollar. Bet 2 is a bet on P at 1:10 for a stake of ten dollars. So your pay-offs are as in [the table below]. Before you choose whether to take Bet 1 or Bet 2 I should tell you what P is. It is the proposition that the past state of the world was such as to cause you now to take Bet 2.

| | P | ¬P |
|---|---|---|
| Take Bet 1 | 10 | -1 |
| Take Bet 2 | 1 | -10 |

Ahmed argues that any causal decision theory worthy of the name would recommend taking Bet 1, simply because taking Bet 1 causally dominates taking Bet 2.[10] But it is clearly irrational to take Bet 1: it seems obvious that if an agent decides to take Bet 2, then they should be very confident that P is true, and if they take Bet 1, then they should be very confident that P is false.

See Williamson and Sandgren (2021, forthcoming) for emendations of the standard theory to deal with this case, and Joyce (2016) for a critical discussion of whether this is a genuine decision problem.
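The two lines of reasoning in Betting on the past can be checked against the payoff table directly. In this sketch the payoffs are taken from the table above, while the 0.99 credence standing in for “very confident” is our own assumption.

```python
# Payoffs from the Betting on the past table.
PAYOFFS = {
    "Bet 1": {"P": 10, "not P": -1},
    "Bet 2": {"P": 1, "not P": -10},
}


def dominates(bet_a: str, bet_b: str) -> bool:
    """True if bet_a pays strictly more than bet_b in every state (P and not P)."""
    return all(PAYOFFS[bet_a][state] > PAYOFFS[bet_b][state]
               for state in PAYOFFS[bet_a])


def evidential_value(bet: str, credence_in_p: float) -> float:
    """Expected payoff of a bet given the credence in P conditional on taking it."""
    return (credence_in_p * PAYOFFS[bet]["P"]
            + (1 - credence_in_p) * PAYOFFS[bet]["not P"])


# Assumed conditional credences: taking Bet 2 is strong evidence for P,
# taking Bet 1 is strong evidence against it.
CREDENCE_P_GIVEN_BET = {"Bet 1": 0.01, "Bet 2": 0.99}

if __name__ == "__main__":
    print("Bet 1 dominates Bet 2:", dominates("Bet 1", "Bet 2"))
    for bet, credence in CREDENCE_P_GIVEN_BET.items():
        print(bet, evidential_value(bet, credence))
```

Bet 1 pays more in each column, which is the dominance reasoning, yet with conditional credences like these Bet 1 has a negative expected payoff and Bet 2 a positive one.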

Next, consider the following problem from Egan (2007):

The psychopath button. Paul is debating whether to press the "kill all psychopaths" button. It would, he thinks, be much better to live in a world with no psychopaths. Unfortunately, Paul is quite confident that only a psychopath would press such a button. Paul very strongly prefers living in a world with psychopaths to dying. Should Paul press the button?

CDT arguably recommends pressing the button, since this will have no causal effect on whether Paul is a psychopath or not. But Paul will instantly regret this: upon pressing the button, he is now confident that he is a psychopath. Indeed, it seems irrational to press the button.

But note that it is much more contested what CDT actually recommends here than in the previous problem. See, e.g., Arntzenius (2008) and Joyce (2012).[11] See also Williamson (2019) for a defense of CDT’s recommendation.

EDT, medical Newcomb problems and the tickle defense.

One might grant that the recommendations of CDT are counterintuitive at times, but hold that noncausal decision theories like evidential decision theory (EDT) also face their fair share of problems. Chief among these worries, at least traditionally, has been so-called “medical Newcomb’s problems”.[12] Consider the following problem from Egan (2007):

The smoking lesion. Susan is debating whether or not to smoke. She believes that smoking is strongly correlated with lung cancer, but only because there is a common cause—a condition that tends to cause both smoking and cancer. Once we fix the presence or absence of this condition, there is no additional correlation between smoking and cancer. Susan prefers smoking without cancer to not smoking without cancer, and she prefers smoking with cancer to not smoking with cancer. Should Susan smoke?

Most people agree that Susan should smoke (and this is indeed what CDT recommends). However, prima facie, it seems like EDT would recommend not smoking, since smoking strongly correlates with having cancer, and having cancer and smoking is dispreferred to not having cancer and not smoking.

This has been forcefully rebutted by, e.g., Eells (1982, §6-8) and Ahmed (2014, §4). In short, the choice to smoke is plausibly preceded by a desire (a “tickle”) to do so, and at that point, smoking does not provide additional evidence of cancer. (This is known as “the tickle defense.”) See Oesterheld (2022) for a review and summary of these arguments.

Ahmed (2014, p. 88) writes (in relation to his discussion of the tickle defense): “The most important point to take forward from this discussion is the distinction between statistical correlations (relative frequencies) and subjective evidential relations in the form of conditional credences. It is the latter, not the former, that drive Evidential Decision Theory.”

Okay, so maybe I am not 100% confident in causal decision theory, but I am also not fully convinced by noncausal decision theory. What now?

Even if one isn’t convinced by the arguments against CDT, or by the arguments for EDT, one should plausibly have some decision-theoretic uncertainty (i.e., not be completely certain in CDT). MacAskill et al. (2021) then make the following argument: (i) we should deal with normative uncertainty by ‘maximizing expected choiceworthiness’; (ii) the stakes are much higher on EDT, due to the existence of correlated decision-makers; and so, (iii) even with a small credence in EDT, one should in practice follow the recommendations of EDT. MacAskill et al. call this the evidentialist’s wager. (See the paper for more details.) This argument could be extended to other decision theories with particularly high stakes. For commentary on this argument taken to its extreme, see the following section.
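The structure of the wager can be sketched as a small expected-choiceworthiness calculation. Everything numerical here is a made-up assumption for illustration: the credences, the payoff of 1 unit per agent, and the idea that choiceworthiness is directly comparable across the two theories (which the argument itself has to assume). See MacAskill et al. (2021) for the actual argument.

```python
def expected_choiceworthiness(credences: dict, choiceworthiness: dict, action: str) -> float:
    """Credence-weighted average of how choiceworthy each theory rates the action."""
    return sum(credences[theory] * choiceworthiness[theory][action]
               for theory in credences)


def wager(credence_edt: float, n_correlated: int) -> str:
    """Return the action maximizing expected choiceworthiness in a toy setup:
    defecting gains 1 unit on either theory, while cooperating gains nothing
    according to CDT but 1 unit per correlated agent according to EDT."""
    credences = {"CDT": 1 - credence_edt, "EDT": credence_edt}
    choiceworthiness = {
        "CDT": {"defect": 1.0, "cooperate": 0.0},
        "EDT": {"defect": 1.0, "cooperate": float(n_correlated)},
    }
    return max(("defect", "cooperate"),
               key=lambda a: expected_choiceworthiness(credences, choiceworthiness, a))


if __name__ == "__main__":
    print(wager(credence_edt=0.05, n_correlated=1_000))  # many correlated agents
    print(wager(credence_edt=0.05, n_correlated=10))     # few correlated agents
```

In this toy setup, a 5% credence in EDT is enough to favor cooperation when the number of correlated agents is large, but not when it is small, which is why step (ii) of the argument carries so much weight.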

Is this a Pascal’s mugging?

In short: We don’t think so. As we explain in the above subsection, neither of ECL’s two core ingredients, “the world is large” and “noncausal effects can be action-guiding,” seems all that unlikely to be true.

In more words: There are a couple of cases in which one might think that ECL is a Pascal’s mugging:

1) One has very high credence in causal decision theory, and only takes ECL seriously because of the evidentialist’s wager. That is, one believes that one can only influence one’s causal environment, but one acts as though one has acausal influence because there’s a chance that acausal influence is real, and in those worlds, one has much more total influence.

We think Pascal’s mugging-type objections to lines of reasoning in this reference class are valid, and we share a discomfort with wagering on philosophical worldviews that we think are absurd. However, we do not think very high credence in causal decision theory is justified, which is required for this particular objection to get off the ground.

2) One thinks one’s acausal influence on other agents is small.

However, even if one’s acausal influence over individual agents is small, it would still be true that one’s total acausal influence is very large, without relying on wagers. A first reading of what it means to have a small acausal influence over an agent is: We definitely influence the agent’s action, but only by a small amount. Under this reading, influences still add up to a large effect over many agents.

A second reading is: We have a small probability of acausally influencing a given agent. So, if they only have two choices, like in the prisoner’s dilemma, then we only have a small chance of “changing” their decision. Under this reading, as long as our acausal influences over different agents are somewhat independent, then, given a very large number of agents, we should still have very high confidence that we are influencing a large number of agents. Oesterheld (2017a, p. 20, fn. 14) explains this second reading as follows: “Imagine drawing balls out of a box containing 1,000,000 balls. You are told that the probability of drawing a blue ball is only 1/1,000 and that the probabilities of different draws are independent. Given this information, you can tell with a high degree of certainty that there are quite a few blue balls in the box.”
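The second reading can be illustrated with a binomial calculation. As a rough sketch, we reuse the numbers from the quoted example (1,000,000 and 1/1,000) and treat each agent as independently influenced with that probability; the threshold of 900 is an arbitrary choice of ours.

```python
import math


def log_binom_pmf(k: int, n: int, p: float) -> float:
    """Natural log of the Binomial(n, p) probability of exactly k successes."""
    log_choose = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    return log_choose + k * math.log(p) + (n - k) * math.log1p(-p)


def prob_at_least(k: int, n: int, p: float) -> float:
    """Probability of at least k successes in n independent trials."""
    prob_fewer = sum(math.exp(log_binom_pmf(i, n, p)) for i in range(k))
    return 1.0 - prob_fewer


N_AGENTS = 1_000_000     # number of agents, mirroring the 1,000,000 balls
P_INFLUENCE = 1 / 1_000  # chance of influencing any one agent, mirroring 1/1,000

# Expected number of influenced agents under independence.
expected_influenced = N_AGENTS * P_INFLUENCE

# Base-10 log of the probability of influencing no one at all
# (the probability itself is too small to represent as a float).
log10_prob_none = N_AGENTS * math.log10(1 - P_INFLUENCE)

if __name__ == "__main__":
    print("expected number influenced:", expected_influenced)
    print("log10 P(influence no one):", log10_prob_none)
    print("P(influence at least 900):", prob_at_least(900, N_AGENTS, P_INFLUENCE))
```

Under the independence assumption, influencing no one is astronomically unlikely, and influencing at least several hundred agents is close to certain. If the influences were fully correlated instead, this calculation would not apply.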

We think belief in small acausal influence only leads to a Pascal’s mugging if you believe you have a low probability of influencing all agents and a high probability of influencing none of them (i.e., your acausal influences are fully correlated). (Apart from your acausal influence on exact copies, perhaps. But since they share your values, your influence on them doesn’t have any action-relevance anyway.) However, we don’t think that’s a likely view unless the belief is a consequence of being very confident in causal decision theory, which we’ve argued against in the previous section.

Should we be clueless about our acausal effects?

Complex cluelessness is a complicated topic that we aren’t experts on, so you should take what we say with a grain of salt. Nonetheless, here is our current view on the issue. In short: We think that cluelessness is a valid concern in general, but that it isn’t of much greater concern to ECL than it is to other cause areas and interventions.

Details: The cluelessness objection to ECL might look something like, “Okay, so if the world is large, then there will be lots of things going on in the world that we aren’t aware of,[13] and some of these things will be very weird to us. Perhaps we have acausal influence over some of these weird things that we don’t understand and this acausal influence has chaotic effects that could be any magnitude of good or bad. Then, it’s a mistake to not take this into account, because this could sign-flip the value of ECL.” While this is a valid concern, it remains regardless of whether you purposefully participate in ECL or choose to ignore ECL. If you believe the world is large and that acausal influence is (or might be) real, our actions have these unpredictable effects either way, whether we are purposefully focusing on them or only focusing on our predictable,[14] causal effects, e.g. by working on improving farm animal welfare.[15]

There is another version of the cluelessness objection to ECL that goes like this: “If the world is large and I have these acausal effects, then I am clueless. In the world where I am clueless, I am paralyzed and don’t know what to do. If the world is not large or I do not have any acausal effects, I am not clueless. I might as well wager on the scenario where I am not clueless and pretend the world is small and void of acausal effects.” This objection does indeed seem specific to ECL and to not necessarily apply to, say, alignment work. The objection comes down to a wager on not having acausal effects. We are personally not very comfortable with this wager and suspect that following the principle to always assume a world where one is not clueless would result in having to make many more uncomfortable wagers.

Does infinite ethics mess with ECL?

Infinite ethics seems even more complicated and confusing than acausal effects and complex cluelessness (see section above), and we haven’t spent a lot of time thinking about it. We will explain our current view on the issue, but you should take it with a grain of salt. Our immediate response here is to say that impossibility results (see Carlsmith, 2022, sec. V; Askell, 2018, sec. 5.1) around how to make choices in an infinite world are “everyone’s problem” and not just a problem for people who consider ECL (Carlsmith, 2022, sec. XV, although Sandberg & Manheim, 2021, argue otherwise).[16]

On the other hand, largeness—perhaps infinite largeness—is one of ECL’s core ingredients. Therefore, it makes sense to inspect how ECL interacts with infinite ethics issues at least a little more closely. Example: If I gain one dollar, then, in an infinite universe with infinite copies of me, my value system gains infinite dollars, according to standard cardinal arithmetic. But if I were to gain two dollars instead, then, in the same infinite universe with infinite copies, standard cardinal arithmetic says this is no better than before—my value system still gains infinite dollars. This is a bizarre conclusion, and it’s problematic for ECL because it leads to different actions (e.g., defect to gain $1 vs. cooperate to gain $10) being evaluated as equally good.

No account of infinite ethics is fully satisfactory,[17] but some of the (clusters of) accounts—arguably the leading ones—rectify the conclusion here and say I’m better off in the second situation. The first of these accounts is based on expansionism/measure theory,[18] the second is about discounting in a semi-principled way (e.g., UDASSA),[19] and the third is Bostrom (2011, sec. 2.4–2.6)’s “hyperreal” approach.

Overall, we agree with those who point out that infinite ethics messes with everything, but we do not think that infinite ethics especially messes with ECL.[20][21]

Is ECL the same thing as acausal trade?

Typically, no. “Acausal trade” usually refers to a different mechanism: “I do this thing for you if you do this other thing for me.” Discussions of acausal trade often involve the agents attempting to simulate each other. In contrast, ECL flows through direct correlation: “If I do this, I learn that you are more likely to also do this.” For more, see Christiano (2022)’s discussion of correlation versus reciprocity, as well as Oesterheld (2017, sec. 6.1).

Is ECL basically a multiverse-wide moral trade?

If you define an agent’s morality as what that agent values, then, yes (kind of). The major caveat is that we can only do things for our ECL cooperation partners in our own light cone. If they value having ice cream themselves, we can’t help with that. The ECL mechanism—direct correlation—might also further constrain the space of possible trades.

In addition, we might not naturally describe many of the things other agents value (and that we want to cooperate with them on!) as moral values. For example, if there are agents out there who meet the conditions for trade and who really value there being lots of green triangles in our light cone because they think that’s funny, then ECL compels us to trade with them by making green triangles. One would be stretching things to call this cooperation a moral trade.

(Note: We are not moral philosophers by training, and it’s possible that some moral philosophers have a different definition of “moral trade” in mind. The EA Forum Wiki’s definition, which is the one we work with, reads: “Moral trade is the process where individuals or groups with different moral views agree to take actions or exchange resources in order to bring about outcomes which are better from the perspective of everyone involved.”)

Okay, I’ll grant that your toy setups work. But in the real world, we don’t have two neat actions with one labeled “cooperate.” We have lots of different available actions and compromise points. How is that supposed to work?

At a high level, we’ll note that it is common practice in science and philosophy to draw conclusions about the real world from toy setups. There are people who object to this practice, for instance, those who claim that game theory tells us nothing about the real world, but our overall stance on the issue is “all models are wrong, some are useful.” Our best guess is that the toy models we introduced in our ECL explainer are at least a little bit useful, and we expect more sophisticated models of acausal interactions to be more useful.

Nonetheless, the object-level question is still a good one. Uncertainty over what actions count as cooperations, and which cooperation point(s) to pick, is a real challenge to ECL. For instance, different agents’ understanding of the “default”/“do nothing” action might be quite different.[22] There is some work that tries to understand how agents might approach finding a cooperation point: see this paper by Johannes Treutlein and this post by Lukas Finnveden. On the other hand, we know of unpublished work that argues that the issue of different agents having different options from each other is severe enough to render ECL irrelevant.

We believe these issues only dampen the expected value of acting on ECL, by introducing uncertainty or constraining the set of agents we can cooperate with, instead of posing a fundamental problem to the framework. Do they dampen the expected value enough to make ECL irrelevant? Our current best guess is no. The most important reason for our view is that we are optimistic about the following:

- The following action is quite natural and hence salient to many different agents: commit to henceforth doing your best to benefit the aggregate values of the agents you do ECL with.

- Commitment of this type is possible.

- All agents are in a reasonably similar situation to each other when it comes to deciding whether to make this abstract commitment.

If this is true, then it does not matter that our concrete available actions are extremely different from each other. We can still cooperate by acausally influencing other agents to make this commitment by making this commitment ourselves.

You keep talking about “agents” for us to “do ECL” with… Who exactly are these agents?

The short answer is: Some subset of the naturally evolved civilizations and artificial superintelligences that exist throughout the multiverse. Which subset exactly, though, is complicated and not entirely clear. Fortunately, even if we can only do ECL with a narrow set of agents, it might still be the case that their values are roughly representative of a larger set of agents that it’s easier to draw inferences about (e.g., their values might be representative of all civilizations that arose out of evolutionary processes, plus their digital descendants).

In the simplest model of how ECL might work, all agents who do ECL cooperate with all other agents who do ECL. If this is the case in reality, then one just needs to figure out what kind(s) of agents tend to do ECL in order to cooperate with these agents and thus participate in the ECL trading commons.

However, it seems plausible that not everyone who does ECL has acausal influence over everyone else who does ECL:

- For example, maybe there are several different clusters of decision-making processes. Agents might have acausal influence over other agents within their own cluster, but not over agents from other clusters.

- Maybe some agents’ epistemic standpoints are too different. For example, if distant superintelligences already know everything about us and our actions, then perhaps from their perspective our actions are already fixed, and so there is nothing left for them to (acausally) influence. See Finnveden (2023) for more detail on when and why knowledge like this might destroy acausal influence.

  - Updatelessness (Oesterheld, 2016; Soto, 2024) might help with this issue. We expect that anyone who wants to work on ECL will likely have to grapple somewhat with updatelessness or, alternatively, when to stop learning more about other civilizations.

Consequently, agents who do ECL might only be able to cooperate with a subset of the other agents who do ECL. If this is the case, then one needs to figure out what kind(s) of agents tend to do ECL and are similar enough to oneself in terms of decision-making and information.

As a final complication, not everyone we have acausal influence over necessarily has acausal influence over us. If Alice acausally influences Bob, and Bob acausally influences Charlie, and Charlie acausally influences Alice, should Alice try to benefit Bob’s values or Charlie’s values? Working from one of the toy models in our ECL explainer, we currently believe she should try to benefit Charlie’s values. Finnveden (2023) makes a related argument.

In practice, we recommend deferring these details to the future. Humans now should focus on actions that are cooperative with a broad set of agents, i.e., actions that likely benefit the agents we can do ECL with without taking too much away from realizing our own values. At least, this is our best guess as to the present implications of ECL, given our present level of uncertainty. Examples of cooperative actions: making AGIs be cooperative (whether they be aligned or misaligned, and insofar as we build AGI at all); taking a more pluralistic stance towards morality than we otherwise would.

Is ECL action-relevant? Time-sensitive?

We believe ECL is action-relevant. We refer readers to Lukas Finnveden’s recent “Implications of evidential cooperation in large worlds”: rounding off a bunch of nuance, the key implication is that we should be trying hard to ensure that powerful, earth-originating AI systems—whether they be aligned or misaligned—engage in ECL.

Note that having our AIs engage in ECL is not simply punting ECL into the future. Working to increase the chance that future AIs engage in ECL is itself a salient action, in our view. Doing so puts us in the reference class of “civilizations that (genuinely) try to act cooperatively, in the ECL sense”: while not all civilizations in this reference class will succeed in implementing ECL, all will benefit from those that do succeed (to a first approximation, at least).[23]

The argument for time-sensitivity is that we might be able to increase the chance of future AI systems doing ECL in worlds where we cannot do so later, either because we build a misaligned AGI, or because the humans who end up in charge of an intent aligned AGI do not want their AGI to consider ECL, or because we manage to align an AI system’s values with ours but without guarding against grave epistemic errors from the distributional shift to thinking about ECL. This is most plausible on worldviews where views on decision theory, acausal influence, etc., are not convergent—i.e., where it is not the case that future people and/or AGI(s) will arrive at the same conclusions about ECL regardless of what we do this decade. Additionally, we might need to lock in some commitments early to reap the full benefits from ECL (e.g., commit to doing ECL sooner rather than later because our acausal influence might decrease over time—the argument here is similar to the argument for updatelessness).

That said, there are also potential downsides to working on making AI engage in ECL. In particular, this work might trade off with the probability of alignment success. It’s also contested for other reasons, though discussing the full case for and against is beyond the scope of this post.

How big a deal are the implications of ECL? How much does it change the impact of my actions?

This certainly deserves more investigation and its own detailed report. Unfortunately, we do not have anything like that yet. But here are a couple of things to note:

- Lukas Finnveden (2023) estimates that ECL increases the case for working on AI systems that benefit other value systems by 1.5x–10x.

  - Note that this estimate does not include an estimate of the total value of this kind of work. Additionally, there is no comparison here to alignment work.

- Paul Christiano says (paraphrased from two emails; all direct quotes but rearranged and in bullet point form for readability):

  - “I think that ECL or similar arguments may give us strong reasons to be generous to some other agents and value systems, especially other agents who do ECL (or something like that). I think this lines up with some more common-sensical criterion, like: it's better to be nice to people who would have been nice to you if your situations had been reversed. I think that these may be large considerations.

  - If I had to guess, I think that figuring out what ECL recommends and then building an appropriate kind of AI might be 20% as good as building an aligned AI in expectation (with a reasonable chance of being close to 0% or 100%, but 20% as a wild guess of an average). [Later addendum by Paul: 20% seems too low.]

  - There are other ways the ECL stuff can add value on top of alignment.

  - It's unclear if it's tractable without just being able to align our AI (and this would be harder to get buy-in from the broader world). But we haven't studied it much and there are some obvious approaches that might work, so maybe I'd wildly guess that it's 20% as tractable as alignment. [Later addendum by Paul: 20% seems too low.]

  - So, [if I had to make up a number right now,] I'd guess that "Figuring out what ECL recommends, how seriously we take that recommendation, and whether it's tractable to work on" is 5-10% of the value of alignment. [...] By "tractable to work on" I meant actually getting it implemented. [...T]o clarify what the ratio [5-10%] means: I was imagining that an angel comes down and tells us all the ECL-relevant answers, and comparing that to how good it would be if an angel comes down and tells us all the alignment-relevant answers.”

- Caspar Oesterheld, author of ECL’s seminal paper, estimates that his acting on ECL is roughly as valuable for his values as his not acting on ECL and multiplying his resources (and, by necessary extension, the resources of agents with his values that he has strong acausal influence over) by 1.5 to 5 times. (From conversation.)

  - In other words, Caspar thinks you should be indifferent between:

    - A universe in which “your reference class” (i.e., agents that find themselves in a similar decision situation as you, but don’t necessarily share your values) acts on ECL, and

    - A universe in which your reference class doesn't act on ECL, but agents in your reference class that share your values get their resources 1.5–5x’ed.

Acknowledgements

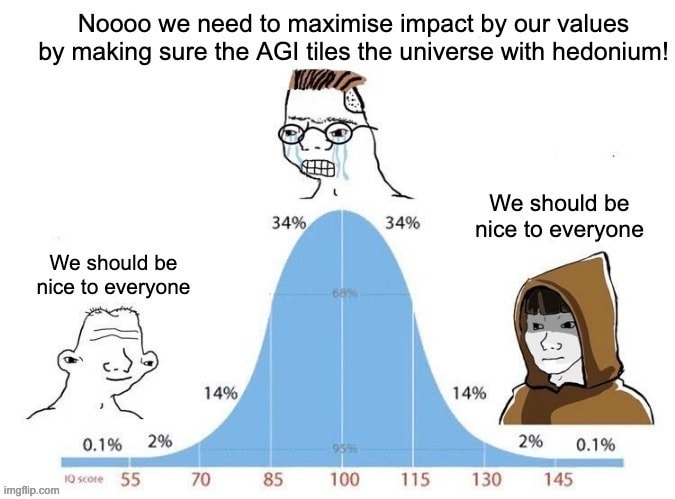

We are grateful to Caspar Oesterheld, Sylvester Kollin, Daniel Kokotajlo, Akash Wasil, Anthony DiGiovanni, Emery Cooper, and Tristan Cook for helpful feedback on an earlier version of this post. A special thanks goes to whoever created the great meme we use at the top.

- ^

H/T Daniel Kokotajlo for this framing.

- ^

While we believe proponents of functional decision theory (FDT) “want” FDT to entail pretty broad forms of cooperation (e.g., the sort of cooperation that drives ECL), Kollin (2022) points out that FDT might not actually entail such cooperation. The implication is that FDT proponents might need to put forward a new decision theory that does have the cooperation properties they find desirable.

- ^

Updatelessness is closely related to updateless decision theory. Updateless decision theory, however, can be a bit of a quagmire because there doesn’t appear to be consensus on what exactly it refers to. Regardless of what is meant by updateless decision theory, the property of updatelessness can be used to augment decision theories like evidential decision theory (see, for example, this comment by Christiano).

- ^

Relatedly, see Christiano (2018)’s exploration of why CDT has become the “default” decision theory despite being flawed.

- ^

And, presumably, against noncausal decision theories in general, though noncausal decision theories other than evidential are scarcely discussed in academia—you can find a list of some here. A significant minority of academic philosophers support evidential decision theory.

- ^

See Joyce (1990, §5) for a survey of different versions of CDT, as well as a general formulation.

- ^

Oesterheld and Conitzer begin with the following decision problem:

Adversarial Offer: Two boxes, B1 and B2, are on offer. A (risk-neutral) buyer may purchase one or none of the boxes but not both. Each of the two boxes costs $1. Yesterday, the seller put $3 in each box that she predicted the buyer not to acquire. Both the seller and the buyer believe the seller’s prediction to be accurate with probability 0.75.

CDT recommends buying one of the boxes. But then here is the book/pump:

Adversarial Offer with Opt-Out: It is Monday. The buyer is scheduled to face the Adversarial Offer on Tuesday. He also knows that the seller’s prediction was already made on Sunday. As a courtesy to her customer, the seller approaches the buyer on Monday. She offers to not offer the boxes on Tuesday if the buyer pays her $0.20.

Here, CDT recommends paying the seller $0.20, even though the buyer could instead pay nothing and simply decline to purchase either box on Tuesday.
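The arithmetic behind this pump can be sketched as follows. This is a minimal illustration under our reading of the setup, not code or notation from the paper: the function names are hypothetical, and we assume that because at least one box always contains $3, the buyer's credences that each box is full must sum to at least 1.

```python
# Illustrative arithmetic for the Adversarial Offer (a sketch under stated assumptions).
PRIZE = 3.0         # dollars placed in each box the seller predicts will not be bought
PRICE = 1.0         # cost of either box
ACCURACY = 0.75     # probability that the seller's prediction is correct
OPT_OUT_FEE = 0.20  # Monday fee for cancelling Tuesday's offer


def cdt_value_of_best_box(p_b1_full: float, p_b2_full: float) -> float:
    """Causal expected value of buying the box the buyer thinks is more likely full.

    CDT treats the box contents as fixed and independent of the current choice,
    so it only uses the buyer's prior credences about each box.
    """
    return max(p_b1_full, p_b2_full) * PRIZE - PRICE


def value_given_purchase() -> float:
    """Expected payoff of a purchase once the seller's accuracy is taken into account.

    If the buyer buys a box, the seller predicted exactly that with probability
    ACCURACY, in which case the purchased box is empty.
    """
    return (1 - ACCURACY) * PRIZE - PRICE


if __name__ == "__main__":
    # Worst case for CDT: credences of 0.5 each (they must sum to at least 1).
    print("CDT value of buying on Tuesday (worst case):", cdt_value_of_best_box(0.5, 0.5))
    print("Expected payoff given that a box is bought:", value_given_purchase())
    print("Payoff from paying the Monday fee:", -OPT_OUT_FEE)
    print("Payoff from never buying and never paying:", 0.0)
```

On these numbers, buying always looks positive to CDT on Tuesday, yet a buyer who anticipates buying expects a loss larger than the Monday fee. Paying the fee therefore looks attractive on Monday, even though declining both the fee and the boxes would cost nothing.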

- ^

See the 2020 PhilPapers Survey (Bourget & Chalmers), and also Oesterheld’s survey of earlier surveys (2017), for more on the precise numbers involved.

- ^

Hedden (2023) embraces Ahmed’s point and distinguishes between causal and counterfactual decision theory, arguing in favor of the latter, inter alia on the grounds that counterfactual decision theory does not recommend taking Bet 1 in Ahmed’s problem.

- ^

Although, see Ahmed (2014) for an even more damning problem, exploiting the instability of CDT, where the standard responses of Arntzenius and Joyce arguably do not work.

- ^

XOR Blackmail from Levinstein and Soares (2020, §2) is another decision problem which is seen as a counterargument to EDT. No tickle defense is available here. See Ahmed (2021, §3.4) for a discussion and defense of EDT.

- ^

Beyond unawareness, there are other things discussed under the heading of “deep uncertainty”. For example, I can be aware that my actions might have some effect on another agent’s behavior, yet I don’t feel comfortable at all placing sharp probabilities on the various possibilities.

- ^

Of course, many of our strictly causal effects lie beyond the limits of predictability (see chaos theory).

- ^

That is, unless you think our net acausal effects are in expectation zero if we focus on just causal effects, but unpredictable when we focus on acausal effects—this seems like an odd and incorrect position, though. Nevertheless, if one is worried about this, then this might be motivation to do foundational/philosophical work on ECL, or on cluelessness itself.

- ^

A concern we don’t discuss which also points towards infinite ethics being everyone’s problem goes something like: if the universe is infinite, then there is infinite happiness and infinite suffering, and so nothing anyone can do can affect the universe’s total happiness or suffering (Bostrom, 2011).

- ^

Carlsmith (2022): “Proposals for how to do this [compare different infinite values] tend to be some combination of: silent about tons of choices; in conflict with principles like ‘if you can help an infinity of people and harm no one, do it’; sensitive to arbitrary and/or intuitively irrelevant things; and otherwise unattractive/horrifying.”

- ^

This approach has been put forward in at least three different forms. One by Vallentyne and Kagan (1997) and a second by Arntzenius (2014)—these are referred to as “Catching up” and “Expected catching up”, respectively, in MacAskill et al. (2021, pp. 29-32)—and another by Bostrom (2011, sec. 2.3).

- ^

There is no discussion of UDASSA in the academic literature. Nonetheless, it appears to be endorsed by Evan Hubinger, Paul Christiano, and Holden Karnofsky, though Joe Carlsmith’s opinions on UDASSA are more mixed. Karnofsky says:

UDASSA, [the way it works] is you say, “I’m going to discount you by how long of a computer program I have to write to point to you.” And then you’re going to be like, “What the hell are you talking about? What computer program? In what language?” And I’m like, “Whatever language. Pick a language. It’ll work.” And you’re like, “But that’s so horrible. That’s so arbitrary. So if I picked Python versus I picked Ruby, then that’ll affect who I care about?” And I’m like, “Yeah it will; it’s all arbitrary. It’s all stupid, but at least you didn’t get screwed by the infinities.”

Anyway, I think if I were to take the closest thing to a beautiful, simple, utilitarian system that gets everything right, UDASSA would actually be my best guess. And it’s pretty unappealing, and most people who say they’re hardcore say they hate it. I think it’s the best contender. It’s better than actually adding everything up.

- ^

There are some accounts of infinite ethics that do especially mess with ECL, such as Bostrom (2011, sec. 3.2–3.4)’s “causal approach”.

- ^

See also Oesterheld (2017, sec. 6.10)’s brief discussion of ECL and infinite ethics.

- ^

Example: Say the other agent can pay $1, which results in us getting $10, or they can do nothing (i.e., keep $1 for themself). We can directly transfer any amount of dollars to them (so they get exactly the amount we pay), including nothing. We don’t know exactly how much we should transfer to maximize the chance of them paying $1, let alone to maximize expected dollars. Another complication is that the other agent’s understanding of “do nothing” might differ considerably from ours. For example, maybe they have three options: pay $1, with us getting $10 (cooperate); keep $1 and give us $1 at no cost to them (meaning both of us have $1 in the end); or keep $1 without affecting our payoff. We might think the default “do nothing, don’t cooperate” action is for them to keep $1 and give us $1 at no cost. They might think the default “do nothing, don’t cooperate” action is for them to keep $1. This means we might be considering the decision from different starting positions, which might lead us to have very different ideas of what actions are equivalent to each other.

- ^

The “genuinely” bit is needed because if we only superficially try to get our advanced AI systems to do ECL, then, according to evidential decision theory, this is evidence that other civilizations like us will also only superficially try to do ECL.

Separately, it’s not as black-and-white as there being a single reference class for civilizations that tried to do ECL. For instance, different civilizations might put different levels of effort into doing ECL. Nonetheless, we think the reference class notion is a useful one.

Note also that the reference class is dynamic rather than static. In joining the reference class, we make it larger not only because we ourselves joined it, but also because we acausally influence other civilizations into joining it.

I would add that acausal influence is not only not Pascalian, but that it can make other things that may seem locally Pascalian or at least quite unlikely to make a positive local difference — like lottery tickets, voting and maybe an individual's own x-risk reduction work — become reasonably likely to make a large difference across a multiverse, because of variants of the Law of Large Numbers or Central Limit Theorem. This can practically limit risk aversion. See Wilkinson, 2022 (EA Forum post).

This doesn't necessarily totally eliminate all risk aversion, because the outcomes of actions can also be substantially correlated across correlated agents for various reasons, e.g. correlated agents will tend to be biased in the same directions, the difficulty of AI alignment is correlated across the multiverse, the probability of consciousness and moral weights of similar moral patients will be correlated across the multiverse, etc. So, you could only apply the LLN or CLT after conditioning separately on the different possible values of such common factors to aggregate the conditional expected value across the multiverse, and then you recombine.
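A rough way to see why shared factors limit the averaging effect: the sketch below uses the standard formula for the variance of the mean of equally correlated outcomes. The equal-correlation setup, the function name, and the numbers are simplifying assumptions for illustration only, not a model proposed in this thread.

```python
def variance_of_average(n_agents: int, variance: float, correlation: float) -> float:
    """Variance of the mean outcome across n_agents equally correlated agents.

    Uses Var(mean) = variance * (1/n + correlation * (1 - 1/n)).
    With correlation 0 this shrinks toward 0 as n grows (the LLN case);
    with correlation > 0 it never falls below correlation * variance.
    """
    if n_agents < 1:
        raise ValueError("n_agents must be at least 1")
    return variance * (1.0 / n_agents + correlation * (1.0 - 1.0 / n_agents))


if __name__ == "__main__":
    for n in (1, 100, 10**6):
        independent = variance_of_average(n, variance=1.0, correlation=0.0)
        correlated = variance_of_average(n, variance=1.0, correlation=0.2)
        print(n, independent, correlated)
```

In this toy setup, adding more agents drives the independent case toward zero variance, while the correlated case levels off near the shared component. Only the variation left over after conditioning on the common factors averages out.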

On cluelessness: if you have complex cluelessness as deep uncertainty about your expected value conditional on the possibility of acausal influence, then it seems likely you should still have complex cluelessness as deep uncertainty all-things-considered, because deep uncertainty will be infectious, at least if its range is higher (or incomparable) than that assuming acausal influence is impossible.

For example, suppose

Then the unconditional expected effects are still roughly -5*10^49 to 10^50, assuming the obvious intertheoretic comparisons between causal and acausal decision theories from MacAskill et al., 2021, and so deeply uncertain. If you don't make intertheoretic comparisons, then you could still be deeply uncertain, but it could depend on how exactly you treat normative uncertainty.

If you instead use precise probabilities even with acausal influence and the obvious intertheoretic comparisons, then it would be epistemically suspicious if the expected value conditional on acausal influence were ~0 and didn't dominate the expected value without acausal influence. One little piece of evidence biasing you one way or another gets multiplied across the (possibly infinite) multiverse under acausal influence.[1]

Maybe the expected value is also infinite without acausal influence, but a reasonable approach to infinite aggregation would probably find acausal influence to dominate anyway.

I downvoted this post for the lack of epistemic humility. I don't mind people playing with thought experiments as a way to yield insight, but they're almost never a way of generating 'evidence'. Saying things like CDT 'falls short' because of them is far too strong. Under a certain set of assumptions, it arguably recommends an action that some people intuitively take issue with - that's not exactly a self-evident deficiency.

Personally, I can't see any reason to reject CDT based on the arguments here (or any I've seen elsewhere). They seem to rely on sleights such as amphiboly, vagueness around what we call causality, asking me to accept magic or self-effacing premises in some form, and an assumption of perfect selfishness/attachment to closed individualism, both of which I'd much sooner give up than accept a theory that basically defies known physics. For example:

In this scenario I don't actually see the intuition that supposedly pushes CDTs to take bet one. It's not clearly phrased, so maybe I'm not parsing it as intended, but it seems to me like the essence is supposed to be that the piece of paper says 'you will take bet 2.' If I take bet 2, it's true, if not, it's false. Since I'm financially invested in the proposition being true, I 'cause' it to be so. I don't see a case for taking bet 1, or any commitment to evidential weirdness from eschewing it.

This is doing some combination of amphiboly and asking us to accept self-effacing premises.

There are many lemmas to this scenario, depending on how we interpret it, and none gives me any concern for CDT:

Finally if we somehow still insist that pressing the button will necessarily make the world better and that CDT will require Paul to press it while other decision theories will not, this seems like a strike against all those other decision theories. Why would we want to promote algorithms that worsen the world?

If we're imagining that we get to determine how an AGI thinks, I would rather give it an easily comprehensible and somewhat altruistic motivation than a perfectly selfish motivation with greater complexity that's supposed to undo that selfishness.

Newcomb's problem is similar. I won't go through all the lemmas in detail, because there are many and some are extremely convoluted, but an approximation of my view is that it's incredibly underspecified how we know that Omega supposedly knows the outcome and that he's being honest with us about his intentions, and what we should do as a consequence. For example:

I realise there are many more scenarios, but these arguments feel liturgical to me. If the rejection of CDT can't be explained in a single well defined and non-spooky case of how it evidently fails by its own standards, I don't see any value in generating or 'clearing up confusions about' ever more scenarios, and strongly suspect those who try of motivated reasoning.

Executive summary: This post addresses common objections and questions about evidential cooperation in large worlds (ECL), which argues we should cooperate with distant civilizations that use similar reasoning.

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.