Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Subscribe here to receive future versions.

Both China and the US take action to regulate AI

Last week, regulators in both China and the US took aim at generative AI services. These actions show that China and the US are both concerned with AI safety. Hopefully, this is a sign they can eventually coordinate.

China’s new generative AI rules

On Thursday, China’s government released new rules governing generative AI. The rules, which are set to take effect on August 15th, regulate publicly available generative AI services. The providers of such services will be criminally liable for the content their services generate.

The rules specify illegal content as:

“inciting subversion of national sovereignty or the overturn of the socialist system, endangering national security and interests or harming the nation’s image, inciting separatism or undermining national unity and social stability, advocating terrorism or extremism, promoting ethnic hatred and ethnic discrimination, violence and obscenity, as well as fake and harmful information [...]”

Providers are also expected to respect intellectual property rights, protect personal information, and avoid using discriminatory training data.

The rules are some of the strictest regulations of generative AI to date. To release generative AI services publicly, Chinese companies will need to be able to tightly control what their models generate. According to Helen Toner, Director of Strategy and Foundational Research Grants at Georgetown’s Center for Security and Emerging Technology (CSET), the rules represent “a pretty significant set of responsibilities, and will make it hard for smaller companies without an existing compliance and censorship apparatus to offer services.”

Even large companies, however, will face regulatory challenges. Baidu is still waiting on government approval to release its chatbot, Ernie. Baidu isn’t alone: no LLM is currently publicly available in China.

China is balancing regulation with promoting technological innovation. Critically, the rules only apply to publicly available generative AI; they do not regulate research done by AI labs. This distinction is a change from an earlier draft of the rules, which also regulated the “research and development” of generative AI. The earlier draft would also have required companies to verify the accuracy of the data used to train their models, which, given the huge amount of data used to train LLMs, would have been practically impossible.

Facing a stalling post-pandemic recovery, China might have to rely on technological innovation to drive economic growth, and it aims to become a world leader in AI by 2030. Even as regulators fear the dangers of AI, they want to keep Chinese AI development competitive with the US. The final version of the rules on generative AI represents China’s attempt to balance those interests.

The FTC investigates OpenAI

The FTC is investigating OpenAI over possible breaches of consumer protection law. On Thursday, the same day China released its new rules, the Washington Post reported that the FTC had opened an investigation into OpenAI. The FTC is examining whether OpenAI has engaged in unfair or deceptive practices that have caused "reputational harm" to consumers. It is also investigating a security incident OpenAI disclosed in March, in which a bug allowed some users to see other users' payment-related information and chat history.

The FTC sent OpenAI a civil investigative demand requesting documents related to possible breaches of consumer protection law. If the FTC finds that OpenAI did breach consumer protection law, it can compel OpenAI to enter an agreement known as a consent decree, in which OpenAI would change its practices subject to FTC oversight. If OpenAI then fails to comply with the agreement, the FTC could levy fines.

The FTC’s investigation represents the most serious challenge to generative AI services in the US to date. The US has lagged behind the rest of the world (notably the EU, and now China) in developing AI regulations. In the meantime, federal agencies have had to rely on existing legislation to regulate AI. Despite facing questions over its authority, the FTC has remained committed to regulating tech companies. It has warned that there is no "AI exemption" to consumer protection laws.

The US and China are independently concerned with AI safety and are both moving quickly to regulate private actors. Hopefully, they can eventually coordinate to avoid an AI arms race.

Results from a tournament forecasting AI risk

A new report presents the views of both AI experts and ‘superforecasters’ on AI risk. In this story, we summarize the key results and discuss their implications.

The report pits AI experts against ‘superforecasters’. The Forecasting Research Institute (FRI) has released results from its 2022 Existential Risk Persuasion Tournament, in which both domain experts and ‘superforecasters’ predicted and debated the likelihoods of various catastrophic and existential risks.

‘Superforecasting’ is a term coined by Philip Tetlock, one of the authors of FRI’s report. Tetlock’s prior research has found that certain individuals, dubbed ‘superforecasters,’ consistently outperform experts at forecasting many near-term geopolitical events.

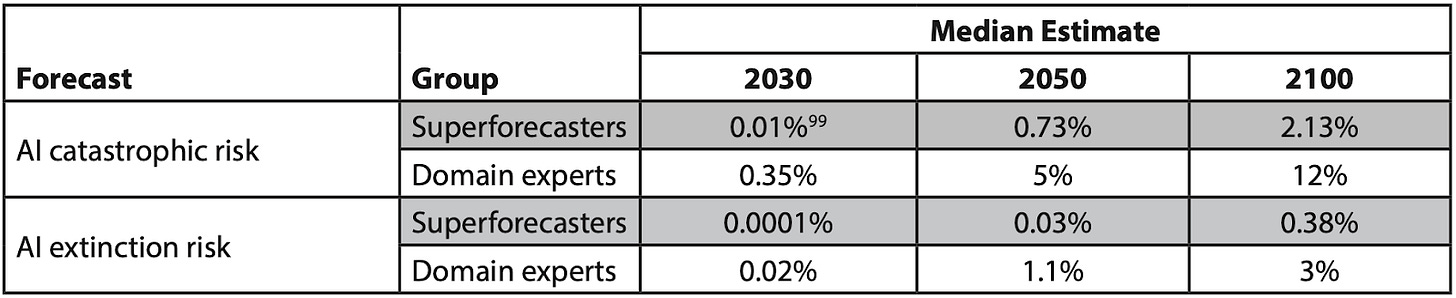

Superforecasters and AI experts disagreed about AI risk. One key result from the report is that superforecasters assigned lower probabilities to catastrophic and existential events than domain experts did, especially for risks from AI. The median superforecaster put the risk of catastrophe due to AI by 2100 at 2.31% and the risk of extinction at 0.38%. In contrast, the median AI domain expert put these risks at 12% and 3.9%, respectively.

In the tournament, the two groups were unable to persuade each other to change their probabilities. However, it might be argued that we should give more weight to the views of superforecasters. After all, they made more accurate predictions than experts in Tetlock’s previous research.

AI risk might not be “superforecastable.” Some of the methods that superforecasters use aren’t applicable to AI risk. One such method is the “outside view.” Rather than rely on their internal assessments, superforecasters identify a reference class of similar past events. For example, an expert might predict the outcome of a basketball game based on their in-depth knowledge of the players and coaches. In contrast, a superforecaster might predict the outcome by looking at the results of games between teams with similar records.

The “outside view” approach is difficult or impossible to apply to AI risk because there is no clear reference class. One candidate outside view is to keep extrapolating economic trends. Another is to zoom out and recognize that we are in a biologically significant period: earlier transitions were the emergence of single-celled life and then multicellular life, and now we are approaching the transition from biological to digital life. There is no backlog of AI catastrophes to learn from. In statistical terms, an AI catastrophe would be a “tail event”: a rare but enormously impactful event. Nassim Nicholas Taleb has described many tail events as “black swans.” It is almost impossible to estimate the probability of a black swan with confidence, but because of their importance, we must prepare for them nonetheless.

In sum, we do not find this report reassuring. Despite their disagreement about probabilities, AI experts and superforecasters agreed that, of all the risks considered, AI posed the greatest threat of human extinction.

Updates on xAI’s plan

On Friday, xAI held a Twitter Space in which Elon Musk gave details about the company’s plan.

xAI’s plan is to build “maximally curious” AGI. During the call, Musk argued that the safest way to build an AGI is to make it maximally curious, rather than to align it directly with human values. He believes that a curious AGI would leave humanity alone because humanity is interesting. At the same time, a curious AGI would help satisfy xAI’s goal of “understanding the universe.”

However, it is not at all clear that a superintelligent AGI curious about humans would help them flourish. By analogy, “many scientists are curious about fruit flies, but this rarely ends well for the fruit flies.”

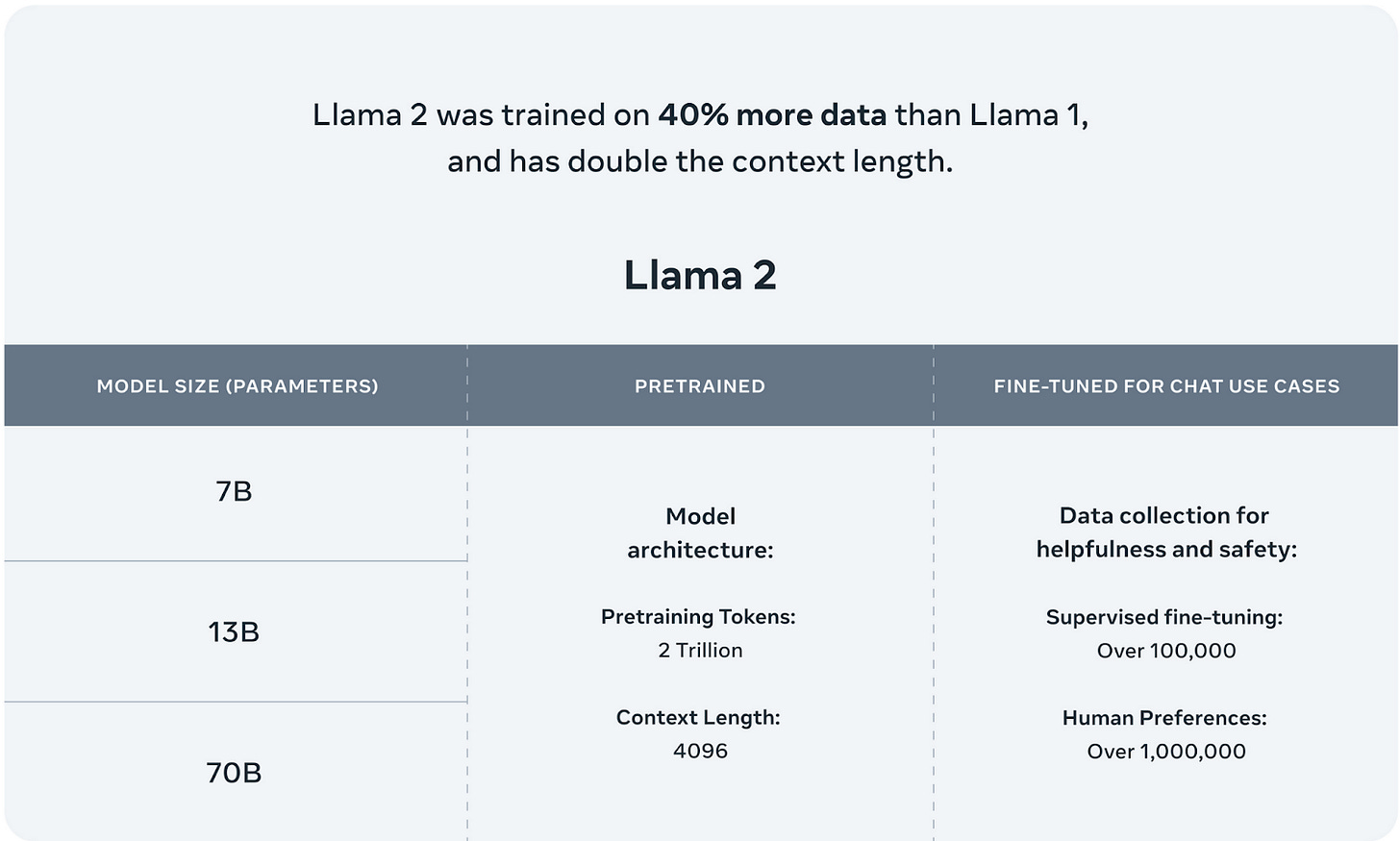

Meta releases Llama 2, open-source and commercially available

Meta has released Llama 2, its newest language model, for research and commercial use.

Llama 2 is now the most capable open-source language model. It was trained on 40% more data than Llama 1, and its benchmark results indicate that it outperforms other open-source language models.

Meta affirms its commitment to open-source models. Together with the model, Meta released a statement and a list of signatories in support of its approach to AI safety:

“We support an open innovation approach to AI. Responsible and open innovation gives us all a stake in the AI development process, bringing visibility, scrutiny and trust to these technologies. Opening today’s Llama models will let everyone benefit from this technology.”

In addition to being open-source, Llama 2 is available for commercial use. Businesses will be able to fine-tune and deploy the model for their own purposes.

Open-source models increase the probability of misuse. Llama 2 will likely prove a valuable tool for AI safety researchers, but it is just as likely to be used maliciously. After the model parameters for Llama 1 were leaked online, Senators Hawley and Blumenthal released a letter criticizing Meta for the leak. In the letter, they write:

“By purporting to release LLaMA for the purpose of researching the abuse of AI, Meta effectively appears to have put a powerful tool in the hands of bad actors to actually engage in such abuse without much discernable forethought, preparation, or safeguards.”

Language model misuse isn’t hypothetical. Cybercriminals are already using generative AI to launch email phishing attacks. By releasing Llama 2 as an open-source model, Meta has either ignored this potential for misuse or wagered that allowing misuse in the short term will contribute to AI safety in the long term.

Links:

- Test your ability to predict which sorts of tasks GPT-4 can accomplish.

- CAIS released a new report modeling the impact of AI safety field building. Here’s the introduction, student model, and professional model.

- A new short film explores the dangers of AI in military decision-making.

- Anthropic might give control of the company to a group of trustees not tied to Anthropic’s financial success.

- Senators Markey and Budd announce legislation to assess the biosecurity risks of AI.

See also: CAIS website, CAIS Twitter, a technical safety research newsletter, and An Overview of Catastrophic AI Risks
