Four days ago I posted the question "Why are you reluctant to write on the EA Forum?", with a link to a Google Form. I received 20 responses.

This post is in four parts:

- Summary of reasons people are reluctant to write on the EA Forum

- Suggestions for making it easier to contribute

- Positive feedback for the EA Forum

- Responses in full

Summary of reasons people are reluctant to write on the EA Forum

The form received 20 responses over four days.

All replies included a reason for being reluctant or unable to write on the EA Forum. Only a minority of replies included a concrete suggestion for improvement.

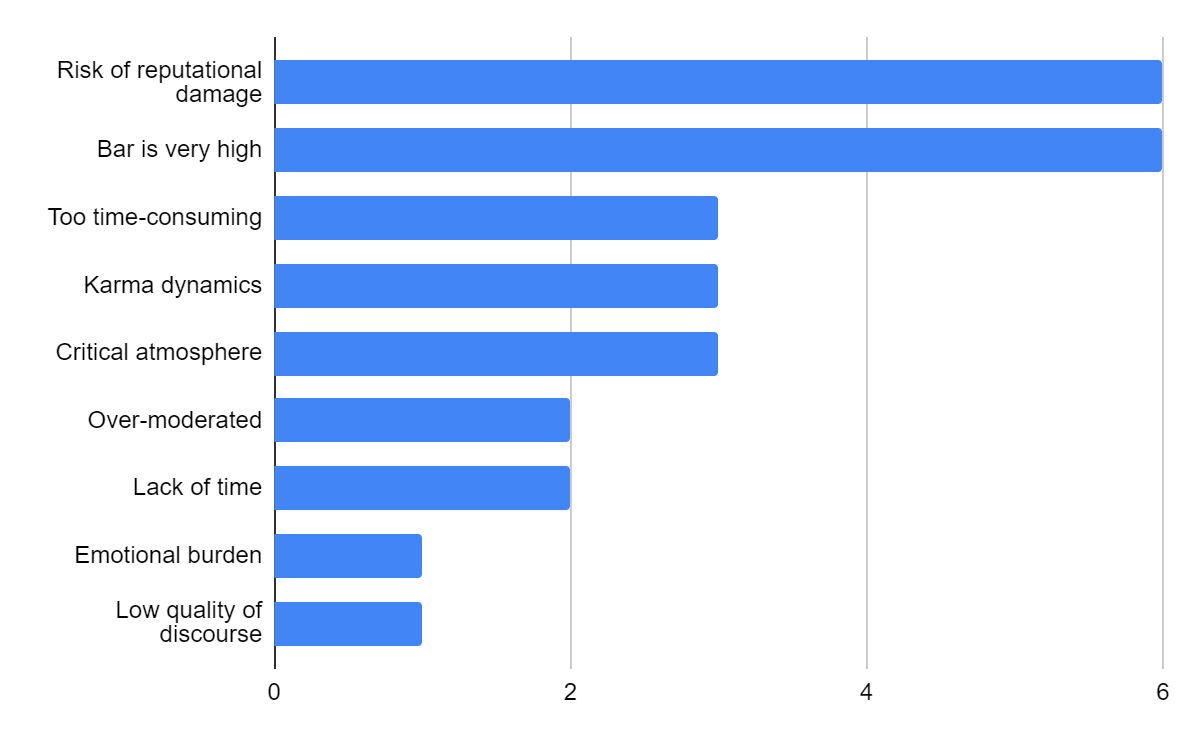

I have attempted to tally how many times each reason appeared across the 20 responses[2]:

Suggestions for making it easier to contribute

Below are all the concrete suggestions for helping people be less reluctant to contribute to the Forum, in the order in which they were received:

- More discourse on increasing participation: "more posts like these which are aimed at trying to get more people contributing"

- Give everyone equal Karma power: "If the amount of upvotes and downvotes you got didn't influence your voting power (and was made less prominent), we would have less groupthink and (pertaining to your question) I would be reading and writing on the EA-forum often and happily, instead of seldom and begrudgingly."

- Provide extra incentives for posting: "Perhaps small cash or other incentives given each month for best posts in certain categories, or do competitions, or some such measure? That added boost of incentive and the chance that the hours spent on a post may be reimbursed somehow."

- "Discussions that are less tied to specific identities and less time-consuming to process - more Polis like discussions that allow participants to maintain anonymity, while also being able to understand the shape of arguments."

- Lower the stakes for commenting: "I'm not sure if comment section can include 'I've read x% of the article before this comment'?"

Positive feedback for the EA Forum

The question invited criticism of the Forum, but it nevertheless garnered some positive feedback:

"For an internet forum it's pretty good. But it's still an internet forum. Not many good discussions happen on the internet."

"Forum team do a great job :)"

Responses in full

All responses can be found here.

You can judge for yourself here whether I correctly classified the responses.

I considered lumping "too time-consuming" and "lack of time" together, but decided against this because the former seems to imply "bar is very high", while the latter is merely a statement on how busy the respondent's life is.

Pseudonymity should work in most cases to address the risk of reputational damage, albeit at the cost of the potential reputational upsides for posting.