This is the second post in a series. The first post is here and the third post is here. The fourth post is here and the fifth post is here.

3. Defusing the initial worry

The previous section laid out the initial worry: Since Pascal’s Mugger’s argument is obviously unacceptable, we should reject the long-termists’ argument as well; expected utility arguments are untrustworthy. This section articulates an important difference between the long-termist’s proposal and the Mugger’s, and elaborates on how expected-utility reasoning works.

The initial worry can be defused. There are pretty good arguments to be made that the expected utility of giving money to the Mugger is not larger than the expected utility of giving money to the long-termist, and indeed that the expected utility of giving money to the Mugger is not high at all, relative to your other options.

Think about all the unlikely things that need to be true in order for giving the Mugger $5 to really save 10^10^10^10 lives. There have to be other dimensions that are accessible to us. They have to be big enough to contain that many people, yet easily traversable so that we can affect that many people. The fate of all those people needs to depend on whether or not a certain traveler has $5. That traveler has to pick you, of all people, to ask for the money. There has to be no time to explain, nor any special gadget or ability the traveler can display to convince you. Etc.

Now consider another thing that could happen. You give the $5 to AMF instead, and your donation saves a child’s life. The child grows up to be a genius inventor, who discovers a way to tunnel to different dimensions. Moreover, the dimensions thus reached happen to contain 10^10^10^10 lives in danger, which we are able to save using good ol’ Earthling ingenuity…

It’s not obvious that you are more likely to save 10^10^10^10 lives by donating to the Mugger than you are to save that many lives by donating to AMF. Each possibility involves a long string of very unlikely events. The case could be made that the AMF scenario is more probable than the Mugger scenario; failing that, it’s at least not obvious that the Mugger scenario is more probable—and hence, it’s not obvious that the expected utility of paying the Mugger is higher than the expected utility of donating to AMF.

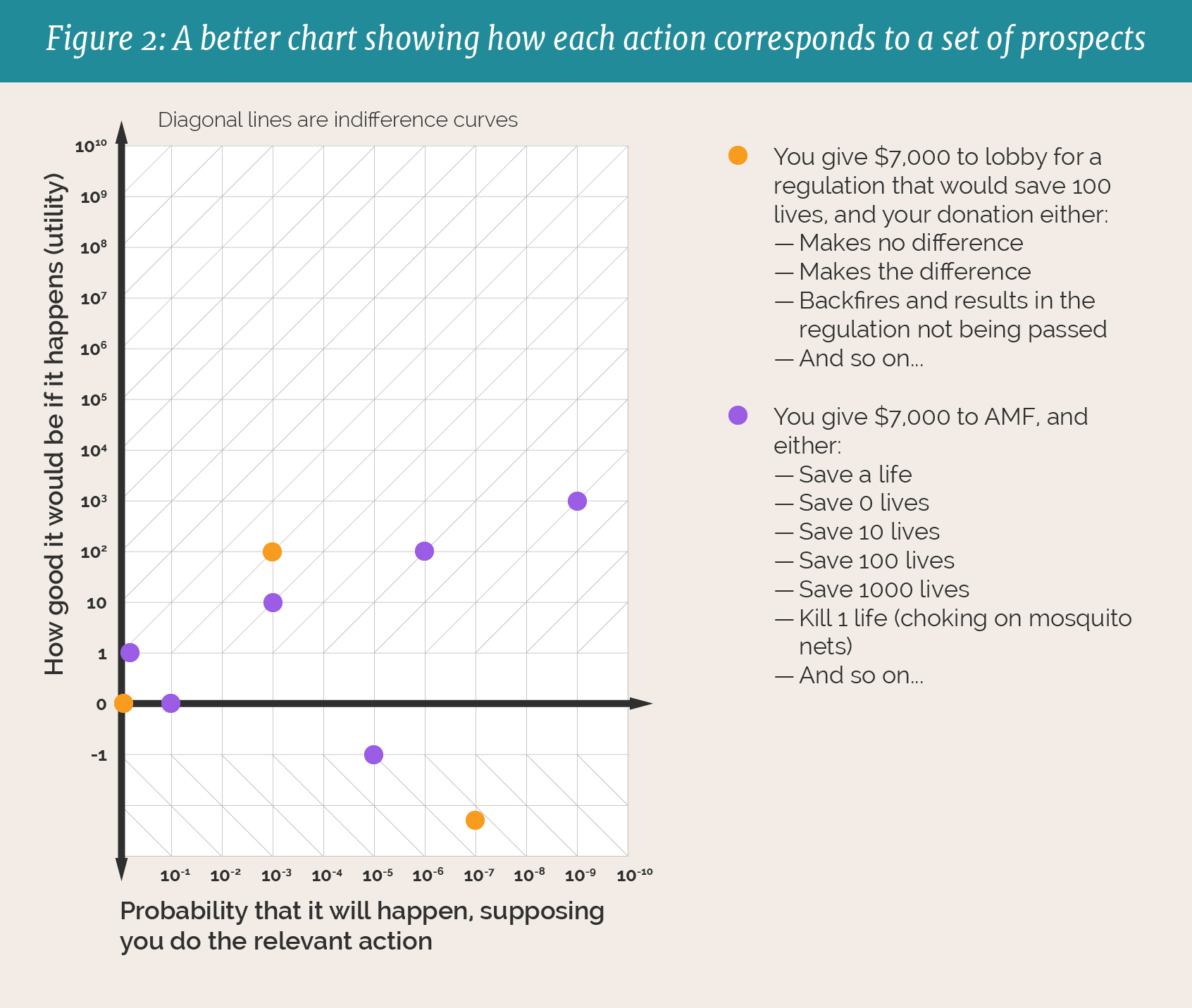

These considerations generalize. Thus far we have ignored the fact that each action has multiple possible outcomes. But really, each possible action should be represented on the chart by a big set of stars, one for each possible outcome of that action:

Each action has a profile of possible outcomes. The expected utility of an action is the sum, over all its possible outcomes, of each outcome’s probability times its utility:

$$EU(A) = \sum_{i \in O} P(i,A) \, U(i,A)$$

Here, A is the action we are contemplating, i ranges over O, the set of all possible outcomes, P(i,A) is the probability of getting outcome i, supposing we do A, and U(i,A) is the utility of getting outcome i supposing we do A.
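As a minimal sketch, the definition of expected utility above can be computed for a finite list of outcomes. The function name `expected_utility` and the two-outcome profile are hypothetical, with made-up numbers; with countably many outcomes the real sum is an infinite series, which a finite list like this only approximates.

```python
from fractions import Fraction

def expected_utility(profile):
    """Sum of P(i, A) * U(i, A) over a finite list of (probability, utility) pairs."""
    return sum(p * u for p, u in profile)

# Hypothetical two-outcome profile for some action A (numbers are made up).
profile_a = [
    (Fraction(9, 10), 0),  # nothing notable happens
    (Fraction(1, 10), 1),  # one life saved
]

print(expected_utility(profile_a))  # prints 1/10
```

Exact fractions are used so that very small probabilities are not lost to floating-point rounding.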

Thus, to argue that the expected utility of giving him $5 is higher than the expected utility of giving the money to AMF, the Mugger would need to give us some reason to think that the profile of possible outcomes of the former adds up to something more than the profile of possible outcomes of the latter. He has utterly failed to do this—even the sort of outcome he draws our attention to, the outcome in which you save 10^10^10^10 lives, is arguably more likely to occur via donating to AMF.

Can we say the same about the long-termist? No. Again, using AMF and x-risk as an example, it’s possible that we would save a child’s life from malaria, who would then grow up to prevent human extinction. But it’s much less probable that we’ll prevent human extinction via saving a random child than by trying to do it directly: the chain of unlikely events is long in both cases, but clearly longer in the former.

So the initial worry can be dispelled. Even though the probability that the Mugger is telling the truth is much larger than 0.1^10^10^10, this isn’t enough to make donating to him attractive to an expected-utility maximizer. In fact, we have no particular reason to think that donating to the Mugger has higher expected utility than donating to e.g. AMF, and arguably the opposite is true: Arguably, by donating to AMF, we stand an even higher chance of achieving the sort of outcome that the Mugger promises. Moreover, we can’t say the same thing about the long-termist; we really are more likely to prevent human extinction by donating to a reputable organization working on it directly than by donating to AMF. So we’ve undermined the Mugger’s argument without undermining the long-termist’s argument.

You may not be satisfied with this solution—perhaps you think there is a deeper problem which is not so easily dismissed. You are right. The next section explains why.

4. Steelmanning the problem: funnel-shaped action profiles

The problem goes deeper than the initial worry.

The “Pascal’s Mugging” scenario was invented recently by Eliezer Yudkowsky. But the more general philosophical problem of how to handle tiny probabilities of vast utilities has been around since 1670, when Blaise Pascal presented his infamous Wager. Pascal pointed out that the expected utility of an infinitely good possibility (e.g. going to heaven for eternity) is going to be larger than the expected utility of any possible outcome of finite reward, so long as the probability of the former is nonzero. So if you obey expected utility calculations, you’ll be a “fanatic”, living your entire life as if the only outcomes that matter are those of infinite utility. This is widely thought to be absurd, and so has spawned a large literature on infinite ethics.

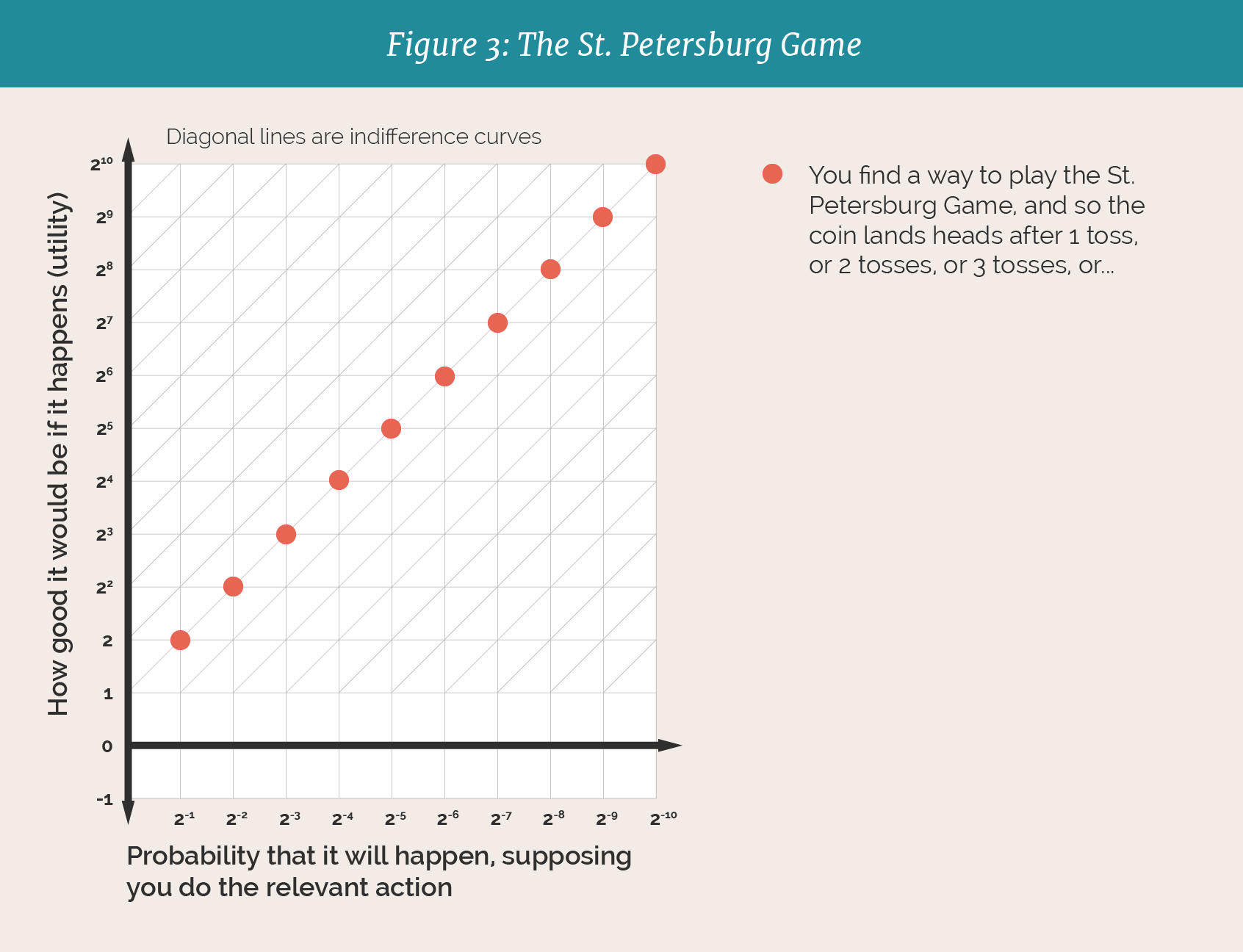

A common response is to say that infinities are weird and leave this for the philosophers and mathematicians to sort out. But as the St. Petersburg paradox (1713) shows, we still get problems even if we restrict our attention to outcomes of finite utility, and even if we restrict our attention to only finitely many possible outcomes. Consider the St. Petersburg Game. A fair coin is tossed repeatedly until it comes up heads, at toss n. When heads finally occurs, the game ends and produces an outcome of utility 2^n, as shown here:

If we consider all possible outcomes, the expected utility of playing the St. Petersburg game is infinite: There are infinitely many stars, and each one adds +1 to the total expected utility for playing the game. So, as with the original Pascal’s Wager involving possibilities of infinite reward, if we obey expected utility calculations we will be “fanatics” about opportunities to play St. Petersburg games. If we restrict our attention to the first N possible outcomes, the expected utility of playing the St. Petersburg game will be N—which is still problematic. People trying to sell us a ticket to play this game shouldn’t be able to make us pay whatever they want simply by drawing our attention to additional possible outcomes.
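A quick sketch of the truncation arithmetic, assuming (as above) that the outcome “first heads at toss n” has probability (1/2)^n and utility 2^n. The helper name `st_petersburg_truncated_eu` is hypothetical.

```python
from fractions import Fraction

def st_petersburg_truncated_eu(n_outcomes):
    """Expected utility of the St. Petersburg game counting only outcomes n = 1..n_outcomes.

    Outcome n (first heads on toss n) has probability (1/2)**n and utility 2**n,
    so each outcome contributes exactly 1 to the total.
    """
    return sum(Fraction(1, 2**n) * 2**n for n in range(1, n_outcomes + 1))

for n in (1, 10, 100):
    print(n, st_petersburg_truncated_eu(n))  # the truncated expected utility equals n
```

Each extra outcome we agree to consider adds exactly 1, so whoever chooses how far the list extends effectively chooses the price.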

It gets worse. There’s another game, the Pasadena Game, which is like the St. Petersburg game except that possible outcomes oscillate between positive and negative utility as you look further and further to the right on the chart. The expected utility of the Pasadena Game is undefined, in the following sense: Depending on the order in which you count the possible outcomes, the expected utility of playing the Pasadena Game can be any positive or negative number.
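The payoffs aren’t spelled out here, so the sketch below assumes the usual formulation of the Pasadena Game: if the first heads comes at toss n, the payoff is (-1)^(n-1) · 2^n / n, so outcome n contributes (-1)^(n-1)/n to expected utility. Summed in the natural order that series converges to ln 2, but it converges only conditionally, so a greedy reordering (the standard Riemann rearrangement argument) can steer the partial sums toward any target. The function names are hypothetical.

```python
import math

def pasadena_term(n):
    """Contribution P(n) * U(n) of outcome n, assuming U(n) = (-1)**(n-1) * 2**n / n
    and P(n) = 1 / 2**n, which simplifies to (-1)**(n-1) / n."""
    return (-1) ** (n - 1) / n

def rearranged_sum(target, num_terms):
    """Greedy Riemann rearrangement: add the next unused positive term (odd n)
    while the running total is at or below target, else the next unused negative term (even n)."""
    next_pos, next_neg = 1, 2  # smallest odd / even outcome index not yet used
    total = 0.0
    for _ in range(num_terms):
        if total <= target:
            total += pasadena_term(next_pos)
            next_pos += 2
        else:
            total += pasadena_term(next_neg)
            next_neg += 2
    return total

natural_order = sum(pasadena_term(n) for n in range(1, 100_001))
print(round(natural_order, 4), round(math.log(2), 4))  # both print 0.6931
for target in (-1.0, 0.0, 2.0):
    print(target, round(rearranged_sum(target, 200_000), 2))
```

Every ordering uses the same outcomes with the same probabilities; only the order of counting changes, and with it the “expected utility”.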

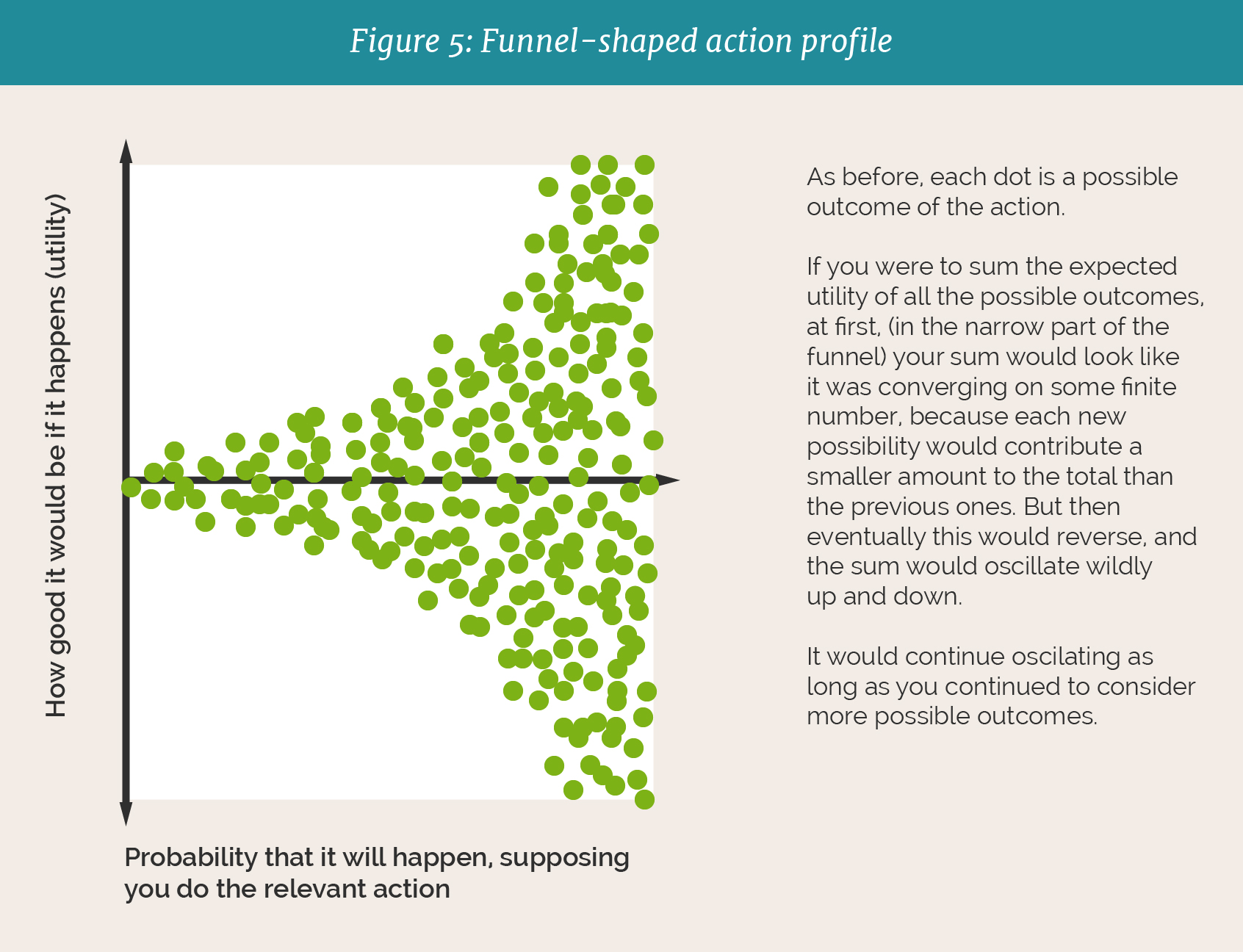

Good thing we aren’t playing these games, right? Unfortunately, we are playing these games every day. Consider a real-life situation, such as the one discussed earlier about where to donate your $7,000. Consider one of the actions available to you in that situation—say, donating $7,000 to AMF. Imagine making the chart bigger and bigger as you consider more and more possible outcomes of that action: “What if all of the nets I buy end up killing someone? What if they save the life of someone who goes on to cure cancer? What if my shipment of nets starts world war three?” At first, as you add these possibilities to the chart, they make little difference to the total expected utility of the action. They are more improbable than they are valuable or disvaluable—farther to the right than they are above or below—so they add or subtract tiny amounts from the total sum. Here is what that would look like:

Eventually you would start to consider some extremely unlikely possibilities, like “What if the malaria nets save the life of a visitor from another dimension who goes on to save 10^10^10^10 lives…” and “What if instead the extradimensional visitor kills that many people?” Then things get crazy. These outcomes are extremely improbable, yes, but they are more valuable or disvaluable than they are improbable. (This key claim will be argued for more extensively in the appendix.) So they dominate the expected utility calculation. Whereas at the beginning, the expected utility of donating $7,000 to AMF was roughly equal to saving 1 life for sure, now the expected utility oscillates wildly from extremely high to extremely low, as you consider more and more outlandish possibilities. And this oscillation continues as long as you continue to consider more possibilities. Visually, the action profile for donating $7,000 to AMF is funnel-shaped:
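To make the oscillation concrete, here is a toy profile. It is a made-up assumption, not a model of any real donation: outcome k has probability (1/2)^k and utility (-3)^k, so each contribution (-3/2)^k is larger in magnitude than the entire sum before it. The partial sums therefore flip sign at every step with growing amplitude, which is the funnel shape in miniature. Function names are hypothetical.

```python
from fractions import Fraction

def contribution(k):
    """P(k) * U(k) for the hypothetical toy profile P(k) = (1/2)**k, U(k) = (-3)**k."""
    return Fraction(1, 2**k) * (-3) ** k

def partial_sums(n):
    """Running expected utility after considering outcomes 1..n, one at a time."""
    sums, total = [], Fraction(0)
    for k in range(1, n + 1):
        total += contribution(k)
        sums.append(total)
    return sums

for k, s in enumerate(partial_sums(10), start=1):
    print(k, float(s))
```

A real action profile would also contain many ordinary outcomes near the left of the chart; they are omitted here because, on the funnel picture, they are eventually swamped anyway.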

Considering all possible outcomes, actions with funnel-shaped action profiles have undefined expected utility. If instead you only consider outcomes of probability p or higher for some sufficiently tiny p… then expected utility will be well-defined but it will be mostly a function of whatever is happening in the region near the cutoff. (The top-right and bottom-right corners of the funnel will weigh the most heavily in your decision-making.) For discussion of why this is, see appendix 8.1 (forthcoming).
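Using the same hypothetical toy profile (restated so the block runs on its own), a probability cutoff p does yield a finite, well-defined number, but its sign and size are set almost entirely by the last outcomes admitted. In this toy case, halving p admits exactly one more outcome and flips the verdict. The function `eu_with_cutoff` is a hypothetical name.

```python
from fractions import Fraction

def contribution(k):
    # Hypothetical toy profile: P(k) = (1/2)**k, U(k) = (-3)**k.
    return Fraction(1, 2**k) * (-3) ** k

def eu_with_cutoff(p):
    """Expected utility counting only outcomes whose probability is at least p."""
    if p <= 0:
        raise ValueError("cutoff must be positive, or the sum never ends")
    total, k = Fraction(0), 1
    while Fraction(1, 2**k) >= p:
        total += contribution(k)
        k += 1
    return total

for p in (Fraction(1, 2**20), Fraction(1, 2**21)):
    print(p, float(eu_with_cutoff(p)))
```

With p = 1/2^20, the single outcome sitting right at the cutoff contributes more than the whole total, which is the sense in which the corners of the funnel drive the decision.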

So the problem posed by tiny probabilities of vast utilities is that all available actions have funnel-shaped action profiles, making a mockery out of expected utility maximization. The problem is not that it would be difficult to calculate expected utilities; the problem is that expected utility calculations would give absurd answers if calculated correctly. Even if expected utility maximization wouldn’t recommend giving money to the Mugger, it would probably recommend something similarly silly.


Notes:

10: O is the set of all possible outcomes. I assume that there are countably many possible outcomes. I don’t think the problems would go away if we were dealing with uncountably many.

11: Note that the deeper problem is also discussed by Yudkowsky in his original post. There are, as I see it, three levels to the problem: Level I, discussed in section 2; Level II, discussed in section 4, which is about funnel-shaped action profiles; and Level III, mentioned in section 4, which is the fully general version of the problem where we consider all possible outcomes, including those involving infinite rewards.

12: The precise form this takes depends on some details. See Hájek (2012) and the Stanford Encyclopedia of Philosophy (SEP) for further discussion.

13: See e.g. Bostrom (2011) for a good introduction and overview. For a cutting-edge demonstration of how paradoxical infinite ethics can get (impossibility results galore!), see Askell (2018).

14: The probability of getting 7 tails in a row is 0.5^7 = 0.0078125. So the probability of getting heads within the first 7 tosses, and hence a payout of at most 2^7 = 128, is 0.9921875. So if e.g. N = 1 billion, then you’ll pay hundreds of millions of dollars to play a game that has a 99% chance of paying $128 or less.
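A quick check of this note’s arithmetic, assuming (as in section 4) that first heads on toss n pays 2^n and that utility equals dollars.

```python
from fractions import Fraction

# Assumed payoff rule: first heads on toss n pays 2**n, with P(first heads on toss n) = (1/2)**n.
p_seven_tails = Fraction(1, 2) ** 7        # game is still running after 7 tosses
p_heads_within_7 = 1 - p_seven_tails       # game ends by toss 7, so the payout is at most 2**7 = 128

print(float(p_seven_tails), float(p_heads_within_7))  # prints 0.0078125 0.9921875
```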

15: See e.g. Hájek & Smithson (2012).

16: This assumes what I take to be the standard view, in which strictly speaking there are infinitely many possible outcomes consistent with your evidence at any given time. If instead you think that there really are only finitely many possibilities, then see the next sentence.

17: I call it “funnel-shaped” because that’s an intuitive and memorable label. It’s not a precisely defined term. Technically, the problem is a bundle of related problems: Expected utility being undefined, and/or unduly sensitive to tiny changes in exactly which tiny possibilities you consider, and/or infinite for every action.

18: To spell that out a bit: We’d like to say that the ideally correct way to calculate expected utilities would be to consider all infinitely many possibilities, but that of course for practical purposes we should merely try to approximate that by considering some reasonably large set of possibilities. With funnel-shaped action profiles, there seems to be no such thing as a reasonably large set of possibilities, and no good reason to approximate the ideal anyway, since the ideal calculation always outputs “undefined.”

19: For example, if some possibilities have infinite value, then if one of our actions is even the tiniest bit more likely to lead to infinite value than another action, we’ll prioritize it. If there is a possibility of infinite value for every available action, then the expected utilities of all actions will be the same. For more discussion of this sort of thing, see appendix 8.3 (forthcoming).

Bibliography for this section

Askell, Amanda. (2018) Pareto Principles in Infinite Ethics. PhD Thesis, Department of Philosophy, New York University.

–This thesis presents some compelling impossibility results regarding the comparability of many kinds of infinite world. It also draws some interesting implications for ethics more generally.

Hájek, Alan. (2012) Is Strict Coherence Coherent? Dialectica 66(3): 411–424.

–This is Hájek’s argument against regularity/open-mindedness, based on (among other things) the St. Petersburg paradox.

Hájek, Alan and Smithson, Michael. (2012) Rationality and indeterminate probabilities. Synthese 187: 33–48. DOI: 10.1007/s11229-011-0033-3

–The argument appears here also, in slightly different form.

Bostrom, Nick. (2011) Infinite Ethics. Analysis and Metaphysics 10: 9–59.

–Discusses various ways to avoid "infinitarian paralysis," i.e. ways to handle outcomes of infinite value sensibly.

I am not very experienced in philosophy but I have a question.

You present a problem that needs solving: The funnel-shaped action profiles lead to undefined expected utility. You say that this conclusion means that we must adjust our reasoning so that we don't get this conclusion.

But why do you assume that this cannot simply be the correct conclusion from utilitarianism? Can we not say that we have taken the principal axioms of utilitarianism and, through correct logical steps, deduced a truth (from the axiomatic truths) that expected utility is undefined for all our decisions?

To me the next step after reaching this point would not be to change my reasoning (which requires assuming that the logical processes applied were incorrect, no?) but rather to reject the axioms of utilitarianism, since they have rendered themselves ethically useless.

I have a fundamental line of ethical reasoning that I would guess is pretty common here. It is this: Given what we know about our deterministic (and maybe probabilistic) universe, there is nothing to suggest that such things as good/bad or right/wrong choices exist, and so we come to the conclusion that nothing matters. However, this is obviously useless, and if nothing matters anyway then we might as well live by a kind of "next best" ethical philosophy that does provide us with right/wrong choices, just in case of the minuscule chance it is indeed correct.

However you seem to have suggested that utilitarianism just takes you back to the "nothing matters" situation which would mean we have to go to the "next next best" ethical philosophy.

Hmm I just realised your post has fundamentally changed every ethical decision of my life...

It would be greatly appreciated if anyone, not only the OP, answers my question. Thanks!

Welcome to the fantastic world of philosophy, friend! :) If you are like me you will enjoy thinking and learning more about this stuff. Your mind will be blown many times over.

I do in fact think that utilitarianism as normally conceived is just wrong, and one reason why it is wrong is that it says every action is equally choiceworthy because they all have undefined expected utility.

But maybe there is a way to reconceive utilitarianism that avoids this problem. Maybe.

Personally I think you might be interested in thinking about metaethics next. What do we even mean when we say something matters, or something is good? I currently think that it's something like "what I would choose, if I were idealized in various ways, e.g. if I had more time to think and reflect, if I knew more relevant facts, etc."

Huh, it's concerning that you say you see standard utilitarianism as wrong, because I have no idea what to believe if not utilitarianism.

Do you know where I can find out more about the "undefined" issue? This is pretty much the most important thing for me to understand, since my conclusion will fundamentally determine my behaviour for the rest of my life, yet I can't find any information except for your posts.

Thanks so much for your response and posts. They've been hugely helpful to me.

The philosophy literature has stuff on this. If I recall correctly, I linked some of it in the bibliography of this post. It's been a while since I thought about this, I'm afraid, so I don't have references in memory. Probably you should search the Stanford Encyclopedia of Philosophy for "Pasadena Game" and "St. Petersburg paradox".

Good post, but we shouldn't assume the "funnel" distribution to be symmetric about the line of 0 utility. We can expect that unlikely outcomes are good in expectation just as we expect that likely outcomes are good in expectation. Your last two images show actions which have an immediate expected utility of 0. But if we are talking about an action with generally good effects, we can expect the funnel (or bullet) to start at a positive number. We also might expect it to follow an upward-sloping line, rather than equally diverging to positive and negative outcomes. In other words, bed nets are more likely to please interdimensional travelers than they are to displease them, and so on.

Also, the distribution of outcomes at any level of probability should follow a roughly Gaussian distribution. Most bizarre, contorted possibilities lead to outcomes that are neither unusually good nor unusually bad. This means it's not clear that the utility is undefined; as you keep looking to sets of unlikelier outcomes you are getting a series of tightly finite expectations rather than big broad ones that might easily turn out to be hugely positive or negative based on minor factors. Your images of the funnel and bullet should show much more density along the middle, with less density at the top and bottom. We still get an infinite series, so there is that philosophical problem for people who want a rigorous idea of utilitarianism, but it's not a big problem for practical decision making because it's easy to talk about some interventions being better than others.

I'm not assuming it's symmetric. It probably isn't symmetric, in fact. Nevertheless, it's still true that the expected utility of every action is undefined, and that if we consider increasingly large sets of possible outcomes, the partial sums will oscillate wildly the more we consider.

Yes, at any level of probability there should be a higher density of outcomes towards the center. That doesn't change the result, as far as I can tell. Imagine you are adding new possible outcomes to consideration, one by one. Most of the outcomes you add won't change the EV much. But occasionally you'll hit one that makes everything that came before look like a rounding error, and it might flip the sign of the EV. And this occasional occurrence will never cease; it'll always be true that if you keep considering more possibilities, the old possibilities will continue to be dwarfed and the sign will continue to flip. You can never rest easy and say "This is good enough;" there will always be more crucial considerations to uncover.

So this is a problem in theory--it means we are approximating an ideal which is both stupid and incoherent--but is it a problem in practice?

Well, I'm going to argue in later posts in this series that it isn't. My argument is basically that there are a bunch of reasonably plausible ways to solve this theoretical problem without undermining long-termism.

That said, I don't think we should dismiss this problem lightly. One thing that troubles me is how superficially similar the failure mode I describe here is to the actual history of the EA movement: People say "Hey, let's actually do some expected value calculations" and they start off by finding better global poverty interventions, then they start doing this stuff with animals, then they start talking about the end of the world, then they start talking about evil robots... and some of them talk about simulations and alternate universes...

Arguably this behavior is the predictable result of considering more and more possibilities in your EV calculations, and it doesn't represent progress in any meaningful sense--it just means that EAs have gone farther down the funnel-shaped rabbit hole than everybody else. If we hang on long enough, we'll end up doing crazier and crazier things until we are diverting all our funds from x-risk prevention and betting them on some wild scheme to hack into an alternate dimension and create uncountably infinite hedonium.

>Imagine you are adding new possible outcomes to consideration, one by one. Most of the outcomes you add won't change the EV much. But occasionally you'll hit one that makes everything that came before look like a rounding error, and it might flip the sign of the EV.

But the probability of those rare things will be super low. It's not obvious that they'll change the EV as much as nearer term impacts.

This would benefit from an exercise in modeling the utilities and probabilities of a certain intervention to see what the distribution actually looks like. So far no one has bothered (or needed, perhaps) to actually enumerate the 2nd, 3rd, etc... order effects and estimate their probabilities. All this theorizing might be unnecessary if our actual expectations follow a different pattern.

>So this is a problem in theory--it means we are approximating an ideal which is both stupid and incoherent.

Are we? Expected utility is still a thing. Some actions have greater expected utility than others even if the probability distribution has huge mass across both positive and negative possibilities. If infinite utility is a problem then it's already a problem regardless of any funnel or oscillating type distribution of outcomes.

>Arguably this behavior is the predictable result of considering more and more possibilities in your EV calculations, and it doesn't represent progress in any meaningful sense--it just means that EAs have gone farther down the funnel-shaped rabbithole than everybody else.

Another way of describing this phenomenon is that we are simply seizing the low hanging fruit, and hard intellectual progress isn't even needed.

Yes, if the profiles are not funnel-shaped then this whole thing is moot. I argue that they are funnel-shaped, at least for many utility functions currently in use (e.g. utility functions that are linear in QALYs). I'm afraid my argument isn't up yet--it's in the appendix, sorry--but it will be up in a few days!

If the profiles are funnel-shaped, expected utility is not a thing. The shape of your action profiles depends on your probability function and your utility function. Yes, infinitely valuable outcomes are a problem--but I'm arguing that even if you ignore infinitely valuable outcomes, there's still a big problem having to do with infinitely many possible finite outcomes, and moreover even if you only consider finitely many outcomes of finite value, if the profiles are funnel-shaped then what you end up doing will be highly arbitrary, determined mostly by whatever is happening at the place where you happened to draw the cutoff.

That's what I'd like to think, and that's what I do think. But this argument challenges that; this argument says that the low-hanging fruit metaphor is inappropriate here: there is no lowest-hanging fruit or anything close; there is an infinite series of fruit hanging lower and lower, such that for any fruit you pick, if only you had thought about it a little longer you would have found an even lower-hanging fruit that would have been so much easier to pick that it would easily justify the cost in extra thinking time needed to identify it... moreover, you never really "pick" these fruit, in that the fruit are gambles, not outcomes; they aren't actually what you want, they are just tickets that have some chance of getting what you want. And the lower the fruit, the lower the chance...

>The shape of your action profiles depends on your probability function

Are you saying that there is no expected utility just because people have different expectations?

>and your utility function

Well, of course. That doesn't mean there is no expected utility! It's just different for different agents.

>I'm arguing that even if you ignore infinitely valuable outcomes, there's still a big problem having to do with infinitely many possible finite outcomes,

That in itself is not a problem: imagine a uniform distribution from 0 to 1.

>if the profiles are funnel-shaped then what you end up doing will be highly arbitrary, determined mostly by whatever is happening at the place where you happened to draw the cutoff.

If you do something arbitrary like drawing a cutoff, then of course how you do it will have arbitrary results. I think the lesson here is not to draw cutoffs in the first place.

>That's what I'd like to think, and that's what I do think. But this argument challenges that; this argument says that the low-hanging fruit metaphor is inappropriate here: there is no lowest-hanging fruit or anything close; there is an infinite series of fruit hanging lower and lower, such that for any fruit you pick, if only you had thought about it a little longer you would have found an even lower-hanging fruit that would have been so much easier to pick that it would easily justify the cost in extra thinking time needed to identify it... moreover, you never really "pick" these fruit, in that the fruit are gambles, not outcomes; they aren't actually what you want, they are just tickets that have some chance of getting what you want. And the lower the fruit, the lower the chance...

There must be a lowest hanging fruit out of any finite set of possible actions, as long as "better intervention than" follows basic decision theoretic properties which come automatically if they have expected utility values.

Also, remember the conservation of expected evidence. When we think about the long run effects of a given intervention, we are updating our prior to go either up or down, not predictably making it seem more attractive.
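The conservation-of-expected-evidence point can be checked numerically: the probability-weighted average of the possible posteriors equals the prior, so we cannot expect in advance that further thought will push an estimate in a particular direction. The probabilities below are made-up, hypothetical numbers.

```python
from fractions import Fraction

# Hypothetical numbers, chosen only for illustration.
p_h = Fraction(3, 10)              # prior P(H)
p_e_given_h = Fraction(4, 5)       # P(E | H)
p_e_given_not_h = Fraction(1, 5)   # P(E | not H)

p_e = p_e_given_h * p_h + p_e_given_not_h * (1 - p_h)   # law of total probability
post_if_e = p_e_given_h * p_h / p_e                     # P(H | E), by Bayes' rule
post_if_not_e = (1 - p_e_given_h) * p_h / (1 - p_e)     # P(H | not E), by Bayes' rule

expected_posterior = p_e * post_if_e + (1 - p_e) * post_if_not_e
print(post_if_e, post_if_not_e, expected_posterior)  # prints 12/19 3/31 3/10
```

One posterior is higher than the prior and the other lower, but their weighted average is exactly the prior.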

I think that the assumption of the existence of a Funnel shaped distribution with undefined expected value of things we care about is quite a bit stronger than assuming that there are infinitely many possible outcomes.

But even if we restrict ourselves to distributions with finite expected value, our estimates can still fluctuate wildly until we have gathered huge amounts of evidence.

So while I am sceptical of the assumption that there exists a sequence of world states with utilities tending to infinity and even more sceptical of extremely high/low utility world states being reachable with sufficient probability for there to be undefined expected value (the absolute value of the utility of our action would have to have infinite expected value, and I'm sceptical of believing this without something at least close to "infinite evidence"), I still think your post is quite valuable for starting a debate on how to deal with low probability events, crucial considerations and our decision making when expected values fluctuate a lot.

Also, even if my intuition about the impossibility of infinite utilities was true (I'm not exactly sure what that would actually mean, though), the problems you mentioned would still apply to anyone who does not share this intuition.