Doing good things is hard.

We’re gonna look at some deep tensions that attach to trying to do really good stuff. To keep it relatable(?!), I’ve included badly-drawn animals.

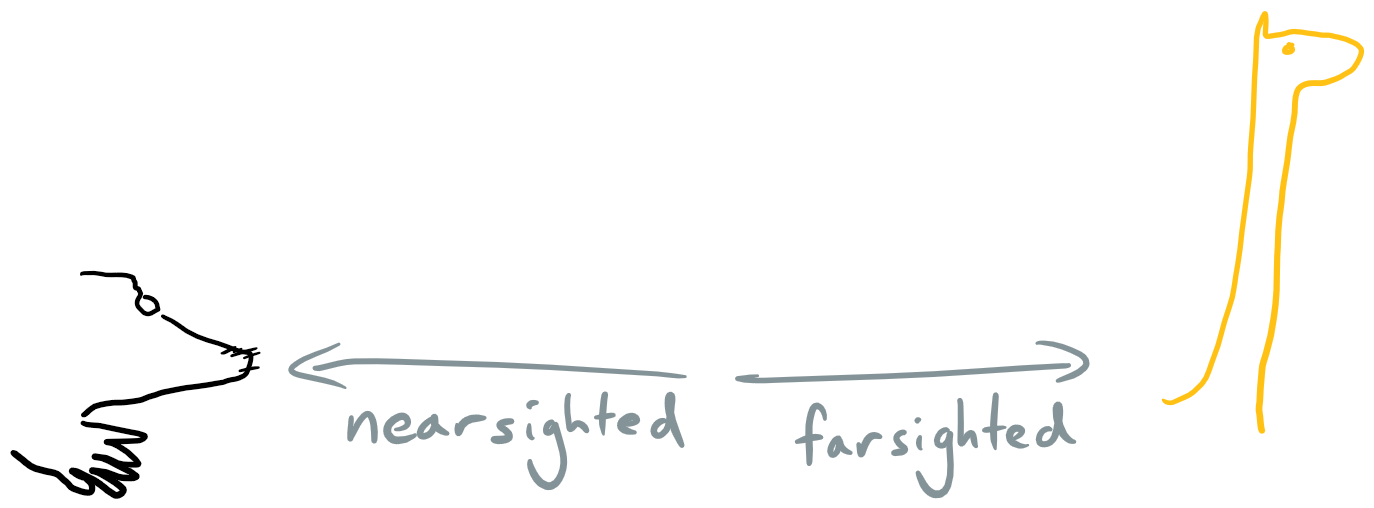

The mole pursues goals which are within comprehension and reach. At best the mole knows the immediate challenges extremely well and does a great job at dealing with them. At worst, the mole is digging in a random direction.

The giraffe looks into the distance, focusing on the big picture, and perhaps on challenges that will come up later but aren’t even apparent today. At best the giraffe identifies crucial directions to steer in. At worst, the giraffe doesn’t look where they’re going and trips over, or has ideas which are dumb because they don’t engage with details.

Moles have much more direct feedback loops than giraffes, so it’s harder to be a good giraffe than a good mole. When there’s a well-specified, achievable goal, you can set a mole at it. Consequently, many industries are structured with lots of mole-shaped roles. Idealists are often giraffes.

The beaver is industriously focused on the task at hand. The beaver rejects distractions and gets s*** done. At best, they are extremely productive. At worst, they miss big improvements in how they could go about things, or execute on a subtly wrong version of the task that misses most of the value.

The elephant is always asking how things are going, and whether the task is the right one. At their best, the elephant reorients things in better directions or finds big systemic improvements. At their worst, the elephant fails to get anything done because they can’t settle on what they’re even trying to do.

The mole and beaver are cousins, as are the giraffe and elephant. But you certainly get mole-elephants (applying lots of meta-level reflection, but only to local goals) and giraffe-beavers (focused purely on the object level of the big picture).

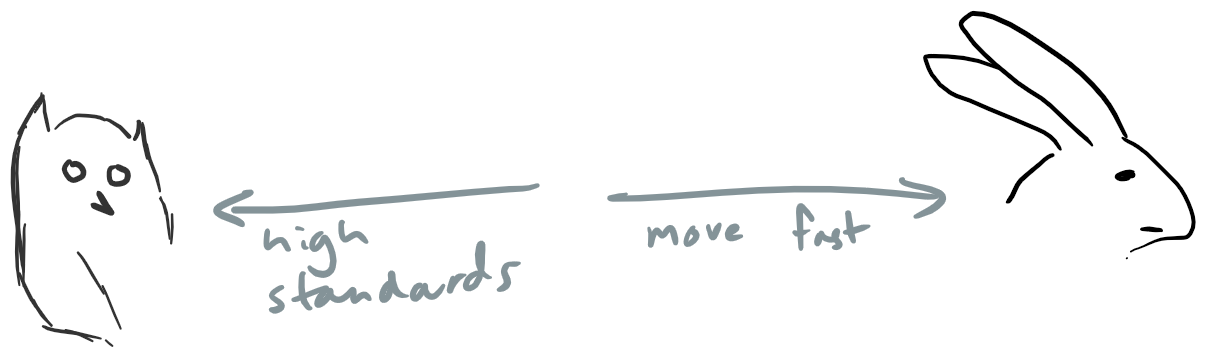

The owl is a perfectionist. They have high standards and want everything to meet them. At their best, they make things excellent and perfectly crafted. If you want to produce the best X in the world, you probably need at least one owl involved. At their worst, they stall on projects because something isn’t quite right, they can’t see how to fix it, and leaving it as it is feels unsatisfying.

The hare likes to ship things. They feel urgency all the time and hate letting the perfect be the enemy of the good. At their best, they just make things happen! The hare can also be a good learner because they charge at things: sometimes they bounce off and get things wrong, but they get loads of experience, and so loads of chances to learn. At their worst, the hare produces loads of stuff, but it’s all junk.

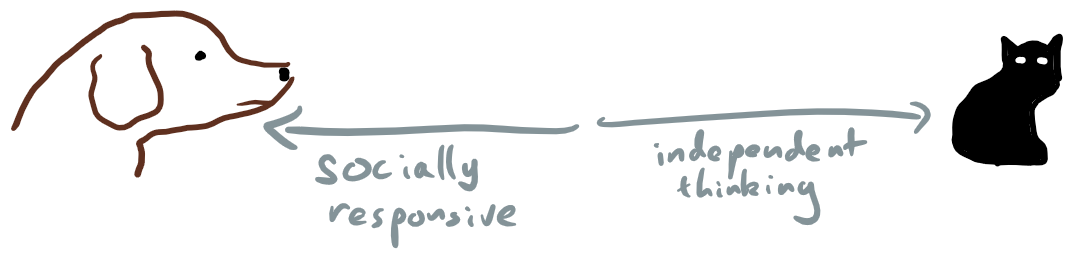

The dog is very socially governed and approval-seeking. They are excited to do things that people (particularly the cool people) will think are cool. At their best, they provide a social fabric that makes coordination simple: if someone else wants a thing done, they’re happy to do it without slowing everything down by first making sure they understand the deep reasons why it’s wanted. They also make sure gaps get filled, jumping onto projects to help out or picking up balls that everyone agrees are important. At their worst, they chase after hype and status without providing any meaningful check on whether the ideas they’re following are actually good.

The cat doesn’t give tuppence for what anyone else thinks. They’re just into working out what seems good and going for it. All new ideas involve at least a bit of cat. At their best, cats head into the wilderness and bring back something amazing. At their very worst, they do something stupid and damaging that proper socialisation would have stopped. The more common cat failure modes are to wander too far from consensus and end up working on things that don’t matter (where consensus would have made it easier to see that they didn’t matter), or to not know how to bring their catch back to the pack, so that it languishes. Cats are proverbially difficult to herd.

All of the animals are archetypes. Each is attending to something important, but each becomes pathological when taken too far. I think they’re useful to understand. The most valuable characteristics will vary quite a lot with the task, project, or role. On each dimension, I think we often need some balance; that can come from pairing people with different strengths, but it can also be good for individuals to learn how to integrate the strengths of both archetypes. Sometimes this might mean switching between them (e.g. I think this is often correct for the beaver and elephant); sometimes it might mean a deeper integration.

Many of the people I’ve talked to about this identify more readily with one end of some (or all) of the dimensions. You might like to take a minute and see if that seems true for you. (In some cases the answer might vary with context — maybe you’re a hare for fiction writing but lean owlish for programming.) Probably the end you identify with represents a strength. That’s worth holding onto and leaning into! See if you can design your work around your strengths.

But also perhaps try connecting with what’s good about the opposite end. I think real mastery often involves being able to access the strengths of all the archetypes.

This was originally written for the Research Scholars Programme onboarding. Thanks to several people (both inside & outside RSP) who provided helpful comments or inspiration.

Interesting idea!

I'm keen for the language around this to convey the correct vibe about the epistemic status of the framework: currently I think this is "here are some dimensions that I and some other people find helpful for our thinking", but not "we have well-validated ways of measuring any of these things", nor "this is definitely the most helpful carving-up in the vicinity", nor "this was demonstrated to be helpful for building a theory of change for intervention X which did verifiably useful things". I think the animal names/pictures are kind of playful and help to convey that this isn't yet attempting to be in epistemically solid land?

I guess I'm interested in the situations where you think an abbreviation would be helpful. Do you want someone to make an EA personality test based on this?