Epistemic status: Quite speculative, but I think these risks are important to talk about.

Important edit to clarify what might not have been obvious before: I don't think EA orgs should pay "low" salaries. I use the term 'moderate' rather than 'low' because I don't think paying low salaries is good (for reasons mentioned by both myself and Stefan in the comments). Instead, I'm concerned about more orgs paying $150,000+ salaries (or 120%+ of market rate) on a regular basis, not about paying people $80,000 or so. Obviously exceptions apply, as I mentioned to Khorton below, but salaries should at least reach the point where everyone's material needs (and those of their families/dependents) can be met.

There’s been a fair bit of discussion recently about the optics and epistemics related to EA accruing more money. However, one thing I believe hasn’t been discussed in great detail is the potential for value alignment to worsen as salaries increase.

In summary, I have several hypotheses about how this could look:

- High salaries at EA orgs will draw people for whom altruism is less of a central motivation, as they are now enticed by financial incentives.

- This will lead to reduced alignment with key EA principles, such that employees within EA organisations might focus less on doing the most good, relative to their other priorities.

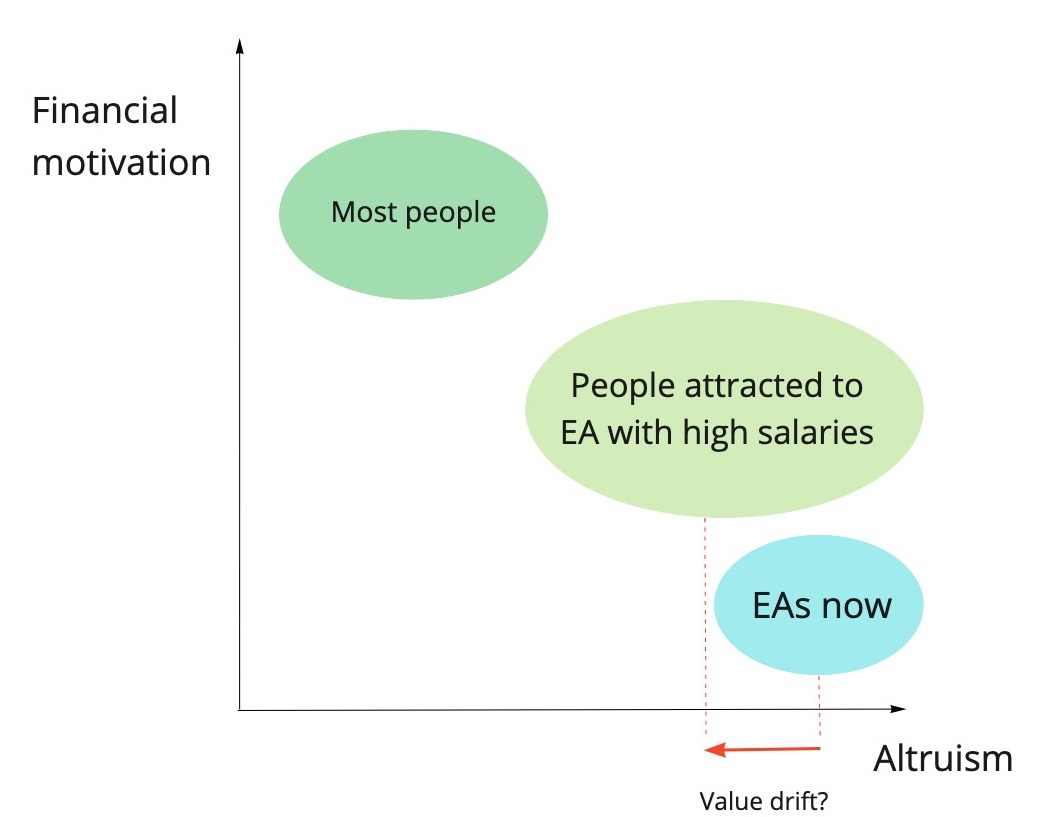

- If left unmitigated, this could lead to gradual value drift within EA as a community,[1] where we are no longer truly focused on maximising altruistic impact.

The trade-offs

First and foremost, high salaries within EA orgs can obviously be good. There are clear benefits e.g. attracting high-calibre individuals that would otherwise be pursuing less altruistic jobs, which is obviously great. In addition, even high salaries might still be far below the value we perceive these roles to create, so they may still be worth it from an altruistic impact perspective. However, I think there’s been little discussion of the dangers of high salaries within EA, so I’ll focus on those in this piece.

The pull of high salaries

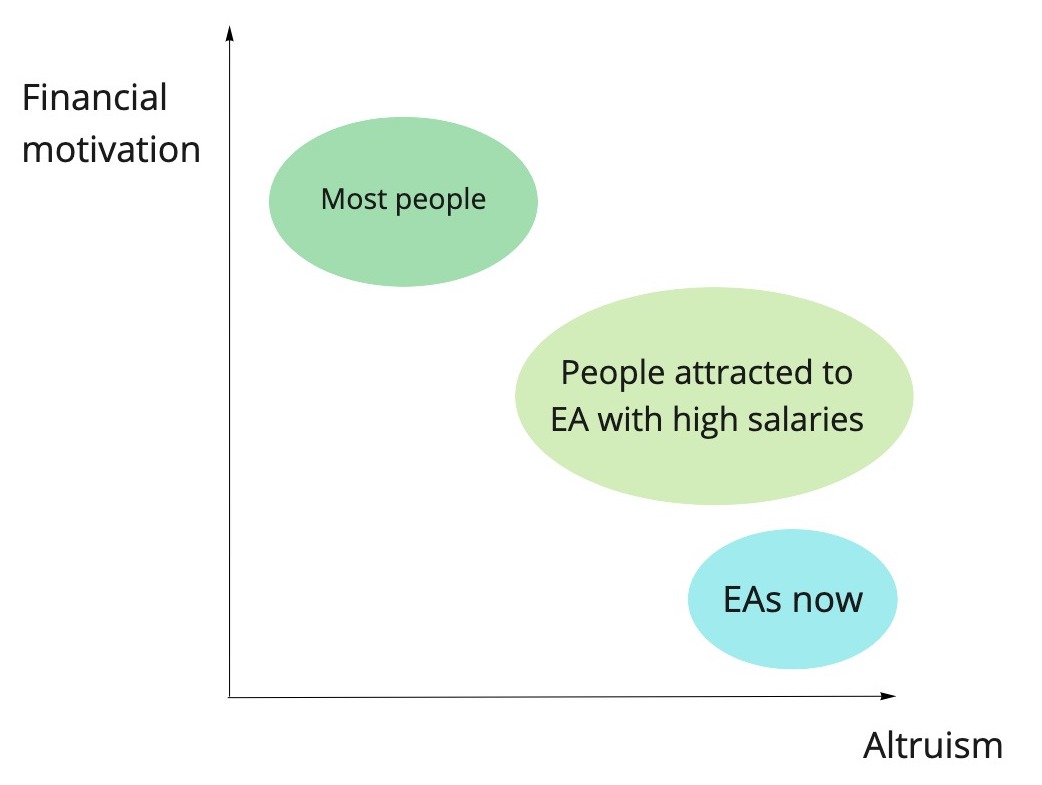

One core claim is that EA organisations offering high salaries will attract less altruistically-minded people relative to most people currently working at EA orgs (which I’ll call “EAs now” as a shorthand). This is not to say that these people attracted by higher salaries won’t be altruistic at all – but I believe those with strong altruistic motivations would be happy with moderate salaries, as their core values focus on impact rather than financial reward. As high salaries become more common across EA, due to increased funding and the importance of attracting the most talented individuals, this issue could become widespread in the EA community.

To make my point clearer, I’ve sketched out some diagrams below of how salaries might affect the EA community in practice. There are obviously many more factors that people take into account when searching for a job (e.g. career capital, personal fit), but I’m assuming these stay roughly constant as salaries change.[2]

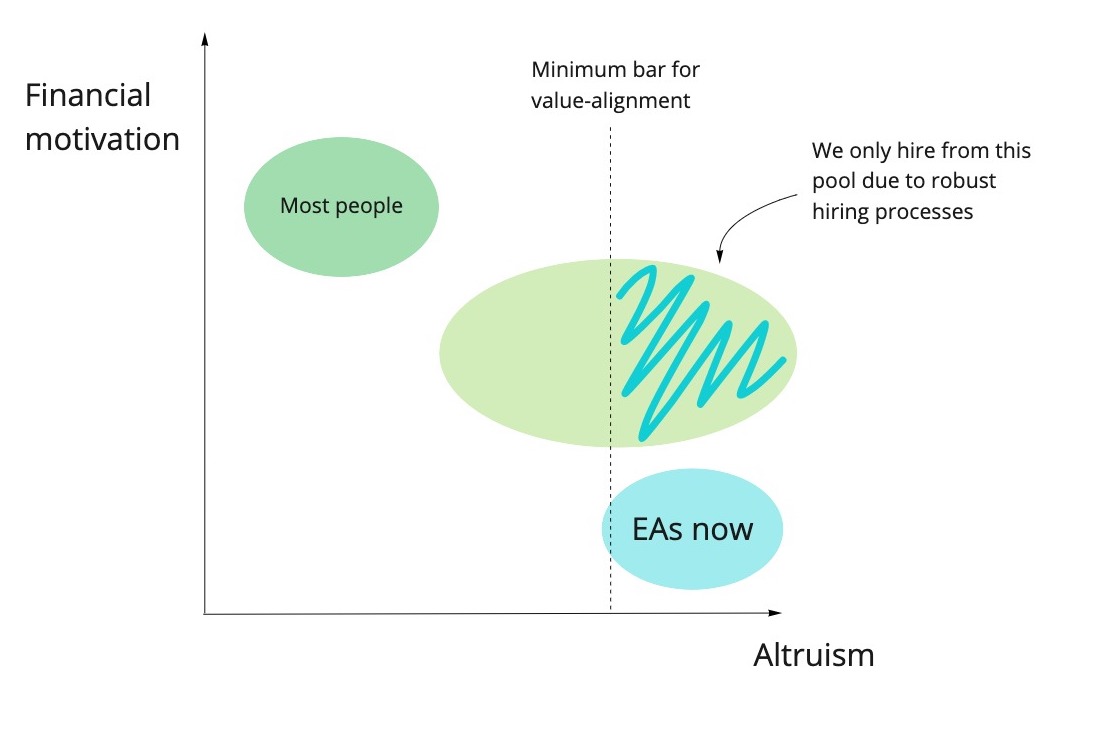

Whilst this is oversimplified, I anticipate that if we hire the median applicant, the applicant from a moderate-salary pool will have a higher altruistic inclination than the one from a high-salary pool. In addition, as noted by Sam Hilton here, higher salaries might actually deter some highly value-aligned EAs. This is because jobs with higher salaries are more likely to be filled by good candidates anyway and therefore have lower counterfactual impact (which matters more to highly altruistic people). Of course, we might avoid the issue of selecting less value-aligned people by having watertight recruitment processes that strongly select for altruism. However, I don’t think this is straightforward. For example, how does one reliably discern between 7/10 altruism and 8/10 altruism? I’ll return to this below when discussing how we might mitigate this potential problem.
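The selection effect in the diagrams can be sketched as a toy simulation. This is an illustration under invented assumptions, not data: altruism scores (0 to 10) and outside-option salaries are drawn independently and uniformly, and each altruism point is assumed to make a candidate willing to accept a 5% larger pay cut. The function `applicant_altruism` and every parameter in it are hypothetical; the $80,000 and $150,000 salaries echo the figures in the edit note at the top of the post.

```python
import random
import statistics


def applicant_altruism(salary, n=10_000, seed=0):
    """Altruism scores (0-10) of the hypothetical candidates who would apply at `salary`.

    Toy assumptions (hypothetical, not from the post): altruism and outside-option
    pay are independent and uniform, and each altruism point makes a candidate
    accept a 5% larger pay cut relative to their outside option.
    """
    rng = random.Random(seed)  # same seed -> same candidate population at every salary
    pool = []
    for _ in range(n):
        altruism = rng.uniform(0, 10)
        outside_option = rng.uniform(60_000, 200_000)
        # Lowest salary this candidate would accept under the assumed pay-cut rule.
        reservation_wage = outside_option * (1 - 0.05 * altruism)
        if salary >= reservation_wage:
            pool.append(altruism)
    return pool


moderate = applicant_altruism(80_000)
high = applicant_altruism(150_000)
print("moderate:", len(moderate), "applicants, median altruism", round(statistics.median(moderate), 2))
print("high:", len(high), "applicants, median altruism", round(statistics.median(high), 2))
```

Because the same seeded population is reused, everyone who would apply at $80,000 also applies at $150,000, so the high-salary pool is larger but its median altruism is lower. The result follows directly from the built-in assumption that altruism is what drives willingness to take a pay cut; if that link is weak, the gap shrinks.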

The dangers of value misalignment in organisations

One common rebuttal might be “Not everyone in the EA org has to be perfectly value-aligned!”. I’ve certainly heard lots of discussions about whether operations folks need to be value-aligned, or whether highly competent non-EAs could also do operations within EA organisations. My view is that almost all employees within organisations need to be strongly value-aligned, for two main reasons:

- They will make object-level decisions about the future of your work, based on judgement calls and internal prioritisation

- They will influence the culture of your organisation

Object-level work

It seems pretty clear that most roles, especially within small organisations (as most EA orgs are), will have significant influence on the work carried out. Some deliberately exaggerated examples:

- A researcher will often decide which research questions to prioritise and tackle. A value-aligned one might seek to tackle questions around which interventions are the most impactful, whereas a less value-aligned researcher might choose to prioritise questions which are the most intellectually stimulating.

- An operations manager might make hiring decisions within an organisation. A less value-aligned operations manager might therefore attract similarly less value-aligned candidates, leading to a gradual worsening in altruistic alignment over time. It’s a common bias to hire people who are like you, which could have serious consequences over time, e.g. a gradual erosion of altruistic motivation to the point where less value-aligned people become the majority within an organisation.

In essence, I think most roles within small organisations will influence object-level work through prioritisation and judgement calls, so it seems unrealistic to claim that value alignment isn’t important for most roles.[3]

Cultural influence

This is largely based on my previous experience, but I think a single person can have a significant impact on organisational culture. A common phrase in the start-up world is “Hire Slow, Fire Fast”, and I think it exists for a reason. Non-value-aligned employees can lead to conflict, lost productivity and internal issues that might significantly delay an organisation’s actual work.

In the worst case, similar to the operations manager example, less-than-ideal values can spread around the organisation, either through hiring or social influence. This could lead to a rift between two parties with incompatible views, where trust and teamwork break down significantly. Whilst this is anecdata, I’ve experienced it myself: a group of 2 less value-aligned individuals significantly detracted from the work of 10 people, to the point where it almost forced some of our highest-performing staff to leave, due to the intense conflict and our inability to execute on our goals.

Rowing vs steering

Another useful lens for this issue is Holden’s framework about Rowing, Steering, Anchoring, Equity and Mutiny.

In this case, I’ll define the relevant terms as:

- Rowing = Help the EA ship (i.e. EA community) reach its current destination faster

- Steering = Navigate the EA ship to a better destination than the current one

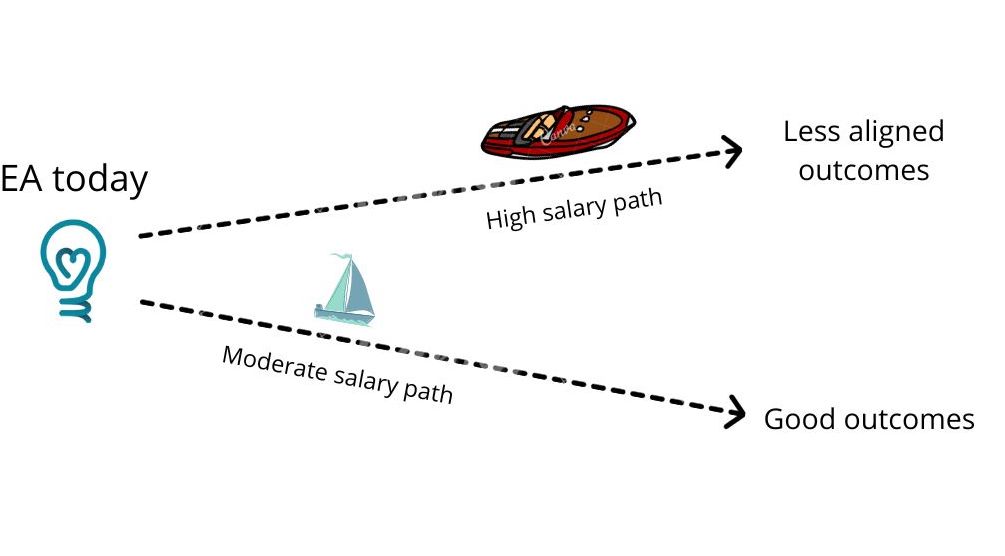

There are clear benefits to paying higher salaries, namely attracting higher-calibre people to work on the world’s most pressing problems. This in turn could mean we actually solve some of these global challenges sooner, potentially helping huge numbers of humans or nonhuman animals. However, there is a trade-off too. We might be rowing faster with more talented individuals, but are we going in the right direction?

If what I’ve hinted at above is directionally correct, i.e. that higher salaries could worsen value alignment to altruism within EA organisations, then this has the potential to steer the entire EA community off course. Continuing with the wonderful rowing vs steering analogy, one can see how this might look graphically in the diagram below. In short, although higher salaries might mean more talented people and therefore more efficient work, they might ultimately take us off the optimal trajectory. On the other hand, moderate salaries and high value alignment could mean slower progress, but ultimately progress toward maximally altruistic goals.

One caveat is if you have particularly short AI timelines, or otherwise think existential risk levels are extremely high. In that case, it might be worth sacrificing some internal value alignment purely to ensure the survival of humanity.

What is high-salary and what is moderate?

I’m not exactly sure where the line between “moderate” and “high” salaries lies, and interpretations will likely depend on the reader’s personal experiences. There have been some numbers thrown around for rough salary caps at EA orgs, such as 80% of the for-profit rate for a similar role. However, as Hauke also mentions above, I believe this 80% rate should be progressive rather than flat, as it seems somewhat excessive to pay employees of EA orgs $400,000 even if they could reasonably command $500,000 in the for-profit world, which is quite plausible in certain industries (e.g. software development, consulting, quant trading). There are obvious caveats to this, e.g. increasing the salary of Open Phil's CEO from $400k (I have no idea if this is the real figure) to $4,000,000 could be worth it if it led them to allocate their ≈$500m/year of grantmaking just 1% more effectively. I'm slightly worried about EA using motivated reasoning and Pascal's-mugging-style arguments to justify extremely high salaries, but I'm not sure how to square this with the possibility of genuinely higher expected value.[4]
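The Open Phil example above can be checked with simple arithmetic, using the post's own illustrative figures (the $400k salary is explicitly a guess) and the simplifying assumption that allocating grants 1% more effectively is worth about 1% of the annual grant budget:

```latex
\underbrace{\$4{,}000{,}000 - \$400{,}000}_{\text{extra salary cost per year}} = \$3{,}600{,}000
\;<\;
\underbrace{0.01 \times \$500{,}000{,}000}_{\text{assumed value of 1\% better allocation}} = \$5{,}000{,}000
```

Under those assumptions the raise pays for itself, but the comparison is only as good as the 1% figure, which is exactly where the motivated-reasoning worry applies.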

Another way to set salaries could be based on the point at which higher salaries bring negligible marginal returns to emotional wellbeing: one study suggests a cut-off around $75,000, while another implies it is much higher.[5]

How might we mitigate some of these dangers?

- Salary benchmarks of up to 80% of market rate, as suggested above, applied progressively so that the percentage of market rate falls as salaries increase (e.g. it could be 60% for a $200,000 market salary, leading to a $120,000 non-profit salary).[6]

- I’m highly unsure about this, as some EA orgs currently pay above this rate, e.g. this comment highlights an EA org paying ≈150% of the market rate. But there is clearly a need for talented EA operations folks, so a great operations hire who might be worth 5-10x their salary could be worth it from an altruistic impact perspective, if the higher pay attracts much better candidates.

- Non-financial perks for employees such as a training and development budget, a mental health budget, 10% of time to be spent on up-skilling, catered food, etc.

- Performance-based pay tied to the impact generated (an idea from Hauke's great comment here).

- Build intrinsic motivation using ideas from self-determination theory, by giving employees greater autonomy, relatedness and competence in the workplace.[7] Some tangible tips are listed here.

- Robust hiring processes that filter out people with insufficient value alignment, so that we only hire people who meet our current bar for altruism (see diagram below). This is easier said than done, and I’m sure EA orgs are already trying very hard to select for the most value-aligned people. However, I do think this will become increasingly important if EA salaries keep rising, putting additional pressure on recruiters to discern between a 7/10 aligned individual and an 8/10 aligned individual. Whilst this distinction might not seem relevant at the individual level, I believe such differences will compound and become quite important at the community level.

- Anecdotally, I’ve seen an organisation’s huge impact (actual or promised) serve as a substitute for financial incentives.

- I saw this whilst working for Animal Rebellion and Extinction Rebellion, doing grassroots movement building. It's an extreme example, but people (including myself, for 2.5 years) often work for less than the UK minimum wage (I was earning approx. £800/month) because we felt that what we were doing was so important to the world that it needed to happen regardless of our financial gain.

- Obviously I’m not saying that EA organisations should promise to deliver things they can’t, but I think this is an example of how organisations that do deliver huge impact can afford to pay lower salaries, as the attraction of having a huge positive impact on the world should be sufficient to entice most altruistically motivated and talented people.

- The solutions I’ve listed above probably aren’t great, but this is something we have to watch out for, and I hope some people are thinking about these problems and about optimal compensation at EA orgs! I'd definitely be keen to hear more ideas below.
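The first suggestion above, a progressive salary benchmark, can work like income-tax brackets in reverse: each slice of the market salary is paid at its own rate, so the overall percentage of market rate falls as market salary rises. The sketch below assumes hypothetical brackets (80% of the first $100,000, 40% of the next $100,000, 20% above $200,000) that are not proposed in the post; they were chosen only because they reproduce its $200,000 → $120,000 example. The name `progressive_benchmark` is likewise illustrative.

```python
import math

# Hypothetical brackets, NOT proposed by the post:
# (upper edge of a market-salary slice in USD, share of that slice paid)
BRACKETS = (
    (100_000, 0.80),
    (200_000, 0.40),
    (math.inf, 0.20),
)


def progressive_benchmark(market_salary, brackets=BRACKETS):
    """Non-profit salary benchmark where each slice of the market salary is paid
    at its own rate, so the overall share of market rate falls as salary rises."""
    if market_salary < 0:
        raise ValueError("market_salary must be non-negative")
    total = 0.0
    lower = 0.0
    for upper, rate in brackets:
        if market_salary <= lower:
            break  # no part of the salary falls in this or any later slice
        total += (min(market_salary, upper) - lower) * rate
        lower = upper
    return round(total, 2)


for market in (80_000, 200_000, 500_000):
    pay = progressive_benchmark(market)
    print(f"market ${market:,}: benchmark ${pay:,.0f} ({pay / market:.0%} of market)")
```

With these brackets, an $80,000 market salary maps to $64,000 (80%), $200,000 to $120,000 (60%) and $500,000 to $180,000 (36%). Marginal brackets avoid the cliff a stepped flat rate would create, where a small rise in market salary could lower the benchmark.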

Credit

Thanks to Akhil Bansal, Leonie Falk, Sam Hilton and others for comments on this. All views and mistakes are my own of course.

- ^

- ^

My diagrams are also simplified in other ways e.g. it’s not to scale and the real distributions are almost certainly not random ovals I drew.

- ^

I’m sure there are exceptions to this rule, but I think these are the minority of cases.

- ^

For example, reducing existential risk by just 0.00000000001% could theoretically be worth saving 10^20 lives, for which most EAs should rationally pay an extremely high price. Therefore, one could use such calculations to justify very large ($1-10+ million) salaries at basically any longtermist org, for any role.

- ^

H/T Leonie Falk

- ^

Obviously this runs into the same problems about trade-offs between value alignment and attracting more talented people. It’s not obvious how one should balance these considerations, but this is an iteration of one possible solution.

- ^

I’ve only just learned about self-determination theory and how it can be applied to workplaces, so I might be totally naive to any shortcomings.

Thanks, I think this post is thoughtfully written. I think that arguments for lower salaries are sometimes quite moralising or based on moral purity, as opposed to focused on impact. By contrast, you give clear and detached impact-based arguments.

I don't quite agree with the analysis, however.

You seem to equate "value-alignment" with "willingness to work for a lower salary". And you argue that it's important to have value-aligned staff, since they will make better decisions in a range of situations:

I rather think that value-alignment and willingness to work for a lower salary come apart. I think there are non-trivial numbers of highly committed effective altruists - who would make very careful decisions regarding what research questions to prioritise and tackle, and who would be very careful about hiring decisions - who would not be willing to work for a lower salary. Conversely, I think there are many people - e.g. people from the larger non-profit or do-gooding world - who would be willing to work for a lower salary, but who wouldn't be very committed to effective altruist principles. So I don't think we have any particular reason to expect that lower salaries would be the most effective way of ensuring that decisions about, e.g. research prioritisation or hiring are value-aligned. That is particularly so since, as you notice in the introduction, lower salaries have other downsides.

As far as I understand, you are effectively saying that effective altruists should pay lower salaries, since lower salaries are a costly signal of general value-alignment - value-aligned people would accept a lower salary, whereas people who are not value-aligned would not. This is an argument that's been given multiple times lately in the context of EA salaries and EA demandingness, but I'm not convinced of it. For instance, in research on the general population led by Lucius Caviola, we found a relatively weak correlation between what we call "expansive altruism" (willingness to give resources to others, including distant others) and "effectiveness-focus" (willingness to choose the most effective ways of helping others). Expansive altruism isn't precisely the same thing as willingness to work for a lower salary, and things may look a bit different among potential applicants to effective altruist jobs - but it nevertheless suggests that willingness to work for a lower salary need not be as useful a costly signal as it may seem.

More generally, I think what underlies these ideas of using lower salaries as a costly signal of value-alignment is the tacit assumption that value-alignment is a relatively cohesive, unidimensional trait. But I think that assumption isn't quite right - as stated, our factor analyses rather suggested there are two core psychological traits defining positive inclinations to effective altruism (expansive altruism and effectiveness-focus), which aren't that strongly related. (And I wouldn't be surprised if we found further sub-facets if we did more extensive research on this.)

For these reasons, I think it's better for EA recruiters to try to gauge, e.g. inclinations towards cause-neutrality, willingness to overcome motivated reasoning, and other important effective altruist traits, directly, rather than to try to infer them via their willingness to accept a lower salary - since those inferences will typically not have a high degree of accuracy.

[Slightly edited]

I don’t think we can infer too much from this result about this question.

The first thing to note, as observed here, is that taken at face value, a correlation of around 0.243 is decently large, both relative to other effect sizes in personality psychology and in absolute terms.

However, more broadly, measures that have been constructed in this way probably shouldn’t be used to make claims about the relationships between psychological constructs (either which constructs are associated with EA or how constructs are related to each other).

This is because the ‘expansive altruism’ and ‘effectiveness-focus’ measures were constructed, in part, by selecting items which most strongly predict your EA outcome measures (interest in EA etc.). Items selected to optimise prediction are unlikely to provide unbiased measurement (for a demonstration, see Smits et al (2018)). The items can predict well both because they are highly valid and because they introduce endogeneity, and there is no way to tell the difference just by observing predictive power.

This limits the extent to which we can conclude that psychological constructs (expansive altruism and effectiveness-focus) are associated with attitudes towards effective altruism, rather than just that the measures (“expansive altruism” and “effectiveness-focus”) are associated with effective altruism, because the items are selected to predict those measures.

So, in this case, it’s hard to tell whether the correlation between ‘expansive altruism’ and ‘effectiveness focus’ is inflated (e.g. because both measures share a correlation with effective altruism or some other construct) or attenuated (e.g. because the measures less reliably measure the constructs of interest).

Interestingly, Lucius’ measure of ‘impartial beneficence’ from the OUS (which seems conceptually very similar to ‘expansive altruism’) is even more strongly correlated with ‘effectiveness-focus’ (at 0.39 [0.244-0.537], in a CFA model in which the two OUS factors, expansive altruism, and effectiveness-focus are allowed to correlate at the latent level). This is compatible with there being a stronger association between the relevant kind of expansive/impartial altruism and effectiveness (although the same limitations described above apply to the ‘effectiveness-focus’ measure).

"More generally, I think what underlies these ideas of using lower salaries as a costly signal of value-alignment is the tacit assumption that value-alignment is a relatively cohesive, unidimensional trait. But I think that assumption isn't quite right - as stated, our factor analyses rather suggested there are two core psychological traits defining positive inclinations to effective altruism (expansive altruism and effectiveness-focus), which aren't that strongly related. (And I wouldn't be surprised if we found further sub-facets if we did more extensive research on this.)"

I agree with the last sentence of this: there are probably at least as many sub-facets as there are distinct tenets of effective altruism, and only when most or all of them come together in the same person is that person aligned. Two facets is too few, and, echoing David, I do not think that the effectiveness-focus and expansive altruism measures are valid measures of actual psychological constructs (though these constructs may nevertheless exist). My view is that these measures should only be used for prediction, or reconstructed from scratch.

I am less sure about the final part of the following:

"I think it's better for EA recruiters to try to gauge, e.g. inclinations towards cause-neutrality, willingness to overcome motivated reasoning, and other important effective altruist traits, directly, rather than to try to infer them via their willingness to accept a lower salary - since those inferences will typically not have a high degree of accuracy."

This depends, I think, on how difficult it is to ape effective altruism. As effective altruism becomes more popular and more materials are available to figure out the sorts of things walking-talking EAs say and think, I would speculate that aping effective altruism becomes easier. In this case, if you care about selecting for alignment, a willingness to take on a lower salary could be an important independent source of complementary evidence.

Hey Stefan, thanks again for this response; I'll respond with the attention it deserves!

I definitely agree, and I talk about this in my piece as well e.g. in the introduction I say "There are clear benefits e.g. attracting high-calibre individuals that would otherwise be pursuing less altruistic jobs, which is obviously great." So I don't think we're in disagreement about this, but rather I'm questioning where the line should be drawn, as there must be some considerations to stop us raising salaries indefinitely. Furthermore, in my diagrams you can see that there are similarly altruistic people that would only be willing to work at higher salaries (the shaded area below).

This is an interesting point and one I didn't consider. I find it slightly hard to believe, as I imagine EA as being quite esoteric (e.g. full of weird moral views), so I struggle to imagine many people clamouring to work for an organisation focused on wild animal welfare or AI safety when they could work on an issue they cared about more (e.g. climate change) for a similar salary.

Again, I would agree that it's not the most effective way of ensuring value alignment within organisations, but I would say it's an important factor.

This was actually really useful for me and I would definitely say I was generally conflating "willingness to work for a lower salary" with "value-alignment". I've probably updated more towards your view in that "effectiveness-focus" is a crucial component of EA that wouldn't be selected for simply by being willing to take a lower salary, which might more accurately map to "expansive altruism".

I agree this is probably the best outcome and certainly what I would like to happen, but I also think it's challenging. Posts such as Vultures Are Circling highlight people trying to "game" the system in order to access EA funding, and I think this problem will only grow. Therefore I think EA recruiters might face difficulty in discerning between 7/10 EA-aligned and 8/10 EA-aligned, which I think could be important on a community level. Maybe I'm overplaying the problem that EA recruiters face and it's actually extremely easy to discern values using various recruitment processes, but I think this is unlikely.

Thanks for your thoughtful response, James - I much appreciate it.

My impression is that there are a fair number of people who apply to EA jobs who, while of course being positive to EA, have a fairly shallow understanding of it - and who would be sceptical of aspects of EA they find "weird". I also think a decent share of them aren't put off by a salary that isn't very high (especially since their alternative employment may be in the non-EA non-profit sphere).

I am not that well-informed, but fwiw - like I wrote in the thread - I think that people engaging in motivated reasoning, fooling themselves that their projects are actually effective, is a bigger problem. And as discussed I think tendency to do that isn't much correlated with willingness to accept a lower salary.

Sorry, no I didn't want to suggest that. I think it's in fact quite hard. I was just talking about which strategies are relatively more and less promising, not about how hard it is to determine value-alignment in general.

Thanks for the thoughtful engagement Stefan and kind words! I'm going to respond to the rest of your points in full later but just one quick clarification I wanted to make which might mean we're not so dissimilar on our viewpoints.

Just want to be very clear that low salaries are not what I think EA orgs should pay! I deliberately used the term 'moderate' rather than 'low' because I don't think paying low salaries is good (for reasons you and I both mentioned). I could have been more explicit, but I'm concerned about more orgs paying $150,000+ salaries (or 120%+ of market rate, as a semi-random number) on a regular basis, not about paying people $80,000 or so. Obviously exceptions apply, as I mentioned to Khorton below, but salaries should at least reach the point where everyone's material needs (and those of their families/dependents) can be met.

Do you have any thoughts on this? Surely at some point salaries become excessive, have bad optics or have counterfactually poor marginal returns, but the challenge is identifying where this point is.

(I'll update the main body to be clearer as well.)

How common do you think it is for an EA organisation to pay above market rate? I think of market rate as what someone might make doing a similar role in the private sector. I can think of one or two EA organisations that might pay above that, but not very many.

Yeah, I think this is a good question. I can think of several of the main EA orgs that do this, in particular for operations and research roles (which aren't generally paid that well in the private sector, unless you're at a FAANG company etc.). In addition, EA community building pays much more than other non-profit community building (and there is little private-sector community building to compare against).

Some of these comparisons also feel hard because people often do roles at EA orgs that they weren't doing in the private sector, e.g. going from consulting or software development to EA research, where you would probably earn less than your previous market rate but not less than the market rate for your new job.

There's one example comparison here, and to clarify, I think this is most true for meta/longtermist organisations, as salaries within animal welfare (for example) are still quite low IMO. I can think of 3-4 different roles from the past 2 months that pay above market rate (in my opinion), some of which I'll list below:

(not implying these are bad calls, but that I think they're above market rate)

When you have a job X, then looking at other jobs with the title X is a heuristic for knowing the value of the skills involved. But this heuristic breaks down in the cases you mention. The skillset required for community-building in EA is very different from regular non-profit community-building. In operations roles, EA orgs prefer to hire people who are unusually engaged on various intellectual questions in a way that is rare in operations staff at large, so that they fit with the culture, can get promoted, and so on.

There are better ways to analyse the question:

All these analyses point in the same direction. Although it's a popular idea that EA orgs are paying unusually much for staff's skillsets, it's simply false.

Yeah, this is a useful way of thinking about the issue of market rate, so thanks for this! I think it's true that some people, potentially most people, could earn more at non-EA orgs than in EA roles, but I also think it's context-dependent.

For example, I've spoken with a reasonable number of early-career EAs (in the UK) for whom working at EA orgs is probably the highest-paying option available to them (or very close to it), relative to what they could reasonably get hired for. So whilst I think it's true for some EAs that EA jobs offer less* pay relative to their other options, I don't think it's universal. I imagine you might agree, so the question might be: how much of the community does this represent, and is it uniform? To clarify, I think that EA orgs are paying more than I would expect for certain skillsets, e.g. junior-ish ops people, rather than across the board.

*edited due to comment below

I think the reasoning is sound. One caveat on the specific numbers/phrasing:

To be clear, many of us originally took >>70% pay cuts to do impactful work, including at EA orgs. EA jobs pay more now, but I imagine being paid <50% of what you'd otherwise earn elsewhere is still pretty normal for a fair number of people in meta and longtermist roles.

Thanks for the correction; I'll edit the comment above, as I agree my phrasing was too weak. Apologies, I didn't mean to underplay the significance of the pay cut and financial sacrifice that you and others took; I think it's substantial (and inspiring).

I don't know how much credit/inspiration this should really give people. As you note, the other conditions for EA org work are often better than for external jobs (though this is far from universal). And as you allude to in your post, there are large quality of life improvements from working on something that genuinely aligns with my values. At least naively, for many people (myself included) it is selfishly worth quite a large salary cut to do this. Many people both in and outside of EA also take large salary cuts to work in government and academia, sometimes with less direct alignment with their values, and often with worse direct working conditions.

I agree it's reasonable to ask where (if anywhere) EA is paying too much, and that UK EA has been offering high salaries to junior ops talent. But even then, there are some good reasons for it, so it's not obvious to me that this is excessive.

One hypothesis would be that some EA orgs are in-general overpaying junior staff, relative to executive staff, due to being "nice". But that, really, is pure speculation.

I agree with the rest of your comparisons but I think this one is suspect:

"Pure" ETG positions are optimized for earning potential, so we should expect them to be systematically more highly paid than other options.

Speaking for Rethink Priorities, I'd just like to add that benchmarking to market rates is just one part of how we set compensation, and benchmarking to academia is just one part of how we might benchmark to market rates.

In general, academic salaries are notoriously low and I think this is harmful for building long-term relationships with talent that let them afford a life that we want them to be able to live. Also we want to be able to attract the top-tier of research assistant and a higher salary helps with that.

I totally agree - like I said above, I don't think paying above market rate is necessarily erroneous, but I was just responding to Khorton's question of how many EA orgs actually paid above market rate. And as you point out, attracting top talent to tackle important research questions is very important, and I definitely agree that this is the main perk of paying higher salaries.

In the case of research, I also agree! Academic salaries are far too low, and academia isn't necessarily the best reference class for benchmarking anyway (as one could potentially do research in the private sector and get paid much more).

Please note that Rethink Priorities, where I work, has the same salary band across cause areas.

Ah yes, that's definitely fair, sorry if I was misrepresenting RP! I wasn't referring to pay within an organisation when I made that comment; I was thinking more of comparisons across organisations, like The Humane League / ACE vs 80K/CEA.

Thanks, I strongly upvoted this comment because of the list of detailed examples.

Thanks, James. Sorry, by using the term "low" I didn't mean to attribute to you the view that EA salaries should be very low in absolute terms. To be honest I didn't put much thought into the usage of this word at all. I guess I simply used it to express the negation of the "high" salaries that you mentioned in your title. This seems like a minor semantic issue.

I disagree with this post for the reasons Stefan outlined. To give a specific example, I know people in EA who provide financially for their children; for their spouse (in one case, the spouse has a disability; in another, the spouse can't work for visa reasons); and/or for their ageing parents. Regardless of how mission-aligned they are, people with financial responsibilities can't always afford to take a pay cut.

Thanks for raising this and I totally agree with your point. I think I could have been clearer in two aspects of this:

FYI: this is generally not legal. Organizations (at least in the US, and I'm pretty sure in Europe) are not supposed to consider these aspects of employees' personal lives when deciding how much to pay.

I dunno, man. I just want to be able to afford a house and a family while working, like, every waking hour on EA stuff. Sure, I’d work for less money, but I would be significantly less happy and healthy as a result; I know this from having recently worked for significantly less money. There’s some term for this: “cheerful price”? We want people to feel cared for and satisfied, not to test their purity by seeing how much salary punishment they will take against the backdrop of “EA has no funding constraints.” I apologize for the spicy tone, but I think this attitude, common in EA in my experience, indicates a bias against people over 25, and it largely accounts for why there are so few skilled, experienced operators and entrepreneurs in EA.

Related:

"Losing Prosociality in the Quest for Talent? Sorting, Selection, and Productivity in the Delivery of Public Services"

By Nava Ashraf, Oriana Bandiera, Edward Davenport, and Scott S. Lee

Abstract:

https://ashrafnava.files.wordpress.com/2021/11/aer.20180326.pdf

As you note, the key is being able to precisely select applicants based on altruism:

So perhaps EA orgs can raise salaries and attract more-talented-yet-equally-committed workers. (Though this effect would depend on the level of the salary.)

As someone who spends a fair amount of time on job boards out of curiosity, I can't speak to technical CS roles, but this is definitely true for a lot of entry-level research and operations roles. While I agree with your analysis of the implications, I think this may actually be a good thing. Besides paying workers more being good on principle, there are two main reasons why I think so:

I realize that the goal of eventually promoting from within into leadership roles is an important caveat to the second point. I think that can be solved fairly easily by either a) not doing that if necessary, which is not great but not terrible, or b) transparently emphasizing during hiring and promotion that cultural fit with the principles guiding the org's mission is important in these decisions, and allowing employees to engage with and immerse themselves in these principles as part of their work.

It might help to put some rough numbers on this. Most of the non-technical EA org job postings I have seen recently have been in the $60-120k/year range or so. I don't think those are too high, even at the upper end of that range. But value alignment concerns (and maybe PR and other reasons) seem like a good reason not to offer, say, $300k or more for non-executive, non-technical roles at EA orgs.

More views from two days ago: https://forum.effectivealtruism.org/posts/9rvpLqt6MyKCffdNE/jobs-at-ea-organizations-are-overpaid-here-is-why

I especially recommend this comment: https://forum.effectivealtruism.org/posts/9rvpLqt6MyKCffdNE/jobs-at-ea-organizations-are-overpaid-here-is-why?commentId=zbPE2ZiLGMgC7hkMf

What would be truly useful is annual (anonymous) salary statistics among EA organisations, to be able to actually observe the numbers and reason about them.

If the concern is less committed EAs working in EA organizations, could EA orgs shift compensation to a structure like:

65% compensation through income; 35% "compensation" through donating to the employee's organization of choice?

The percentages are of course arbitrary for the purposes of this post. This has tax benefits as well.

How would this have tax benefits? Also sounds like it creates additional admin work for orgs.

In the US, the main tax benefits of employer donation matching over giving employees more money that they can donate are:

- If you want to deduct donations, you can't take the standard deduction ($13k in 2022 for an individual). If you don't have any other deductions that you would be itemizing, this means the first $13k you donate is effectively taxed as if you had kept it.

- At the federal level, only income tax allows deducting donations. Payroll taxes are pretty much just proportional to pay (~15%). Half of payroll taxes are paid by the employer, which makes this effect twice what it appears when looking at your paystub.

- At the state level, most states don't allow deducting donations, and those that do typically have very low caps.
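To make the first point concrete, here is a minimal sketch of how a donation interacts with the standard deduction. It assumes a flat $13k standard deduction (the rounded 2022 single-filer figure quoted above) and ignores AGI-based caps on charitable deductions, state taxes, and payroll taxes; the function name is hypothetical.

```python
# Hypothetical sketch: how much a donation actually reduces federal taxable income.
# Assumes a flat $13k standard deduction and ignores AGI-based limits on
# charitable deductions; it only models "standard vs. itemized".

STANDARD_DEDUCTION = 13_000


def extra_deduction_from_donations(donations: float, other_itemized: float = 0.0,
                                   standard: float = STANDARD_DEDUCTION) -> float:
    """Return the reduction in taxable income caused by the donations.

    A filer takes whichever is larger: the standard deduction or their
    itemized deductions (other itemized items plus donations).
    """
    deduction_without = max(standard, other_itemized)
    deduction_with = max(standard, other_itemized + donations)
    return deduction_with - deduction_without


if __name__ == "__main__":
    for donated in (5_000, 13_000, 20_000):
        print(donated, extra_deduction_from_donations(donated))
```

With no other itemized deductions, donations up to the standard deduction produce no extra deduction at all, and only the excess above $13k reduces taxable income. An employer-directed donation never passes through the employee's return, so this gap (and the payroll tax on that amount) does not arise.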

For example, last year my family paid $89k in federal income tax, $39k in state income tax (MA), and $35k in payroll taxes (the employers paid another $35k), for a total of $197k in taxes on $780k of income ($815k cost to employer counting payroll taxes) and $400k of donations. If our employers had instead let us direct that $400k and paid us $380k only, we would have paid approximately $75k in federal income tax, $20k in state income tax, and $17k in payroll taxes (with the employers paying another $17k). This saves $65k (8% of total pay, 17% of post-donation pay, 35% of post-donation post-tax pay) which could be donated.
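The percentages in the example above can be checked with a few lines of arithmetic. This sketch takes the comment's rounded figures (in $k) as given, including the $65k savings, and does not recompute that savings from the approximate "after" tax amounts. It assumes "total pay" means the $815k cost to employer, and that post-tax pay subtracts the full quoted $197k tax bill from post-donation pay, which is the reading that reproduces the quoted 35%.

```python
# Sketch reproducing the ratios in the example, using the comment's own figures.
# Assumptions: "total pay" = cost to employer (pay + employer payroll tax), and
# post-tax pay = post-donation pay minus the full quoted tax bill.


def savings_ratios(income: float, employer_payroll: float, donations: float,
                   total_taxes: float, savings: float) -> dict[str, float]:
    """Express a tax saving as a fraction of three different bases."""
    employer_cost = income + employer_payroll
    post_donation_pay = income - donations
    post_donation_post_tax = post_donation_pay - total_taxes
    return {
        "total pay (employer cost)": savings / employer_cost,
        "post-donation pay": savings / post_donation_pay,
        "post-donation post-tax pay": savings / post_donation_post_tax,
    }


if __name__ == "__main__":
    # Figures in $k, as quoted in the comment.
    ratios = savings_ratios(income=780, employer_payroll=35, donations=400,
                            total_taxes=197, savings=65)
    for base, ratio in ratios.items():
        print(f"{ratio:.1%} of {base}")
```

Under these assumptions the ratios come out at roughly 8%, 17%, and 35%, matching the figures in the comment.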

Why does your graph have financial motivation as the y-axis? Isn't financial motivation negatively correlated with altruism, by definition? In other words, financial motivation and altruism are opposite ends of a one-dimensional spectrum.

I would've put talent on the y-axis, to illustrate the tradeoff between talent and altruism.