Note: I'm crossposting this from the United States of Exception Substack with the author's permission. The author may not see or respond to comments on this post. I'm posting this because I thought it was interesting and relevant, and don't necessarily agree with any specific points made.

I am a climate change catastrophist, but I’m not like all the others. I don’t think climate change is going to wipe out all life on Earth (as 35% of Americans say they believe) or end the human race (as 31% believe). Nor do I think it’s going to end human life on Earth but that human beings will continue to exist somewhere else in the universe (which at least 4% of Americans would logically have to believe). Nevertheless, I think global warming is among the worst things in the world — if not #1 — and addressing it should be among our top priorities.

Friend of the blog Bentham's Bulldog argues that this is silly, because even though climate change is very bad, it’s not the worst thing ever. The worst thing ever is factory farming, and climate change “rounds down to zero” when compared to factory farming.

I disagree. I think there is a plausible case that climate change is orders of magnitude worse than factory farming. In fact, I think I can convince Bentham of this (that it’s plausible, not that it’s definitely true) by the end of the following sentence: Climate change creates conditions that favor r-selected over K-selected traits and species in most environments, and these effects can be expected to last for several million years.

I don’t know if I’ve already convinced him. For most people, that sentence is probably nonsense. But if you’re familiar at all with the concept of wild animal suffering, it should start to raise some alarm bells.

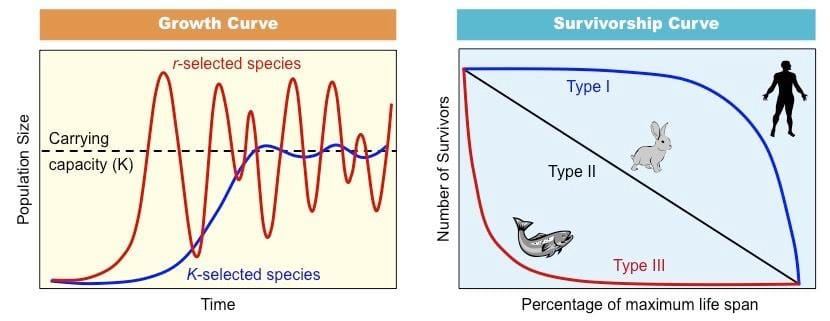

Biologists describe species’ reproductive strategies along a continuum of r-selection to K-selection, based on how a species trades off between quantity and quality of offspring. r-strategists reproduce in great numbers and invest little in each individual, hence each offspring has a very low chance of surviving to sexual maturity. K-strategists produce fewer offspring and invest a great deal of resources into raising each of them. Think “r” for rabbit and “K” for kangaroo. (Rabbits fuck like rabbits, and a mother kangaroo carries a joey in her pouch for months.)

r-strategists and more r-selected traits are usually advantaged when the environment is more precarious. Producing a large number of offspring improves the chance that at least some of them will survive to sexual maturity and pass their genes on to the next generation. More r-selected species also typically have shorter lifespans and reach reproductive age sooner, so their generations turn over faster and they can adapt more easily to new conditions. K-strategists, on the other hand, are better suited for more stable environments where their offspring are more likely to survive and they don’t need to adapt quickly to changing conditions.

If you have values like Bentham’s and mine, and you care about the hedonic well-being of individuals, r-selection is bad because the average life of an r-strategist is extremely bad, and significantly worse than the average life of a K-strategist. For a species’ population to be stable in the long term, an average of only one offspring per parent needs to survive to adulthood and go on to reproduce. Hence, while species that produce few offspring should tend to have low premature mortality rates, most members of species that produce many offspring at a time die extremely painful deaths shortly after they’re brought into existence. Oscar Horta estimates that among a single population of one million Atlantic cod in the Gulf of Maine (who each spawn about 2 million eggs per reproductive cycle), the expected number of seconds of suffering among codlings who fail to survive is about 200 billion per year, or 6,338 suffering-years per year. Invertebrate populations are typically even worse off, since they also produce thousands or millions of offspring per cycle but their reproductive cycles are much shorter.
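
A rough sketch of the two pieces of arithmetic in this paragraph, for anyone who wants to check them. The function name `premature_mortality` and the 365.25-day year are assumptions made for illustration, not something taken from Horta's paper; the mortality formula is only the simplified "one survivor per parent" rule stated above.

```python
# Assumption: a year of 365.25 days, which reproduces the 6,338 figure.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def premature_mortality(offspring_per_parent: float) -> float:
    """Share of offspring that must die before reproducing in a stable
    population, if on average one offspring per parent survives.
    (Hypothetical helper; a simplification of the rule in the text.)"""
    return 1 - 1 / offspring_per_parent


# Figure quoted in the text: ~200 billion suffering-seconds per year.
suffering_seconds_per_year = 200e9
suffering_years_per_year = suffering_seconds_per_year / SECONDS_PER_YEAR

print(round(suffering_years_per_year))   # 6338
print(premature_mortality(2))            # 0.5 for a two-offspring K-strategist
print(premature_mortality(2_000_000))    # very close to 1 for a cod-like spawner
```

Under this simplification, a parent with two offspring loses half of them before adulthood, while a parent with two million loses essentially all of them.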

What makes climate change so bad is not just that it might wipe out a lot of wild animals (in itself, that would very plausibly, but not definitely, be a good thing), but that by making the natural environment more precarious, it would both wipe out a lot of wild animals and advantage the remaining r-strategists and r-selected traits. In fact, this is what the Intergovernmental Panel on Climate Change says climate change is already doing. Namely:

> Biodiversity loss and degradation, damages to and transformation of ecosystems are already key risks for every region due to past global warming and will continue to escalate with every increment of global warming (very high confidence).

And specifically, among land animals:

> 3 to 14% of species assessed will likely face very high risk of extinction at global warming levels of 1.5°C, increasing up to 3 to 18% at 2°C, 3 to 29% at 3°C, 3 to 39% at 4°C, and 3 to 48% at 5°C. [The United Nations estimates that without further action, global temperatures will rise by as much as 3.1°C by 2100.]

Just the ~1.2°C of global warming we’ve observed so far has already contributed to a 73% decline in the population of wild vertebrates since 1970 and the extinction of up to 2.5% of vertebrate species. The invertebrate population has also likely declined, but the magnitude of the decline is unclear. In the near-to-medium term, it’s likely that both vertebrate and invertebrate populations will continue to fall as the environment becomes even more inhospitable. Throughout this period, more K-selected species will probably be likelier to go extinct than more r-selected species, as r-strategists can adapt better to a more variable environment. We should therefore expect both r- and K-strategists to become more r-selected, and r-strategists to make up an increasing share of the population of wild animals.

If global temperatures stabilize again in a few centuries, and the total biomass of wild animals returns to normal, nature will likely be populated disproportionately with r-strategists compared to what it would be if anthropogenic climate change had not happened. This will also likely persist for a very long time, as it has historically taken millions of years after a mass extinction for full species diversity to return.

There are an estimated 10 trillion (10¹³) vertebrate individuals on Earth, as well as 10 sextillion (10²²) invertebrates. If we assume conservatively (and just for illustrative purposes) that the biomass of 10% of the vertebrate population is converted to smaller-bodied animals (say, half as large, so that 1 trillion vertebrates become 2 trillion), each of whom produces an extra 10 offspring per year who experience one day of suffering and then die, the number of extra suffering-years caused per year would be about 55 billion (20 trillion suffering-days divided by 365), or more than the total number of suffering-years caused by all land-based factory farms per year. If you accounted at all for how the reproductive strategies of invertebrates might change, the total would be mind-bogglingly bigger. But even if you just stick with vertebrates and assume the effect lasts one million years, the effect of climate change on wild animal suffering would be at least 55 quadrillion suffering-years, which is orders of magnitude greater than the amount of suffering that factory farming ever has produced and likely ever will produce.
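
The back-of-the-envelope estimate above can be reproduced in a few lines. This is only a sketch of the illustrative scenario in the text: the variable names are made up for this example, and it assumes a 365-day year and that halving body size at constant biomass doubles the number of animals.

```python
DAYS_PER_YEAR = 365  # assumption: ordinary calendar year

vertebrates = 10e12             # ~10 trillion vertebrates (from the text)
share_converted = 0.10          # 10% of the population's biomass is converted
size_ratio = 0.5                # replacement animals are half as large
extra_offspring_per_year = 10   # extra offspring per smaller animal per year
suffering_days_per_offspring = 1
duration_years = 1_000_000      # how long the effect is assumed to last

# Assumption: constant biomass, so half-sized animals are twice as numerous.
smaller_animals = vertebrates * share_converted / size_ratio

extra_suffering_days = (
    smaller_animals * extra_offspring_per_year * suffering_days_per_offspring
)
extra_suffering_years_per_year = extra_suffering_days / DAYS_PER_YEAR
total_suffering_years = extra_suffering_years_per_year * duration_years

print(f"{extra_suffering_years_per_year / 1e9:.1f} billion per year")  # 54.8
print(f"{total_suffering_years / 1e15:.1f} quadrillion in total")      # 54.8
```

Both results round to the 55 billion and 55 quadrillion figures quoted above. Changing any single input (the share converted, the number of extra offspring, or the duration) scales the result proportionally, so the conclusion is only as strong as those illustrative inputs.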

This is simply an unfathomable amount of suffering; there’s basically nothing that comes close. Even if you think it’s a good thing that climate change is reducing wild animal populations in the near-to-medium term because wild animals live net-negative lives, the effect of reduced population is only likely to last a few hundred years until temperatures again stabilize. However, the likely mass extinction of K-strategists and the concomitant increase in r-selection might last for millions of years.

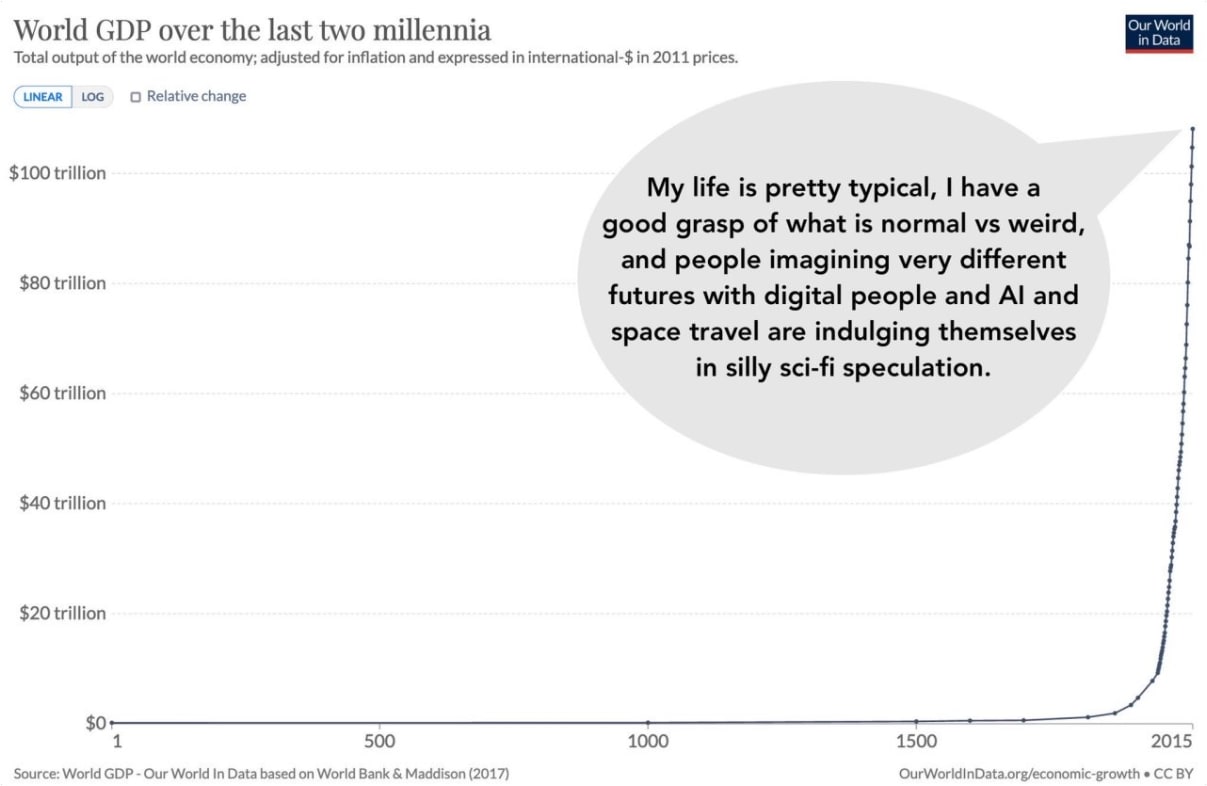

Now, you should be skeptical of everything I say here, since I’m not a biologist. Climate change might permanently reduce the biomass of wild animals, which would likely reduce total suffering even if it increases the share of r-strategists. AI might become godlike and help us re-engineer the biosphere to maximize utils. There might be some environments where a warmer climate actually favors K-selected traits. But I think the case I lay out above is at least plausible, and if you give it any non-negligible credence, you should probably agree that climate change deserves to be treated as a top social priority, either alongside or ahead of factory farming.

I crossposted this because it was an interesting read, and it makes an argument that I've never heard before. I'd be curious if anyone with more expertise has takes on this! :)

Thanks! I basically landed on using my personal account since most people seem to prefer that. I suppose I'll accept the karma if that's what everyone else wants! :P

Honestly I think it's somewhat misleading for me to post with my account because I am posting this in my capacity as part of the Forum Team, even though I'm still an individual making a judgement. It's like when I get a marketing email signed by "Liz": probably this is a real person writing the email, but it's still more the voice of the company than of an individual, so it feels a bit mislead...