TL;DR: Help the AGI Safety Fundamentals, Alternative Protein Fundamentals, and other programmes by automating our manual work, so we can support larger cohorts of participants and run courses more frequently.

Register interest here [5 mins, CV not required if you don’t have one].

Job description

We are looking for a low-code contractor to help us scale and run our seminar programmes. An engineer has built our systems so far, but they are moving on.

Here are the tools and apps that currently run our programmes:

- The backbone of the programme is run with no-code tools (Airtable database, Make automations to interface with Slack and Google Calendar).

- One Python script that clusters participants with similar backgrounds based on some numerical metrics (not currently integrated with Airtable, but we’d like it to be).

- One Vercel web app that collects participants’ and facilitators’ time availability.

- One JavaScript algorithm that groups ~50 previously clustered participants and facilitators into cohorts of ~6 participants + 1 facilitator.

- In the future, we would like you to build more extensions using similar tools.
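For candidates who want a feel for the clustering and grouping steps, here is a minimal Python sketch of one way they could work. It is not our actual script or algorithm. The `Person` fields, the slot labels such as "Mon-18", the sort-and-split bucketing (a crude stand-in for real clustering), and the greedy slot matching are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    """Hypothetical record for a participant or facilitator."""
    name: str
    score: float            # hypothetical numerical background metric
    slots: frozenset[str]   # hypothetical weekly availability, e.g. "Mon-18"


def bucket_by_score(people: list[Person], n_buckets: int) -> list[list[Person]]:
    """Sort by score and cut into contiguous buckets (a crude stand-in for clustering)."""
    if not people or n_buckets < 1:
        return []
    ranked = sorted(people, key=lambda p: p.score)
    size = -(-len(ranked) // n_buckets)  # ceiling division
    return [ranked[i:i + size] for i in range(0, len(ranked), size)]


def form_cohorts(
    participants: list[Person],
    facilitators: list[Person],
    cohort_size: int = 6,
) -> tuple[list[tuple[str, str, list[str]]], list[str]]:
    """Greedily give each facilitator the slot that seats the most unassigned participants."""
    cohorts = []
    unassigned = list(participants)
    for fac in facilitators:
        best_slot, best_members = None, []
        for slot in sorted(fac.slots):  # sorted for deterministic tie-breaking
            members = [p for p in unassigned if slot in p.slots][:cohort_size]
            if len(members) > len(best_members):
                best_slot, best_members = slot, members
        if best_members:
            cohorts.append((fac.name, best_slot, [p.name for p in best_members]))
            taken = {p.name for p in best_members}
            unassigned = [p for p in unassigned if p.name not in taken]
    # Anyone left over would need manual placement or another facilitator.
    return cohorts, [p.name for p in unassigned]
```

A greedy pass like this is easy to reason about, but it processes facilitators in order and does not balance cohort sizes. A production version would likely need to handle both.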

We’re offering part-time contract work

For now, we’re looking for part-time contract support. We would offer a retainer[1] and pay an hourly rate for any additional work.

Further logistical details:

- We need you to be more responsive when kicking off programmes, in case there are bugs (about 4 weeks, ~3 times a year).

- There will be development work to do in between programmes.

- We are open to (and slightly prefer) full-time contract work for the first couple of months to get us up and running.

- You can let us know what would work for you in the form.

Salary

We are offering $50/hour. If this rate would prevent you from taking the role, let us know in the form.

Were you hoping for a full-time opportunity?

We're also likely to build an entirely new software platform for these programmes over the next 6 months. We'll be looking for excellent software engineers to help us build a team to do this.

We currently use Slack, Zoom, Vercel, Airtable forms, etc., and tie these together using low-code tools like Airtable and Zapier. We want to bring all of this under one platform to improve user experience and retention. We also expect to have other software needs downstream of our programmes, such as managing our community and running websites that host opportunities.

You can register interest in the full-time Head of Software role here. Please note that this role is not yet as fleshed out as the part-time role described in this post.

Why should you work on this project?

Scale

These were the first scalable, EA-originating programmes for onboarding people into the fields of AI safety and alternative proteins. Both have grown excitingly quickly.

AGI Safety Fundamentals grew from 15 to 230 to 520 participants. We already have 650 registrations of interest for the next round, before promoting it through our usual channels. That is as many people as ultimately applied to round 2.

We also run Alternative Protein Fundamentals, which had 400 participants in its 2nd round. We plan to build many more programmes on the same infrastructure in the future.

Altogether, we expect the infrastructure you build in this role to support 5,000+ people over the next 5 years. That figure comes from a conservative estimate: 2 programmes, each run 2.5 times/year with 200 participants per round. On top of that, we expect to add ~10 more programmes in the coming years.
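The conservative estimate works out as follows, before counting any of the ~10 additional programmes:

$$
2\ \text{programmes} \times 2.5\ \tfrac{\text{rounds}}{\text{year}} \times 200\ \tfrac{\text{participants}}{\text{round}} = 1{,}000\ \tfrac{\text{participants}}{\text{year}},
\qquad
1{,}000\ \tfrac{\text{participants}}{\text{year}} \times 5\ \text{years} = 5{,}000\ \text{participants}.
$$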

Good infrastructure will free up our team’s capacity to keep iterating on and improving the programmes.

Impact on participants

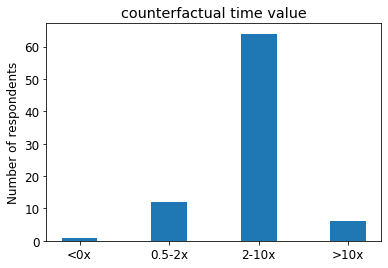

For full details, see our retrospective on the 2nd iteration of the AGI Safety Fundamentals programme. Some highlights:

Alumni

Here are some anecdotal examples of what alumni from our last iteration of the programme have gone on to do:

- At least 1 Machine Learning Engineer at each of 3 top software/ML companies

- Member of technical staff at Redwood Research

- Independent researcher

- SERI MATS and MLAB participants, furthering their careers in AI safety research

- Access to our database of participants (those who gave permission to share their information) led to:

  - Research Fellow at Rethink Priorities (1 offer made to a candidate sourced from our database, with a 'major contribution' attributed to AGISF)

  - A role on Open Philanthropy's longtermist movement building team (2 counterfactual offers made to candidates sourced from our database, resulting in 1 hire)

- Probably more that we haven’t tracked

Evidence of future impact

- Interest keeps growing. So far the growth has been exponential, though we expect it to level off at some point, and we won't necessarily scale the programmes exponentially.

- We want to produce more content and more programmes, including:

  - More in-depth programmes on AI safety

  - Programmes in different fields

- We want to package up our infrastructure so that local groups can easily use it to run high-quality local versions. Where possible, we expect local versions to be better for making connections.

What would be your impact?

Our infrastructure has been a major factor preventing us from running the programme more frequently at scale. Parts of it do not scale and use a lot of organiser time.

We want to be able to process 500+ people at a time without much additional organiser overhead. This will require overhauling several processes. Examples include letting people join a different cohort when they can’t make their regular slot (5-10 organiser hours/week for 500 people) and grouping participants into cohorts (80 hours/week for 2-3 weeks at the start of the programme).

We are hiring for this role to professionalise and scale our seminar programme infrastructure. With your help, we’ll be able to:

- Run the programme more frequently (at least 1 more time per year, per programme).

- Offer participants a higher-quality experience. The first thing we’d focus on is running more events for participants. Examples include networking opportunities for PhD participants, and targeted advice and networking for software engineers from alignment research engineers.

Your role and responsibilities

The role would involve improving or rewriting our current systems so that we can keep running the seminar programmes as we have before. See the summary of the current system at the top of the post.

In the future, we’d like you to develop new features. We’d explain our requirements and work with you to discuss potential solutions.

Scroll to the bottom of the post if you have more questions about the nature of the role.

Example tasks you might do

- Add a feature that lets participants choose another cohort to join, from a list tailored to them, for a week when they can’t make their usual time.

- Add a tool that groups participants based on measures of their background knowledge.
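As an illustration of the first task, here is a minimal Python sketch of how a participant’s list of alternative cohorts might be computed. The `Cohort` fields, the rule that alternatives come from the same background cluster, and the size cap of 8 are hypothetical assumptions, not a spec.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cohort:
    """Hypothetical cohort record."""
    cohort_id: str
    slot: str      # hypothetical weekly meeting slot, e.g. "Tue-18"
    size: int      # current number of participants
    cluster: str   # hypothetical: background cluster the cohort was formed from


def alternative_cohorts(
    home: Cohort,
    cohorts: list[Cohort],
    available: set[str],
    max_size: int = 8,  # hypothetical cap; cohorts normally have ~6 participants
) -> list[Cohort]:
    """Cohorts a participant could join for one week instead of their usual one."""
    options = [
        c for c in cohorts
        if c.cohort_id != home.cohort_id
        and c.cluster == home.cluster  # stay with similar-background peers
        and c.slot in available        # only times the participant can make
        and c.size < max_size          # avoid overfilling a cohort
    ]
    # Suggest the emptiest cohorts first.
    return sorted(options, key=lambda c: c.size)
```

Sorting by current size spreads visiting participants across cohorts rather than piling them into one.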

You’ll be the tech lead

We will know what we need from the product perspective, but you’ll have ownership over how to implement it.

We can’t offer technical mentorship

Since you’ll be the tech lead, we won’t be able to provide technical mentorship. Otherwise, we expect to work closely with you, with regular check-ins to help you stay on task.

If you find your own mentorship or training, we are open to providing a budget for it.

What’s the application process?

The fastest way to find out is to open the form!

We want to keep this light-touch at this stage. We’ll ask for a LinkedIn profile, a CV, or a paragraph about yourself. We’ll also ask for a couple of sentences on why you’re interested, and for your availability.

We will follow up with respondents to work out which arrangements suit whom. We will offer a work trial to those we’re mutually excited to proceed with.

AMA

Please ask any questions in the comments or by emailing jamie@thisdomain, or submit an anonymous question or feedback here.

–

Special thanks to Yonatan Cale for his help and advice in creating this post.

[1] What we'd expect to offer for a retainer contract: we agree on a fixed number of hours/month (guessing 20-40 hours/month) for a fixed number of months (about 6). We will pay for those hours regardless of how much you work. If you work more hours, we pay you for them on top of the retainer. We expect there will be plenty of work to do, but we're happy to offer this as security if you join us as a contractor.
