I reference Buck Shlegeris several times in this post because appreciating his dyed hair seemed like a fun example and analogy to start things off with, but any impressive-seeming EA could act as a stand-in.

I obtained Buck’s permission to use his name in this post.

Wanting to dye my hair like Buck

When I think of the word cool, my mind conjures refreshing breezes, stars in the sky, friends I whole-heartedly admire, and Buck Shlegeris’ dyed hair.

Why Buck’s hair, you ask? Well, it is badass, and the reasoning process behind it is probably also great fun, but my guess is that I mostly think of Buck's dyed hair as cool because I strongly associate Buck's hair... with Buck (it is a distinctive feature of his).

People tell me that Buck does a lot of pretty good work as the CTO of Redwood Research, one of the major EA-aligned organizations working on alignment, which may very well be the most important present bottleneck on humanity’s future going well.

So by extension, Buck’s hair is cool.

I don’t think this is logically sound. What I do know is that I generally don’t attach an emotional valence to dyed hair, but when I see Buck at retreats or conferences, I notice his hair and I'm slightly more likely to think, "huh, Buck has cool hair" even though I generally don't care what color your hair is.

It’s a stupid update; my appreciation for Buck's work shouldn't extend to his hair, but I definitely do it a bit anyways. It’s like there’s a node in my mind for Buck that is positively valenced and a node for dyed hair that is neutrally valenced. When I see his dyed hair, the nodes become associated with each other and a previously neutral dyed hair node becomes tinted with positive feelings.

My guess is that I'm more inclined to dye my own hair because Buck dyes his. This is true even though we're optimizing for different aesthetic preferences and I obtain almost no information from Buck's decisions about how I should optimize for mine.

Conclusion: When I see people I admire commit to action X, I want to commit to X myself a little more, regardless of the informational value of their decision to me.

Buck does alignment research. Should I do alignment research?

Now let’s broaden this example. Lots of people I admire believe alignment research is very important. Even discounting the specific reasons they believe alignment research is important, I should probably be more inclined to do alignment research, because these people are super smart and thoughtful and largely value aligned with me in our altruistic efforts.

But how much more?

So I first take into account their epistemic status, adjusting my opinion on the importance of alignment research based on how much I trust their judgment and how confident they are and how altruistically aligned we are.

But remember: I want to dye my hair slightly more because Buck dyes his hair, even though his dyeing his hair doesn't provide (basically) any information to me on how I should optimize for my own aesthetic preferences.

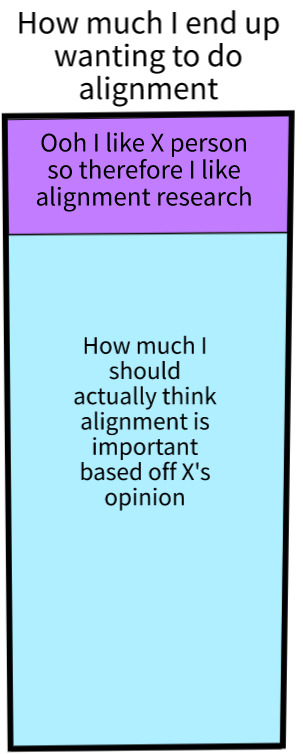

There's a distinct motivation of "wanting to do what this person I like does" that I have to separate out and subtract from how much I want to do alignment research if I want an accurate sense of how important alignment research is.

But this is hard. It's hard to disentangle the informational value of admired people's beliefs that alignment research is important from the positive feelings I now hold towards alignment research simply because it's associated with them.

And I'm probably not doing it very well.

Conclusion: I’m likely updating too much in favor of dyed hair and alignment research based on the beliefs of, and my positive feelings for, EAs I admire. Maybe I really should do the thing where I sit down for a few dozen hours, formulate my empirical and moral uncertainties into precise questions whose answers are cruxy to me working on alignment, and then try to answer those questions.

Optimizing for validation instead of impact

But it gets worse. Dyeing my hair might not better fulfill my aesthetic preferences, but it may very well make Buck like me marginally more. Doing alignment research might not be the right path to impact for me, but it may very well make lots of people I admire like me marginally more. My monke brain is instinctively far more attracted to “optimizing for self-validation within the EA community by doing the things impressive EAs say are good” than to “actually figuring out how to save the world and doing it.” The two look similar enough, and the former is so much easier, that I’m probably falling into this trap.

Conclusion: It is likely that at least some of the time, I’m unintentionally optimizing for validation within the EA community, rather than fulfilling the EA project. Sometimes optimizing for either leads to the same action, but whenever they pull apart and I choose to follow the EA community incentives rather than the EA project ones, I pay in dead children. This makes me somewhat aggrieved. So what do I do?

I don't know. I'm writing this post because I’m not sure; I have some thoughts, but they're very scattered. Do you have suggestions?

Note:

This is my first forum post, so feedback is really appreciated! In particular, I realize that I'm singling out Buck in this post and I'm unsure if this was useful in communicating my point, or if this was just weird.

I think there's definitely some part of me that is trying to feel more legible in the community through this post. To try to mitigate this, I've hidden the karma elements on the EA Forum for myself using my ad blocker and am writing this under a pseudonym.

An early reader noted that “it's badass to dye your hair like [Buck’s], it's contrarian, it's free. and come on, deciding your hair color based on quantum events such that theoretically all bucks throughout the multiverse sport all the colors of the rainbow is just fucking cool”. So those are also reasons to dye your hair like Buck.

Edit: changed the title because jwpieters noted it was misleading. It was originally the first section title "wanting to dye my hair like buck". Thanks jwpieters!

I agree that it's well worth acknowledging that many of us have parts of ourselves that want social validation, and that the action that gets you the most social approval in the EA community is often not the same as the action that is best for the world.

I also think it's very possible to believe that your main motivation for doing something is impact, when your true motivation is actually that people in the community will think more highly of you. [1]

Here are some quick ideas about how we might try to prevent our desire for social validation from reducing our impact:

I haven't read it, but I think the premise of The Elephant in the Brain is that self-deception like this is in our own interests, because we can truthfully claim to have a virtuous motivation even when that's not the case.

The flip side is that maybe EA social incentives, and incentives in general, should be structured to reward impact more than they currently do. I'm not sure how to do this well, but ideas include:

Unfortunately I don't have good ideas for how to implement this in practice.

I guess some of those things you could reward monetarily. Monetary rewards seem easier to steer than more nebulous social rewards ("let's agree to celebrate this"), even though the latter should be used as well. (Also, what's monetarily rewarded tends to rise in social esteem; particularly so if the monetary rewards are explicitly given for impact reasons.)

Yes, I like the idea of monetary rewards.

Some things might need a lot less agreed-upon celebration in EA, like DEI jobs and applicants and DEI-styled community management.

I agree with you that we should reward impact more, and I like your suggestions. I think having better incentives for searching for and praising/rewarding 'doers' is one model to consider. I can imagine a person at CEA being responsible for noticing people whose impact is underreported, offering them conditional grants (e.g., financial support to transition to more study or full-time work), and giving them recognition by posting about and praising their work on the forum.

I would be quite curious to know how this could work!

You could spotlight people who do good EA work but are virtually invisible to other EAs and do nothing of their own volition to change that, i.e., people who are neither birds of paradise nor social butterflies.

I think people are always going to seek status or validation, and people in EA are no different. Status in EA also has massive upsides (you might have a greater chance of getting funding or job offers). It's highly unlikely this will change. What we should be actively monitoring is how closely we're matching status with impact.

Also, I'm not sure if the title is good or bad. I think this post points to some important things about status in EA and I wonder if the misleading title makes people less likely to read it. On the other hand, I thought it looked funny and that made me click on it.

Updated the title, thank you for your thoughts jwpieters!

Consider looking into Girardian mimesis. In particular, Girard hypothesizes about a dynamic which goes: A does X => people surrounding A assume that X is advantageous => people surrounding A copy X => X delivers less of an advantage to A => Conflict => Repeated iterations of this loop => Prohibition about copying other people in order to avoid conflict => etc. If you find yourself mimicking other people, looking into this dynamic might be interesting to you.

You wrote,

"Maybe I really should do the thing where I sit down for a few dozen hours and formulate my empirical and moral uncertainties into precise questions whose answers are cruxy to me working on alignment and then try to answer those questions."

It sounds like you have a plan. If you have the time, you could follow it as an exercise. It's not clear to me that we all have a buffet of areas to contribute to directly, but you might be an exception.

Speaking for myself, I develop small reserves of information that I consider worth sharing, but not much after that.

I look for quick answers to my questions about whether to pursue common goals, identifying whatever prevents me from personally contributing to the solution.

Here are some quick answers to a list of EAish goals:

My only point is that you too could write a list, and look for the first thing that removes a list item from the list. I could painstakingly develop some idealized but context-bound model of why to do or not do something, only to find that I am not in that context, and "poof", all that work is for nothing.

BTW, I like your post, but you might not have reason to value my opinion: I don't get much karma or positive feedback myself, and I'm new on the forum.