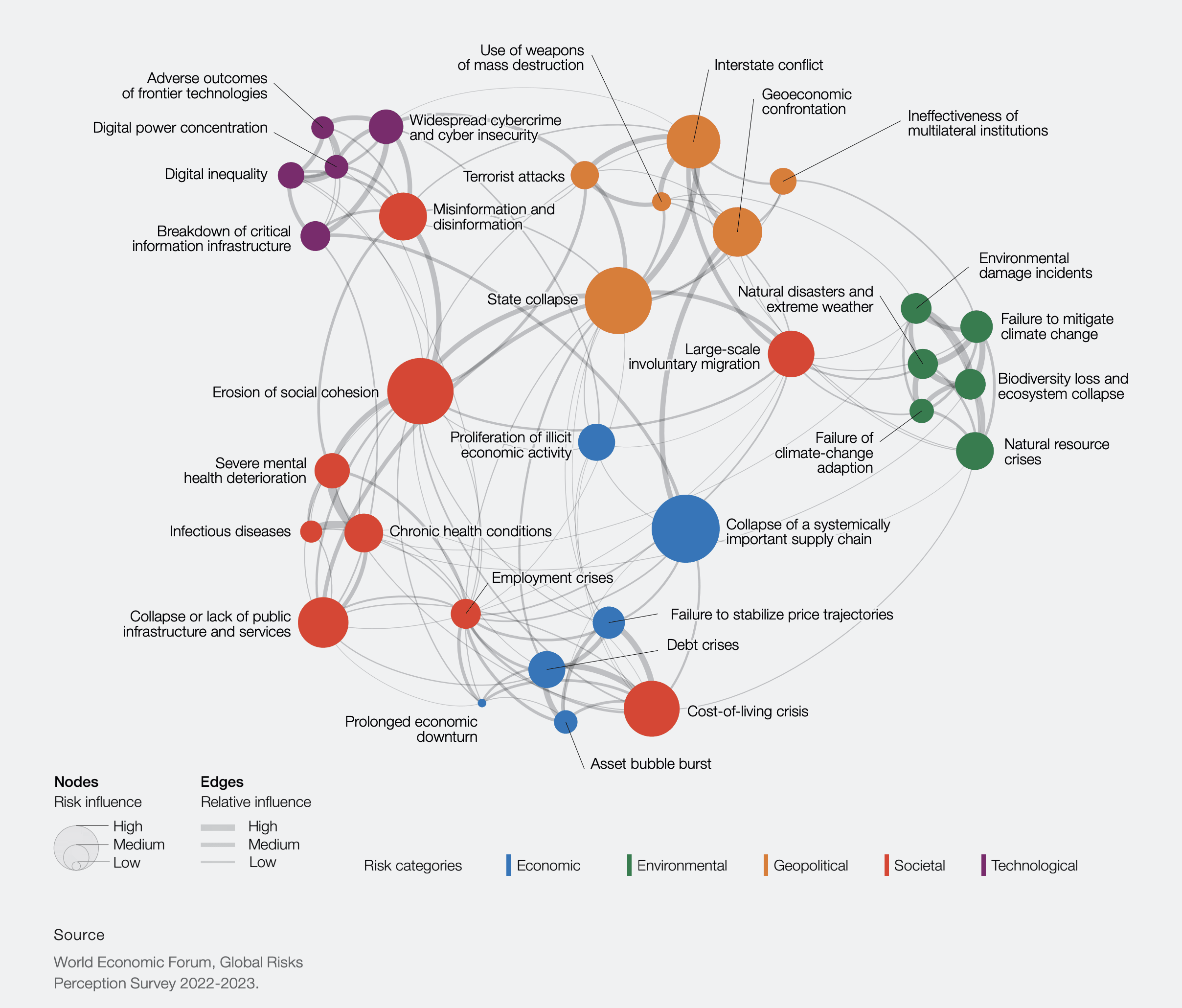

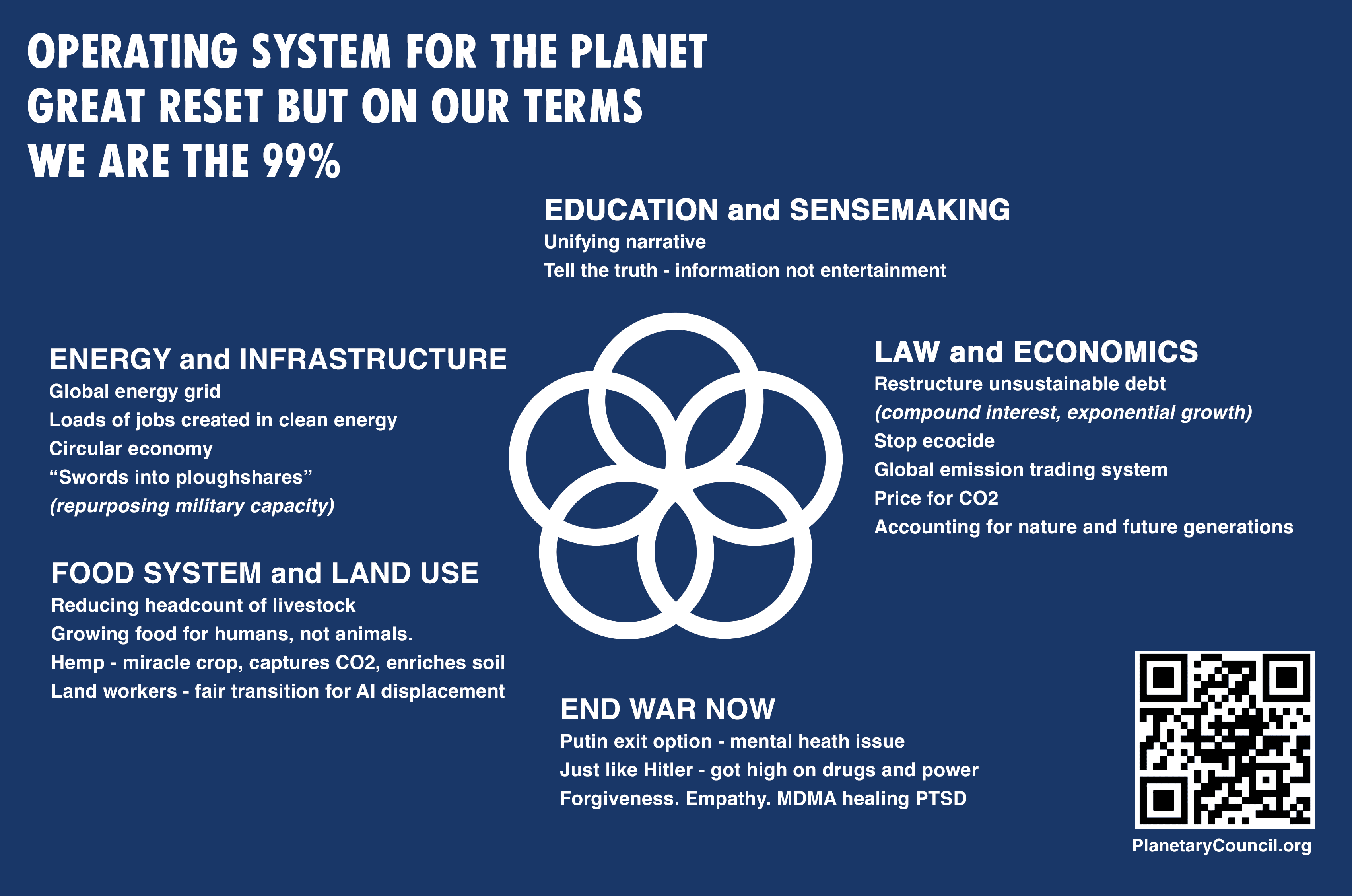

I am currently engaging more with the content produced by Daniel Schmachtenberger and the Consilience Project, and I am wondering why the EA community is not really engaging with this kind of work on the metacrisis, a term that alludes to the overlapping and interconnected nature of the multiple global crises that our nascent planetary culture faces. The core proposition is that we cannot get to a resilient civilization if we do not understand and address the underlying drivers that lead to global crises emerging in the first place. This work is overtly focused on addressing existential risk, and Daniel Schmachtenberger has become quite a popular figure in the YouTube and podcast sphere (e.g., see him speak at Norrsken). Thus, I would expect people here to have come across this work. Still, I find basically no discussion of this work in this forum, or only marginally related discussion (see results of some searches below), which surprises me.

What is your best explanation of why this is the case? Are the arguments so flawed that it is not worth engaging with this content? Do we expect "them" to come to "us" before we engage with the content openly? Does the content not resonate well enough with the "techno-utopian approach" that some say is the EA mainstream way of thinking, so that other perspectives are simply neglected? Or am I simply the first to notice, be confused, and care enough about this to start investigating it?

Bonus Question: Do you think that we should engage more with the ongoing work around the metacrisis?

Related content in the EA forum

- Systemic Cascading Risks: Relevance in Longtermism & Value Lock-In

- Interrelatedness of x-risks and systemic fragilities

- Defining Meta Existential Risk

- An entire category of risks is undervalued by EA

- Corporate Global Catastrophic Risks (C-GCRs)

- Effective Altruism Risks Perpetuating a Harmful Worldview

Just a note on your communication style: at least on the EA Forum, I think it would help if you replaced more of your "deckchairs on the Titanic" and "forest for the trees" metaphors with specific examples, even hypothetical ones.

For example, when you say "I would say all of these areas are either underprioritized or, as in the case of global health, often missing the forest for the trees (literally - saving trees without doing anything about the existential threat to the forest itself)," I actually don't know what you mean. What is the forest and what are the trees in this example? You say "literally saving trees," but unless you for some reason consider forest preservation to fall under the umbrella of global health, it's not literally saving trees.

Anyway, I think I see a little more where you're coming from. Let me know if I'm misunderstanding.

Overall, it seems like you think there are a lot more sources of fragility than EA takes into account, lots of ways civilization could collapse, and EA is only looking at a few.

Is that roughly where you're coming from?