Alt. title: "If EA isn't feminist, let me out of it"

TW / CW: discussion of sexual violence, assault

Written in a state of: Hurry. Anger. A high degree of expertise.

This post does not include a sufficient discussion of the uniqueness of gender identity, and tends to oversimplify what it means to be a woman. I also would like to see an EA community-based discussion about supporting and caring for nonbinary people, as well as one that more carefully centers trans experiences. Even more crucially, it terrifies me to think about the poor quality of discussion that might result from addressing intersectionality.

I am so, so tired. I haven't even been here that long and I am so, so tired. I can't imagine how other people feel.

I would be extremely surprised to meet a woman who does not go through her life fearing violence from men, or fearing that violence will be perpetrated against her because of some aspect of her gender, at least some of the time.

This should not cause controversy. This should not even remotely surprise you. This should not elicit any thought that is in any way related to "but what about-". I'm not saying it does; if it doesn't, that would be great. We (everyone) are allowed to say things that aren't surprising. And I shouldn't have to clarify that.

But apparently, it is surprising to some people. Therein lies the problem.

Usually, I try to make men feel better by saying things like "oh, well I know you're not like that," or "I'm sure that's not what you meant," or "I'm sure he just forgot," or by making jokes. I'm not doing that today. I don't know that you're not like that. I actually do know what you mean, because you said it. Because you forgot that half the world's population, half your family and friends and coworkers and classmates (roughly, potentially), exist in a state of constant fear.

This attitude absolutely disgusts me.

If you're not aware of the backlash against feminism by now, you have been intentionally ignoring it. If you are intentionally ignoring the fight for gender liberation, in the context of my life you are a malicious actor, and I'm tired of pretending you're not.

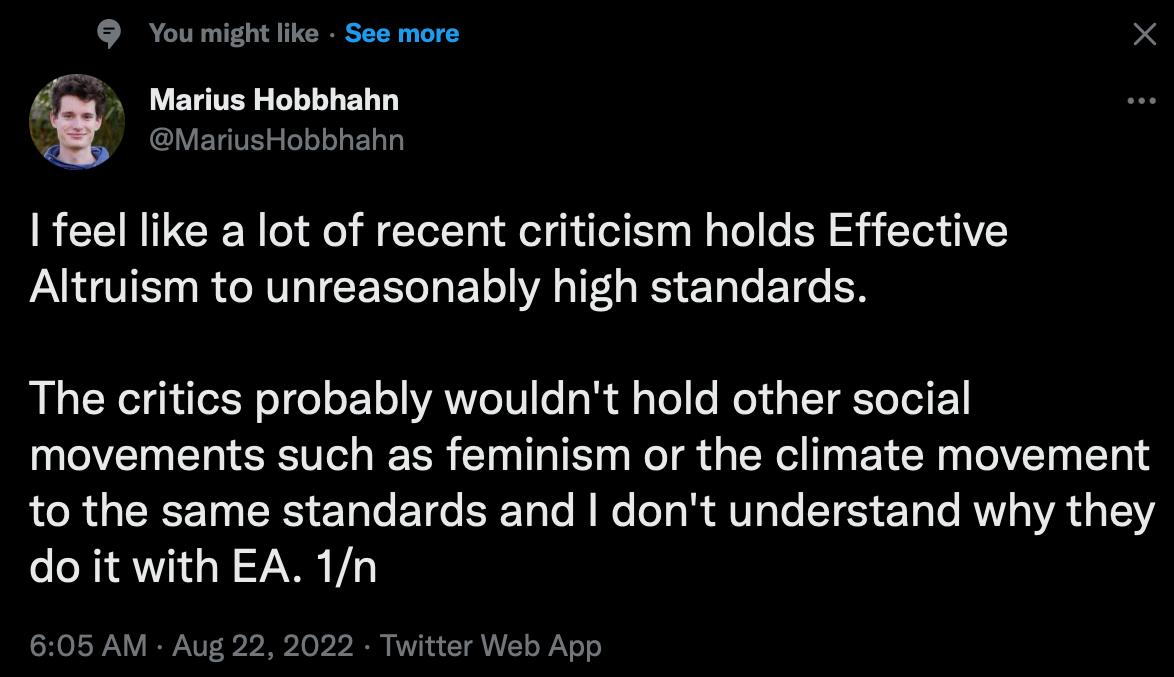

Besides that, it's not about this one tweet. It's about seeing gender liberation as somehow anything other than completely integral. I don't understand it, and I don't want to.

Believe me, I would love to be able to trust men and assume that 99.9% of them/you actually wish me no harm and move through the world relatively unencumbered by the power differential that characterizes society, but I can't. Because I'm confident someone is going to openly relate this problem to the animal rights movement and not see a problem with that.

This is very clearly a high-pitched wail given words. You have to understand. One of you has to understand. One more person has to understand. I want to scream. I've wanted to scream for years, and I haven't, and this is as close as I've ever gotten.

I want to cry. I want to cry every time I see a story about a missing woman, or a missing girl. I want to cry every time I hear about women impregnated with sperm from their OB/GYN and not their partner or chosen donor. I want to cry every time someone sends me a news article that a woman in the middle of a C-section has been raped by her anesthesiologist.

I want to cry every day.

This discussion needs to be in the open. And you need to have it right now.

Lots of strong downvotes for not a lot of explanation.