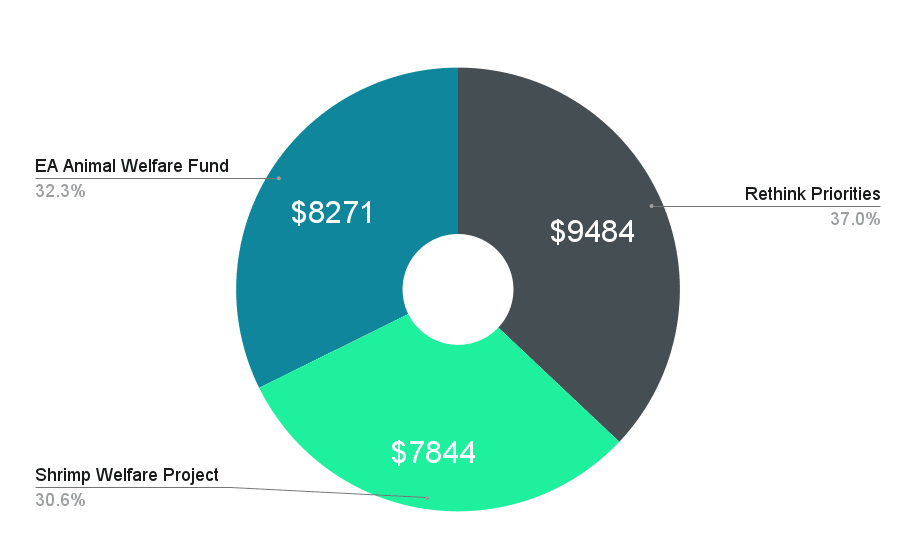

This year’s Donation Election Winners are… no suspense needed… Rethink Priorities, the EA Animal Welfare Fund and the Shrimp Welfare Project!

The prize money will be split based on final vote share, like so:

Thanks again to everyone who voted in the election (all 485 of you[1]), donated to the fund, or participated in the discussion. And a massive thank you to all the organisations who posted for marginal funding week. You can read about them here or listen to many of their posts on this Spotify playlist.

Some more stats

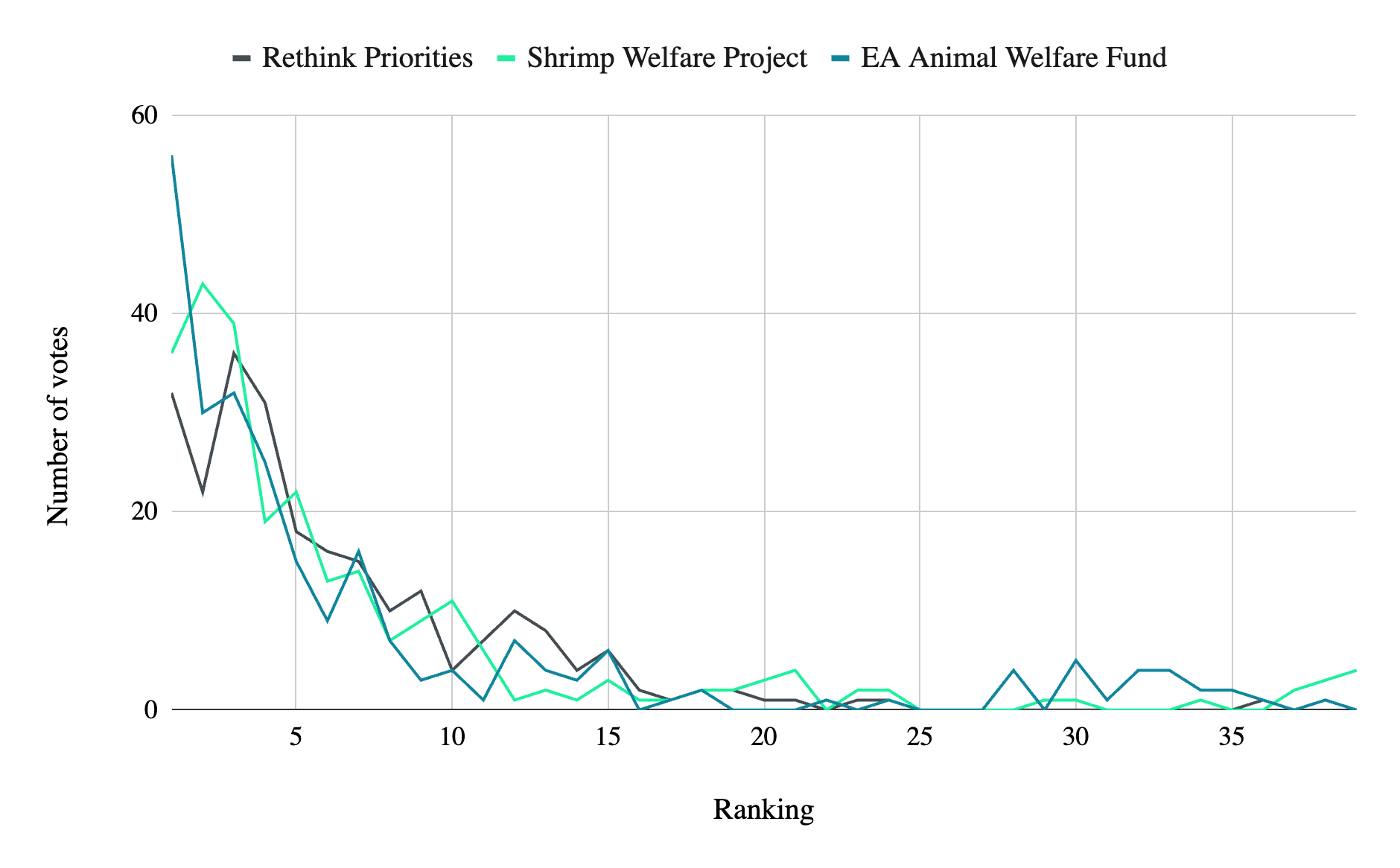

Below is a table listing the winners and the top three runners-up. For each candidate, I’ve listed the total votes (the number of final votes[2] that rank the candidate anywhere on their list) and the number of votes that rank them in first place.

| Winners | Total votes. [3] | Number of 1st place votes. |

| Rethink Priorities | 249 | 32 |

| EA Animal Welfare Fund | 251 | 60 |

| Shrimp Welfare Project | 260 | 36 |

| Runners up | ||

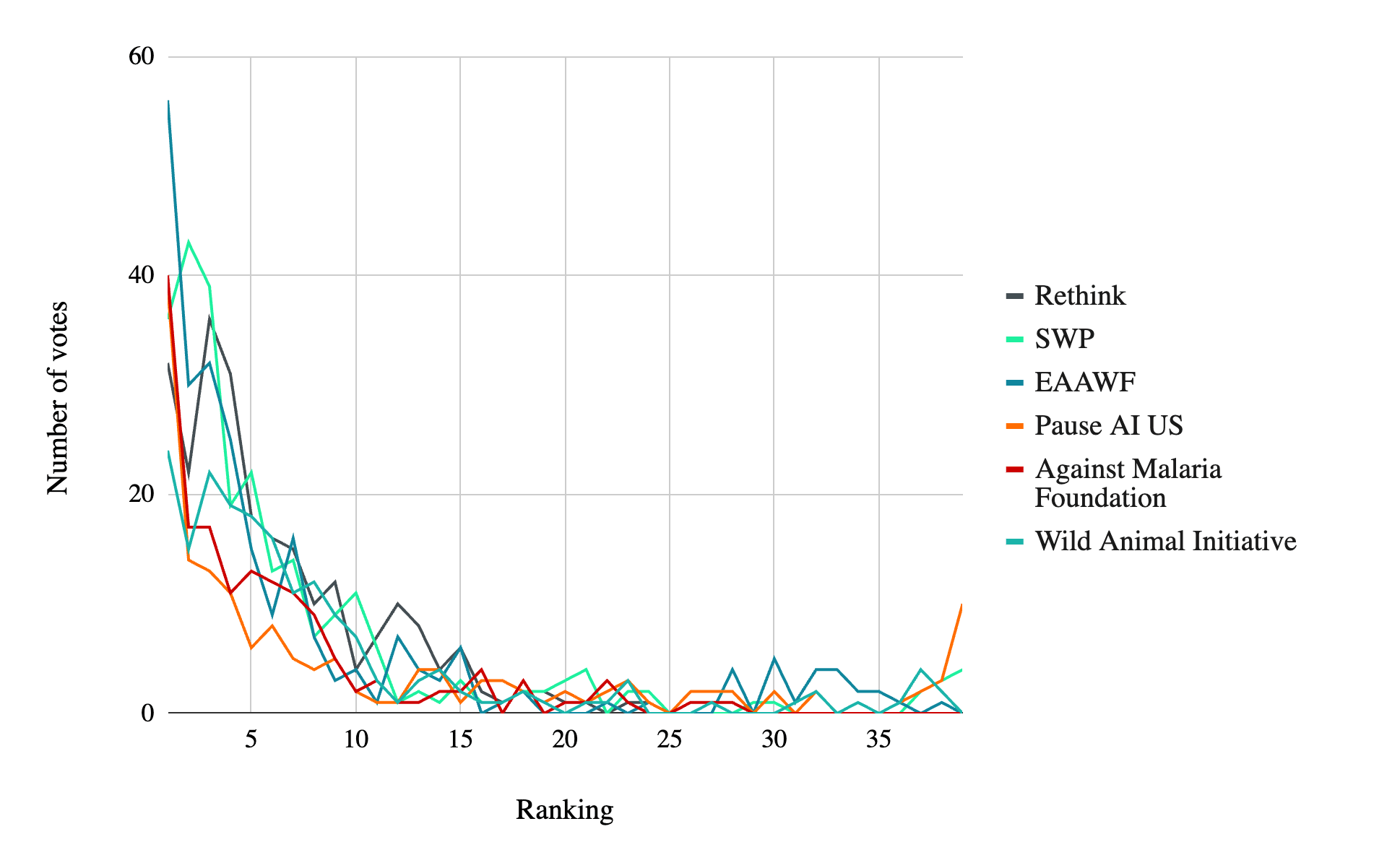

| Against Malaria Foundation | 187 | 44 |

| Pause AI US | 166 | 43 |

| Wild Animal Initiative | 192 | 24 |

The chart below shows that EA AWF was the most popular first choice, the Shrimp Welfare Project was the most popular second and third choice, and Rethink Priorities was the most popular fourth choice.

You might wonder: what would happen if we eliminated more candidates?

If we eliminate one more candidate, the Shrimp Welfare Project drops off, and most of their votes go to the EA Animal Welfare Fund:

If we eliminate another candidate, we get:

If we'd narrowed it down to one candidate, the EA Animal Welfare Fund would have won.

Runners up

As a reminder, this year we used ranked-choice voting. To get the winners, we first look at everyone’s top-ranked candidates and eliminate the candidate ranked top on the fewest votes. Every voter who had the eliminated candidate as their top choice has their remaining rankings moved up one for the next round (so in round 2, the candidate they ranked second is treated as their top choice). This process is repeated until we are left with three candidates.
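For anyone who wants to replay the elimination rounds on the anonymised voting data, here is a minimal sketch of the process described above. It is not the Forum's actual counting code. The function names (`top_choice`, `run_election`), the ballot format (a list of candidate names, best first) and the alphabetical tie-break are all assumptions made for illustration. It also assumes that a ballot skips over any candidate who has already been eliminated, and that a ballot with no remaining candidates is simply not counted in that round.

```python
def top_choice(ballot, remaining):
    """Return the highest-ranked candidate on this ballot who is still in the race."""
    for candidate in ballot:
        if candidate in remaining:
            return candidate
    return None  # every candidate on this ballot has been eliminated


def count_top_choices(ballots, remaining):
    """Count, for each remaining candidate, the ballots that currently rank them top."""
    tally = {candidate: 0 for candidate in remaining}
    for ballot in ballots:
        choice = top_choice(ballot, remaining)
        if choice is not None:
            tally[choice] += 1
    return tally


def run_election(ballots, n_winners=3):
    """Eliminate the candidate with the fewest top-choice votes until n_winners remain.

    Returns (final_tally, rounds), where rounds holds the tally before each elimination.
    """
    remaining = {candidate for ballot in ballots for candidate in ballot}
    rounds = []
    while len(remaining) > n_winners:
        tally = count_top_choices(ballots, remaining)
        rounds.append(tally)
        # Assumed tie-break: among candidates tied for fewest votes,
        # eliminate the alphabetically first one.
        loser = min(sorted(remaining), key=lambda c: tally[c])
        remaining.remove(loser)
    return count_top_choices(ballots, remaining), rounds


# Toy ballots (made up, not the real election data), narrowed to two winners.
example_ballots = [
    ["A", "B"],
    ["A", "C"],
    ["B", "A"],
    ["C", "B"],
    ["D", "B"],
    ["B"],
]
final_tally, rounds = run_election(example_ballots, n_winners=2)
```

In the toy example, C and D are eliminated in turn and their ballots transfer to B, which is the same kind of transfer described for the Against Malaria Foundation's votes below. The final tallies are also the natural input for splitting the prize money by vote share.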

The final runner-up (the last candidate to be eliminated) was the Against Malaria Foundation. When they were eliminated, their votes went to:

- Rethink Priorities: 27 votes

- EA Animal Welfare Fund: 11 votes

- Shrimp Welfare Project: 10 votes

Below is a graph of the votes per ranking for the top three runners-up, alongside the winners. You can look closer at this data (and all the voting data) here.

A reminder — where this money will go.

Rethink Priorities — $9484

Rethink Priorities has more ideas than resources, so extra funding can go to many promising projects. For example:

- $50,000 could pay for research into the global health burden of fungal diseases, a potentially impactful area where good analysis doesn’t yet exist.

- $33,000 could allow the Worldview Investigations team to apply their moral weights work to government spending decisions by finding a way to represent the moral weights of different species in financial terms.

- $60,000 could allow Rethink Priorities to survey US public attitudes toward the potential sentience of AI systems.

This list was selected fairly randomly from Rethink's marginal funding post. For more, I recommend reading the full post.

Shrimp Welfare Project — $7844

The Shrimp Welfare Project’s overheads and program costs are covered until the end of 2026, so marginal funding this giving season will go towards their Humane Slaughter Initiative.

For $55k (source: marginal funding post), the Humane Slaughter Initiative buys a stunner, which will be used by shrimp producers. Not only does a stunner directly make the deaths of a minimum of 120 million shrimps per year more humane, but the Humane Slaughter Initiative also aims to “catalyse industry-wide adoption by deploying stunners to the early adopters in order to build towards a tipping point that achieves critical mass”. In other words, if the use of stunners catches on, many more shrimps will be impacted.

EA Animal Welfare Fund — $8271

In 2024, AWF has distributed $3.7M across 51 grants. Next year, they estimate they can distribute $6.3M at the same bar.

This is because:

- Projects they have already granted to are growing but still aren’t big enough to be funded by larger funders.

- The main bottleneck on them granting to more projects is the amount of money they have to grant, not the number of good projects. They accept a lower portion of applications when they have less funding.

- Good Ventures, the foundation that funds Open Philanthropy, has pulled out of some animal welfare areas, such as insect farming, shrimp welfare, and wild animal welfare. Because of this, funding gaps are opening up at established organisations, which AWF would love to fill.

You can read more about their past grants here.

And more about why their room for more funding is so large here.

Or, simply:

Donate

Before you go:

- Please email us (forum at centreforeffectivealtruism dot org), message me, or comment below with any feedback you have about the Donation Election.

- If I haven't answered all your data questions, consider digging around the anonymised voting data from the election and commenting more data insights below.

[1] This might seem odd because the numbers on the banner add up to less than this (they add to 359 to be precise). But that's because only voters who ranked one of the winning candidates are represented in the banner totals.

[2] Note that we only record the most recent vote for each voter, the “final vote”.

[3] The number of votes that contain a ranking for this charity.

Thanks for sharing the raw data!

Interestingly, of the 44 people who ranked every charity, the candidates with most last-placed votes were: PauseAI = 10, VidaPlena = ARMoR = 5, Whylome = 4, SWP = AMF = Arthropoda = 3, ... . This is mostly just noise I'm guessing, except perhaps that it is some evidence PauseAI is unusually polarising and a surprisingly large minority of people think it is especially bad (net negative, perhaps).

Also here is the distribution of how many candidates people ranked:

I am a bit surprised there were so many people who voted for none of the winning charities - I would have thought most people would have some preference between the top few candidates, and that if their favourite charity wasn't going to win they would prefer to still choose between the main contenders. Maybe people just voted once initially and then didn't update it based on which candidates had a chance of winning.

The all-completers are very likely not representative of the larger group of voters, but it's still interesting to see the trends there. I find it curious that a number of orgs that received lots of positive votes (and/or ranked higher than I would have expected in the IRV finish) also received lots of last-place votes among all-voters.

PauseAI US isn't surprising to me given that the comments suggest a wide range of opinions on its work, from pivotal to net negative. There were fewer votes in 34th to 38th place, which is a difference from some other orgs that got multiple last-place votes.

I'm guessing people who put Arthropoda and SWP last don't think invertebrate welfare should be a cause area, and that people who voted AMF last are really worried about the meat-eater problem. It was notable and surprising to me that 16 of the 52 voters who voted 34+ orgs had AMF in 34th place or worse.

For Vida Plena, I speculate that some voters had a negative opinion on VP's group psychotherapy intervention based on the StrongMinds/HLI-related discussions about the effect size of group psychotherapy in 2022 & 2023, and this caused them to rank VP below orgs on which they felt they had no real information or opinion. I'm not aware of any criticism of VP as an org, at least on the Forum. There are a lot of 34th to 38th place votes for Vida Plena as well (of 52 who voted at least 34 orgs, 32 had VP in one of these slots).

I don't know enough about ARMoR or Whylome to even speculate.

I was curious about the thing with Vida Plena too (since I work for Kaya Guides, and we’re generally friendly). I cooked up a quick data explorer for the three mental health charities:

It’s an interesting pattern. It does appear like Kaya Guides and Vida Plena got a pretty equal number of partial votes, suggesting that people favourable to those areas ranked them equally highly. But among completers, Vida Plena got a lot of lower votes—even more so than ACTRA! Given that ACTRA is very new, I think they make a good control group, which to me implies something like ‘completers think Vida Plena is likely to be less favourable than the average unknown mental health charity’.

Your hypothesis makes sense to me; many in the EA community don’t know the specifics of Vida Plena’s program or its potential for high cost-effectiveness, probably due to previous concerns around HLI’s evaluations. I personally think this is unfounded, and clearly many partial voters agree, as Vida Plena ranked quite highly even if you assume that some number of these partial voters sorted by a GHD focus and only voted for GHD charities (higher than us!).

This is a popular way to count ranked ballots, but it really shouldn't be. Counting only first-choice votes in each round means you are discarding many of the preferences that voters expressed on their ballots, which can incorrectly eliminate candidates through vote-splitting, even when a supermajority of voters preferred them over others.

Please set a good example and don't use plurality-based systems like this.

If you count all voter preferences, instead of only first-choice rankings, the set of top three winners is the same, but in a different order and with different proportions:

See https://forum.effectivealtruism.org/posts/RzdKnBYe3jumrZxkB/giving-season-2025-announcement?commentId=ZjhgdhWQ2LQ6TL8kC

Noting here that the totals going to each charity have been updated (upwards), due to a matching miscalculation. This also means that we have hit two of the collective rewards rather than one, so keep an eye out for that!