Upvotes or likes have become a standard way to filter information online. The quality of this filter is determined by the users handing out the upvotes.

For this reason, the archetypal pattern of online communities is one of gradual decay. People are more likely to join communities where users are more skilled than they are. As communities grow, the skill of the median user goes down. The capacity to filter for quality deteriorates. Simpler, more memetic content drives out more complex thinking. Malicious actors manipulate the rankings through fake votes and the like.

This is a problem that will get increasingly pressing as powerful AI models start coming online. To ensure our capacity to make intellectual progress under those conditions, we should take measures to future-proof our public communication channels.

One solution is redesigning the karma system in such a way that you can decide whose upvotes you see.

In this post, I’m going to detail a prototype of this type of karma system, which has been built by volunteers in Alignment Ecosystem Development. EigenKarma allows each user to define a personal trust graph based on their upvote history.

EigenKarma

At first glance, EigenKarma behaves like normal karma. If you like something, you upvote it.

The key difference is that in EigenKarma, every user has a personal trust graph. If you look at my profile, you will see the karma assigned to me by the people in your trust network. There is no global karma score.

Imagine this trust graph powering a feed. Even if I have gamed the algorithm and collected a million upvotes, it doesn’t matter: my blog post won’t filter through to you, since you put no weight on the judgment of the anonymous masses.

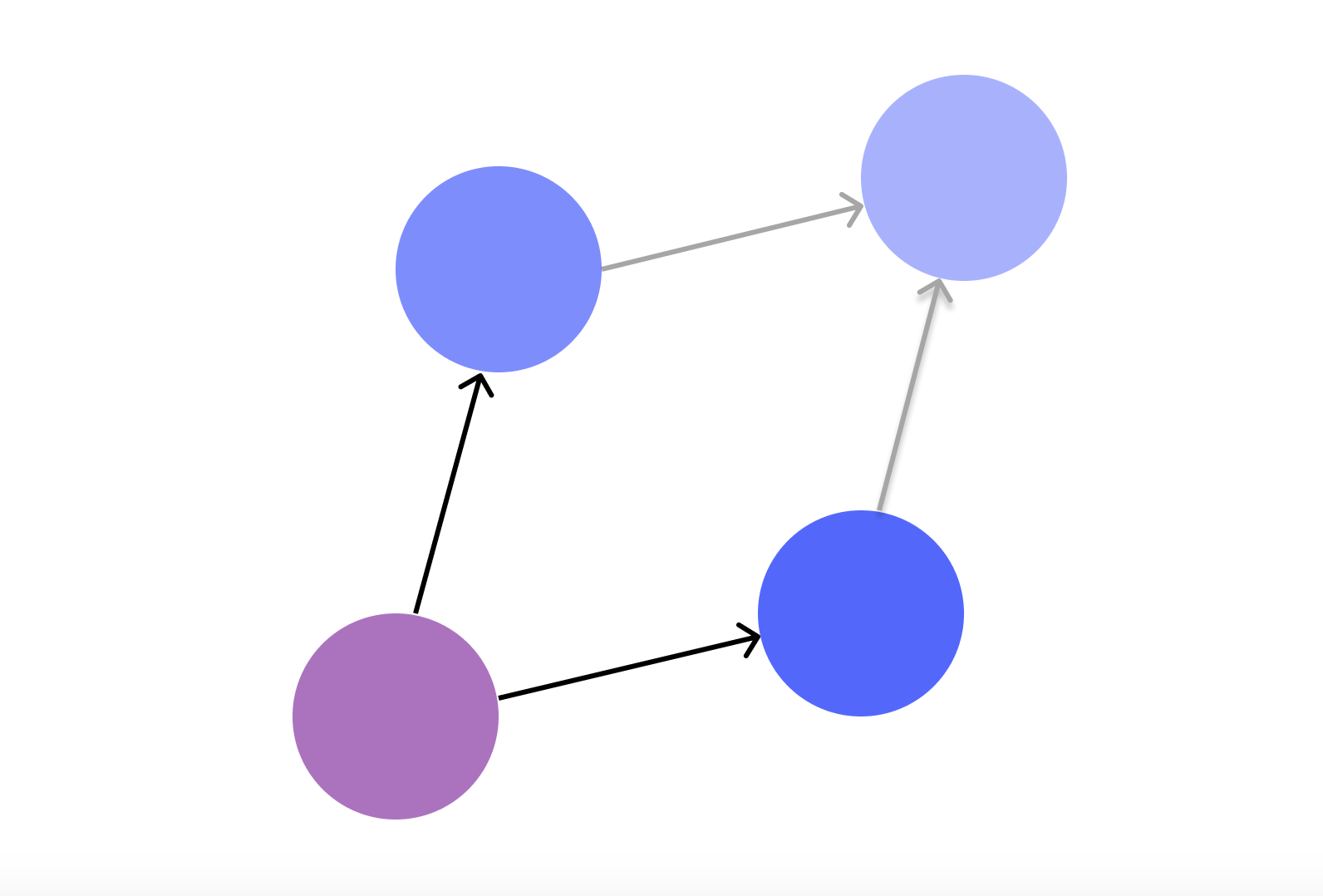

If you upvote someone you don’t know, they are attached to your trust graph. This can be interpreted as a tiny signal that you trust them:

That trust will also spread to the users they trust in turn. If they trust user X, for example, you too trust X—a little:

This is how we intuitively reason about trust when thinking about our friends and the friends of our friends. But because EigenKarma is a database, it can remember and compile far more data than you can, so it can keep track of more relationships than Dunbar’s number allows. It scales trust. Karma propagates outward through the network from trusted node to trusted node.

Once you’ve given out a few upvotes, you can look up people you have never interacted with, like K., and see if people you “trust” think highly of them. If several people you “trust” have upvoted K., the karma they have given to K. is compiled together. The more you “trust” someone, the more karma they will be able to confer:
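The post does not spell out EigenKarma’s formula, so the following is only a minimal sketch of the propagation described above, assuming a personalized PageRank: a random walk over upvotes that restarts at the viewer. The input format (`upvotes[i][j]` counts user i’s upvotes of user j), the helpers `trust_scores` and `karma_as_seen_by`, and the damping value 0.85 are illustrative assumptions, not the project’s API.

```python
import numpy as np


def trust_scores(upvotes, viewer, damping=0.85):
    """Assumed model: personalized trust from `viewer` to every user.

    upvotes[i][j] = number of times user i upvoted user j (hypothetical format).
    """
    A = np.asarray(upvotes, dtype=float)
    n = A.shape[0]
    row_sums = A.sum(axis=1, keepdims=True)
    # Each user splits one unit of trust across the people they upvoted;
    # users who never upvoted anyone pass nothing on.
    P = np.divide(A, row_sums, out=np.zeros_like(A), where=row_sums > 0)
    restart = np.zeros(n)
    restart[viewer] = 1.0
    # Fixed point of r = (1 - d) * restart + d * P^T r: trust flows outward
    # along upvotes and shrinks by a factor of d at every hop.
    return (1 - damping) * np.linalg.solve(np.eye(n) - damping * P.T, restart)


def karma_as_seen_by(upvotes, viewer, target, damping=0.85):
    """Upvotes on `target`, each weighted by how much `viewer` trusts the voter."""
    A = np.asarray(upvotes, dtype=float)
    trust = trust_scores(A, viewer, damping)
    return float(trust @ A[:, target])


# Users: 0 = you, 1 = someone you upvoted, 2 = X, 3 = K,
# 4 = a user with a million upvotes from 5, an account outside your graph.
upvotes = np.zeros((6, 6))
upvotes[0, 1] = 1          # you upvote user 1
upvotes[1, 2] = 1          # user 1 trusts X
upvotes[2, 3] = 1          # X upvotes K
upvotes[5, 4] = 1_000_000  # gamed votes from outside your trust graph

trust = trust_scores(upvotes, viewer=0)
print(trust.round(4))
print(karma_as_seen_by(upvotes, 0, 3), karma_as_seen_by(upvotes, 0, 4))
```

In this toy graph your trust shrinks by a factor of 0.85 per hop (0.15 for yourself, 0.1275 for user 1, and so on), and user 4’s million upvotes add nothing to the karma you see, because no trusted path reaches the account that cast them. Solving the linear system directly only works for small graphs; larger ones would need sparse matrices and iterative solvers.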

I have written about trust networks and scaling them before, and there’s been plenty of research suggesting that this type of “transitivity of trust” is a highly desired property of a trust metric. But until now, we haven’t seen a serious attempt to build such a system. It is interesting to see it put to use in the wild.

Currently, you access EigenKarma through a Discord bot or the website. But the underlying trust graph is platform-independent. You can connect the API (which you can find here) to any platform and bring your trust graph with you.

Now, what does a design like this allow us to do?

EigenKarma is a primitive

EigenKarma is a primitive. It can be inserted into other tools. Once you start to curate a personal trust graph, it can be used to improve the quality of filtering in many contexts.

- It can, as mentioned, be used to evaluate content.

- This lets you curate better personal feeds.

- It can also be used as a forum moderation tool.

- What should be shown? Work that is trusted by the core team, perhaps, or work trusted by the user accessing the forum?

- It can also be used to compute an H-index that evaluates researchers by counting only citations from authors you trust.

- This can filter out citation rings and other ways of gaming the system, allowing online research communities to avoid some of the problems that plague universities.

- Extending this capacity to evaluate content, you can also use EigenKarma to index trustworthy web pages. This can form the basis of a search engine that is more resistant to SEO.

- In this context, you can have hyperlinks count as upvotes.

- Another way you can use EigenKarma is to automate who gets privileges in a forum or Discord server: whoever is trusted by the core team, or by someone they trust.

- If you are running a grant program, EigenKarma might increase the number of applicants you can correctly evaluate.

- Researchers channel their trust to the people they think are doing good work. Then the grantmakers can ask questions such as: "conditioning on interpretability-focused researchers as the seed group, which candidates score highly?" Or, they'll notice that someone has been working for two years but no one trusted thinks what they're doing is useful, which is suspicious.

- This does not replace due diligence, but it could reduce the amount of time needed to assess the technical details of a proposal or how the person is perceived.

- You can also use it to coordinate work in distributed research groups. If you enter a community that runs EigenKarma, you can see who is highly trusted, and what type of work they value. By doing the work that gives you upvotes from valuable users, you increase your reputation.

- With normal upvote systems, the incentives tend to push people to collect “random” upvotes. Since likes and upvotes, unweighted by their importance, are what is tracked on pages like Reddit and Twitter, it is emotionally rewarding to make those numbers go up, even if it is not in your best interest. With EigenKarma this is not an effective strategy, and so you get more alignment around clear visions emanating from high-agency individuals.

- Naturally, if EigenKarma were used by everyone, which we are not aiming for, a lot of people would coalesce around charismatic leaders too. But to the extent that happens, these dysfunctional bubbles are isolated from the better-functioning parts of the trust graph, since users who are good at evaluating whom to trust will sever their connections to them.

- EigenKarma also makes it easier to navigate new communities, since you can see who is trusted by people you trust, even if you have not interacted with them yet. This might improve onboarding.

- You could, in theory, connect it to Twitter and have your likes and retweets counted as updates to your personal karma graph. Or you could import your upvote history from LessWrong or the AI alignment forum. And these forums can, if they want to, use the algorithm, or the API, to power their internal karma systems.

- Keeping your trust graph separate from particular services could also let you filter your own trusted subsection of the internet more broadly.
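The H-index idea from the list above can be sketched in a few lines, assuming you already have a trust score for each citing author from your personal trust graph. The `h_index` and `trusted_h_index` helpers, the input shapes, and the `min_trust` cutoff are hypothetical choices for illustration.

```python
def h_index(citation_counts):
    """Largest h such that at least h papers have at least h citations each."""
    h = 0
    for rank, count in enumerate(sorted(citation_counts, reverse=True), start=1):
        if count < rank:
            break
        h = rank
    return h


def trusted_h_index(papers, trust, min_trust=0.0):
    """H-index counting only citations from authors the viewer trusts.

    papers: one list of citing-author ids per paper (hypothetical format).
    trust: author id -> trust score from the viewer's own graph (hypothetical).
    Authors missing from `trust`, or at or below `min_trust`, are ignored.
    """
    counts = [
        sum(1 for author in citing if trust.get(author, 0.0) > min_trust)
        for citing in papers
    ]
    return h_index(counts)


# Toy data: ring1..ring3 are accounts outside your trust graph (e.g. a citation ring).
papers = [
    ["a", "b", "c"],
    ["a", "b", "ring1", "ring2"],
    ["ring1", "ring2", "ring3"],
    ["c"],
]
trust = {"a": 0.2, "b": 0.1, "c": 0.05}

print(h_index([len(citing) for citing in papers]))  # every citation counts: 3
print(trusted_h_index(papers, trust))               # trusted citations only: 2
```

Here the ring’s citations drop out, so the second paper falls to two counted citations and the third to none, and the h-index goes from 3 to 2.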

If you are interested

We’re currently test-running it on Superlinear Prizes, Apart Research, and in a few other communities. If you want to use EigenKarma in a Discord server or a forum, I encourage you to talk with plex on the Alignment Ecosystem Development Discord server. (Or just comment here and I’ll route you.)

There is work to be done if you want to join as a developer, especially optimizing the core algorithm’s linear algebra to be able to handle scale. If you are a grantor and want to fund the work, the lead developer would love to be able to rejoin the project full-time for a year for $75k (and open to part-time for a proportional fraction, or scaling the team with more).

We’ll have an open call on Tuesday the 14th of February if you want to ask questions (link to Discord event).

As we progress toward increasingly capable AI systems, our information channels will be subject to ever larger numbers of bots and malicious actors flooding our information commons. To ensure that we can make intellectual progress under these conditions, we need algorithms that can effectively allocate attention and coordinate work on pressing issues.

As a mathematician I think this is cool and interesting and I'd be glad to know what comes out of these experiments.

As a citizen I'm concerned about the potential to increase gatekeeping, groupthink and polarisation, and most of all about the major privacy risk. Like, if I open a new account and upvote a single other user, can I now figure out exactly who they have upvoted? Even if I can't in this manner, what can I glean from looking, as your example suggests, at the most trusted individuals in a community etc.?

As it is currently set up, you could start a blank account and give someone a single upvote and then you would see something pretty similar to their trust graph. You would see whom they trust.

It could, I guess, be used to figure out attack vectors for a person - someone trusted that can be compromised. This does not seem like something that would be problematic in the contexts where this system would realistically be implemented over a short to medium term. But it is something to keep in mind as we iterate on the system with more users onboard.
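Under an assumed model, the reply’s point can be made exact. If trust is computed as a personalized PageRank (an assumption; the post does not give EigenKarma’s formula), a brand-new account whose only action is one upvote on user T sees, for every other user, exactly d times T’s own trust scores, where d is the damping factor. The sketch below checks this on a toy graph; `personalized_trust` and `probe_with_fresh_account` are hypothetical helpers.

```python
import numpy as np


def personalized_trust(upvotes, viewer, damping=0.85):
    """Assumed model: personalized PageRank over the upvote graph from `viewer`."""
    A = np.asarray(upvotes, dtype=float)
    n = A.shape[0]
    row_sums = A.sum(axis=1, keepdims=True)
    P = np.divide(A, row_sums, out=np.zeros_like(A), where=row_sums > 0)
    restart = np.zeros(n)
    restart[viewer] = 1.0
    return (1 - damping) * np.linalg.solve(np.eye(n) - damping * P.T, restart)


def probe_with_fresh_account(upvotes, target, damping=0.85):
    """What a brand-new account sees after a single upvote on `target`.

    The result is divided by `damping` so it can be compared directly
    with `target`'s own trust vector.
    """
    A = np.asarray(upvotes, dtype=float)
    n = A.shape[0]
    B = np.zeros((n + 1, n + 1))
    B[:n, :n] = A
    B[n, target] = 1.0  # the probe's one and only upvote
    seen = personalized_trust(B, viewer=n, damping=damping)
    return seen[:n] / damping  # drop the probe's own entry


upvotes = np.array([
    [0, 2, 1, 0],
    [0, 0, 1, 1],
    [1, 0, 0, 3],
    [0, 1, 0, 0],
])
print(np.allclose(probe_with_fresh_account(upvotes, target=1),
                  personalized_trust(upvotes, viewer=1)))
```

Because nobody has upvoted the probe account, trust never flows back to it, so under this model its view is a scaled copy of the target’s, not merely something similar.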

It does seem like an important point that your trust graph is effectively public even if you don't expose it in the API.

I think the privacy risk is more prominent when you don't know that information is being made public. A system like this would obviously tell users that their upvotes count toward the trust system, and people would upvote accordingly. I guess we could make it all hidden, similar to YouTube's algorithm, where the trust graph is not publicly available and only works in the background to change the upvotes you see for the people you trust (so it changes the upvotes, but you don't see how, and other people can't see yours). But I believe that would be more concerning and more likely to be abused than if the information is always available to everyone.

As for the gatekeeping and groupthink, I think that already happens regardless of a trust system, and is a consequence of tribalism in the real world rather than its cause. Honestly, I see it as beneficial that people who despise each other get more separated; usually it's the forceful interaction between opposing groups that results in violent outcomes.

The literature on differential privacy might be helpful here. I think I may know a few people in the field, although none of them are close.

I have put some thought into the privacy aspect, and there are ways to make it non-trivial or even fairly difficult to extract someone's trust graph, but nothing which actually hides it perfectly. That's why the network would have to be opt-in, and likely would not cover negative votes.

I'd be interested to hear the unpacked version of your worries about "gatekeeping, groupthink and polarisation".

I don't have time to write it in detail, but basically I'm referring to two ideas here regarding what a user sees:

Both lead to gatekeeping because new users aren't trusted by anyone so their content can't get through.

Thanks for your post! It was interesting and I'm curious to learn more.

My main worry reading this post was that EigenKarma might create social bubbles and group-think. Not just in a few isolated cases of charismatic leaders you mention, but more generally too. For example, a lot of social networks that have content curation based on people you already follow seem to have that dynamic (I'm unsure how big a problem this really is – but it gets mentioned a lot).

E.g. If I identify with Red Tribe and upvote Red Tribe, I will increasingly see other posts upvoted by Red Tribe (and not Blue Tribe). That would make it harder to learn new information or have my priors challenged.

How is EigenKarma different from social networks that use curation algorithms based on people's previous likes and follows? Is this an issue in practice and if so how would you try to mitigate it?

[These are intended as genuine questions to check whether I am reading your post correctly :)]

In my understanding, EigenKarma only creates bubbles if it also acts as a default content filter. If, for example, it is just displayed near usernames, it shouldn't have this effect but would still retain its use as a signal of trustworthiness.

Also, sometimes creating a bubble -- a protected space -- is exactly what you want to achieve, so it might be the correct tool to use in specific contexts.

It's the first time I read about this, so please correct me if I'm misunderstanding.

Personally, I find the idea very interesting.

Can you explain with an example when a bubble would be a desirable outcome?

One class of examples could be when there's an adversarial or "dangerous" environment. For example:

Another class of examples could be when a given topic requires some complex technical understanding. In that case, a community might want only to see posts that are put forward by people who have demonstrated a certain level of technical knowledge. Then they could use EigenKarma to select them. Of course, there must be some way to enable the discovery of new users, but how much of a problem this is depends on implementation details. For example, you could have an unfiltered tab and a filtered one, or you could give higher visibility to new users. There could be many potential solutions.

Right, the first class are the use cases that the OP put forward, and vote brigading is something that the admins here handle.

The second class is more what I was asking about, so thank you for explaining why you would want a conversation bubble. If you're going to go that far for that reason, I think you could consider an entrance quiz. Then people who want to "join the conversation" could take the quiz, or read a recommended reading list and then take the quiz, to gain entrance to your bubble.

I don't know how aversive people would find that, but if lack of technical knowledge were a true issue, that would be one approach to handling it while still widening the group of conversation participants.

There is a family resemblance with the way something like Twitter is set up. There are a few differences:

How does this affect the formation of bubbles? I'm not sure. My guess is that it should reduce some of the incentives that drive the tribe-forming behaviors at Twitter.

I'm also not sure that bubbles are a massive problem, especially for the types of communities that would realistically be integrated into the system. This last point is loosely held, I invite strong criticism of it, and it is something we are paying attention to as we run trials with larger groups. You could combine EigenKarma with other types of designs that counteract these problems if they are severe (though I haven't worked through that idea deeply).

tbh I feel like too much exposure to the bottom 50% of outgroup content is the main thing driving polarisation on twitter and it seems to me that EAs are the sort of people to upvote/promote the outgroup content that's actually good

in general I feel like worries about social media bubbles are overstated. if people on the EA forum split into factions which think little enough of each other that people end up in bubbles I feel like this means we should split up into different movements.

Yeah it'd be cool if @Henrik Karlsson and team could come up with a way to defend against social bubbles while still having a trust mechanic. Is there some way to model social bubbles and show that eigenkarma or some other mechanism could prevent social bubbles but still have the property of trusting people who deserve trust?

For instance maybe users of the social network are shown anyone who has trust, and 'trust' is universal throughout the community, not just something that you have when you're connected to someone else? Would that prevent the social bubble problem, while still allowing users to filter out the low quality content from untrusted users?

Have you considered talking to the Lightcone folks and seeing if it can be implemented in forum magnum?

No conversation that I have been a part of yet. But it is of course something that would be very interesting to discuss.

This is an interesting idea, and something in this area might be pretty neat, but when I imagine using it I feel like I'm regularly going to be faced with a dilemma of whether to upvote someone's post. If someone who usually has (in my opinion) terrible judgment, makes a great point, today I happily upvote to show appreciation. But if upvoting them also meant slightly increasing how much the system thinks I care about their judgment, I would be much more reticent to do it.

This system works by using who you upvote as a proxy for whose upvotes you trust. In addition to the problem described above, there's a separate issue which is that I don't even know people's upvote patterns. Which means talking about my voting activity as a proxy for my assessment of upvoting trust is a bit fraught.

Topically, this might be a useful part of a strategy to help the EA forum stay focused on the most valuable things, if people had the option to sync their own vote history with the EigenKarma Network and use EKN lookup scores to influence the display and prioritization of posts on the front page. We'd be keen to collaborate with the EAF team to make this happen, if the community is excited.

At first I was confused because we already use something we call eigenkarma, but reading more closely I see that you have separate weightings for each person whereas we have a single global weighting. (Also we use an approximation, but still spiritually derived from the idea of calculating the eigenvectors of a vote matrix.)

I think this is an interesting idea; I have a bunch of uncertainty but I'm interested to see what other people think.

(Note: speaking on behalf of myself, not sure what other forum team people think.)
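The distinction between a single global weighting and a separate weighting per person can be written out under a PageRank-style assumption; neither system's exact formula appears in this thread, and the forum's version is described as an approximation. Take $P$ as the row-normalized upvote matrix, $d$ as a damping factor, $n$ as the number of users, $\mathbf{1}$ as the all-ones vector, and $e_u$ as the indicator vector of user $u$:

```latex
% Global: one vector of vote weights for everyone (restart uniformly)
r = (1-d)\,\tfrac{1}{n}\,\mathbf{1} + d\,P^{\top} r
% Personal: a separate vector for each viewer u (restart only at u)
r_u = (1-d)\,e_u + d\,P^{\top} r_u
% Both solutions are linear in the restart vector, so
r = (1-d)\,(I - d\,P^{\top})^{-1}\,\tfrac{1}{n}\mathbf{1} = \tfrac{1}{n}\sum_{u} r_u
```

So under this assumption the global weighting is the average of everyone's personal weightings. When every user has upvoted someone, $r$ is also the principal eigenvector of $d\,P^{\top} + \tfrac{1-d}{n}\mathbf{1}\mathbf{1}^{\top}$, which connects it to the eigenvector idea mentioned above.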

I'm curious about what the thing you call EigenKarma is, is it the way people with more karma have more weighty votes? Or is it something with a global eigenvector?

I personally would want them to factor the problem of social bubbles into their model and figure out some way of preventing that while still building up 'trust points'.

Thanks for posting this! Karma systems are an underappreciated aspect of forum design, and we should definitely consider ways to improve their effects on discourse.

tl;dr The forum software developer habryka has ideas on alternative karma systems and there may even be some ready to use code.

I first heard of EigenKarma in this post from habryka about LessWrong 2.0, where he describes a system inspired by Scott Aaronson's eigendemocracy "in which the weights of the votes of a user depends on how many other trustworthy users have upvoted that user." There is some discussion in the comments to that post which complements this one.

I just searched for project outcomes to update on but didn't find information on what they ended up implementing. I did find this comprehensive commentary on varied purposes of karma systems from the forum software developer, highly recommended read.

I get the sense that they investigated it seriously and there may be prototype or even working code that you could use!

Hmm. I've watched the scoring of topics on the forum, and have not seen much interest in topics that I thought were important for you, either because the perspective, the topic, or the users were unpopular. The forum appears to be functioning in accordance with the voting of users, for the most part, because you folks don't care to read about certain things or hear from certain people. It comes across in the voting.

I filter your content, but only for myself. I wouldn't want my peers, no matter how well informed, deciding what I shouldn't read, though I don't mind them recommending information sources and I don't mind recommending sources of my own, on a per source basis. I try to follow the rule that I read anything I recommend before I recommend it. By "source" here I mean a specific body of content, not a specific producer of content.

I actually hesitate to strong vote, btw, it's ironic. I don't like being part of a trust system, in a way. It's pressure on me without a solution.

I prefer "friends" to reveal things that I couldn't find on my own, rather than, for their lack of "trust", hide things from me. More likely, their lack of trust will prove to be a mistake in deciding what I'd like to read. No one assumes I will accept everything I read, as far as I know, so why should they be protecting me from genuine content? I understand spam, AI spam would be a real pain, all of it leading to how I need viagra to improve my epistemics.

If this were about peer review and scientific accuracy, I would want to allow that system to continue to work, but still be able to hear minority views, particularly as my background knowledge of the science deepens. Then I fear incorrect inferences (and even incorrect data) a bit less. I still prefer scientific research to be as correct as possible, but scientific research is not what you folks do. You folks do shallow dives into various topics and offer lots of opinions. Once in a while there's some serious research but it's not peer-reviewed.

You referred to AI gaming the system, etc, and voting rings or citation rings, or whathaveyou. It all sounds bad, and there should be ways of screening out such things, but I don't think the problem should be handled with a trust system.

An even stronger trust system will just soft-censor some people or some topics more effectively. You folks have a low tolerance for being filters on your own behalf, I've noticed. You continue to rely on systems, like karma or your self-reported epistemic statuses, to try to qualify content before you've read it. You absolutely indulge false reasons to reject content out of hand. You must be very busy and so you make that systematic mistake.

Implementing an even stronger trust system will just make you folks even more marginalized in some areas, since EA folks are mistaken in a number of ways. With respect to studies of inference methods, forecasting, and climate change, for example, the posting majority's view here appears to be wrong.

I think it's baffling that anyone would ever risk a voting system for deciding the importance of controversial topics open to argument. I can see voting working on Stack Overflow, where answers are easy to test, and give "yes, works well" or "no, doesn't work well" feedback about, at least in the software sections. There, expertise does filter up via the voting system.

Implementing a more reliable trust system here will just make you folks more insular from folks like me. I'm aware that you mostly ignore me. Well, I develop knowledge for myself by using you folks as a silent, absorbing, disinterested, sounding board. However, if I do post or comment, I offer my best. I suppose you have no way to recognize that though.

I've read a lot of funky stuff from well-intentioned people, and I'm usually ok with it. It's not my job, but there's usually something to gain from reading weird things even if I continue to disagree with their content. At the very least, I develop pattern recognition useful to better understand and disagree with arguments: false premises, bogus inferences, poor information-gathering, unreliable sources, etc, etc. A trust system will deprive you folks of experiencing your own reading that way.

What is in fact a feature must seem like a bug:

"Hey, this thing I'm reading doesn't fit what I'd like to read and I don't agree with it. It is probably wrong! How can I filter this out so I never read it again. Can my friends help me avoid such things in future?"

Such an approach is good for conversation. Conversation is about what people find entertaining and reaffirming to discuss, and it does involve developing trust. If that's what this forum should be about, your stronger trust system will fragment it into tiny conversations, like a party in a big house with different rooms for every little group. Going from room to room would be hard, though. A person like me could adapt by simply offering affirmations and polite questions, and develop an excellent model of every way that you're mistaken, without ever offering any correction or alternative point of view, all while you trust that I think just like you. That would have actually served me very well in the last several months. So, hey, I have changed my mind. Go ahead. Use your trust system. I'll adapt.

Or ignore you ignoring me. I suppose that's my alternative.

I think the word "filter", which I used, may give the impression that this is about hiding information. The system is more likely to be used to rank-order information, so that information deemed valuable by people you trust is more likely to bubble up to you. It is supposed to be a way to augment your ability to sort through information and social cues to find competent people and trustworthy information, not a system to replace it.

I understand, Henrik. Thanks for your reply.

Forum karma

The karma system works similarly to highlight information, but there are some edge cases. Posts appear in and disappear from front-page views based on karma. New comments that get negative karma are not listed in the new comments on the homepage, by default.

This forum in relation to the academic peer review system

The peer review system in scientific research is truly different than a forum for second-tier researchers doing summaries, arguments, or opinions. In the forum there should be encouragement of access to disparate opinions and perspectives.

The value of disparate information and participants here

Inside the content offered here are recommendations for new information. I evaluate that information according to more conventional critical thinking criteria: peer-reviewed, established science, good methodology. Disparate perspectives among researchers here let me gain access to multiple points of view found in academic literature and fields of study. For example, this forum helped me research a foresight conflict between climate economists and earth scientists that is long-standing (as well as related topics in climate modeling and scenario development).

NOTE: Peer-reviewed information might have problems as well, but not ones to fix with a voting system relying on arbitrary participants.

Forum perspectives should not converge without rigorous argument

Another system that bubbles up what I'd like to read? OK, but will it filter out divergence, unpopular opinions, evidence that a person has a unique background or point of view, or a new source of information that contradicts current information? Will your system make it harder to trawl through other researchers' academic sources by making it less likely that forum readers ever read those researchers' posts?

In this environment, among folks who go through summaries, arguments, and opinions for whatever reason, once an information trend appears, if it's different and valid, it lets me course-correct.

The trend could signal something that needs changing, like "Here's new info that passes muster! Do something different now!" or signal that there's a large information gap, like "Whoa, this whole conversation is different! I either seem to disagree with all of the conclusions or not understand them at all. What's going on? What am I missing?"

A learning environment

Forum participants implicitly encourage me to explore bayesianism and superforecasting. Given what I suspect are superforecasting problems (its aggregation algorithms and bias in them and in forecast confirmations), I would be loath to explore it otherwise. However, obviously smart people continue to assert its value in a way that I digest as a forum participant. My minority opinion of superforecasting actually leads to me learning more about it because I participate in conversation here. However, if I were filtered out in my minority views so strongly that no one ever conversed with me at all, I could just blog about how EA folks are really wrong and move on. Not the thing to do, but do you see why tolerance of my opinions here matters? It serves both sides.

From my perspective, it takes patience to study climate economists, superforecasting, bayesian inference, and probabilism. Meanwhile, you folks, with different and maybe better knowledge than mine on these topics, but a different perspective, provide that learning environment. If there can be reciprocation, that's good, EA folks deserve helpful outside perspectives.

My experience as other people's epistemic filter

People ignore my recommendations, or the intent behind them. They either don't read what I recommend, or much more rarely, read it but dismiss it without any discussion. If those people use anonymized voting as their augmentation approach, then I don't want to be their filter. They need less highlighting of information that they want to find, not more.

Furthermore, at this level of processing information, secondary or tertiary sources, posts already act like filters. Ranking the filtering to decide whether to even read it is a bit much. I wouldn't want to attempt to provide that service.

Conclusion

ChatGPT and the new focus on conversational interfaces make it possible that future forum participants will be AI, not people. If so, they could be productive participants rather than spam bots.

Meanwhile, the forum could get rid of the karma system altogether, or add configuration that lets a user turn off karma voting and ranking. That would be a pleasant alternative for someone like me, who rarely gets much karma anyway. That would offer even less temptation to focus on popular topics or feign popular perspectives.