Hi all,

Some of you may remember that a while back, Vetted Causes posted a quite poor review of Animal Charity Evaluators on this forum, which led to a lengthy discussion between the two in the comments.

Vetted Causes has now released their first review of one of Animal Charity Evaluators' top charities. Here are the two reviews:

Review of Sinergia Animal by Animal Charity Evaluators

Review of Sinergia Animal by Vetted Causes

As a long-time donor to Animal Charity Evaluators, I obviously find it troubling that one of the charities they recommend might be vastly overestimating its own impact, or even claiming successes as their own which they had no part in. At the same time, I am not sure how trustworthy Vetted Causes is, as their initial review of ACE was - imo - worded quite poorly, and their review of Sinergia Animal almost sounds a bit - for lack of a better term - unbelievably negative, claiming problems with every single (7 out of 7) pig welfare commitment achieved by Sinergia Animal in 2023.

This leaves me in a difficult position where I don't really know who to believe and if I should cancel my donations to Animal Charity Evaluators based on this.

That's why I wanted to ask for some additional opinions: do you all find Vetted Causes' review trustworthy, and if so, who should I donate to instead of ACE to help the most animals possible going forward?

(For transparency, I am not associated with ACE, Vetted Causes or Sinergia Animal, beyond my donation to ACE.)

Thank you!

It's EAG weekend. I would give it at least a week before rushing to a judgement.

Hi Marcus,

Thanks for your comment.

I want to acknowledge that members of this community have shared this post with us, and we truly appreciate your engagement and interest in our work. A deep commitment to create real change, transparency and honesty have always been central to our approach, and we will address all concerns accordingly.

To clarify in advance, we have never taken credit for pre-existing or non-existent policies, and we will explain this in our response. We always strive to estimate our impact in good faith and will carefully review our methodology based on this feedback to address any concerns, if valid.

This discussion comes at a particularly busy time for us, as we have been attending EA Global while continuing our critical work across eight countries. We appreciate your patience as we prepare a thorough response.

As a best practice, we believe organizations mentioned by others in posts should have the chance to respond before content is published. We take the principle of the right to reply so seriously that we even extend it to companies targeted in our campaigns or enforcement programs. In that spirit, we will share our response with Vetted Causes via the email provided on their website 24 hours (or as much time as Vetted Causes prefers) before publishing it on the Forum.

The EA community has been a vital supporter of our work, and we hope this serves as a constructive opportunity to provide further insight into our efforts and approach.

Best,

Carolina

Thanks, yeah this seems like a reasonable approach. Hoping for a statement from ACE or Sinergia.

I think it's pretty safe to assume that the reality of most charities' cost-effectiveness is less than they claim.

I'd also advise skepticism of a critic who doesn't attempt to engage with the charity to make sure they're fully informed before releasing a scathing review. [I also saw signs of naive "cost-effectiveness analysis goes brrr" style thinking about charity evaluation from their ACE review, which makes me more doubtful of their work].

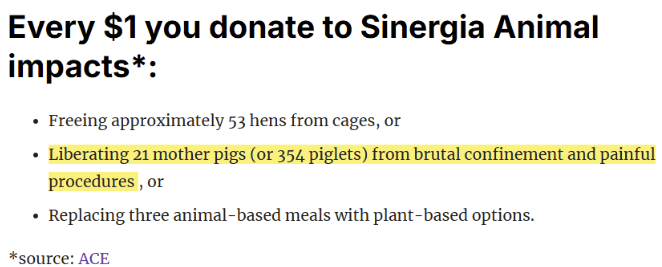

It's also worth noting that quantifying charity impact is messy work, especially in the animal cause area. We should expect people to come to quite different conclusions and be comfortable with that. FarmKind estimated the cost-effectiveness of Sinergia's pig work using the same data as ACE and came to a number of animals helped per dollar that was ~6x lower (but still a crazy number of pigs per dollar). Granted, the difference between ACE's and Vetted Causes' assessments is beyond the acceptable margin of error.

I love this wisdom and agree that most charities' cost effectiveness will be less than they claim. I include our assessment of my own charity in that, and GiveWell's assessments. Especially as causes become more saturated and less neglected. And yes like you say with Animal charities there are more assumptions made and far wider error bars than with human assessment.

I haven't looked (and won't look) into this in detail, but I hope some relatively unmotivated people will compare these analyses in detail.

Hi Aidan, thank you for providing your input to the community.

I think it's pretty safe to assume that the reality of most charities' cost-effectiveness is less than they claim.

It appears we agree that Sinergia is making false claims about helping animals.

We are curious if you think this is proper grounds for not recommending them as a charity.

It appears we agree that Sinergia is making false claims about helping animals.

I can't speak for Aidan, but the word false has certain connotations. Although it doesn't explicitly denote bad faith, it does carry a hint of that aroma in most contexts. I think that's particularly true when it is applied to estimates (such as cost-effectiveness estimates), opinions, and so on.

I think that phrasing should be used with caution. Generally, it would be better to characterize a cost-effectiveness estimate as overly optimistic, inaccurate, flawed, or so on to avoid giving the connotation that comes with false. False could be appropriate if the claims were outside the realm of what a charity could honestly -- but mistakenly -- believe. But I don't think that's what Aidan was saying (that reading would imply he thought "most charities[]" were guilty of promoting such claims rather than merely ones that were overly optimistic).

Thanks for the reply, Jason.

If Sinergia had framed their claims as estimates, we would agree with you.

However, Sinergia states that "every $1 you donate will spare 1,770 piglets from painful mutilations." If someone donates $1 to Sinergia based on this claim and Sinergia does not spare an additional 1,770 piglets from painful mutilations, Sinergia has made a false claim to the donor, and it is fair to state this is the case.

The same applies to their claim that they help 113 million farmed animals every year.

Note: Sinergia could have avoided these issues by stating "we have estimates that state every $1 you donate will spare 1,770 piglets from painful mutilations" and "we have estimates that state we help 113 million farmed animals every year." However, these statements are likely not as effective at convincing people to donate to Sinergia.

In common English parlance, we don't preface everything with "I have estimates that state...".

I don't think any reasonable person thinks that they mean that if they got an extra $1, they'd somehow pay someone for 10 minutes of time to lobby some tiny backyard farm of about 1,770 pigs to take on certain practices. You get to these unit economics with a lot more nuance.

I think a reasonable reader would view these statements as assertions grounded in a cost-effectiveness estimate, rather than as some sort of guarantee that Sinergia could point to 1,770 piglets that were saved as a result of my $1 donation. The reader knows that the only plausible way to make this statement is to rely on cost-effectiveness estimates, so I don't think there's a meaningful risk that the reader is misled here.

I think a reasonable reader would view these statements as assertions grounded in a cost-effectiveness estimate, rather than as some sort of guarantee

If there are no advantages to making these statements factual claims, why didn't Sinergia just state that they are estimates?

I can't believe how often I have to explain this to people on the forum: Speaking with scientific precision makes for writing very few people are willing to read. Using colloquial, simple language is often appropriate, even if it's not maximally precise. In fact, maximally precise doesn't even exist -- we always have to decide how detailed and complete a picture to paint.

If you're speaking to a PhD physicist, then say "electron transport occurs via quantum tunneling of delocalized wavefunctions through the crystalline lattice's conduction band, with drift velocities typically orders of magnitude below c", but if you're speaking to high-school students teetering on the edge of losing interest, it makes more sense to say "electrons flow through the wire at the speed of light!". This isn't deception -- it's good communication.

You can quibble that maybe charities should say "may" or "could" instead of "will". Fine. But to characterize it as a wilful deception is mistaken.

If charities only spoke the way some people on the forum wish they would, they would get a fraction of the attention, a fraction of the donations, and be able to have a fraction of the impact. You'll get fired as a copywriter very quickly if you try to have your snappy call to action say "we have estimates that state every $1 you donate will spare 1,770 piglets from painful mutilations".

Using colloquial, simple language is often appropriate, even if it's not maximally precise. In fact, maximally precise doesn't even exist -- we always have to decide how detailed and complete a picture to paint.

I tend to agree, but historically EA (especially GiveWell) has been critical of the "donor illusion" involved in things like "sponsorship" of children in areas the NGO has already decided to fund by mainstream charities on a similar basis. More explicit statistical claims about future marginal outcomes based on estimates of outcomes of historic campaign spend or claims about liberating from confinement and mutilation when it's one or the other free seem harder to justify than some of the other stuff condemned as "donor illusion".

Even leaning towards the view that it's much better for charities to have effective marketing than statistical and semantic exactness, that debate is moot if estimates are based mainly on taking credit for decisions other parties had already made, as claimed by the Vetted Causes review. If it's true[1] that some of their figures come from commitments they should have known do not exist and laws they should have known were already changed, it would be absolutely fair to characterise those claims as "false", even if it comes from honest confusion (perhaps ACE - apparently the source of the figures - not understanding the local context of Sinergia's campaigns?).

[1] I would like to hear Sinergia's response, and am happy for them to take their time if they need to do more research to clarify.

Thanks for your input David!

If it's true[1] that some of their figures come from commitments they should have known do not exist and laws they should have known were already changed it would be absolutely fair to characterise those claims as "false", even if it comes from honest confusion

We would like to clarify something. Sinergia wrote a 2023 report that states "teeth clipping is prohibited" under Normative Instruction 113/2020. Teeth clipping has been illegal in Brazil since February 1, 2021[1]. In spite of this, Sinergia took credit for alleged commitments leading to alleged transitions away from teeth clipping (see Row 12 for an example).

We prefer not to speculate about whether actions were intentional or not, so we didn't include this in our report. We actually did not include most of our analysis or evidence in the review we published, since brevity is a top priority for us when we write reviews. The published review is only a small fraction of the problems we found.

[1] See Article 38 Section 2 and Article 54 of Normative Instruction 113/2020.

We actually did not include most of our analysis or evidence in the review we published, since brevity is a top priority for us when we write reviews. The published review is only a small fraction of the problems we found.

I'd suggest publishing an appendix listing more problems you believe you identified, as well as more evidence. Brevity is a virtue, but I suspect much of your potential impact lies with moving grantmaker money (and money that flows through charity recommenders) from charities that allegedly inflate their outcomes to those that don't. Looking at Sinergia's 2023 financials, over half came from Open Phil, and significant chunks came from other foundations. Less than 1% was from direct individual donations, although there were likely some passthrough-like donations recommended by individuals but attributed to organizations.

Your review of Sinergia is ~1000 words and takes about four minutes to read. That may be an ideal tradeoff between brevity and thoroughness for a potential three-to-four figure donor, but I think the balance is significantly different for a professional grantmaker considering a six-to-seven figure investment.

You can quibble that maybe charities should say "may" or "could" instead of "will". Fine.

We appreciate that you seem to acknowledge that saying "may" or "could" would be more accurate than saying "will", but we don’t see this as just a minor wording issue.

The key concern is donors being misled. It is not acceptable to use stronger wording to make impact sound certain when it isn't.

If charities only spoke the way some people on the forum wish they would, they would get a fraction of the attention, a fraction of the donations, and be able to have a fraction of the impact.

Perhaps the donations would instead go to charities that make true claims.

Hi everyone,

I’m Carolina, International Executive Director of Sinergia Animal.

I want to acknowledge that members of this community have shared this post with us, and we truly appreciate your engagement and interest in our work. A deep commitment to create real change, transparency and honesty have always been central to our approach, and we will address all concerns accordingly.

To clarify in advance, we have never taken credit for pre-existing or non-existent policies, and we will explain this in our response. We always strive to estimate our impact in good faith and will carefully review our methodology based on this feedback to address any concerns, if valid.

This discussion comes at a particularly busy time for us, as we have been attending EA Global while continuing our critical work across eight countries. We appreciate your patience as we prepare a thorough response.

As a best practice, we believe organizations mentioned by others in posts should have the chance to respond before content is published. We take the principle of the right to reply so seriously that we even extend it to companies targeted in our campaigns or enforcement programs. In that spirit, we will share our response with Vetted Causes via the email provided on their website 24 hours (or as much time as Vetted Causes prefers) before publishing it on the Forum.

The EA community has been a vital supporter of our work, and we hope this serves as a constructive opportunity to provide further insight into our efforts and approach.

Best,

Carolina

Hi Carolina, thank you for the response. Looking forward to your more thorough reply!

They posted about their review of Sinergia on the forum already: https://forum.effectivealtruism.org/posts/YYrC2ZR5pnrYCdSLt/sinergia-ace-top-charity-makes-false-claims-about-helping

I suggest we concentrate discussion there and not here.
