This post is a brief description of CEEALAR’s updated Theory of Change.

With an increasingly high calibre of guests, more capacity, and an improved impact management process, we believe that the Centre for Enabling EA Learning & Research (CEEALAR) is the best it's ever been. As part of a series of posts (see here and here) explaining the value of CEEALAR to potential funders (e.g. you!), we want to briefly describe our updated Theory of Change. We hope readers leave with an understanding of how our activities lead to the impact we want to see.

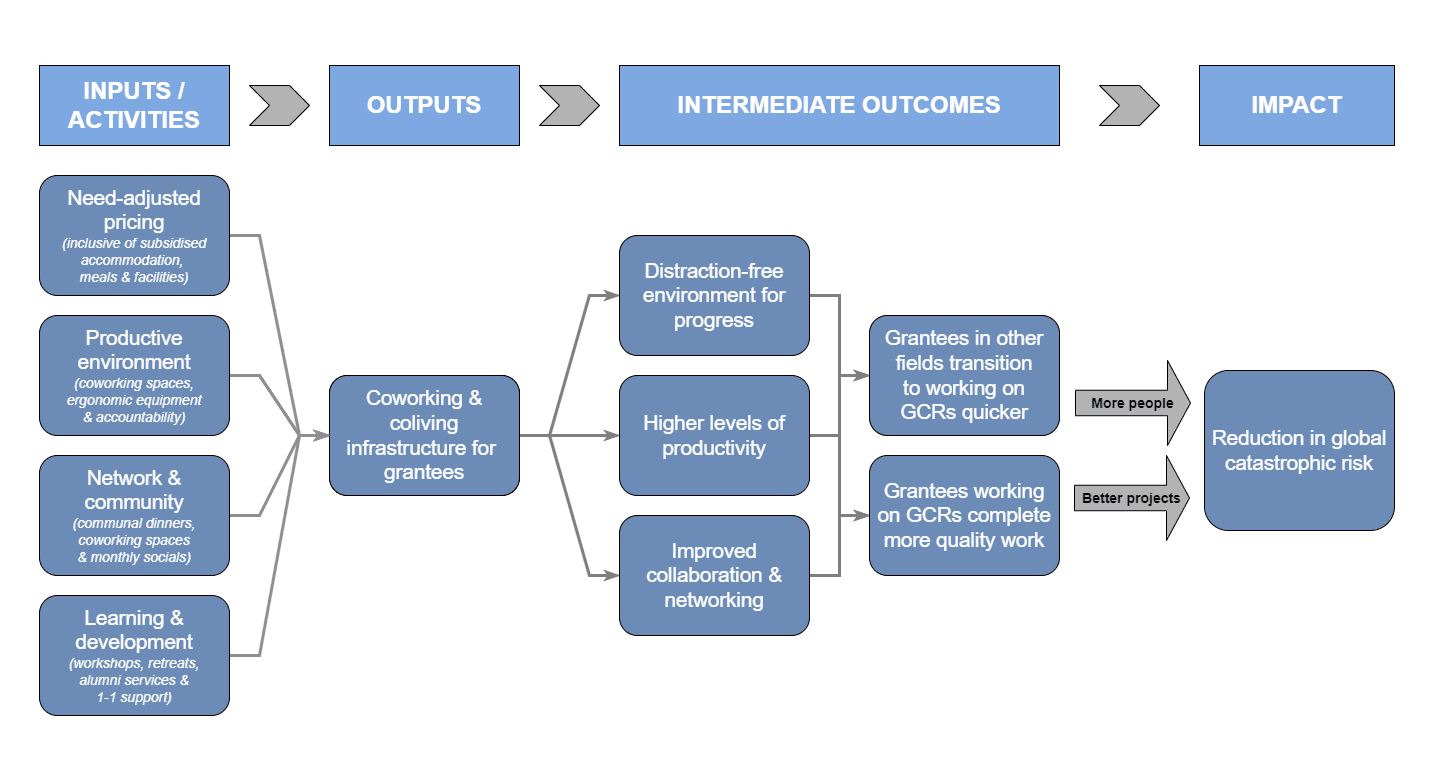

Our Theory of Change

Our goal is to safeguard the flourishing of humanity by increasing the quantity and quality of dedicated EAs working on reducing global catastrophic risks (GCRs) in areas such as Advanced AI, Biosecurity, and Pandemic Preparedness. We do this by providing a tailor-made environment for promising EAs to rapidly upskill, perform research, and work on charitable entrepreneurial projects. More specifically, we aim to help early-career professionals who 1) have achievements in other fields but are looking to transition to a career working on reducing GCRs; or 2) are already working on reducing GCRs and would benefit from our environment.

Eagle-eyed readers will notice we now refer to supporting work “reducing GCRs” rather than simply “high impact work”. We have made this change in our prioritisation as it reflects the current needs of the world and the consequent focus on GCRs by the wider EA movement, as well as the reality of our applicant pool in recent months (>95% of applicants were focused on GCRs).

Our updated theory of change —see below— posits that by providing an environment to such EAs that is highly supportive of their needs, enables increased levels of productivity, and encourages collaboration and networking, we can counterfactually impact their career trajectories and, more generally, help in the prevention of global catastrophic events.

This Theory of Change reflects our belief that there is something broken about the pipeline for both talent and projects in the GCR community, and that programs that simply supply training to early-career EAs are not enough on their own. We fill an important niche because:

- At just $750 to support a grantee for 1 month, we are particularly cost-effective. For funders, this means reduced risk: you can make a $4,500 investment in a person for six months rather than a $45,000 investment, or use that $45,000 for hits-based giving and invest in ten people rather than one.

- Since we remove barriers to entering full-time careers in reducing GCRs, the counterfactual impact is high. Indeed, when considering applications we look for prospective grantees who otherwise would not be able to pursue such careers, be that because they currently lack financial security, connections / credentials, or a conducive environment.

- As grantees do independent research & projects, their work is often cutting-edge. When it comes to preventing global catastrophic events, it is imperative to support ambitious individuals who are motivated to try innovative approaches and further their specific fields.

- Finally, because CEEALAR only offers time-limited stays (the average stay is ~4-6 months) and prioritises selecting agentic individuals as grantees, our alumni are committed to ensuring their learning translates into action.

This final bullet point can be seen in our alumni who have gone on to have impactful careers (see our website for further details). For example:

- Chris Leong, now Principal Organiser for AI Safety Australia and New Zealand (before CEEALAR, abbreviated BC below, he was a graduate likely to take a non-EA corporate role)

- Sam Deverett, now an ML Researcher in the MIT Fraenkel Lab and an incoming AI Futures Fellow (BC he was a corporate data scientist)

- Derek Foster, previously a Research Analyst at Rethink Priorities (BC he was a master's student)

- Hoagy Cunningham, now a Researcher at the Stanford Existential Risks Initiative (BC he was a graduate likely to take a non-EA corporate role)

Your next steps

CEEALAR is just one part of a wider ecosystem. Rather than attempting to fix the entire pipeline for both talent and projects in the GCR community, we hope to serve a niche but important role in helping early-career professionals thrive.

If you have questions about this Theory of Change or our work more generally, feel free to email us at contact@ceealar.org. Additionally, you can:

- Donate now! We support PayPal, Ko-Fi, PPF Fiscal Sponsorship, and bank transfer donations.

- Check out our website and sign up for our mailing list to keep abreast of future updates.

- Read through our forum posts for this giving season here.

