This post is the final part of my summary of The Precipice, by Toby Ord. Previous posts gave an overview of the existential risks. We learned that some of these risks (especially the emerging anthropogenic risks) are alarmingly high. This post explores our place in the story of humanity and the importance of reducing existential risk.

A single human in the wilderness is nothing exceptional. But together humans have the ability to shape the world and determine the future of our species, planet, and universe.

We learn from our ancestors, add minor innovations of our own, and teach our children. We are the beneficiaries of countless improvements in technology, mathematics, language, institutions, culture, and art. These improvements make our lives much better than the lives of our ancestors.[1]

We hope that life will continue to improve. And we could have a lot of time to get things right. Humans have walked the earth for around 200,000 years, but a typical mammalian species lasts for a million years, and our planet will remain habitable for a billion years. This is enough time to eradicate malaria and HIV, eliminate depression and dementia, and create a world free from racism, sexism, torture, and oppression. With so much time ahead of us, we might even figure out how to leave our solar system and settle the stars. If so, we could have a truly staggering number of descendants who can explore the universe and build wonders and masterpieces better than we can imagine. If we go extinct, all of this will be lost.

We have always faced a small risk from asteroids, pandemics, and volcanoes. But it was only recently that we began to face larger risks of our own creation. This period of heightened risk began last century with the invention of nuclear weapons (we now have enough to kill everyone on earth). Over the next century we will face additional risk from emerging developments in biotechnology and AI. In the words of Toby Ord, we are standing on “a crumbling ledge on the brink of a precipice.”

Safeguarding humanity is the defining challenge of our time.[2] If we rise to it, there may be trillions of people living meaningful lives in the future. If we fail, then in all likelihood we will destroy ourselves. The fate of the world rests on our collective decisions.

Why should we try to prevent extinction?

If a large asteroid were hurtling towards Earth, few would argue against building a deflection system. This suggests that our collective inaction is driven by a shared sense that the risk of extinction is low, rather than by a belief that humanity is not worth protecting. However, it is still worth reflecting on why preventing extinction is so important.

A tragedy on the grandest scale

A sudden extinction event, such as an asteroid collision, would involve the gruesome deaths of billions of people, perhaps of everyone. This alone would make it the most severe tragedy in history.

The destruction of our potential

Extinction would destroy our immense potential. Almost all humans that will ever live are yet to be born. Almost all human well-being and flourishing is yet to happen.[3] All of this would be lost if the present generation went extinct.

Intergenerational projects

Our ancestors set in motion great projects for humanity: ending war, forging a just world, and understanding the universe. No single generation can complete these projects. But humanity can, with each generation contributing just a little. We benefit immensely from knowledge and wisdom passed down to us from previous generations, and we owe it to our children and grandchildren to protect this legacy and pass it down to them. Extinction would also destroy all our traditions, languages, poetry, and culture. We ought instead to protect, preserve, and cherish these things.[4]

Civilisational virtues

We are accustomed to understanding virtues on an individual level, but we could also think of the collective virtues of humanity. When we fail to take these risks seriously, we collectively demonstrate a lack of prudence. When we value our own generation so much as to put all future generations at risk, we demonstrate a lack of patience. When we fail to prioritise well-known risks, we display a lack of self-discipline. And when we do not rise to the challenge, we display a lack of hope, perseverance, and responsibility for our own actions.

Cosmic significance

We may be alone in the universe. If there are no aliens, then all life on Earth may have cosmic significance. Humanity would be in a unique position to explore and understand the universe. We would also have a responsibility to all life, as we would be the only ones who could protect it from harm and promote flourishing on other planets.

Uncertainty

Correctly accounting for our uncertainty about the future tends to strengthen the case for protecting our potential because the stakes are asymmetrical: overinvesting in safety is far better than letting everyone die. This means that even if we believe the risks are low, as long as we are not completely confident, some efforts to safeguard humanity are warranted.[5]
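To make the asymmetry concrete, here is a minimal sketch in Python. Every number in it is a hypothetical placeholder chosen for illustration (the credences, the cost of safety work, the size of the loss, and the assumed halving of risk); none of them come from The Precipice. The function names are likewise invented for this example.

```python
# Illustrative only: all numbers are hypothetical placeholders,
# not estimates from The Precipice.

def expected_loss(p_catastrophe, cost_of_safety, loss_if_catastrophe, risk_reduction):
    """Expected loss after paying cost_of_safety to cut the risk by the
    fraction risk_reduction (0.0 means no reduction, 1.0 means eliminated)."""
    remaining_risk = p_catastrophe * (1.0 - risk_reduction)
    return cost_of_safety + remaining_risk * loss_if_catastrophe

# We are unsure how likely catastrophe is, so we spread our credence over
# several candidate probabilities: {probability of catastrophe: credence}.
credences = {0.0001: 0.5, 0.01: 0.4, 0.1: 0.1}

def average_over_credences(cost_of_safety, risk_reduction, loss_if_catastrophe=1_000_000.0):
    """Average the expected loss across everything we think might be true."""
    return sum(
        credence * expected_loss(p, cost_of_safety, loss_if_catastrophe, risk_reduction)
        for p, credence in credences.items()
    )

do_nothing = average_over_credences(cost_of_safety=0.0, risk_reduction=0.0)
invest = average_over_credences(cost_of_safety=100.0, risk_reduction=0.5)

print("Expected loss if we do nothing:", do_nothing)
print("Expected loss if we invest in safety:", invest)
```

Under these made-up numbers, investing looks slightly wasteful in the single most likely scenario (the one where the risk is tiny), yet it comes out well ahead once the less likely but far worse scenarios are averaged in. That is the shape of the argument, not a claim about the real magnitudes.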

Why are existential risks neglected?

Are existential risks neglected?

Humanity spends less money attempting to prevent existential risk than it does on ice cream each year.[6] The riskiest emerging technologies are biotechnology and AI (see parts 2 & 3). Yet the international body responsible for the continued prohibition of bioweapons has an annual budget less than that of an average McDonald’s restaurant.[7] And while we spend billions of dollars improving the capabilities of AI systems, we spend only tens of millions of dollars on ensuring safety. Research similarly neglects the most severe risks: for instance, there is plenty of research on the possible effects of climate change, but scenarios involving more than six degrees of warming are rarely studied or given space in policy discussions (King et al., 2015). There are several reasons for this neglect.

Existential risk as a global public good

When one organisation or government reduces the risk, it improves the situation for everyone in the world. Everyone is therefore incentivised to wait for someone else to solve the problem and to benefit from the hard work of others. This dynamic plays out across generations too: many of the people who would benefit if we safeguard humanity have not even been born yet. We do not yet have robust ways to coordinate on these issues.

Short-term institutions

Additionally, political decisions are notoriously short term. Existential risk tends to be ignored in favour of more urgent issues. That said, most existential risks are new relative to our political institutions, which have been built up over thousands of years. We only began to have the power to destroy ourselves in the middle of the last century, and serious thought about the possibility of extinction has only begun since then. Perhaps our institutions and practices will gradually adapt.

Patterns of thinking

Our brains are not built to grasp these risks intuitively, and there are several patterns of thinking that lead us to neglect existential risk. For instance, we tend to estimate the likelihood of an event based on how easy it is to recall examples of it happening in the past. This availability heuristic serves us well most of the time, but when we are dealing with risks like extinction, for which there are no past examples to recall, it allows us to ignore even large and growing risks. We also lack sensitivity to the scale of various catastrophes.

Sources

Biological Weapons Convention Implementation Support Unit (2019). Biological Weapons Convention—Budgetary and Financial Matters.

Mark Nathan Cohen (1989). Health and the Rise of Civilization. Yale University Press.

Joe Hasell, Max Roser, Esteban Ortiz-Ospina and Pablo Arriagada (2022). Poverty. Our World in Data. (This article has been updated since The Precipice was published in 2020.)

David King, Daniel Schrag, Zhou Dadi, Qi Ye and Arunabha Ghosh (2015). Climate Change: A Risk Assessment. Centre for Science and Policy.

IMARC Group (2019). Ice Cream Market: Global Industry Trends, Share, Size, Growth, Opportunity and Forecast 2019–2024.

McDonald’s Corporation (2018). Form 10-K. (McDonald’s Corporation Annual Report).

Max Roser and Esteban Ortiz-Ospina (2019). Literacy. Our World in Data.

World Health Organization (2016). World Health Statistics 2016: Monitoring Health for the SDGs, Sustainable Development Goals.

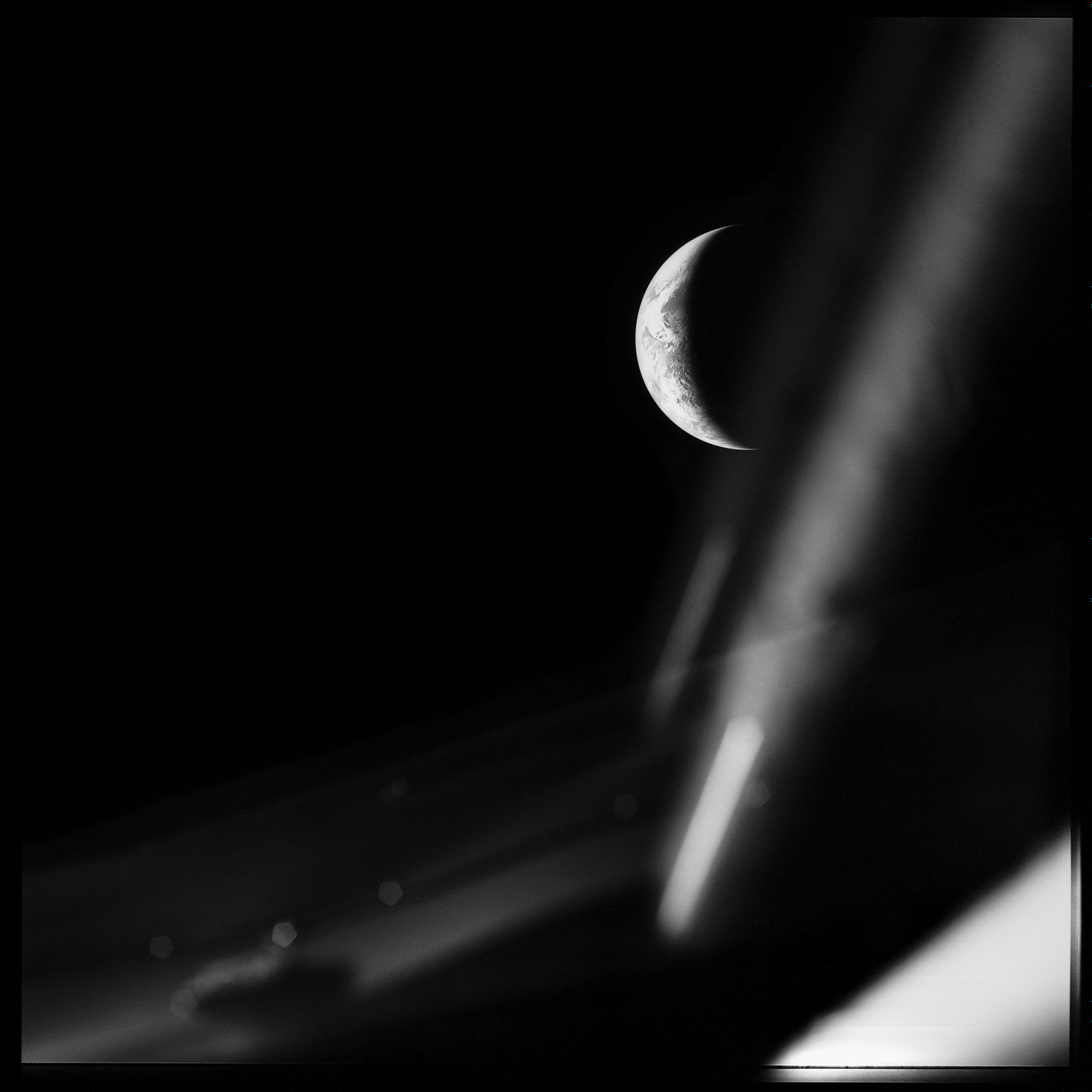

Image of the earth from: www.tobyord.com/earth

[1] While 1 person in 10 is so remarkably poor today that they live on less than $2 per day, before the Industrial Revolution 19 out of 20 people were this poor. Throughout history, only a tiny elite was ever much above subsistence (Hasell, Roser, Ortiz-Ospina & Arriagada, 2022). Our health and education are also much better than ever before. Before the Industrial Revolution, 1 in 10 could read and write; now more than 8 in 10 can (Roser & Ortiz-Ospina, 2019). For 10,000 years, life expectancy was between 20 and 30 years; now it is 72 years (Cohen, 1989; World Health Organization, 2016). According to Toby Ord, “It is not that things are great today, but that they were terrible before” (p. 294).

[2] The importance of safeguarding humanity is familiar at the smallest scale. Consider a child who has a bright future ahead of them. They must be protected from accident, trauma, or lack of education that would prevent their flourishing. We must put safeguards in place to preserve their potential.

[3] Though Ord focusses on humanity, he does not believe that we are the only source of value in the universe, only that we appear to be the only beings capable of shaping the future in a way that is particularly valuable. He also uses the term inclusively, to cover moral agents that we may morph into or create, even ones very different from us.

[4] We may have duties to properly acknowledge and remedy past horrors. If we went extinct, there would be no opportunity to ever do so.

[5] Indeed, even if we thought the future was likely to be worse than nonexistence, protecting our potential might still be worthwhile. First, some risks would still be clearly worth preventing, such as the risk of stable global totalitarianism. Second, there would be a strong reason to gather more information about the value of the future, and it would be incredibly reckless to let humanity destroy itself now.

[6] The ice-cream market was estimated at $60 billion in 2018 (IMARC Group, 2019).

[7] The international body responsible for the continued prohibition of bioweapons has a budget of $1.4 million (Biological Weapons Convention Implementation Support Unit, 2019) compared to an average $2.8 million to run a McDonald’s (McDonald’s Corporation, 2018, pp. 14, 20).