This is a summary of the GPI Working Paper “High risk, low reward: A challenge to the astronomical value of existential risk mitigation” by David Thorstad. The paper is now forthcoming in the journal Philosophy and Public Affairs. The summary was written by Riley Harris.

The value of the future may be vast. Human extinction, which would destroy that potential, would be extremely bad. Some argue that making such a catastrophe just a little less likely would be by far the best use of our limited resources, much more important than, for example, tackling poverty, inequality, global health or racial injustice.[1] In “High risk, low reward: A challenge to the astronomical value of existential risk mitigation”, David Thorstad argues against this conclusion. Even if the risks really are severe, existential risk reduction is important but not overwhelmingly important. In fact, Thorstad finds that the case for reducing existential risk is stronger when the risk is lower.

The simple model

The paper begins by describing a model of the expected value of existential risk reduction, originally developed by Ord (2020; ms) and Adamczewski (ms). This model discounts the value of each century by the chance that an extinction event would have already occurred, and gives a value to actions that can reduce the risk of extinction in that century. According to this model, reducing the risk of extinction this century is not overwhelmingly important. In fact, completely eliminating the risk we face this century could at most be as valuable as we expect this century to be.
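The structure of this model can be sketched in a few lines. The notation is an assumption made for illustration, not taken from the summary: $v$ is the (constant) value of a century, $r$ the (constant) per-century extinction risk, and $f$ the fraction by which an action cuts this century's risk.

```latex
\begin{align*}
V_{\text{baseline}} &= \sum_{i=1}^{\infty} v\,(1-r)^{i} = \frac{v\,(1-r)}{r},\\
V_{\text{action}} &= \sum_{i=1}^{\infty} v\,\bigl(1-(1-f)\,r\bigr)(1-r)^{i-1} = \frac{v\,\bigl(1-(1-f)\,r\bigr)}{r},\\
V_{\text{action}} - V_{\text{baseline}} &= \frac{v\,f\,r}{r} = f\,v .
\end{align*}
```

Under these assumptions the gain never exceeds $v$ (reached at $f = 1$), which is the sense in which eliminating this century's risk is worth at most one century of value.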

This result (that reducing existential risk is not overwhelmingly valuable) can be explained in an intuitive way. If the risk is high, the future of humanity is likely to be short, so the increases in overall value from halving the risk this century are not enormous. If the risk is low, halving the risk would result in a relatively small absolute reduction of risk, which is also not overwhelmingly valuable. Either way, saving the world will not be our only priority.

Modifying the simple model

This model is overly simplified. Thorstad modifies the simple model in three different ways to see how robust this result is: by assuming we have enduring effects on the risk, by assuming the risk of extinction is high, and by assuming that each century is more valuable than the previous. None of these modifications are strong enough to uphold the idea that existential risk reduction is by far the best use of our resources. A much more powerful assumption is needed (one that combines all of these weaker assumptions). Thorstad argues that there is limited evidence for this stronger assumption.

Enduring effects

If we could permanently eliminate all threats to humanity, the model says this would be more valuable than anything else we could do, no matter how small the risk or how dismal each century is (as long as each is still of positive value). However, it seems very unlikely that any action we could take today could reduce the risk to an extremely low level for millions of years, let alone permanently eliminate all risk.

Higher risk

On the simple model, halving the risk from 20% to 10% is exactly as valuable as halving it from 2% to 1%. Existential risk mitigation is no more valuable when the risks are higher.
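A quick numerical check of this claim, as a minimal sketch. It assumes constant century value `v`, background risk `r` in every later century, and risk `d` this century; the function names and the finite `horizon` are illustrative choices, not part of the paper.

```python
def expected_value(v: float, r: float, d: float, horizon: int = 5000) -> float:
    """Expected future value when this century's risk is d and later risk is r.

    Sums v * P(survive through century i) over a long but finite horizon,
    which approximates the infinite sum.
    """
    total = 0.0
    survival = 1.0 - d  # probability of surviving this century
    for _ in range(horizon):
        total += v * survival
        survival *= 1.0 - r  # each later century carries background risk r
    return total


def gain_from_halving(v: float, r: float) -> float:
    """Value added by halving this century's risk from r to r / 2."""
    return expected_value(v, r, r / 2) - expected_value(v, r, r)


high = gain_from_halving(v=1.0, r=0.20)  # 20% -> 10%
low = gain_from_halving(v=1.0, r=0.02)   # 2% -> 1%
print(high, low)
```

Both gains come out at approximately half a century of value: the larger absolute reduction in the high-risk world is offset by its shorter expected future.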

Indeed, the fact that higher existential risk implies a higher discounting of the future indicates a surprising result: the case for existential risk mitigation is strongest when the risk is low. Suppose that each century is more valuable than the last and therefore that most of the value of the world is in the future. Then high existential risk makes mitigation less promising, because future value is discounted more aggressively. On the other hand, if we can permanently reduce existential risk, then reducing risk to some particular level is approximately as valuable regardless of how high the risk was to begin with. This implies that if risks are currently high then much larger reduction efforts would be required to achieve the same value.

Value increases

If all goes well, there might be more of everything we find valuable in the future, making each century more valuable than the previous and increasing the value of reducing existential risk. However, high existential risk discounts the value of future centuries more aggressively. This leads to a race between the mounting accumulated risk and the growing improvements. The final expected value calculation depends on how quickly the world improves relative to the rate of extinction risk. Given current estimates of existential risk, the value of preventing existential risk receives only a modest increase.[2] However, if value grows quickly and we can eliminate most risks, then reducing existential risk would be overwhelmingly valuable; the next section explores this.
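The race between growth and risk can be illustrated numerically. The sketch below is an illustration under stated assumptions, not the paper's own code: it supposes century `i` is worth `v * i` (linear growth) or `v * i**2` (quadratic growth), a constant 20% risk per century, and an action that cuts this century's risk by 10% in relative terms.

```python
from typing import Callable


def gain_from_reduction(
    value_of_century: Callable[[int], float],
    r: float,
    f: float,
    horizon: int = 2000,
) -> float:
    """Expected value added by cutting this century's risk from r to (1 - f) * r.

    Later centuries keep risk r. The infinite sum is truncated at `horizon`.
    """
    gain = 0.0
    # Extra probability of surviving century 1: (1 - (1 - f) * r) - (1 - r)
    extra_first_century_survival = f * r
    # Probability of surviving centuries 2..i, given survival of century 1
    later_survival = 1.0
    for i in range(1, horizon + 1):
        gain += value_of_century(i) * extra_first_century_survival * later_survival
        later_survival *= 1.0 - r
    return gain


v = 1.0  # value of the first century (the unit of account)
constant = gain_from_reduction(lambda i: v, r=0.2, f=0.1)
linear = gain_from_reduction(lambda i: v * i, r=0.2, f=0.1)
quadratic = gain_from_reduction(lambda i: v * i**2, r=0.2, f=0.1)
print(constant, linear, quadratic)
```

Under these assumed growth schedules the gains come out at roughly 0.1, 0.5 and 4.5 centuries of value, which lines up with the 0.5 to 4.5 range quoted in footnote [2]: growth helps, but high risk keeps the total modest.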

The time of perils

So far none of the extensions to the simple model imply reducing existential risk is overwhelmingly valuable. Instead, a stronger assumption is required. Combining elements of all of the extensions to the simple model so far, we could suppose that we are in a short period (less than 50 centuries) of elevated risk, followed by extremely low ongoing risk (less than 1% per century), and that each century is more valuable than the previous. This is known as the time of perils hypothesis. Thorstad explores three arguments for this hypothesis but ultimately finds them unconvincing.
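To see why this hypothesis changes the picture, here is a rough sketch under purely illustrative parameters (none of them taken from the paper): 10 centuries of 20% risk, then 0.1% risk per century afterwards, and an action that cuts this century's risk by 10% in relative terms. For simplicity it holds the value of each century constant, which if anything understates the effect.

```python
from typing import List


def risk_schedule(perils_risk: float, safe_risk: float,
                  perils_length: int, horizon: int) -> List[float]:
    """Per-century risk: perils_risk during the perils, safe_risk afterwards."""
    return [perils_risk if i < perils_length else safe_risk for i in range(horizon)]


def expected_value(risks: List[float], v: float = 1.0) -> float:
    """Sum of v * P(survive through century i) over the (finite) schedule."""
    total, survival = 0.0, 1.0
    for risk in risks:
        survival *= 1.0 - risk
        total += v * survival
    return total


def gain(risks: List[float], f: float) -> float:
    """Value added by cutting only this century's risk by the fraction f."""
    reduced = [risks[0] * (1.0 - f)] + risks[1:]
    return expected_value(reduced) - expected_value(risks)


HORIZON = 50_000  # long enough that the truncated tail is negligible here
constant_risk = risk_schedule(0.2, 0.2, perils_length=0, horizon=HORIZON)
time_of_perils = risk_schedule(0.2, 0.001, perils_length=10, horizon=HORIZON)

simple_gain = gain(constant_risk, f=0.1)
perils_gain = gain(time_of_perils, f=0.1)
print(simple_gain, perils_gain)
```

With these assumed numbers the same action is worth many times more under the time-of-perils schedule than under constant risk, because surviving the perilous period unlocks a long, nearly safe future.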

Argument 1: humanity’s growing wisdom

One argument is that humanity's power is growing faster than its wisdom, and when wisdom catches up, existential risk will be extremely low. Though this argument is suggested by Bostrom (2014, p. 248), Ord (2020, p. 45) and Sagan (1997, p. 185), it has never been made in a precise way. Thorstad considers two ways of making this argument precise, but doesn’t find that they provide a compelling case for the time of perils.[3]

Argument 2: existential risk is a Kuznets curve

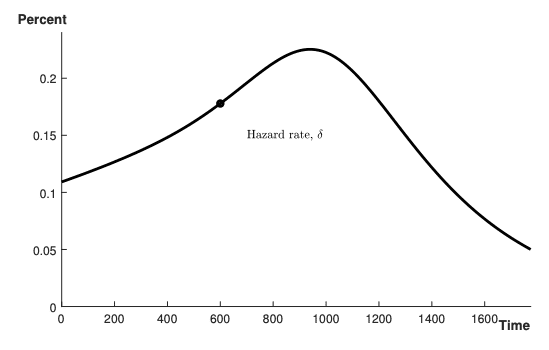

Figure 1: the existential risk Kuznets curve, reprinted from Aschenbrenner (2020).

Aschenbrenner (2020) presents a model in which societies initially accept an increased risk of extinction in order to grow the economy more rapidly. However, when societies become richer, they are willing to spend more on reducing these risks. If so, existential risk would behave like a Kuznets curve, first increasing and then decreasing (see Figure 1).

Thorstad thinks this is the best argument for the time of perils hypothesis. However, the model assumes that consumption drives existential risk, while in practice technology growth plausibly drives the most concerning risks.[4] Without this link to consumption, the model gives no strong reason to think that these risks will be reduced in the future. The model also assumes that increasing the amount of labour spent on reducing existential risks will be enough to curtail these risks, which is at best unclear.[5] Finally, even if the model is correct about optimal behaviour, real-world behaviour may fail to be optimal.

Argument 3: planetary diversification

Perhaps this period of increased risk holds only while we live on a single planet, but later, we might settle the stars and humanity will be at much lower risk. While planetary diversification reduces some risks, it is unlikely to help us against the most concerning risks, such as bioterrorism and misaligned artificial intelligence.[6] Ultimately, planetary diversification does not present a strong case for the time of perils.

Conclusion

Thorstad concludes that it seems unlikely that we live in the time of perils. This implies that reducing existential risk is probably not overwhelmingly valuable and that the case for reducing existential risk is strongest when the risk is low. He acknowledges that existential risk may be valuable to work on, but only as one of several competing global priorities.[7]

References

Thomas Adamczewski (ms). The expected value of the long-term future. Unpublished manuscript.

Leopold Aschenbrenner (2020). Existential risk and growth. Global Priorities Institute Working Paper No. 6-2020.

Nick Bostrom (2013). Existential risk prevention as a global priority. Global Policy 4.

Nick Bostrom (2014). Superintelligence. Oxford University Press.

Carl Sagan (1997). Pale Blue Dot: A Vision of the Human Future in Space. Ballantine Books.

Anders Sandberg and Nick Bostrom (2008). Global Catastrophic Risks Survey. Future of Humanity Institute Technical Report #2008-1.

Toby Ord (2020). The Precipice: Existential Risk and the Future of Humanity. Bloomsbury Publishing.

Toby Ord (ms). Modelling the value of existential risk reduction. Unpublished manuscript.

[1] See Bostrom (2013), for instance.

[2] Participants of the Oxford Global Catastrophic Risk Conference estimated the chance of human extinction was about 19% (Sandberg and Bostrom 2008), and Thorstad is talking about risks of approximately this magnitude. At this level of risk, value growth could make a 10% reduction in total risk 0.5 to 4.5 times as important as the current century.

[3] The two arguments are: (1) humanity will become coordinated, acting in the interests of everyone; or (2) humanity could become patient, fairly representing the interests of future generations. Neither seems strong enough to reduce the risk to below 1% per century. The first argument can be criticised because some countries already contain 15% of the world's population, so coordination is unlikely to push the risks low enough. The second can also be questioned, because it is unlikely that any government will be much more patient than the average voter.

[4] For example, the risks from bioterrorism grow with our ability to synthesise and distribute biological materials.

[5] For example, asteroid risks can be reduced a little with current technology, but to reduce this risk further we would need to develop deflection technology. That technology would likely also be used in mining and military operations, which may well increase the risk from asteroids; see Ord (2020).

[6] See Ord (2020) for an overview of which risks are most concerning.

[7] Where the value of reducing existential risk is around one century of value, Thorstad notes that “...an action which reduces the risk of existential catastrophe in this century by one trillionth would have, in expectation, one trillionth as much value as a century of human existence. Lifting several people out of poverty from among the billions who will be alive in this century may be more valuable than this. In this way, the Simple Model presents a prima facie challenge to the astronomical value of existential risk mitigation.” (p. 5)

By the way, the paper "Existential risk and growth", which seems relevant to this post, was evaluated by The Unjournal – see https://doi.org/10.21428/d28e8e57.51c89928. Please let us know if you found our evaluation useful and how we can do better – contact@unjournal.org

Could you explain why the Time of Perils assumption is stronger? It seems to me to be consistent with rejecting the previous assumptions; for example, you could navigate the Time of Perils but have no impact on future years, especially if the risk there was already very low. Rather than being stronger, it just seems like a different model to me.

I was also disappointed to not see AGI discussed in relation to the Time of Perils. The only mention is this footnote:

This seems very unsatisfactory to me, because as far as I can see (perhaps I am mistaken) AGI is the main reason most people believe in the Time of Perils hypothesis. Declaring it to be out of scope doesn't mean you have struck a blow against Xrisk mitigation, it means you have erected a strawman.

On a much more mundane note, I found this paragraph very confusing:

Doesn't Toby work in the same office as GPI? If the most helpful thing to do would be to ask him for details... why not do that?

This line of reasoning usually relies on the "imminent godlike AGI" hypothesis. Thorstad believes this to be wrong, but it takes a lot of highly involved argumentation to make the case for this (I've taken a stab at it here).

I think he's right to leave it out. One paper should be about one thing, not try to debunk the entire x-risk argument all in one go, otherwise it would just become a sprawling, bloated mess. The important conclusion here is that the astronomical risk hypothesis relies on the time of perils hypothesis: previously I think a lot of people thought they were independent.

I think this (just arguing the Astronomical Risk Hypothesis relies on the Time of Perils Hypothesis (TOPH) pessimism, and leaving it at that) would be a very reasonable thing to do. But that's not what this paper actually does:

The three arguments he did show are the most popular arguments in the academic literature, so it makes sense to give them priority. The "godlike aligned AI will fix everything forever" hypothesis might be popular within a few subcultures, but in my opinion it is severely unproven.

You have changed my mind though, I think that he should have addressed it with more than a footnote. If he added in a section briefly explaining why he thought it was bunk, would you be satisfied?

This seems false, since the construction of AGI is probably an event we can influence. Having aligned AGI should reduce other x-risks permanently.

It could reduce other x-risks, but the hypothesis that it would lower all x-risks to almost zero for the rest of time seems like wishful thinking.

One of the interesting calculations from the paper: if the value of 1 century is v, and the current risk of extinction every century is 20%, and you invent an AGI that permanently lowers this by half to 10% for the rest of time... you would only increase the expected value in the world from 4*v to 9*v. Definitely a good result, but pretty far from the astronomical result you might expect.
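For reference, those two figures follow from a constant-risk geometric sum. This is a sketch in illustrative notation, writing $V(r)$ for the expected value of the future when the risk is permanently $r$ per century:

```latex
V(r) = \sum_{i=1}^{\infty} v\,(1-r)^{i} = \frac{v\,(1-r)}{r},
\qquad V(0.2) = \frac{0.8\,v}{0.2} = 4v,
\qquad V(0.1) = \frac{0.9\,v}{0.1} = 9v .
```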

What is a plausible source of x-risk that is 10% per century for the rest of time? It seems pretty likely to me that not long after reaching technological maturity, future civilization would reduce x-risk per century to a much lower level, because you could build a surveillance/defense system against all known x-risks, and not have to worry about new technology coming along and surprising you.

It seems that to get a constant 10% per century risk, you'd need some kind of existential threat for which there is no defense (maybe vacuum collapse), or for which the defense is so costly that the public goods problem prevents it from being built (e.g., no single star system can afford it on their own). But the likelihood of such a threat existing in our universe doesn't seem that high to me (maybe 20%?) which I think upper bounds the long term x-risk.

Curious how your model differs from this.

What does technological maturity mean?

“the attainment of capabilities affording a level of economic productivity and control over nature close to the maximum that could feasibly be achieved.” (Nick Bostrom (2013) ‘Existential risk prevention as global priority’, Global Policy, vol. 4, no. 1, p. 19.)

It would depend on if the risk from AGI is a one time risk that goes away when humans figure out alignment or an ongoing effort.

Alignment may be impossible. As a SWE working in AI I don't know of any plausible method for the kind of alignment discussed here.

Risk mitigation is possible, in that we can stack together serial steps that must fail for the AGI to escape and do meaningful damage, as well as countermeasures (pre constructed weapons and detection institutions) ready to respond when this happens.

But the risk remains nonzero and recurring. Each century there is always this risk that the AGIs escape human control, and the risk remains as long as "humans" are significantly stupider and less rational than AGI. I don't know if "humans augment themselves so much to compete they are not remotely humanlike" counts as the extinction of humanity or not.

Executive summary: The paper challenges the view that reducing existential risk should be an overwhelming priority, arguing this is only justified under a strong "time of perils" assumption which currently seems unlikely.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

+1

Isn't expected value calculated as the probability times the utility? If so, isn't the "higher risk" part wrong if one simply looks at it like this? (20% to 10% would be 10x the impact of 2% to 1%)

(I could be missing something here, please correct me in that case)

My understanding is that, at a high level, this effect is counterbalanced by the fact that a high rate of extinction risk means the expected value of the future is lower. In this example, we only reduce the risk this century to 10%, but next century it will be 20%, and the one after that it will be 20% and so on. So the risk is 10x higher than in the 2% to 1% scenario. And in general, higher risk lowers the expected value of the future.

In this simple model, these two effects perfectly counterbalance each other for proportional reductions of existential risk. In fact, in this simple model the value of reducing risk is determined entirely by the proportion of the risk reduced and the value of future centuries. (This model is very simplified, and Thorstad explores more complex scenarios in the paper).

Yes, essentially preventing extinction "pays off" more in the low risk situation because the effects ripple on for longer.

Mathematically, if the value of one century is v, the "standard" chance of extinction is r, and the rate of extinction just for this century is d, then the expected value of the remaining world will be

$$v(1-d) + v(1-d)(1-r) + v(1-d)(1-r)^2 + \dots = \frac{v(1-d)}{r}$$

(using geometric sums).

In the world where background risk is 20%, and we reduce this century's risk from 20% to 10%, the total value goes from 4*v to 4.5*v.

In the world where background risk is 2%, and we reduce this century's risk from 2% to 1%, the total value goes from 49*v to 49.5*v.

In both cases, our intervention has added 0.5v to the total value.

Alright, that makes sense; thank you!

There is no trade-off: social stabilization and international pacification are the main tools to reduce existential risk, which in my view mainly comes from nuclear war.

https://forum.effectivealtruism.org/posts/6j6qgNa3uGmzJEMoN/artificial-intelligence-as-exit-strategy-from-the-age-of

The counterargument is that nuclear war was maybe never existential. Even at Cold War peaks, there were not enough warheads and missiles, and they were not targeted on enough industrial centers on Earth, to drive humanity extinct. Entire continents and global regions (Africa, South America) would have been uninvolved in the war and had enough copies of human knowledge and tools to respond to the new reality. Even global cooling scenarios ignore humans building makeshift greenhouses or other countermeasures.

Theoretically a hostile AGI with unlimited access to self replicating machinery, and freedom from interference from human militaries with comparable technology, could locate and kill every human on earth.

A relevant difference is that nuclear bombs already exist, and AGI does not…

Agree. Also the very idea of a "hostile AGI" being able to exist assumes a bunch of things.

Notably:

(1) humans build large powerful models with the cognitive capacity to even be hostile

(2) it is possible for a model to be optimized to actually run on stolen computers. This may be flat impossible due to fundamental limits on computation

(3) once humans learn of the escaped hostile model they are ineffective in countermeasures or actually choose to host the model and trade with it instead of licensing more limited safer models

(4) the hostile model is many times more intelligent than safer cognitively restricted models

(5) intelligence has meaningful benefits at very high levels, it doesn't saturate, where "5000 IQ" is meaningfully stronger in real world conflicts than "500 IQ" where the weaker model has a large resource advantage

I don't believe any of these 5 things are true based on my current knowledge, and all 5 must be true or AGI doom is not possible.