In the previous post, I introduced multiple methods for probabilistically modeling the evolution of civilization, which I’ve gradually been implementing in code.

In the process, I’ve tweaked the simplest (‘cyclical’) model. I’ve removed the ‘survival’ state, based on Luisa’s overall conclusion that we would almost certainly get through such a state (and commenters seem to view even her as too pessimistic).

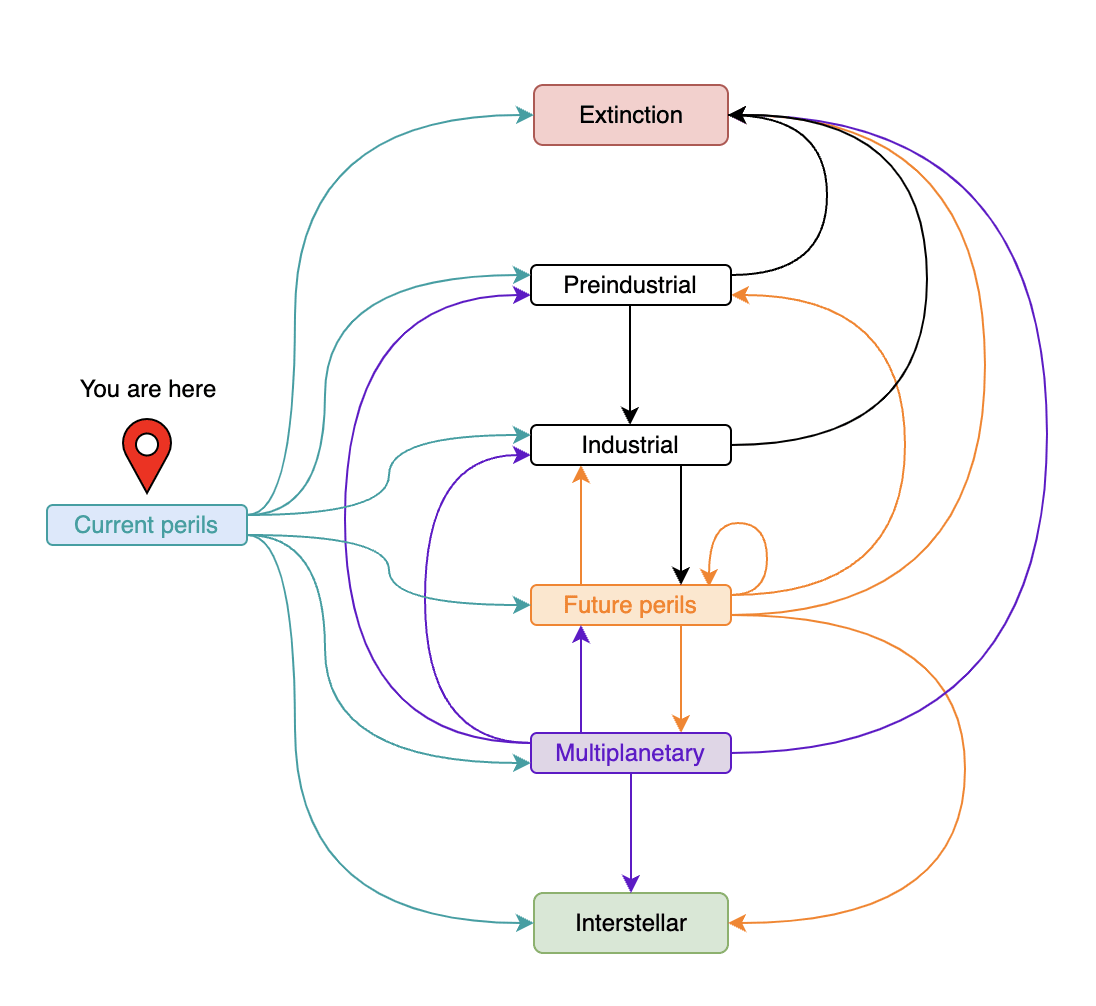

I’ve also divided the ‘time of perils’ into our current state, which we will never return to, and all future times of perils. The idea is that one might want to avoid the complexity of the other models while still believing that future ‘times of perils’ could differ substantially from ours in fairly consistent ways:

- They would all need to restart from the beginning of their own equivalent of the modern age, and so would have to navigate their own equivalents of the Cuban Missile Crisis and other nuclear near misses just to get back to today’s level of technology.

- They would all have at least one cataclysm and at least one earlier civilisation’s technology to learn from. Perhaps each civilisation will effectively absorb the insights of its predecessor, or perhaps each civilisation’s technology will be similar enough that having more history to look back on doesn't meaningfully improve insight.

- They would all lack most or all of the fossil fuels our civilisation bootstrapped itself on. Other resources may not be used up in the same way (for example, while we might 'use up' phosphorus, this effectively just moves the atoms to less accessible places, a process which future civilisations might slow or even reverse).

The revised model allows the user to express some of this nuance while still being computationally simple enough to use interactively. It now looks like this:

The states are now:

Extinction: Extinction of whatever type of life you value, at any time between now and our sun’s death (i.e. any case where we've failed to develop interplanetary technology that lets us escape that event).

Preindustrial: Civilisation has regressed to pre-first-industrial-revolution-equivalent technology.

Industrial: Civilisation has technology comparable to that of the first industrial revolution, but does not yet have the technological capacity (e.g. nuclear weapons, biopandemics) to do enough civilisational damage to regress to a previous state. A formal definition of industrial revolution technology is tricky, but the exact choice seems unlikely to dramatically affect probability estimates. In principle it could be something like ‘kcals captured per capita go up more than 5x as much in a 100-year period as they did in any of the previous five 100-year periods’.
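The illustrative kcal criterion is easy to state in code. The sketch below is hypothetical: the function name, its parameters and the sample figures are invented for illustration, and it assumes "go up" means the *absolute* increase in kcals per capita over each 100-year period (a proportional reading would be equally defensible).

```python
def is_industrial_takeoff(kcal_per_capita, window=5, multiple=5.0):
    """Hypothetical check of the illustrative industrial-revolution criterion.

    kcal_per_capita: kcals captured per capita at the end of consecutive
    100-year periods, oldest first. Returns True if the increase over the
    latest period is more than `multiple` times the increase over each of
    the previous `window` periods.
    """
    if len(kcal_per_capita) < window + 2:
        raise ValueError("need at least window + 2 data points")
    # Increase during each 100-year period (absolute, not proportional: an assumption).
    increases = [b - a for a, b in zip(kcal_per_capita, kcal_per_capita[1:])]
    latest = increases[-1]
    previous = increases[-1 - window:-1]
    return all(latest > multiple * inc for inc in previous)


# Made-up series: steady +10 per period, then a jump of +150 (takeoff) or +30 (no takeoff).
print(is_industrial_takeoff([1000, 1010, 1020, 1030, 1040, 1050, 1200]))
print(is_industrial_takeoff([1000, 1010, 1020, 1030, 1040, 1050, 1080]))
```

With a steady increase of 10 per period, a jump of 150 clears the 5x threshold of 50, while a jump of 30 does not.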

Current perils: Our current state since 1945, when we developed nuclear weapons - what Carl Sagan called the ‘time of perils’.

Future perils: Civilisation has suffered a serious setback and has (or has regained) technology capable of threatening another serious contraction (such as nuclear weaponry or misaligned AI), but does not yet have multiple spatially isolated self-sustaining settlements. Arguably we could transition to this state directly from our current one if there were a global shock severe enough to destroy much modern technology, but small enough to leave our nuclear arsenals and a decent fraction of industry intact or very quickly recoverable.

Multiplanetary: Civilisation has progressed to having at least two spatially isolated self-sustaining settlements, each capable of continuing in an advanced enough technological state to produce further such settlements even if all the others disappeared. Each settlement must be physically isolated enough to be unaffected by at least one type of technological milestone catastrophe that strikes the others (e.g. another planet, a hollowed-out asteroid or an extremely well-maintained bunker system). Although each settlement may face local threats, we might assume that the risk to humanity as a whole, of either extinction or regression to a less technologically advanced state, declines as the number of settlements increases.
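To make the last sentence concrete: under the strong, purely hypothetical assumption that each settlement is lost independently with the same probability in a given period, the chance that all of them are lost at once shrinks geometrically with their number. Positively correlated risks (e.g. a threat that can reach every settlement) would typically make the true joint risk higher and the decline slower. The function name and the 10% figure are illustrative only, not estimates from this post.

```python
def prob_all_settlements_lost(p_single: float, n_settlements: int) -> float:
    """Probability that every settlement is lost in the same period.

    Assumes (hypothetically) that each settlement is lost independently
    with the same probability p_single. Positively correlated risks would
    typically make the true figure higher than this.
    """
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be between 0 and 1")
    if n_settlements < 1:
        raise ValueError("need at least one settlement")
    return p_single ** n_settlements


# With an illustrative 10% per-settlement loss probability, the joint risk
# falls by a factor of 10 for each additional settlement.
for n in range(1, 5):
    print(n, prob_all_settlements_lost(0.1, n))
```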

Interstellar: Civilisation has progressed to having at least two self-sustaining colonies in different star systems, or gains existential security in some other way.
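Taken together, these states form an absorbing Markov chain: Extinction and Interstellar are absorbing, and Current perils can be left but never re-entered. The following is a minimal, hypothetical sketch, not the post's own implementation. Each row lists the probabilities of the *next* state the civilisation moves to; every number is made up purely for illustration, and the state keys and the `absorption_probabilities` function are invented names. It pushes probability mass through the chain until almost all of it has reached Extinction or Interstellar.

```python
from typing import Dict

# Hypothetical, illustrative numbers only: NOT estimates from this post.
# Each row gives the probability of the next state the civilisation moves to.
TRANSITIONS: Dict[str, Dict[str, float]] = {
    "current_perils": {"extinction": 0.05, "preindustrial": 0.10, "industrial": 0.05,
                       "future_perils": 0.10, "multiplanetary": 0.70},
    "future_perils": {"extinction": 0.05, "preindustrial": 0.15, "industrial": 0.10,
                      "multiplanetary": 0.70},
    "preindustrial": {"extinction": 0.02, "industrial": 0.98},
    "industrial": {"extinction": 0.01, "future_perils": 0.99},
    "multiplanetary": {"extinction": 0.01, "preindustrial": 0.02,
                       "future_perils": 0.02, "interstellar": 0.95},
}
ABSORBING = {"extinction", "interstellar"}


def absorption_probabilities(start, transitions=TRANSITIONS, tol=1e-12, max_steps=100_000):
    """Return the probability of ending in each absorbing state from `start`."""
    for state, row in transitions.items():
        if abs(sum(row.values()) - 1.0) > 1e-9:
            raise ValueError(f"row for {state!r} does not sum to 1")
    if start in ABSORBING:
        return {s: float(s == start) for s in ABSORBING}
    transient = {start: 1.0}          # mass still in non-absorbing states
    absorbed = {s: 0.0 for s in ABSORBING}
    for _ in range(max_steps):
        if sum(transient.values()) < tol:
            break
        nxt: Dict[str, float] = {}
        for state, mass in transient.items():
            for target, p in transitions[state].items():
                if target in ABSORBING:
                    absorbed[target] += mass * p
                else:
                    nxt[target] = nxt.get(target, 0.0) + mass * p
        transient = nxt
    return absorbed


print(absorption_probabilities("current_perils"))
```

Because no row lists `current_perils` as a target, the chain can only pass through our current state once, which captures the split between current and future perils while keeping the model small enough to re-run instantly as the numbers change.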

For a more comprehensive explanation of these states, see the previous post. In the next post I'll introduce the implementations of these models that I've been working on.

Hi Arepo,

Do you mean human extinction? In the last post, you had (emphasis mine):

Good catch, thanks. I'm not sure why or how I changed that - I've set it to match the previous post now.

[ETA] changed again for greater clarity