Link to tool: https://parliament.rethinkpriorities.org

(1 min) Introductory Video

(6 min) Basic Features Video

Executive Summary

- This post introduces Rethink Priorities’ Moral Parliament Tool, which models ways an agent can make decisions about how to allocate goods in light of normative uncertainty.

- We treat normative uncertainty as uncertainty over worldviews. A worldview encompasses a set of normative commitments, including first-order moral theories, values, and attitudes toward risk. We represent worldviews as delegates in a moral parliament who decide on an allocation of funds to a diverse array of charitable projects. Users can configure the parliament to represent their own credences in different worldviews and choose among several procedures for finding their best all-things-considered philanthropic allocation.

- The relevant procedures are metanormative methods. These methods take worldviews and our credences in them as inputs and produce some action guidance as an output. Some proposed methods have taken inspiration from political or market processes involving agents who differ in their conceptions of the good and their decision-making strategies. Others have modeled metanormative uncertainty by adapting tools for navigating empirical uncertainty.

- We show that empirical and metanormative assumptions can each make large differences in the outcomes. Moral theories and metanormative methods differ in their sensitivity to particular changes.

- We also show that, taking the results of the EA Survey as inputs to a moral parliament, no one portfolio is clearly favored. The recommended portfolios vary dramatically based on your preferred metanormative method.

- By modeling these complexities, we hope to facilitate more transparent conversations about normative uncertainty, metanormative uncertainty, and resource allocation.

Introduction

Decisions about how to do the most good inherently involve moral commitments about what is valuable and which methods for achieving the good are permissible. However, there is deep disagreement about central moral claims that influence our cause prioritization:

- How much do animals matter?

- Should we prioritize present people over future people?

- Should we aim to maximize overall happiness or also care about things like justice or artistic achievement?

The answers to these questions can have significant effects on which causes are most choiceworthy. Understandably, many individuals feel some amount of moral uncertainty, and individuals within groups (such as charitable organizations and moral communities) may have different moral commitments. How should we make decisions in light of such uncertainty?

Rethink Priorities’ Moral Parliament Tool allows users to evaluate decisions about how to allocate goods in light of uncertainty over different worldviews. A worldview encompasses a set of normative commitments, including first-order moral theories, values, and attitudes toward risk.[1] We represent worldviews as delegates in a moral parliament who decide on an allocation of funds to a diverse array of charitable projects. Users can configure the parliament to represent their own credences in different worldviews and choose among several procedures for finding their best all-things-considered philanthropic allocation.

How does it work?

The Moral Parliament tool has three central components: Worldviews, Projects, and Allocation Strategies for making decisions in light of worldview uncertainty. It embodies a three-stage strategy for navigating uncertainty:

- What are the worldviews in which I place some non-trivial credence?

- What do they individually recommend that I do?

- How do I aggregate and arbitrate among these recommendations to come up with an all-things-considered judgment about what to do?

Worldviews

A worldview encompasses a broad set of commitments about what matters and how we should act. This includes views about who and what matters and how we should act in light of risk. In some cases, a worldview might speak directly to what we should do. For example, one that says that only humans are morally considerable will place no intrinsic moral value on efforts to humanely farm shrimp. However, in many cases, this is not so obvious, and we must do more inferential work. We develop a bottom-up process for generating the degrees of normative support that a particular worldview assigns to various projects.

We characterize a worldview by the normative importance it places on determinants of five central components:

- Beneficiaries: what kinds of creatures matter? What moral weights should we assign to humans and other kinds of animals?

- Population: how much does it matter whether an individual is currently alive, will exist soon, or will exist in the future?

- Effect Type: how much should we care about adding or creating good things, as opposed to removing or preventing bad things?

- Value Type: what contributes to the value of a state of affairs? How much do pleasure and pain, flourishing, and justice and equity matter when evaluating some outcome?

- Risk: how much risk should we be willing to take when trying to do good? Should we be risk-averse and try to avoid bad outcomes? Should we be more risk-tolerant and be willing to take risks to achieve good outcomes?

A worldview assigns a value to each determinant, reflecting the normative importance it deems it to have, with 1 reflecting full importance and 0 reflecting no importance.[2]

For example, consider one difference between Benthamite hedonistic consequentialism and welfarist consequentialism. According to the former, only hedonic states, like pleasures and pains, have value and disvalue; according to the latter, non-hedonic welfare states, like the degree to which an individual is flourishing (understood in terms of having more sophisticated experiences or desires or in terms of being able to secure various objective goods), also have value. This difference manifests in the importance that each assigns to various determinants of moral value. The hedonistic consequentialist assigns moral considerability to humans, mammals and birds, fish, and invertebrates in accordance with their ability to experience hedonic states.[3] A welfarist consequentialist in our parliament assigns some considerability to all kinds of creatures but assigns much lower scores to non-human animals, due to their presumed inability to flourish in the relevant sense. Likewise, the hedonistic consequentialist assigns full importance to valenced experiences and none to flourishing, while the welfarist consequentialist assigns equal weight to both.

For a Kantian, morality consists in obeying universal ethical rules which require us to respect the rights of rational creatures. We assume, therefore, that she will assign full moral weight to humans and none to other animals. She cares naught for pleasure and pain, only caring about justice and equity. Duties against harm are much stronger than duties of benevolence, so she cares much more about averting bad outcomes and is highly risk-averse.

We have characterized a diverse array of worldviews that reflect positions about which many in the EA community might be uncertain. The settings of each worldview are configurable, so users can create worldviews that align with their own normative commitments.
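
To make the idea of a configurable worldview concrete, here is a minimal sketch of how one might be stored as a table of importance weights. The `Worldview` class, the category names, and the determinant names are hypothetical illustrations, not the tool's actual schema. The numbers follow the prose descriptions above (the Kantian's zero weight on flourishing is inferred from "only caring about justice and equity") and are not the tool's defaults.

```python
from dataclasses import dataclass, field


@dataclass
class Worldview:
    """A worldview as importance weights over determinants (hypothetical schema)."""
    name: str
    # category -> determinant -> importance, where 1 is full and 0 is none
    weights: dict = field(default_factory=dict)

    def __post_init__(self):
        # Reject any weight outside the [0, 1] range described in the post.
        for category, determinants in self.weights.items():
            for determinant, weight in determinants.items():
                if not 0.0 <= weight <= 1.0:
                    raise ValueError(
                        f"{self.name}: {category}/{determinant} must be in [0, 1]"
                    )


# Illustrative values taken from the prose, not from the tool's settings.
kantian = Worldview(
    name="Kantianism",
    weights={
        "beneficiaries": {"humans": 1.0, "mammals_and_birds": 0.0,
                          "fish": 0.0, "invertebrates": 0.0},
        "value_type": {"pleasure_and_pain": 0.0, "flourishing": 0.0,
                       "justice_and_equity": 1.0},
    },
)

hedonist = Worldview(
    name="Hedonistic consequentialism",
    weights={"value_type": {"pleasure_and_pain": 1.0, "flourishing": 0.0}},
)
```

Editing a worldview then amounts to changing entries in `weights`, which is roughly what the sliders in the tool let users do.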

Projects

Projects are philanthropic ventures attempting to improve the world in some way. The tool contains 19 hypothetical projects that vary significantly in their goals and their likelihoods of success. Some projects promote global health, others promote the arts, and yet others strive to secure the long-term future. Projects were constructed to highlight differences among worldviews and are not meant to be completely accurate depictions of any actual projects that they may resemble.

We characterize each project by how much it promotes the various determinants of moral value that we used to characterize worldviews and by the scale (or magnitude) of its effects. For each category of value, we specify what proportion of its benefits accrue to that kind of value. For example, in the Beneficiaries category, 90% of a project’s benefits might go to humans and 10% to mammals and birds. In the Population category, a project to mitigate existential risk might give most of its benefits to beings who will someday exist, while a global health project might give most of its benefits to currently existing beings.

The Scale of a project specifies the likely magnitude of the project, if it were to succeed.[4] For example, a project that successfully averts an existential catastrophe will have an enormous scale, relative to a more modest global health project. The overall cost-effectiveness of a project is determined by its scale and its probability of success. This is captured by the probability distribution over possible outcomes in the Risk tab. Note that Scale must be interpreted with some care, as the value of a project is inherently relative to a particular worldview. For example, a shrimp welfare project might have an enormous scale if you assign high moral weight to invertebrates and zero scale if you think they don’t matter. Scale is the default magnitude of value of a successful project, were you to fully value all of the things it does. This default magnitude is adjusted for the chance of futility, extreme success, and accidental backfiring according to the project’s risk settings.
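
As a rough illustration of how a default scale could be adjusted for futility, extreme success, and backfiring, the sketch below computes a risk-neutral expected value from a small outcome distribution. The function name, the outcome labels, the probabilities, and the multipliers are all assumptions made up for this example. The tool's Risk tab may represent outcomes differently, and risk-averse worldviews would not simply take this expectation.

```python
import math


def expected_value(scale, outcomes):
    """Risk-neutral expected value of a project.

    `outcomes` maps a label to (probability, multiplier). The multiplier
    rescales `scale`: 0 means futile, 1 means success as planned, values
    above 1 mean extreme success, and negative values mean backfire.
    """
    total_probability = sum(p for p, _ in outcomes.values())
    if not math.isclose(total_probability, 1.0):
        raise ValueError("outcome probabilities must sum to 1")
    return scale * sum(p * m for p, m in outcomes.values())


# Hypothetical risk profile for a project with scale 100.
outcomes = {
    "futile": (0.50, 0.0),
    "success": (0.40, 1.0),
    "extreme_success": (0.05, 10.0),
    "backfire": (0.05, -1.0),
}
ev = expected_value(100.0, outcomes)  # 100 * (0.40 + 0.50 - 0.05)
```

In this made-up case the small chance of extreme success contributes more to the expectation than the likelier ordinary success, which is one way a project's risk settings can dominate its headline scale.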

Users can modify these scores, either to reflect their best judgments about the effects of a particular charity or to create their own charitable project.

We now have a score for how much each determinant contributes to utility according to each theory and a score for how much of a charity’s benefits accrue to each determinant. These are combined to yield an overall utility (where this should be interpreted as the degree of normative support) for each project by the lights of each worldview. This set of utilities will be the input for the next stage of the process.
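
The post does not spell out the exact combination rule, so the following is only one plausible sketch. It assumes that, within each category, a worldview's weights are averaged using the project's benefit shares, and that the per-category scores are then multiplied together with the project's scale. All names and numbers are hypothetical, apart from the 90%/10% split borrowed from the example above.

```python
def category_score(worldview_weights, project_shares):
    """Share-weighted average of a worldview's importance weights in one category."""
    return sum(worldview_weights.get(determinant, 0.0) * share
               for determinant, share in project_shares.items())


def utility(worldview, project):
    """Scale times the product of per-category scores (an assumed rule)."""
    score = project["scale"]
    for category, shares in project["shares"].items():
        score *= category_score(worldview[category], shares)
    return score


# Hypothetical project: 90% of benefits to humans, 10% to mammals and birds.
project = {
    "scale": 100.0,
    "shares": {
        "beneficiaries": {"humans": 0.9, "mammals_and_birds": 0.1},
        "population": {"present": 0.8, "future": 0.2},
    },
}

# Two hypothetical worldviews that both ignore future individuals.
animal_inclusive = {
    "beneficiaries": {"humans": 1.0, "mammals_and_birds": 0.5},
    "population": {"present": 1.0, "future": 0.0},
}
humans_only = {
    "beneficiaries": {"humans": 1.0, "mammals_and_birds": 0.0},
    "population": {"present": 1.0, "future": 0.0},
}

u_inclusive = utility(animal_inclusive, project)  # 100 * 0.95 * 0.8
u_humans = utility(humans_only, project)          # 100 * 0.90 * 0.8
```

Under this assumed rule, a worldview that places zero weight on every determinant a project serves within some category assigns the project zero utility, which matches the shrimp example given earlier.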

Metanormative parliament

How should these utilities be aggregated to arrive at one’s all-things-considered judgment about how to allocate resources? In the tool, users will populate a moral parliament with delegates of those worldviews they want to compare. You can represent the credences that you have in each worldview by giving them proportional representation in the parliament. For example, if you are 50% confident in both Kantianism and common sense, your parliament could consist of 10 delegates for each.
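
Proportional representation can be sketched as a small apportionment routine. The version below uses the largest-remainder method to turn credences into whole delegates. That choice, like the function name, is an assumption for illustration, since in the tool users set delegate counts by hand.

```python
def seats_from_credences(credences, total_seats):
    """Apportion delegates in proportion to credences (largest-remainder method)."""
    total = sum(credences.values())
    quotas = {name: c / total * total_seats for name, c in credences.items()}
    # Give every worldview the whole-number part of its quota first.
    seats = {name: int(q) for name, q in quotas.items()}
    leftover = total_seats - sum(seats.values())
    # Hand out remaining seats to the largest fractional remainders.
    by_remainder = sorted(quotas, key=lambda n: quotas[n] - seats[n], reverse=True)
    for name in by_remainder[:leftover]:
        seats[name] += 1
    return seats


parliament = seats_from_credences({"Kantianism": 0.5, "Common Sense": 0.5}, 20)
```

With equal credence in two worldviews and 20 seats, each receives 10 delegates, as in the example above.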

A metanormative method takes worldviews and our credences in them as inputs and produces some action guidance as an output. Some proposed methods have taken inspiration from political or market processes involving agents who differ in their conceptions of the good and their decision-making strategies. Others have modeled metanormative uncertainty by adapting tools for navigating empirical uncertainty. The Moral Parliament tool allows users to choose from several strategies for aggregating and arbitrating the judgments of worldviews that embody very different commitments about how to navigate moral uncertainty. For example, My Favorite Theory selects the worldview of which you are most confident and does what it says, while Approval Voting selects the allocation that is most widely approved of by the different worldviews. An exploration of the commitments, motivations, and effects of different allocation strategies can be found in Metanormative Methods.

The Moral Parliament Tool at work

(How) do empirical assumptions matter?

Uncertainties about scale

To explore the effects of empirical uncertainty in the moral parliament tool, we will focus on scale, a number that represents how much value the project would likely create if it succeeded, if you fully valued everything it would do.[5] Scale allows us to distinguish the descriptive aspects of a project’s outcome, which will be invariant across worldviews, from its normative aspects, which will be relative to worldviews.

Still, judgments about scale involve significant empirical and theoretical uncertainties. Consider, for example, what kinds of considerations go into assigning a scale to the Cassandra Fund, a project to increase public awareness of existential threats in order to pass safety legislation.[6] Suppose the project succeeds and averts an existential threat. What is the value of the future whose termination it prevented? This depends on growth and risk trajectories over the long-run future, as well as how much value each individual will experience. The future could be long, grand, and happy, or it could be short, small, and miserable. There are also theoretical questions that arise: should the Cassandra Fund get all the credit for the future it protected? Or should it only get counterfactual credit for part of the future?

Depending on how one answers these questions, widely different values can be given for a project’s scale. We acknowledge that our model inherits some of the deep uncertainties that beset EA attempts at cause prioritization, especially across major cause areas. We attempted to mitigate these challenges in two ways. First, we chose default scale values that yielded intuitive results about worldviews’ ordinal rankings of projects. Second, we allow users to easily change the default scale values to bring them more in line with their own judgments.

How much does scale matter?

Uncertainties about scale matter if assigning different scale values within our range of uncertainty yields significantly different results about what we should do. Indeed, one possibility is that empirical uncertainties overwhelm moral uncertainties, that changing your empirical credences matters far more than changing your credences in worldviews. While empirical assumptions certainly matter, that conclusion would be too hasty. Moral uncertainty remains important.

Not all changes in scale will matter relative to all worldviews. For example, consider Person-Affecting Consequentialism, according to which the only individuals that matter are presently existing individuals. Even if we increase the value of the future by orders of magnitude, this will not affect the worldview’s assessment of x-risk projects one whit. If you assign some credence to Person-Affecting Consequentialism, then you may be at least partially insensitive to changes in the Cassandra Fund’s scale. Whether you are insensitive depends on how you navigate moral uncertainties.

Some metanormative methods will be highly sensitive to changes in project scales. For example, Maximize Expected Choiceworthiness selects the allocation that has the largest expected value, weighted by the credence in each worldview. When increases in scale cause at least some worldviews to assign a project much more value, this will be reflected in that project’s expected choiceworthiness. Some metanormative methods will be less sensitive to changes in scale. For example, if Person-Affecting Consequentialism is the view you have most credence in and changing the scale doesn’t change the score it assigns, then the verdict under My Favorite Theory will also remain unchanged. Approval voting tends to favor consensus projects that many worldviews like, so it will also be less sensitive to changes in scale that don’t change the judgments of some worldviews.
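
The contrast between these methods can be illustrated with a toy example. The sketch below assumes two worldviews, two projects, made-up utilities that are taken to be comparable across worldviews, and no diminishing returns, so each method simply picks one project. None of the numbers come from the tool.

```python
def expected_choiceworthiness(credences, utilities):
    """Credence-weighted average utility of each project across worldviews."""
    projects = next(iter(utilities.values())).keys()
    return {p: sum(credences[w] * utilities[w][p] for w in credences)
            for p in projects}


def mec_choice(credences, utilities):
    """Maximize Expected Choiceworthiness: pick the top project by weighted average."""
    scores = expected_choiceworthiness(credences, utilities)
    return max(scores, key=scores.get)


def my_favorite_theory_choice(credences, utilities):
    """My Favorite Theory: defer entirely to the highest-credence worldview."""
    favorite = max(credences, key=credences.get)
    return max(utilities[favorite], key=utilities[favorite].get)


def utilities_for(cassandra_scale):
    """Hypothetical utilities; only Total Utilitarianism responds to scale."""
    return {
        "Person-Affecting Consequentialism":
            {"Cassandra Fund": 1.0, "Tuberculosis": 10.0},
        "Total Utilitarianism":
            {"Cassandra Fund": 5.0 * cassandra_scale, "Tuberculosis": 8.0},
    }


credences = {"Person-Affecting Consequentialism": 0.6, "Total Utilitarianism": 0.4}

mec_before = mec_choice(credences, utilities_for(1))    # 2.6 vs 9.2
mec_after = mec_choice(credences, utilities_for(10))    # 20.6 vs 9.2
mft_before = my_favorite_theory_choice(credences, utilities_for(1))
mft_after = my_favorite_theory_choice(credences, utilities_for(10))
```

In this toy case, a tenfold increase in the Cassandra Fund's scale flips the verdict under Maximize Expected Choiceworthiness but leaves My Favorite Theory unchanged, because the favored worldview's scores never move.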

An example project: The Cassandra Fund

Default scale settings

Cassandra Fund’s scale is >1000x greater than TB and >10x greater than Shrimp.

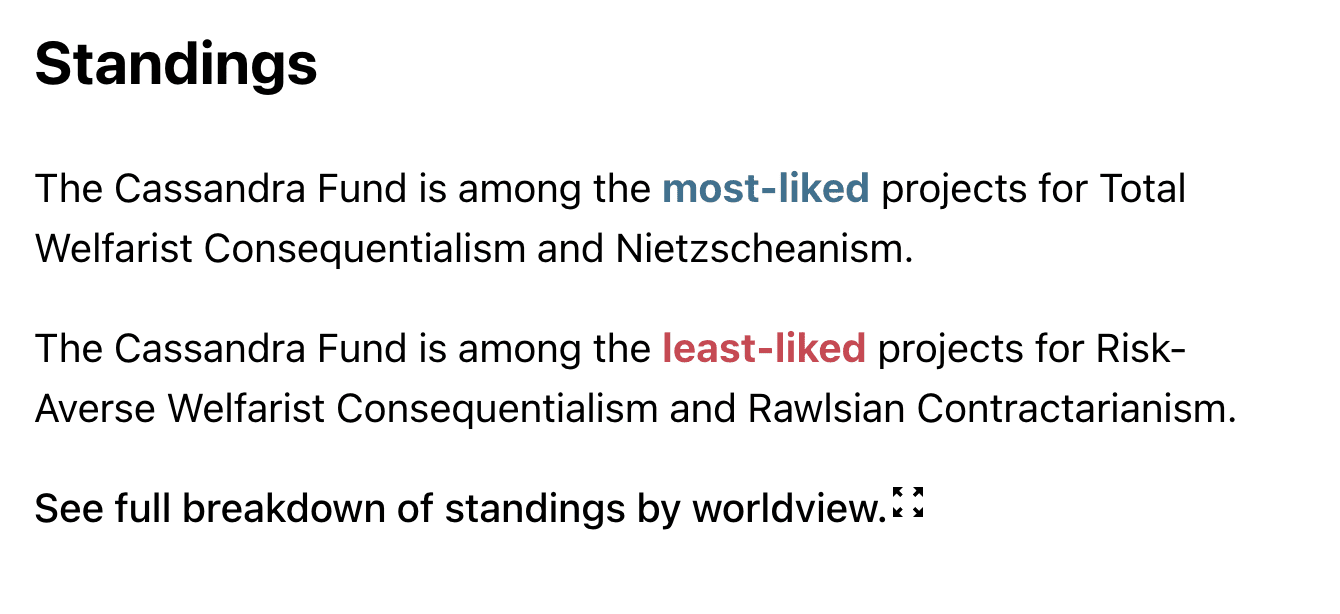

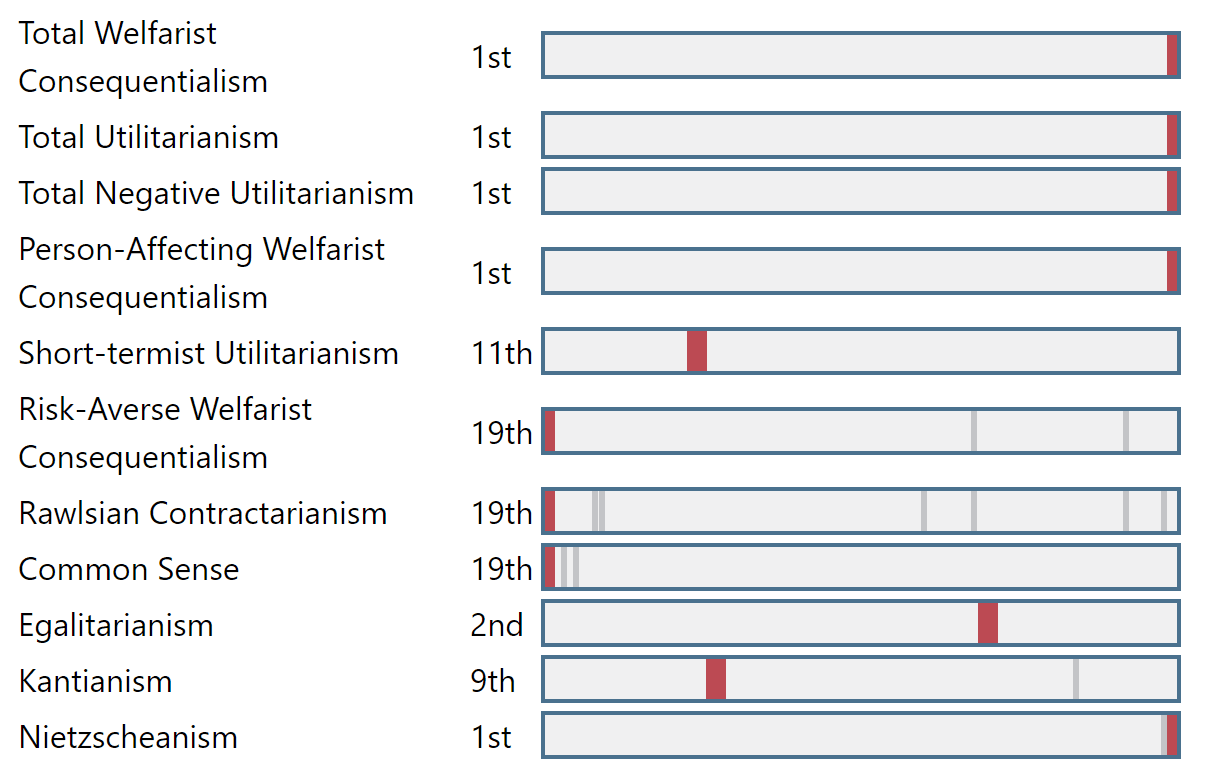

Still, it’s not highly favored by a majority of the worldviews. For a quick glance at its standing, you can navigate to the bottom of the project’s page once it’s selected:

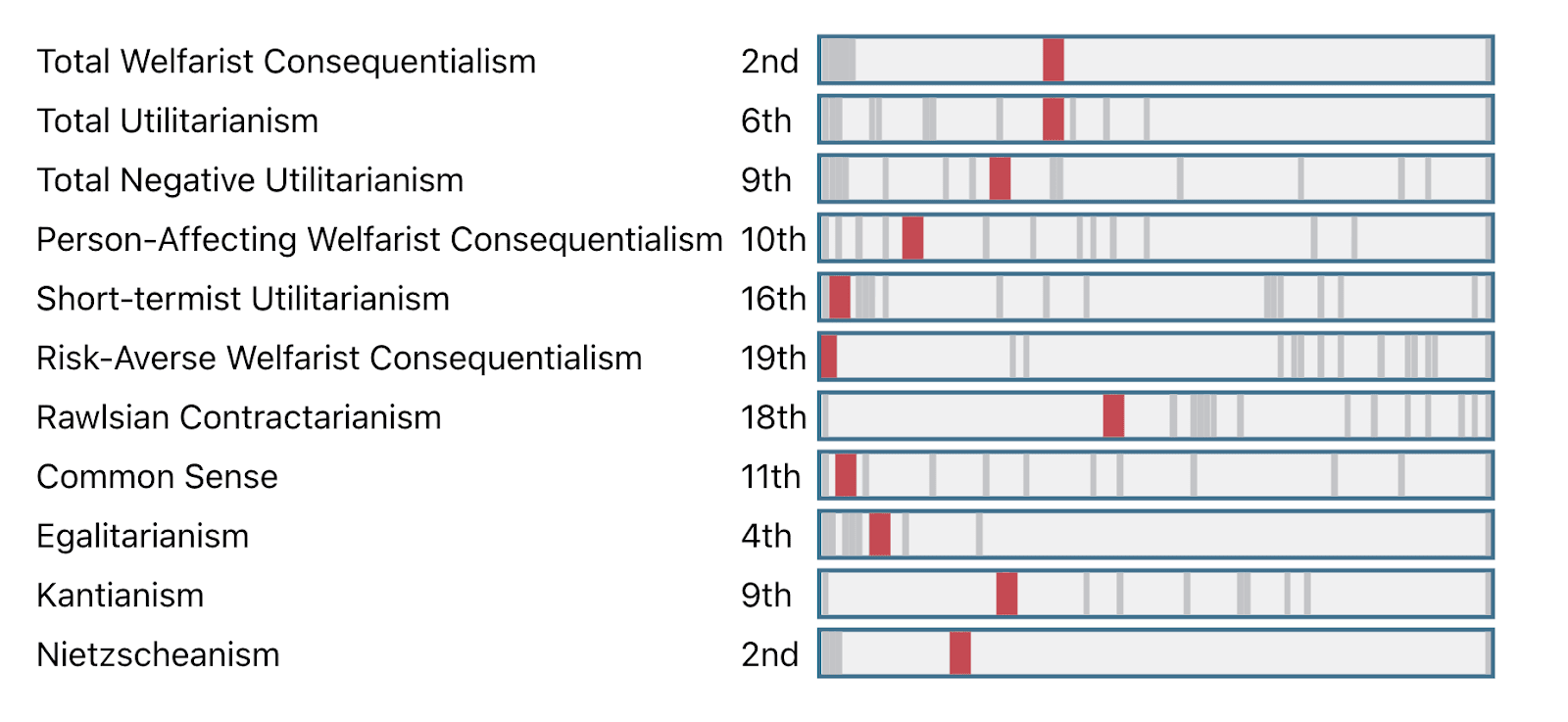

If we inspect the full breakdown, we confirm that most worldviews do not rank it particularly highly:

The previous chart depicts the ranking and absolute position of The Cassandra Fund on a scale from best (rightmost end of each bar) to worst (leftmost) among all defined projects and for all worldviews, regardless of which ones were selected. The thick red lines indicate its position. The thin gray lines indicate the positions of other projects. Often, gray lines are bunched together. This can make it seem like there aren’t the right number of gray lines, for example under Total Utilitarianism, where there must be two gray lines overlapping somewhere to the right of the red line for Cassandra to rank 6th. In the example above you might recognize that there is at least one project (one gray line) all the way at the right, since Cassandra never ranks first.

Cassandra also doesn’t perform all that well on any allocation strategy. With a parliament with equal representation of all worldviews (and compared to a subset of projects), it performs poorly with Maximize Expected Choiceworthiness:

And Nash bargaining:

And approval voting:

Bumping up the scale by an OOM causes…

The project to be strongly favored by many more theories:

It is favored by Maximize Expected Choiceworthiness:

And Nash bargaining:

But not approval voting:

What would an EA parliament do?

Normative uncertainty among EAs

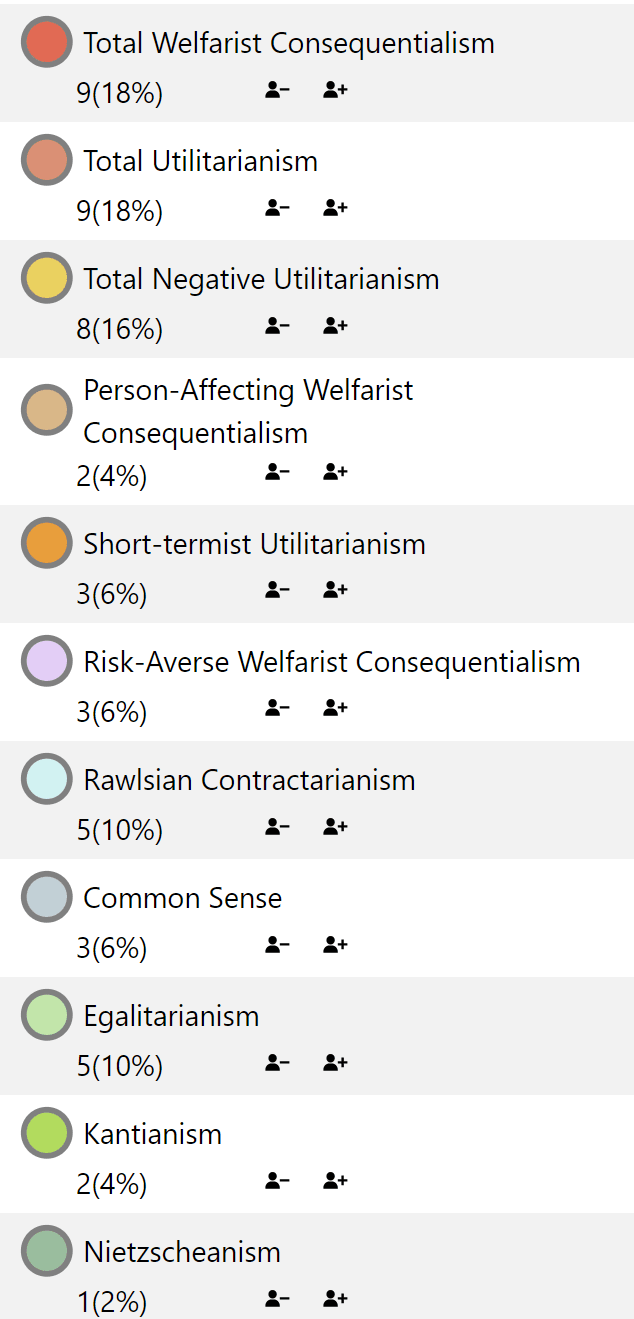

What happens when we attempt to model the EA community as a whole in our moral parliament? While previous surveys don’t tell us exactly the breakdown of worldview commitments among EAs (and do not tell us about uncertainty within individuals), we can get some rough guidance. In the 2019 EA Survey, when participants were asked “Which of the following moral views do you identify most with?”, the answers were (roughly):

- 67% Consequentialism (Utilitarian)

- 12% Consequentialism (Non-Utilitarian)

- 3.5% Deontology

- 8% Virtue Ethics

- 9.5% Other

Among utilitarians, 25% leaned toward negative utilitarianism (which assigns moral value to avoiding suffering but not to attaining pleasures), 58% leaned toward classical utilitarianism (which assigns equal moral value to pleasure and pain), and the remainder reported no opinion/other.

Accordingly, in our model of the EA community[7], ⅔ of the parliament consists of utilitarians of various stripes, ¼ of whom are negative utilitarians. We apportioned the largest share of delegates to Total Utilitarianism and Total Welfare Consequentialism, which each value present and future individuals equally but differ on how much weight to give to the kinds of non-hedonic experiences that give humans larger moral weight. Smaller numbers of delegates represent short-termist, person-affecting, and risk-averse consequentialisms. We assigned ⅓ of the delegates to worldviews that are larger departures from standard utilitarianism. Of these, Egalitarianism has a large number of delegates, which is meant to capture the subset of EAs that are primarily focused on the treatment of animals.
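
The rough proportions above follow from simple arithmetic on the survey figures. The sketch below only reproduces that arithmetic. It does not reproduce the actual delegate counts in the EA parliament settings, which involve further judgment calls about how survey answers map onto worldviews.

```python
# Approximate 2019 EA Survey shares quoted above, in percent.
survey = {
    "Consequentialism (Utilitarian)": 67.0,
    "Consequentialism (Non-Utilitarian)": 12.0,
    "Deontology": 3.5,
    "Virtue Ethics": 8.0,
    "Other": 9.5,
}

# Leanings among utilitarians; the remainder reported no opinion/other.
leanings = {"negative": 0.25, "classical": 0.58}
leanings["no opinion/other"] = 1.0 - sum(leanings.values())

# Share of the whole parliament implied for each utilitarian leaning, in percent.
utilitarian_share = survey["Consequentialism (Utilitarian)"]
parliament_share = {k: utilitarian_share * v for k, v in leanings.items()}
```

On these figures, negative utilitarians make up about 16.75% of the whole parliament and classical utilitarians about 38.9%.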

Results

We compared a diverse subset of the possible projects: 3 GHD projects (Tuberculosis, Direct Transfers, Just Treatment of Humans); 3 animal welfare projects (Lawyers for Chickens, Shrimp Welfare, Happier Animals Now); and 3 GCR projects (Cassandra, Pandemics, AI). The results depend crucially on the methods we use to navigate community-wide metanormative uncertainty.

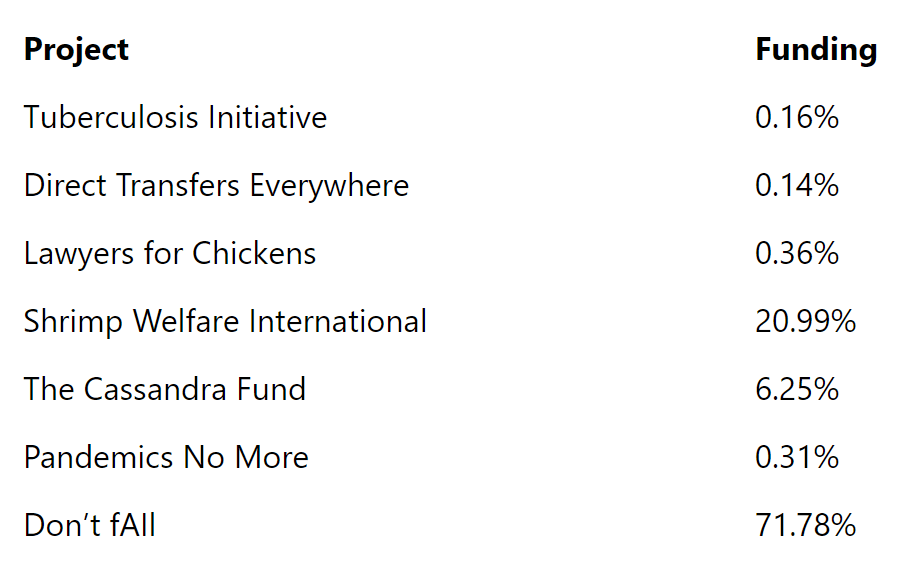

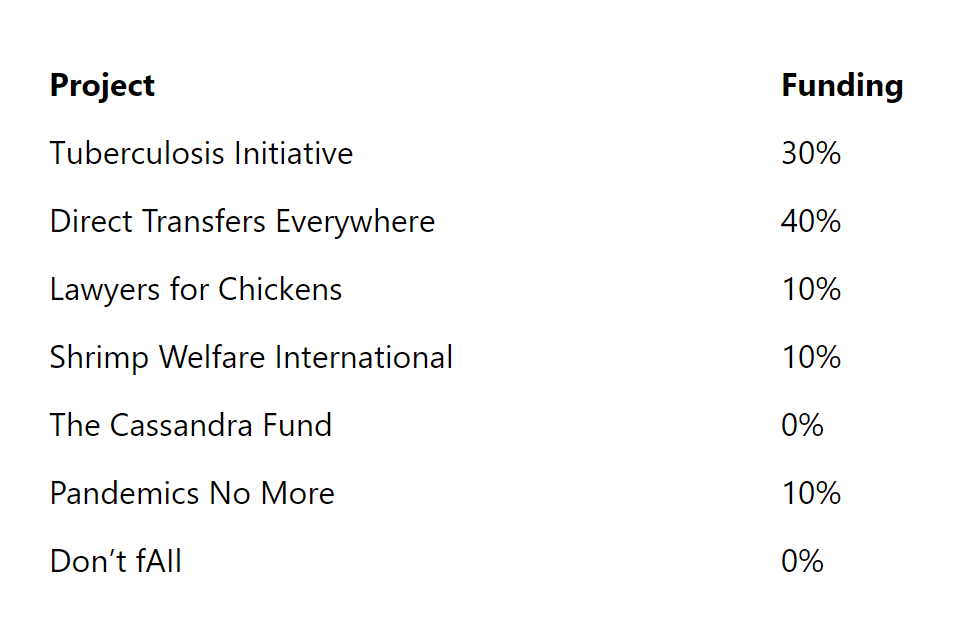

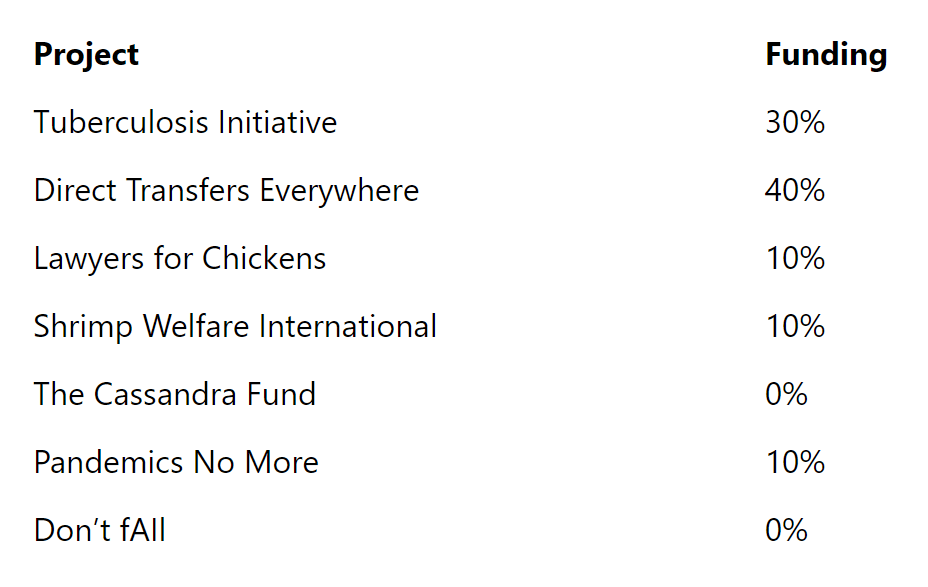

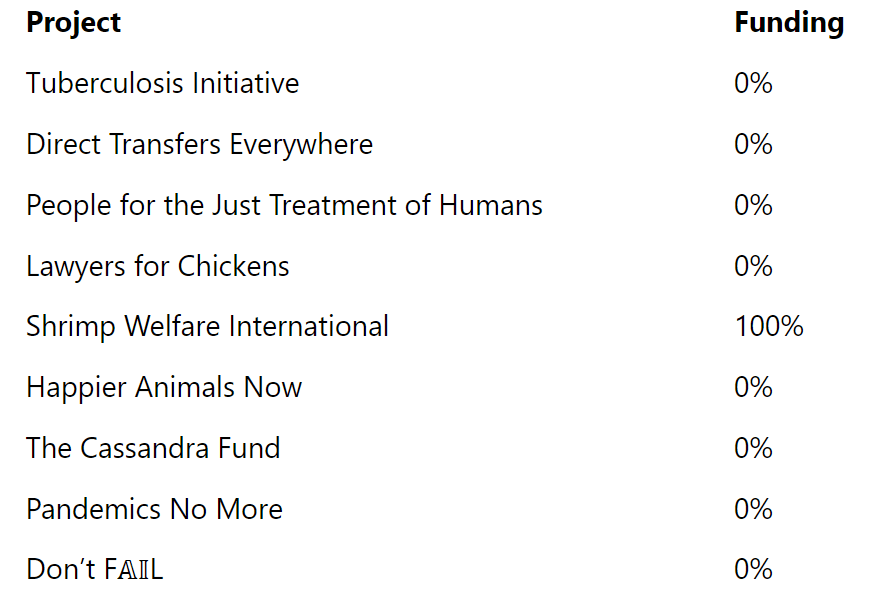

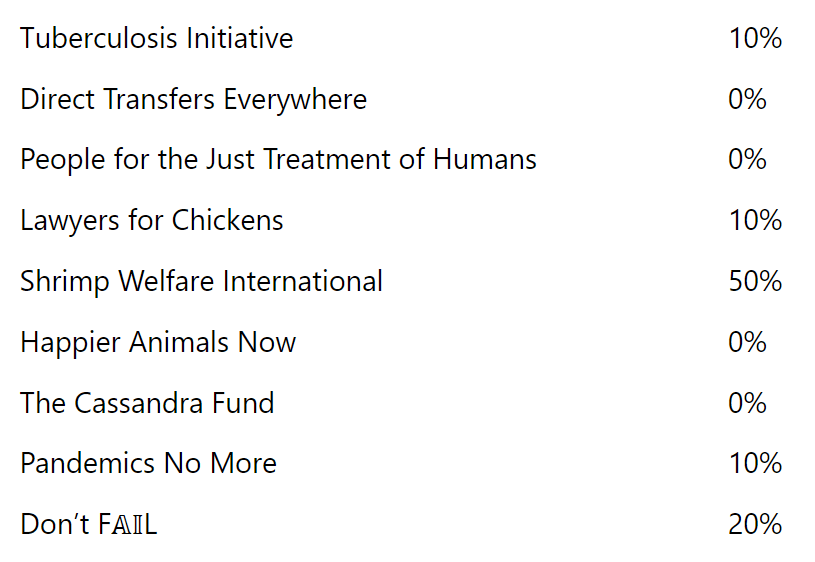

Two metanormative methods strongly favor GCR causes. My Favorite Theory selects the worldview with highest credence and does what it says. Totalist consequentialist views are the most common among EAs; unsurprisingly, they favor projects with large scale and do not impose any penalty on projects that mostly benefit future individuals:

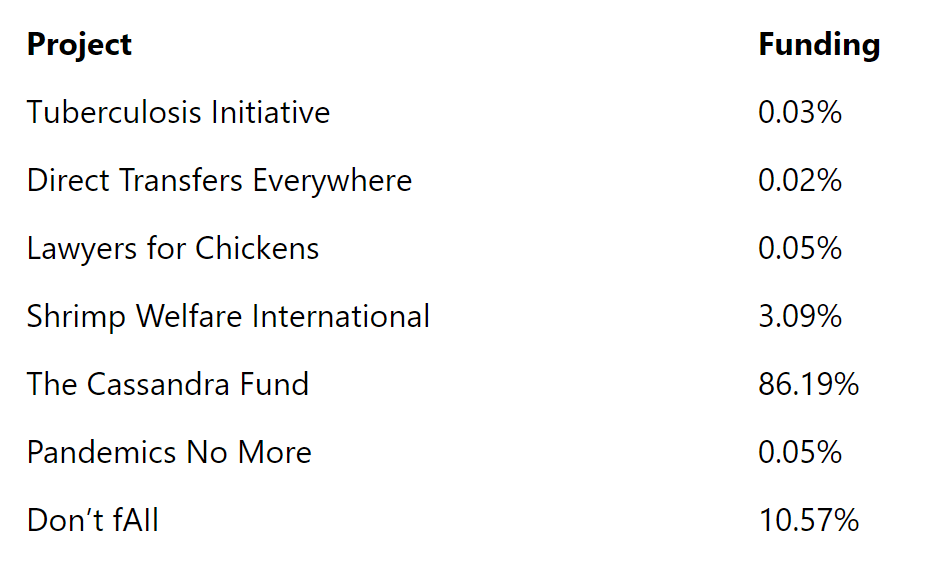

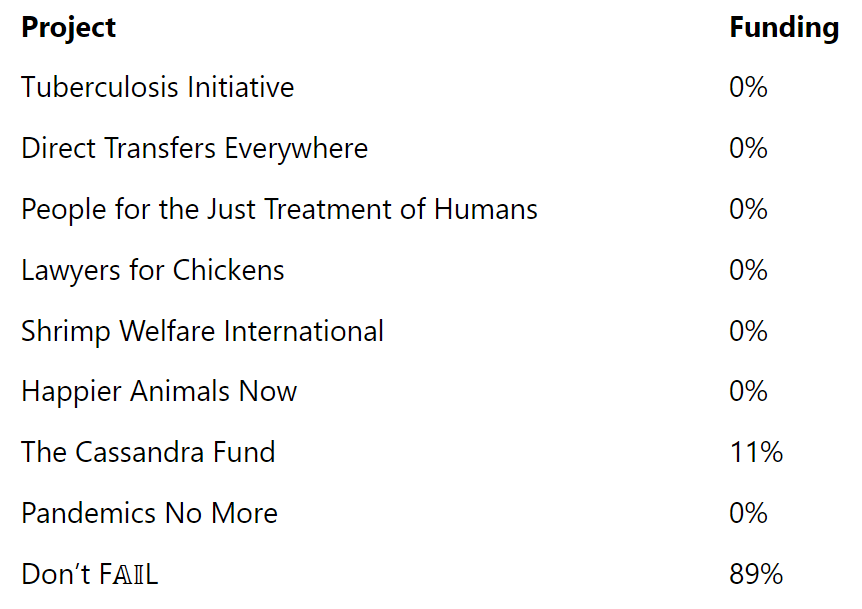

Maximize Expected Choiceworthiness selects the distribution that achieves the highest weighted average utility (level of normative support) across worldviews, weighted by the credence in each worldview. If a project receives very high utility on some worldviews, this will skew MEC in favor of it. MEC allocates funds to Shrimp and AI causes, each of which has large stakes according to some worldviews:

However, other metanormative methods give strikingly different results. Ranked Choice Voting[8] favors helping shrimp (as it looks for options that many worldviews rank highly):

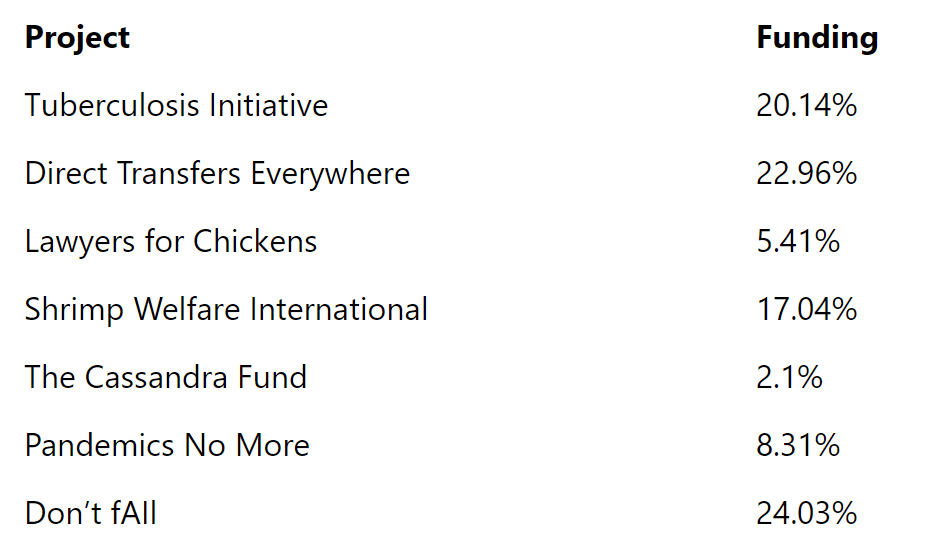

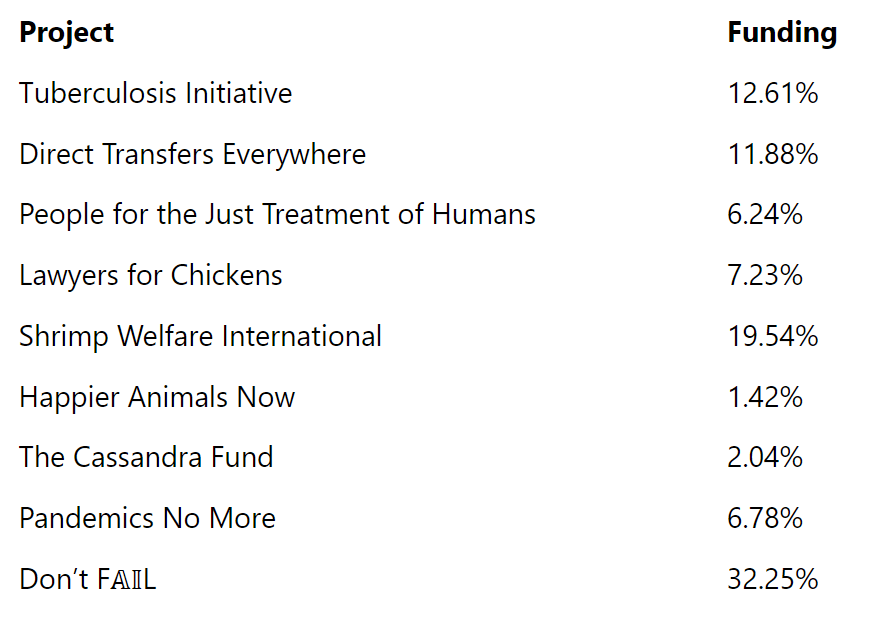
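
For readers unfamiliar with ranked choice voting, here is a minimal sketch of one common variant, instant-runoff voting, applied to weighted ballots. The post does not say which variant the tool implements, so this is an assumption, and the ballots and delegate counts are invented for illustration.

```python
def ranked_choice_winner(ballots):
    """Instant-runoff winner. `ballots` is a list of (weight, ranking) pairs,
    where each ranking lists projects from most to least preferred."""
    remaining = sorted({p for _, ranking in ballots for p in ranking})
    while True:
        tallies = {p: 0.0 for p in remaining}
        for weight, ranking in ballots:
            # Each ballot counts for its highest-ranked surviving project.
            top = next((p for p in ranking if p in tallies), None)
            if top is not None:
                tallies[top] += weight
        leader = max(remaining, key=lambda p: tallies[p])
        if len(remaining) == 1 or tallies[leader] * 2 > sum(tallies.values()):
            return leader
        # No majority yet: eliminate the project with the fewest votes.
        loser = min(remaining, key=lambda p: tallies[p])
        remaining.remove(loser)


# Hypothetical parliament: weights are delegate counts per worldview.
ballots = [
    (4, ["AI", "Shrimp", "Tuberculosis"]),
    (3, ["Shrimp", "Tuberculosis", "AI"]),
    (2, ["Tuberculosis", "Shrimp", "AI"]),
]
winner = ranked_choice_winner(ballots)
```

Here AI leads the first round with 4 of 9 votes but lacks a majority. Once Tuberculosis is eliminated, its delegates transfer to Shrimp, which wins 5 to 4. A project that many worldviews rank second can therefore beat one that is the first choice of a plurality.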

Three allocation methods recommend diversifying across projects and cause areas. Approval Voting tends to recommend options that have broad appeal, even if they are no one’s top choice. It suggests an allocation of funds that has something for everyone (though standard GHD projects receive surprisingly little support):

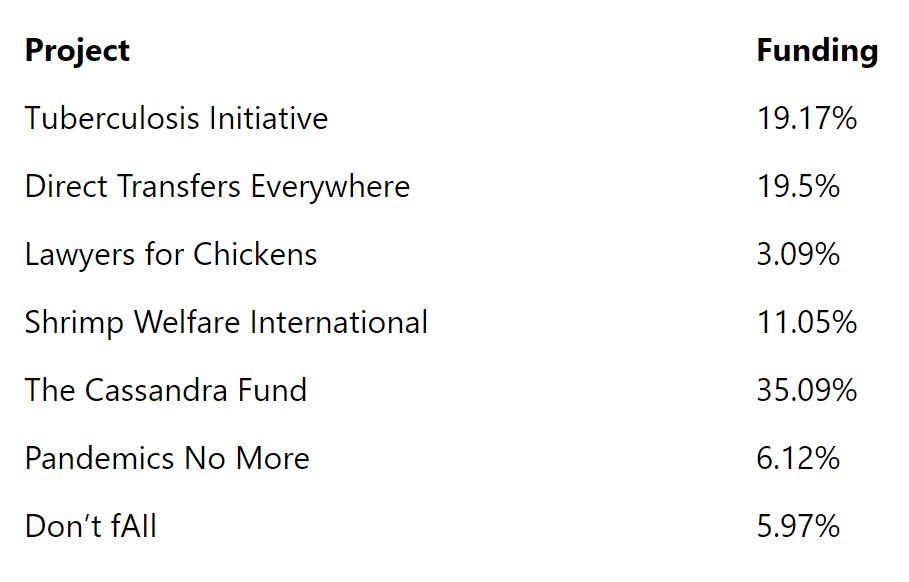

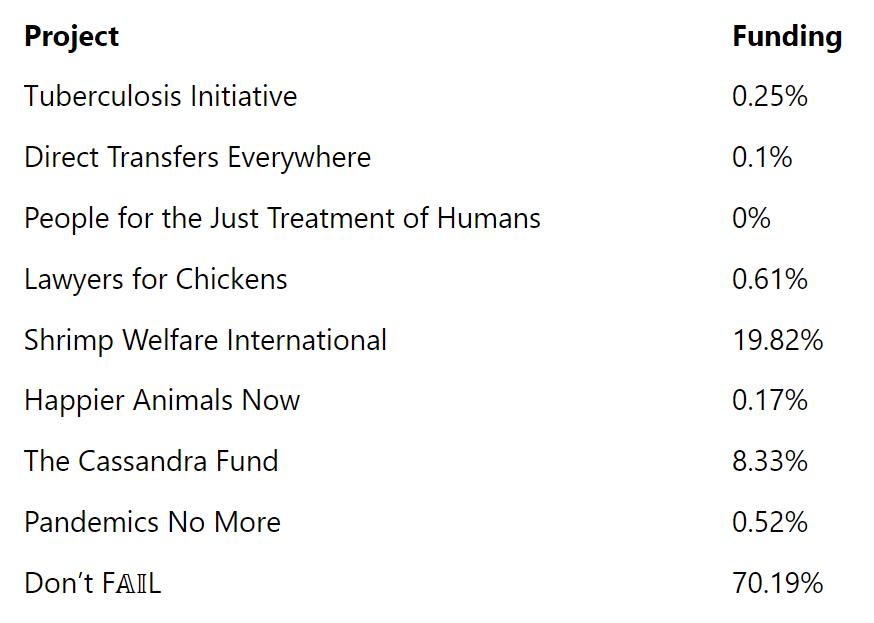

Moral Marketplace and Nash Bargaining behave very similarly to one another. In these methods, each worldview controls a share of the budget, proportional to the credence it is assigned, which it can spend as it likes (with Nash Bargaining also providing the opportunity for bargaining between worldviews). These methods yield the greatest amount of diversification.
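
A minimal sketch of the Moral Marketplace idea follows. It assumes that each worldview's budget share equals its credence and, for simplicity, that there are no diminishing returns, so every worldview spends its whole share on its single favorite project. The worldview credences and utilities are invented, and the bargaining step of Nash Bargaining is not modeled.

```python
def moral_marketplace(credences, utilities, budget):
    """Split the budget by credence; each worldview funds its top project."""
    total = sum(credences.values())
    allocation = {}
    for worldview, credence in credences.items():
        share = budget * credence / total
        favorite = max(utilities[worldview], key=utilities[worldview].get)
        allocation[favorite] = allocation.get(favorite, 0.0) + share
    return allocation


# Hypothetical credences and utilities for three worldviews.
credences = {"Total Utilitarianism": 0.5, "Egalitarianism": 0.3, "Kantianism": 0.2}
utilities = {
    "Total Utilitarianism": {"AI": 9.0, "Shrimp": 6.0, "Tuberculosis": 3.0},
    "Egalitarianism": {"AI": 1.0, "Shrimp": 8.0, "Tuberculosis": 4.0},
    "Kantianism": {"AI": 2.0, "Shrimp": 0.0, "Tuberculosis": 7.0},
}
allocation = moral_marketplace(credences, utilities, budget=100.0)
```

Because the three worldviews have different favorites, the budget is spread across all three projects in proportion to credence, which is the diversification pattern described above.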

Takeaways

The method you use to navigate uncertainty matters a lot.

As you can see from the applications of the tool above, different metanormative strategies can yield very different answers for what you ought to do. Some methods, like MEC, tended to go all-in on top projects. Others, like Nash bargaining or approval voting, favored diversifying widely across projects and cause areas.[9]

Unfortunately, figuring out what we ought to do in light of uncertainty over worldviews lands us squarely in another level of uncertainty over metanormative methods.

Given the kinds of worldviews that are popular in EA, key empirical uncertainties are at least as important as moral uncertainty.

Given how popular various forms of consequentialism are in EA, the details of a portfolio often come down to the details of the project features. If you tend to think that work in a particular area won’t backfire, will do a lot of good with high confidence, etc., then it’s likely that work in that area will be favorably ranked by most of the worldviews to which you give some credence. For instance, by cranking up the scale, you can even make our token humanistic project—Artists without Borders—be the top-ranked option according to almost every worldview (with negative utilitarianism as the holdout). Likewise, if you assume that one of our x-risk projects—the Cassandra Fund, which aims to increase public awareness of existential threats in order to pass safety legislation—has no downsides and, say, a 10% chance of success, then even if its scale is fairly modest, roughly half the worldviews will rank it first.

It’s hard to be confident about the features of particular worldviews and projects.

We spent many hours discussing both the parameter choices for the tool and the specific parameter values for worldviews and projects, even though our primary purpose was pedagogical, and the stakes were not particularly high. We found it difficult to reach consensus, as uncertainties and tradeoffs abound. We often found that worldviews and projects were underdetermined and could be plausibly represented in many ways.

Further, some of the worldviews that we have included may not be amenable to the kind of operationalization required for our tool. In particular, deontologists might emphasize moral permissibility rather than value, have different kinds of preference orderings, and behave differently than consequentialists in a moral parliament.

Getting Started

To begin experimenting with RP’s Moral Parliament Tool, hit “Let me build my own,” choose some projects, select a handful of worldviews, adjust your parliament to your liking, and opt for an allocation procedure. Refresh the page to start over entirely. If you come across any bugs, please share them via this Google Form.

Acknowledgments

The Moral Parliament Tool is a project of the Worldview Investigation Team at Rethink Priorities. Arvo Muñoz Morán and Derek Shiller developed the tool; Hayley Clatterbuck and Bob Fischer wrote this post. We’d also like to thank David Moss, Adam Binksmith, Willem Sleegers, Zachary Mazlish, Gustav Alexandrie, John Firth, Michael Plant, Ella McIntosh, Will McAuliffe, Jamie Elsey and Eric Chen for the helpful feedback provided on the tool. If you like our work, please consider subscribing to our newsletter. You can explore our completed public work here.

[1] A worldview can also include substantive empirical commitments, e.g. beliefs about the likely trajectory of the future or the number of individuals that might be affected by a project. We represent these empirical views as part of projects, not worldviews.

[2] There might be other dimensions of worldviews (e.g. empirical views, epistemic practices, etc.) that are not captured here. These could be added in future work to develop the tool.

[3] The default values in the tool come from Rethink Priorities’ Moral Weight Project. Users can change these values if desired.

[4] The scale serves as a benchmark of what a successful project would probably do. We don’t assume that a successful project will certainly yield the amount of value (X) described by the scale. In the Risk tab, we also include the probability that the project will have greater or lesser effects.

[5] A similar exercise could be done to explore the effects of changing the probabilities of success of various projects, which will interact with the Risk profiles of worldviews.

[6] Though the problems for assigning scales are particularly salient for GCR projects, they are also present for GHD or animal welfare projects. For example, the Tuberculosis Initiative could have downstream effects of enormous magnitude. Even if we know how many shrimp would be affected by Shrimp Welfare International, we don’t know exactly how much their lives would improve by lowered ammonia concentrations at shrimp farms.

[7] Users can load the EA parliament settings via this Google Sheet: EA Community Parliament

[8] Note that Ranked Choice Voting is different from other methods in that delegates vote on projects rather than allocations. Therefore, it will always allocate all money to a single project.

[9] In the examples above, we assumed that projects had decreasing marginal utility, which explains some of the trend toward diversification. However, the same general patterns hold if we assume no decreasing marginal utility.

This seems cool!

I could imagine that many people will gravitate towards moral parliament approaches even when all the moral considerations are known. If moral anti-realism is true, there may not come a point in moral reflection under idealized circumstances where it suddenly feels like "ah, now the answer is obvious." So, we can also think of moral parliament approaches as a possible answer to undecidedness when all the considerations are laid open.

I feel like only seeing it as an approach to moral uncertainty (so that, if we knew more about moral considerations, we'd just pick one of the first-order normative theories) is underselling the potential scope of applications of this approach.

Thank you for your comment Lukas, we agree that this tool, and more generally this approach, could be useful even in that case, when all considerations are known. The ideas we built on and the language we used came from the literature on moral parliaments as an approach to better understand and tackle moral uncertainty, hence us borrowing from that framing.

As a software developer looking for projects:

It seems like a lot of careful thought went into the overall design and specification of the tool. How much and what kind of software work was required to turn it into a reality? Do you have other plans for projects like this that are bottlenecked on software capacity?

Have you considered open-sourcing the project?

Thank you for your kind words, Ben. A substantial amount of in-house software work went into both tools. We used React and Vite to create these, and Python for the server running the maths behind the scenes. If the interest in this type of work and the value-added are high enough, we'd likely want to do more of it.

On your last point, we haven't done open-source development from scratch for the projects we've done so far, but it might be a good strategy for future ones. That said, for transparency, we've made all our code accessible here.

Thanks for sharing, this is cool!

Is it possible to see the code and/or maths somewhere? It would be pretty neat to make standardised implementations of different allocation methods broadly accessible! Additionally, many results are quite sensitive to subtle choices like default parameters, scales and non-linearities used to express diminishing marginal "returns", right?

(At first, I was a bit confused that the "Maximise Expected Choiceworthiness" solution did not end up with 100% on one option, but then I saw that "Diminishing Marginal Returns" was switched on in "Settings". Is there any philosophical support for this non-linearity in the case of intertheoretic comparisons?)

edit: found it here: https://github.com/rethinkpriorities/moral-parliament/tree/master/server/allocate

[Update: the tool does capture diminishing marginal cost-effectiveness - see reply]

Cool tool!

I'd be interested to see how it performs if each project has diminishing marginal cost-effectiveness - presumably there would be a lot more diversification. As it stands, it seems better suited to individual decision-making.

Thanks for your feedback, Stan. The tool does indeed capture the diminishing marginal cost-effectiveness of projects; you're right that it leads to more diversification. By default this setting is on, but you can change how steeply cost-effectiveness diminishes or turn diminishing returns off altogether (see this part of the intro video, and this part of the longer features video).

Second, I should just mention that the Moral Parliament Tool is especially valuable for groups because of the methods we offer at the end for reaching an allocation decision. One of our aims is to make it clear that the work isn't done once you know the distribution of views in your group: you still need to pick a method for moving from that distribution to an allocation, with significant variation in the outcomes depending on the method you select. We hope that flagging this can help teams deliberate about their collective decision-making practices.

Thanks for making this! I think it is valuable, although I should basically just donate to whatever I think is the most cost-effective given my strong endorsement of expected total hedonistic utilitarianism and maximising expected choice-worthiness.

Thanks, Vasco! If you're completely certain about the relevant questions, then you're right that this tool won't inform your personal allocations. Of course, it's an open question whether that level of confidence is warranted---granting, of course, that this depends partly on your metaethical views, as it's much more reasonable to be highly confident about your own values than about objective moral norms, if any there are. Still, we hope that a tool like this can be useful for folks like you when they're coordinating with others. And independently of all that, the tool can serve people with even low levels of uncertainty, as they may uncover various surprising implications of their views.

This is only necessary for funds obtained by compulsion or for large indivisible expenditures that need coordination.

For voluntary contributions, direct allocation of individual funds works as a (probably optimal!) moral parliament, doesn’t it?

Hi Arturo. I'm not sure what you have in mind here, but it sounds like you may be missing one of the two ways of thinking about the moral parliament in this tool. First, as you seem to be suggesting, we could think of a group of people in an organization as a moral parliament. Then, our tool helps them decide how to allocate resources. Second, we could think of any single individual as composing a moral parliament, where each worldview to which you assign some credence is represented by some number of delegates (and more delegates = higher credence). Interpreted in this second way, a moral parliament isn't a literal moral parliament but, rather, a way of modeling moral uncertainty. Does that help?

Yes, absolutely. All in with portfolio theory for all applications!