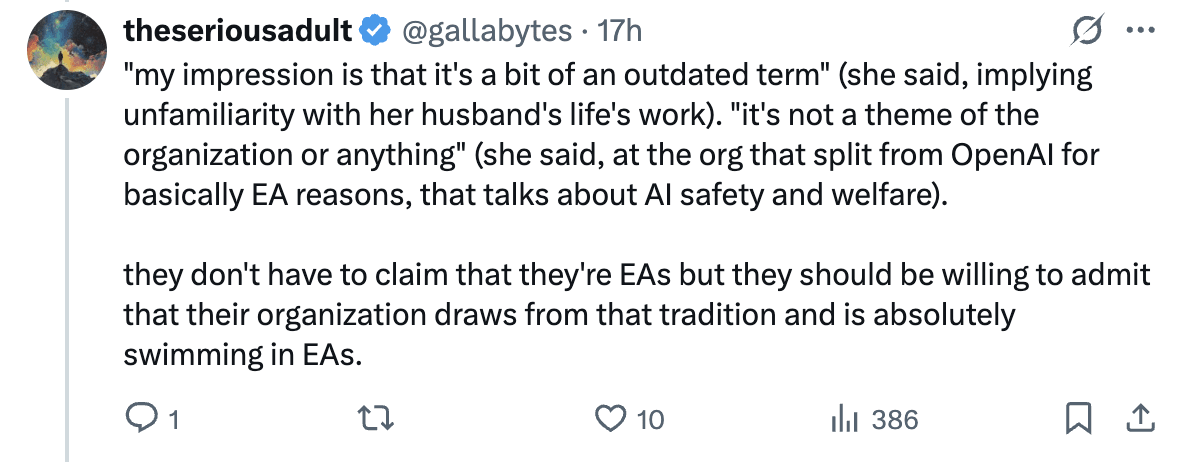

In a recent Wired article about Anthropic, there's a section where Anthropic's president, Daniela Amodei, and early employee Amanda Askell seem to suggest there's little connection between Anthropic and the EA movement:

Ask Daniela about it and she says, "I'm not the expert on effective altruism. I don't identify with that terminology. My impression is that it's a bit of an outdated term". Yet her husband, Holden Karnofsky, cofounded one of EA's most conspicuous philanthropy wings, is outspoken about AI safety, and, in January 2025, joined Anthropic. Many others also remain engaged with EA. As early employee Amanda Askell puts it, "I definitely have met people here who are effective altruists, but it's not a theme of the organization or anything". (Her ex-husband, William MacAskill, is an originator of the movement.)

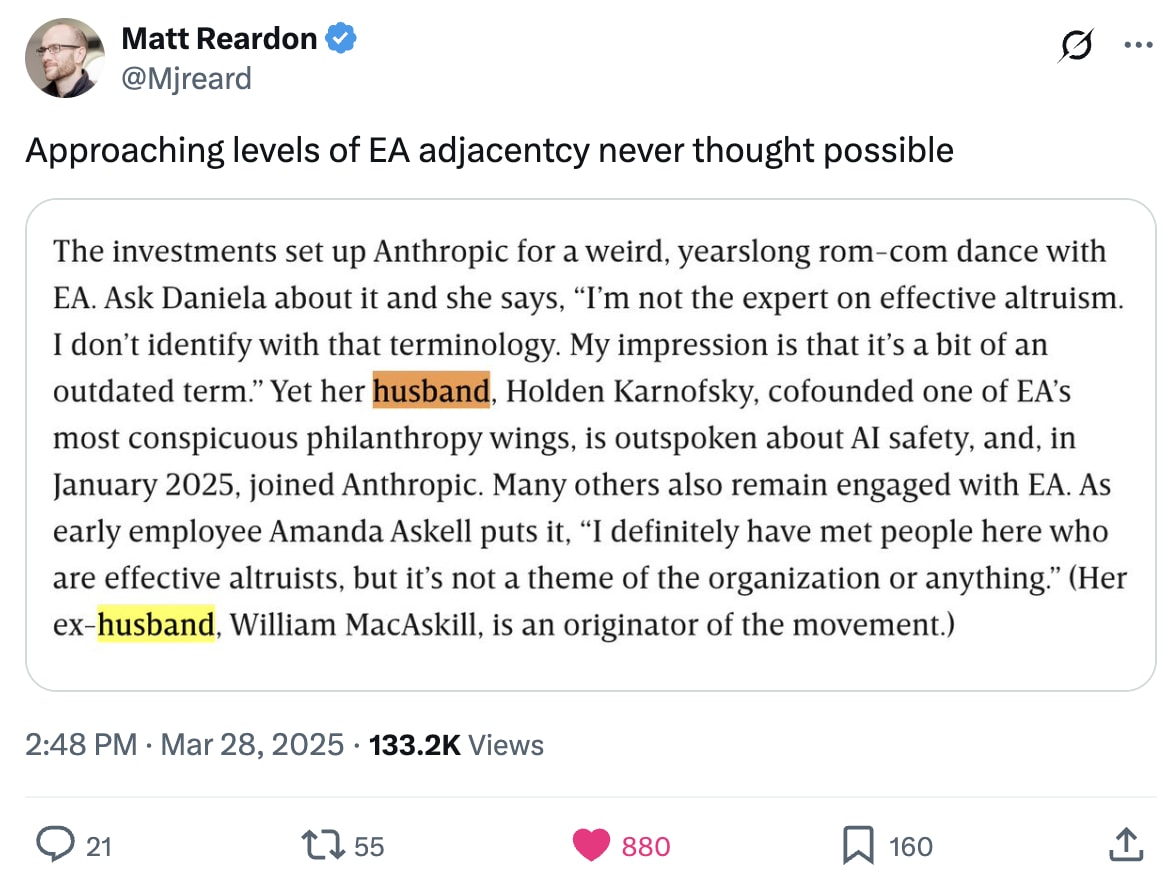

This led multiple people on Twitter to call out how bizarre this is:

In my eyes, there is a large and obvious connection between Anthropic and the EA community. In addition to the ties mentioned above:

- Dario, Anthropic’s CEO, was the 43rd signatory of the Giving What We Can pledge and wrote a guest post for the GiveWell blog. He also lived in a group house with Holden Karnofsky and Paul Christiano at a time when Paul and Dario were technical advisors to Open Philanthropy.

- Amanda Askell was the 67th signatory of the GWWC pledge.

- Many early and senior employees identify as effective altruists and/or previously worked for EA organisations

- Anthropic has a "Long-Term Benefit Trust" which, in theory, can exercise significant control over the company. The current members are:

  - Zach Robinson - CEO of the Centre for Effective Altruism.

  - Neil Buddy Shah - CEO of the Clinton Health Access Initiative, former Managing Director at GiveWell and speaker at multiple EA Global conferences.

  - Kanika Bahl - CEO of Evidence Action, a long-term grantee of GiveWell.

- Three of EA’s largest funders historically (Dustin Moskovitz, Sam Bankman-Fried and Jaan Tallinn) were early investors in Anthropic.

- Anthropic has hired a "model welfare lead" and seems to be the company most concerned about AI sentience, an issue that's discussed little outside of EA circles.

- On the Future of Life podcast, Daniela said, "I think since we [Dario and her] were very, very small, we've always had this special bond around really wanting to make the world better or wanting to help people" and "he [Dario] was actually a very early GiveWell fan I think in 2007 or 2008."

- The Anthropic co-founders have apparently made a pledge to donate 80% of their Anthropic equity (mentioned in passing during a conversation between them here and discussed more here)

- Their first company value states, "We strive to make decisions that maximize positive outcomes for humanity in the long run."

It's perfectly fine if Daniela and Dario choose not to personally identify with EA (despite having lots of associations) and I'm not suggesting that Anthropic needs to brand itself as an EA organisation. But I think it’s dishonest to suggest there aren’t strong ties between Anthropic and the EA community. When asked, they could simply say something like, "yes, many people at Anthropic are motivated by EA principles."

It appears that Anthropic has made a communications decision to distance itself from the EA community, likely because of negative associations the EA brand has in some circles. It's not clear to me that this is even in their immediate self-interest. I think it’s a bad look to be so evasive about things that can be easily verified (as evidenced by the Twitter response).

This also personally makes me trust them less to act honestly in the future when the stakes are higher. Many people regard Anthropic as the most responsible frontier AI company. And it seems like something they genuinely care about—they invest a ton in AI safety, security and governance. Honest and straightforward communication seems important to maintain this trust.

I'm sympathetic to wanting to keep your identity small, particularly if you think the person asking about your identity is a journalist writing a hit piece, but if everyone takes funding, staff, etc. from the EA commons and doesn't share that they got value from that commons, the commons will predictably be under-supported in the future.

I hope Anthropic leadership can find a way to share what they do and don't get out of EA (e.g. in comments here).

I understand why people shy away from or hide their identities when speaking with journalists, but I think this is a mistake, largely for reasons covered in this post. I think a large part of the deterioration of the EA brand is due not just to FTX but to the risk-averse reaction to FTX by individuals (again, for understandable reasons), which harms the movement in a way where the costs are externalized.

When PG refers to keeping your identity small, he means don't defend it or its characteristics for the sake of it. There's nothing wrong with being a C/C++ programmer while recognizing that C/C++ isn't the best choice for rapid development or memory safety. In this case, you can own being an EA or your affiliation with EA without needing to justify everything about the community.

We had a bit of a tragedy of the commons problem because a lot of people are risk-averse and don't want to be associated with EA in case something bad happens to them but this causes the brand to lose a lot of good people you'd be happy to be associated with.

I'm a proud EA.

Note that much of the strongest opposition to Anthropic is also associated with EA, so it's not obvious that the EA community has been an uncomplicated good for the company, though I think it likely has been fairly helpful on net (especially if one measures EA's contribution to Anthropic's mission of making transformative AI go well for the world rather than its contribution to the company's bottom line). I do think it would be better if Anthropic comms were less evasive about the degree of their entanglement with EA.

(I work at Anthropic, though I don't claim any particular insight into the views of the cofounders. For my part I'll say that I identify as an EA, know many other employees who do, get enormous amounts of value from the EA community, and think Anthropic is vastly more EA-flavored than almost any other large company, though it is vastly less EA-flavored than, like, actual EA orgs. I think the quotes in the paragraph of the Wired article give a pretty misleading picture of Anthropic when taken in isolation and I wouldn't personally have said them, but I think "a journalist goes through your public statements looking for the most damning or hypocritical things you've ever said out of context" is an incredibly tricky situation to come out of looking good and many of the comments here seem a bit uncharitable given that.)

Edit: the comment above has been edited, the below was a reply to a previous version and it makes less sense now, leaving it for posterity

You know much more than I do, but I'm surprised by this take. My sense is that Anthropic is giving a lot back:

- My understanding is that all early investors in Anthropic made a ton of money; it's plausible that Moskovitz made as much money by investing in Anthropic as by founding Asana. (Of course this is all paper money for now, but I think they could sell it for billions.)

- As mentioned in this post, co-founders also pledged to donate 80% of their equity, which seems to imply they'll give much more funding than they got. (Of course, in EV, it could still go to zero.)

- I don't see why hiring people is more "taking" than "giving", especially if the hires get to work on things that they believe are better for the world than any other role they could work on.

- My sense is that (even ignoring the funding mentioned above) they are giving a ton back in terms of research on alignment, interpretability, model welfare, and general AI safety work.

To be clear, I don't know if Anthropic is net-positive for the world, bu...

Great points, I don't want to imply that they contribute nothing back, I will think about how to reword my comment.

I do think 1) community goods are undersupplied relative to some optimum, 2) this is in part because people aren't aware how useful those goods are to orgs like Anthropic, and 3) that in turn is partially downstream of messaging like what OP is critiquing.

I want to flag that the EA-aligned equity from Anthropic might well be worth $5-$30B+, and their power in Anthropic could be worth more (in terms of shaping AI and AI safety).

So on the whole, I'm mostly hopeful that they do good things with those two factors. It seems quite possible to me that they have more power and ability now than the rest of EA combined.

That's not to say I'm particularly optimistic. Just that I'm really not focused on their PR/comms related to EA right now; I'd ideally just keep focused on those two things - meaning I'd encourage them to focus on those, and to the extent that other EAs could apply support/pressure, I'd encourage other EAs to focus on these two.

There's a lesson here for everyone in/around EA, which is why I sent the pictured tweet: it is very counterproductive to downplay what or who you know for strategic or especially "optics" reasons. The best optics are honesty, earnestness, and candor. If you have to explain and justify why your statements that are perceived as evasive and dishonest are in fact okay, you probably did a lot worse than you could have on these fronts.

Also, on the object level, for the love of God, no one cares about EA except EAs and some obviously bad faith critics trying to tar you with guilt-by-association. Don't accept their premise and play into their narrative by being evasive like this. *This validates the criticisms and makes you look worse in everyone's eyes than just saying you're EA or you think it's great or whatever.*

But what if I'm really not EA anymore? Honesty requires that you at least acknowledge that you *were.* Bonus points for explaining what changed. If your personal definition of EA changed over that time, that's worth pondering and disclosing as well.

I agree with your broad points, but this seems false to me. I think that lots of people seem to have negative associations with EA, especially given SBF and in the AI and tech space where, e.g., it's widely (and imo falsely) believed that the OpenAI coup was for EA reasons.

I overstated this, but disagree. Overall very few people have ever heard of EA. In tech, maybe you get up to ~20% recognition, but even there, the amount of headspace people give it is very small and you should act as though this is the case. I agree it's negative directionally, but evasive comments like these are actually a big part of how we got to this point.

I'm specifically claiming Silicon Valley AI, where I think it's a fair bit higher?

I think we feel this more than is the case. I think a lot of people know about it but don't have much of an opinion on it, similar to how I feel about NASCAR or something.

I recently caught up with a friend who worked at OpenAI until very recently and he thought it was good that I was part of EA and what I did since college.

Because Sam was engaging in a bunch of highly inappropriate behaviour for a CEO like lying to the board which is sufficient to justify the board firing him without need for more complex explanations. And this matches private gossip I've heard, and the board's public statements

Further, Adam d'Angelo is not, to my knowledge, an EA/AI safety person, but also voted to remove Sam and was a necessary vote, which is strong evidence there were more legit reasons

I'm a bit confused about people suggesting this is defendable.

"I'm not the expert on effective altruism. I don't identify with that terminology. My impression is that it's a bit of an outdated term".

There are three statements here:

1. I'm not the expert on effective altruism - It's hard to see this as anything other than a lie. She's married to Holden Karnofsky and knows ALL about Effective Altruism. She would probably destroy me on a "Do you understand EA" quiz.... I wonder how @Holden Karnofsky feels about this?

2. I don't identify with that terminology. - Yes, true, at least now! Maybe she's still got some residual warmth for us deep in her heart?

3. My impression is that it's a bit of an outdated term. - Her husband set up 2 of the biggest EA (or heavily EA based) institutions that are still going strong today. On what planet is it an "outdated" term? Perhaps on the planet where your main goal is growing and defending your company?

In addition to the clear associations from the OP, their 2017 wedding page, seemingly written by Daniela, says: "We are both excited about effective altruism: using evidence and reason to figure out how to benefit others as much as possible, ...

Everything you say is correct I think, but I think in more normal circles, pointing out the inconsistency between someone's wedding page and their corporate PR bullshit would seem a bit weird and obsessive and mean. I don't find it so, but I think ordinary people would get a bad vibe from it.

fwiw I think in any circle I've been a part of critiquing someone publicly based on their wedding website would be considered weird/a low blow. (Including corporate circles.) [1]

I think there is a level of influence at which everything becomes fair game, e.g. Donald Trump can't really expect a public/private communication disconnect. I don't think that's true of Daniela, although I concede that her influence over the light cone might not actually be that much lower than Trump's.

Wow again I just haven't moved in circles where this would even be considered. Only the most elite 0.1 percent of people can even have a meaningful "public private disconnect" as you have to have quite a prominent public profile for that to even be an issue. Although we all have a "public profile" in theory, very few people are famous/powerful enough for it to count.

I don't think I believe in a public/private disconnect but I'll think about it some more. I believe in integrity and honesty in most situations, especially when you are publicly disparaging a movement. If you have chosen to lie and smear a movement with "My impression is that it's a bit of an outdated term" then I think this makes what you say a bit more fair game than for other statements where you aren't low-key attacking a group of well meaning people.

Hmm yeah, that's kinda my point? Like complaining about your annoying coworker anonymously online is fine, but making a public blog post like "my coworker Jane Doe sucks for these reasons" would be weird, people get fired for stuff like that. And referencing their wedding website would be even more extreme.

(Of course, most people's coworkers aren't trying to reshape the lightcone without public consent so idk, maybe different standards should apply here. I can tell you that a non-trivial number of people I've wanted to hire for leadership positions in EA have declined for reasons like "I don't want people critiquing my personal life on the EA Forum" though.)

No one is critiquing Daniela’s personal life though, they’re critiquing something about her public life (i.e. her voluntary public statements to journalists) for contradicting what she’s said in her personal life. Compare this with a common reason people get cancelled, where the critique is that there’s something bad in their personal life, and people are disappointed that the personal life doesn’t reflect the public persona; in this case it’s the other way around.

Yeah, this used to be my take but a few iterations of trying to hire for jobs which exclude shy awkward nerds from consideration when the EA candidate pool consists almost entirely of shy awkward nerds has made the cost of this approach quite salient to me.

There are trade-offs to everything 🤷‍♂️

There's already been much critique of your argument here, but I will just say that by the "level of influence" metric, Daniela shoots it out of the park compared to Donald Trump. I think it is entirely uncontroversial and perhaps an understatement to claim the world as a whole and EA in particular have a right to know & discuss pretty much every fact about the personal, professional, social, and philosophical lives of the group of people who, by their own admission, are literally creating God. And are likely to be elevated to a permanent place of power & control over the universe for all of eternity.

Such a position should not be a pleasurable job with no repercussions on the level of privacy or degree of public scrutiny on your personal life. If you are among this group, and this level of scrutiny disturbs you, perhaps you shouldn't be trying to "reshape the lightcone without public consent" or knowledge.

I think you shouldn't assume that people are "experts" on something just because they're married to someone who is an expert, even when (like Daniela) they're smart and successful.

I agree that these statements are not defensible. I'm sad to see it. There's maybe some hope that the person making these statements was just caught off guard and it's not a common pattern at Anthropic to obfuscate things with that sort of misdirection. (Edit: Or maybe the journalist was fishing for quotes and made it seem like they were being more evasive than they actually were.)

I don't get why they can't just admit that Anthropic's history is pretty intertwined with EA history. They could still distance themselves from "EA as the general public perceives it" or even "EA-as-it-is-now."

For instance, they could flag that EA maybe has a bit of a problem with "purism" -- like, some vocal EAs in this comment section and elsewhere seem to think it is super obvious that Anthropic has been selling out/became too much of a typical for-profit corporation. I didn't myself think that this was necessarily the case because I see a lot of valid tradeoffs that Anthropic leadership is having to navigate, and the armchair-quarterback EAs seem to be failing to take that into account? However, the communications highlighted in the OP made me update that Anthropic leadership probably does lack the integrity needed to do complicated power-seeking stuff that has the potential to corrupt. (If someone can handle the temptations from power, they should at the very least be able to handle the comparatively easy dynamics of "don't willingly distort the truth as you know it.")

When I speak of a strong inoculant, I mean something that is very effective in preventing the harm in question -- such as the measles vaccine. Unless there were a measles case at my son's daycare, or a family member were extremely vulnerable to measles, the protection provided by the strong inoculant is enough that I can carry on with life without thinking about measles.

In contrast, the influenza vaccine is a weak inoculant -- I definitely get vaccinated because I'll get infected less and hospitalized less with it. But I'm not surprised when I get the flu. If I were at great risk of serious complications from the flu, then I'd only use vaccination as one layer of my mitigation strategy (and without placing undue reliance on it). And of course there are strengths in between those two.

I'd call myself moderately cynical. I think history teaches us that the corrupting influence of power is strong and that managing this risk has been a struggle. I don't think I need to take the position that no strong inoculant exists. It is enough to assert that -- based on centuries of human experience across cultures -- our starting point should be that inoculants are weak until proven otherw...

I think the people in the article you quote are being honest about not identifying with the EA social community, and the EA community on X is being weird about this.

I think the confusion might stem from interpreting EA as "self-identifying with a specific social community" (which they claim they don't, at least not anymore) vs EA as "wanting to do good and caring about others" (which they claim they do, and always did)

Going point by point:

This was more than 10 years ago. EA was a very different concept / community at the time, and this is consistent with Daniela Amodei saying that she considers it an "outdated term"

This was also more than 10 years ago, and giving to charity is not unique to EA. Many early pledgers don't consider themselves EA (e.g. signatory #46 claims it got too stupid for him years ago)

...

The point I was trying to make is, separate from whether these statements are literally false, they give a misleading impression to the reader. If I didn't know anything about Anthropic and I read the words “I definitely have met people here who are effective altruists, but it's not a theme of the organization or anything”, I might think Anthropic is like Google where you may occasionally meet people in the cafeteria who happen to be effective altruists but EA really has nothing to do with the organisation. I would not get the impression that many of the employees are EAs who work at Anthropic or work on AI safety for EA reasons, or that the three members of the trust to which they've given veto power over the company have been heavily involved in EA.

I also think being weird and evasive about this isn't a good communication strategy (for reasons @Mjreard discusses above).

As a side point, I'm confused when you say:

That was said by the author of the article who was trying to make the point that there is a link between Anthropic and EA. So I don't see this as evidence of Anthropic being forthcoming.

I never interpreted that to be the crux/problem here. (I know I'm late replying to this.)

People can change what they identify as. For me, what looks shady in their responses is the clumsy attempts at downplaying their past association with EA.

I don't care about it because I still identify with EA; instead, I care because it goes under "not being consistently candid." (I quite like that expression despite its unfortunate history). I'd be equally annoyed if they downplayed some significant other thing unrelated to EA.

Sure, you might say it's fine not being consistently candid with journalists. They may quote you out of context. Pretty common advice for talking to journalists is to keep your statements as short and general as possible, esp. when they ask you things that aren't "on message." Probably they were just trying to avoid actually-unfair bad press here? Still, it's clumsy and ineffective. It backfired. Being candid would probably have been better here even from the perspective of preventing journalists from spinning this against them. Also, they could just decide not to talk to untrusted journalists?

More generally, I feel like we really need leaders who can build trust and talk openly about difficult tradeoffs and realities.

Just as a side point, I do not think Amanda's past relationship with EA can accurately be characterized as much like Jonathan Blow, unless he was far more involved than just being an early GWWC pledge signatory, which I think is unlikely. It's not just that Amanda was, as the article says, once married to Will. She wrote her doctoral thesis on an EA topic, how to deal with infinities in ethics: https://askell.io/files/Askell-PhD-Thesis.pdf Then she went to work in AI for what I think is overwhelmingly likely to be EA reasons (though I admit I don't have any direct evidence to that effect), given that it was in 2018 before the current excitement about generative AI, and relatively few philosophy PhDs, especially those who could fairly easily have gotten good philosophy jobs, made that transition. She wasn't a public figure back then, but I'd be genuinely shocked to find out she didn't have an at least mildly significant behind the scenes effect through conversation (not just with Will) on the early development of EA ideas.

Not that I'm accusing her of dishonesty here or anything: she didn't say that she wasn't EA or that she had never been EA, just that Anthropic wasn't an EA org. Indeed, given that I just checked and she still mentions being a GWWC member prominently on her website, and she works on AI alignment and wrote a thesis on a weird, longtermism-coded topic, I am somewhat skeptical that she is trying to personally distance from EA: https://askell.io/

You seem to have ignored a central part of what was said by Daniela Amodei: "I'm not the expert on effective altruism," which seems hard to defend.

Edit to add: the above proof that signing on to the GWWC pledge doesn't mean you are an EA is correct, but the person you link to is using having signed as a proof that he understands what EA is.

This works both ways. EA should be distancing itself from Anthropic, given recent pronouncements by Dario about racing China and initiating recursive self-improvement. Not to mention their pushing of the capabilities frontier.

No, but the main orgs in EA can still act in this regard. E.g. Anthropic shouldn't be welcome at EAG events. They shouldn't have their jobs listed on 80k. They shouldn't be collaborated with on research projects etc that allow them to "safety wash" their brand. In fact, they should be actively opposed and protested (as PauseAI have done).

Amanda Askell a few hours ago on Twitter:

Giving this an "insightful" because I appreciate the documentation of what is indeed a surprisingly close relationship with EA. But also a disagree because it seems reasonable to be skittish around the subject ("AI Safety" broadly defined is the relevant focus, adding more would just set off an unnecessary news media firestorm).

Plus, I'm not convinced that Anthropic has actually engaged in outright deception or obfuscation. This seems like a single slightly odd sentence by Daniela, nothing else.

I kind of wish we could do a reader poll as to community opinion on (1) whether these things are lies and (2) why they think they said these things. I can't tell if I'm really poorly calibrated when I read some of the comments here or if the commenters are a non-representative sample.

I think "outdated term" is a power move, trying to say you're a "geek" to separate yourself from the "mops" and "sociopaths". She could genuinely think, or be surrounded by people who think, 2nd wave or 3rd wave EA (i.e. us here on the forum in 2025) are lame, and that the real EA was some older thing that had died.

Re Anthropic and (unpopular) parallels to FTX, just thinking that it's pretty remarkable that no one has brought up the fact that SBF, Caroline Ellison and FTX were major funders of Anthropic. Arguably Anthropic wouldn't be where they are today without their help! It's unfortunate the journalist didn't press them on this.

Thank you for writing this. The comment section is all the evidence you need that EAs need to hear this and not be allowed to excuse Anthropic. It's worse than I thought, honestly, seeing the pages of apologetics here over whether this is technically dishonest.