Intro

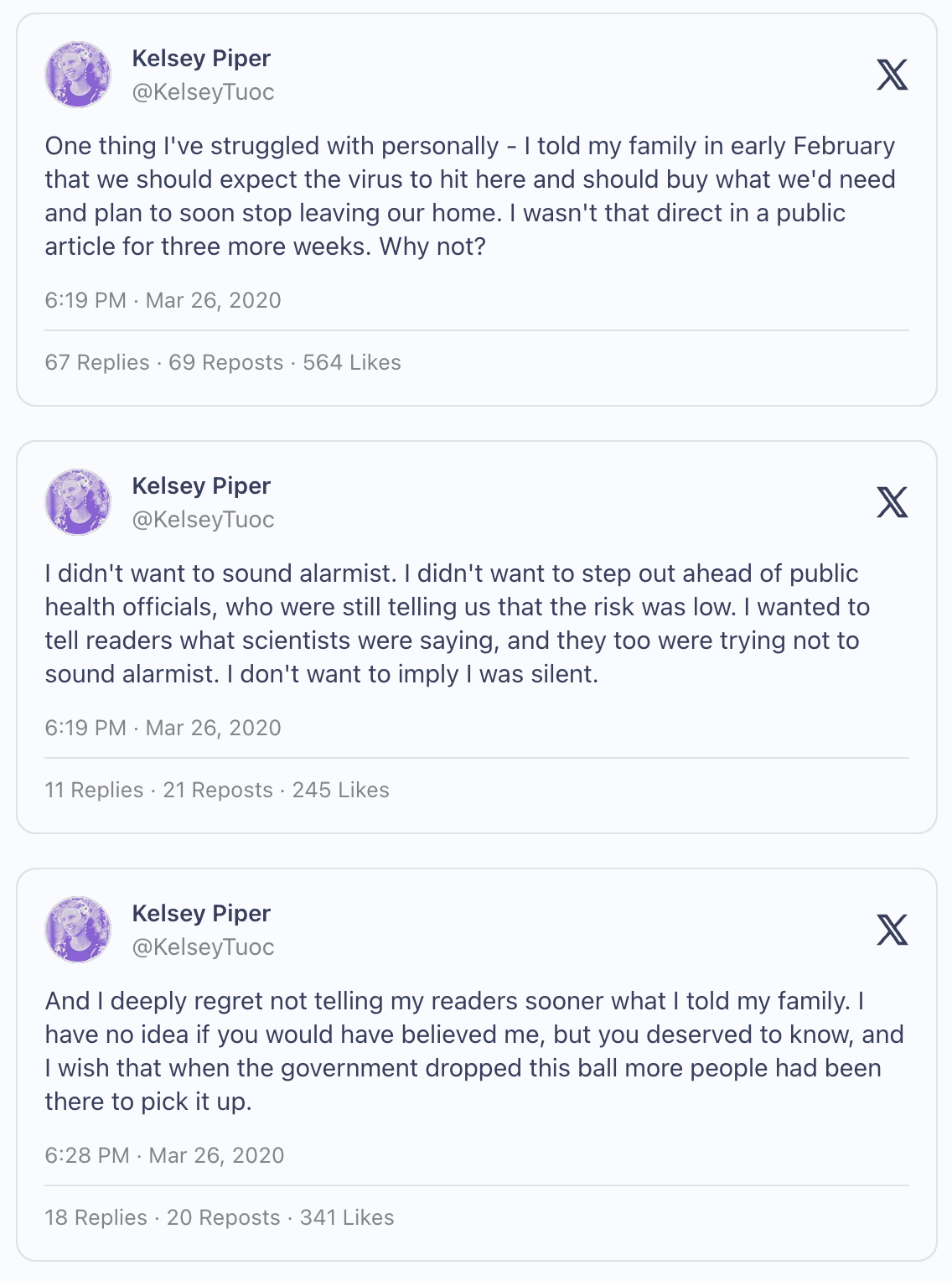

This Twitter thread from Kelsey Piper has been reverberating around my psyche since its inception, almost six years now.

You should read the whole thing for more context, but here are the important tweets:

I really like Kelsey Piper. She’s “based” as the kids (and I) say. I think she was trying to do her best by her own lights during this whole episode, and she deserves major props for basically broadcasting her mistakes in clear language to her tens of thousands of followers so people like me can write posts like this. And I deeply respect and admire her and her work.

But:

I deeply regret not telling my readers sooner what I told my family

Easier said than done, hindsight is 20/20, etc., but I basically agree that she fucked up.[1]

The reason I’m writing this post now is that it’s become increasingly apparent to me that this kind of thing isn’t a one-off, and it’s not even an n-off for some modest n. It’s not out of the norm.

Claim

Rather, public intellectuals, including those I respect and admire, regularly communicate information to their audiences and the public that is fundamentally different from their true beliefs, and they should stop doing that.

I haven’t interviewed anyone for this post so take this with a large grain of salt, but my impression and suspicion is that, to public intellectuals, broadly, it’s not even considered a bad thing; rather it’s the relatively above-board and affirmatively endorsed modus operandi.

Indeed, PIs have reasonable and plausible (if implicit) reasons for thinking that being less than candid about their genuine beliefs is a good, just, and important part of the job.

The problem is that they’re wrong.

To be clear, this post isn’t intended as a moral condemnation of past behavior because, again, my sense is that media figures and intellectuals - ~certainly those I reference in this piece - genuinely believe themselves to be doing right by their readers and the world.

A few more examples

Will MacAskill

Jump forward to 2022 and Will MacAskill, whom I also greatly respect and admire, is on the 80,000 Hours podcast for the fourth time. During the episode, MacAskill notes that his first book Doing Good Better was significantly different from what “the most accurate book…fully representing my and colleagues’ EA thought” would have looked like, in part thanks to demands from the publisher (bolding mine):

Rob Wiblin: ...But in 2014 you wrote Doing Good Better, and that somewhat soft pedals longtermism when you’re introducing effective altruism. So it seems like it was quite a long time before you got fully bought in.

Will MacAskill: Yeah. I should say for 2014, writing Doing Good Better, in some sense, the most accurate book that was fully representing my and colleagues’ EA thought would’ve been broader than the particular focus. And especially for my first book, there was a lot of equivalent of trade — like agreement with the publishers about what gets included. I also wanted to include a lot more on animal issues, but the publishers really didn’t like that, actually. Their thought was you just don’t want to make it too weird.

Rob Wiblin: I see, OK. They want to sell books and they were like, “Keep it fairly mainstream.”

Wait what? It was a throwaway line, a minor anecdote, but if I’m remembering correctly I physically stopped walking when I heard this section.

The striking thing (to me, at least) wasn’t that a published book might be slightly out of date with respect to the author’s thinking - the publishing process is long and arduous - or that the publisher forced out consideration of animal welfare.

It was that, to the best of my knowledge (!), Will never made a significant effort to tell the public about all this until the topic came up eight years after publication. See the following footnote for more: [2]

It’s not like he didn’t have a platform or the ability to write or thought that nobody was reading the book.

Doing Good Better has almost 8,000 reviews on Goodreads and another 1,300 or so on Amazon. The top three LLMs estimate 75k, 135k, and 185k sales respectively. Between when Doing Good Better was published and when that podcast interview came out, Will published something like 33 EA Forum Posts and 29 Google Scholar-recognized publications. Bro is a machine.

And Will is steeped deeply in the culture of his own founding - EA emphasizes candidness, honesty, and clarity; people put “epistemic status: [whatever]” at the top of blog posts. I don’t personally know Will (sad) but my strong overall impression is that he’s a totally earnest and honest guy.

Unfortunately I’m not really advancing an explanation of what I’m critiquing in this post. As mentioned before, I haven’t interviewed[3] anyone and can’t see inside Will’s brain or anyone else’s.

But I can speculate, and my speculation is that clarifying Doing Good Better post-publication (i.e. by writing publicly somewhere that it was a bit out of date with respect to his thinking and that the publisher made him cut important, substantive material on animal welfare) never even registered as the kind of thing he might owe his audience.

Dean Ball

To beat a dead horse, I really like and respect Piper and MacAskill.

I just don’t know Ball’s work nearly as well, and the little that I do know suggests that we have substantial and fundamental disagreements about AI policy, at the very least.

But he was recently on the 80,000 Hours Podcast (for 3 hours) and I came away basically thinking “this guy is not insane” and (to quote my own tweet) “probably way above replacement level for ‘Trump admin-approved intellectual’”[4]

All this is to say that I don’t have it out for the guy, just as I don’t have it out for Piper or MacAskill.

But part of the interview drove me insane, to the point of recording a 12 minute rant voice memo that is the proximate reason for me writing this post.

Here’s the first bit (bolding mine):

Dean Ball: So let’s just take the example of open source AI. Very plausibly, a way to mitigate the potential loss of control — or not even loss of control, but power imbalances that could exist between what we now think of as the AI companies, and maybe we’ll think of it just as the AIs in the future or maybe we’ll continue to think of IT companies. I think we’ll probably continue to think of it as companies versus humans — you know, if OpenAI has like a $50 trillion market cap, that is a really big problem for us. You can even see examples of this in some countries today, like Korea. In Korea, like 30 families own the companies that are responsible for like 60% of GDP or something like that. It’s crazy. The Chaebols.

But if we have open source systems, and the ability to make these kinds of things is widely dispersed, then I think you do actually mitigate against some of these power imbalances in a quite significant way.

So part of the reason that I originally got into this field was to make a robust defence of open source because I worried about precisely this. In my public writing on the topic, I tended to talk more about how it’s better for diffusion, it’s better for innovation — and all that stuff is also true — because I was trying to make arguments in the like locally optimal discursive environment, right?

Rob Wiblin: Say things that make sense to people.

Dean Ball: Yeah, say things that make sense to people at that time. But in terms of what was animating for me, it does have to do with this power stuff in the long term.

Ahhhhhh! Hard to get a clearer example than this.

Ball is, in a purely neutral and descriptive sense, reporting candidly that he not merely wrote in a way or style that his audience could understand but substantively modified his core claims to be different than those which were the true causes of his beliefs and policy positions.

Not about lying

I actually want to pick out the dash-enclosed aside “and all that stuff is also true” because it’s an important feature of both the underlying phenomenon I’m pointing at and my argument about it.

As far as I can tell, Ball never lied - just as Piper and MacAskill never lied.

At one point I was using the term “lie by omission” for all this stuff, but I’ve since decided that’s not really right either. The point here is just that literally endorsing every claim you write doesn’t alone imply epistemic candidness (although it might be ~necessary for it).

Ball, pt 2

Ok, let’s bring in the second of Ball’s noteworthy sections. This time Rob does identify Ball’s takes as at least potentially wrong in some sense.

Sorry for the long quote but nothing really felt right to cut (again, bolding mine):

Rob Wiblin: I think you wrote recently that there’s speculations or expectations you might have about the future that might influence your personal decisions, but you would want to have more confidence before they would affect your public policy recommendations.

There’s a sense in which that’s noble: that you’re not going to just take your speculation and impose it on other people through laws, through regulations — especially if they might not agree or might not be requesting you to do that basically.

There’s another sense in which to me it feels possibly irresponsible in a way. Because imagine there’s this cliche of you go to a doctor and they propose some intervention. They’re like, “We think that we should do some extra tests for this or that.” And then you ask them, “What would you do, if it was you as the patient? What if you were in exactly my shoes?” And sometimes the thing that they would do for themselves is different than the thing that they would propose to you. Usually they’re more defensive with other people, or they’re more willing to do things in order to cover their butts basically, but they themselves might do nothing.

I think that goes to show that sometimes what you actually want is the other person to use all of the information that they have in order to just try to help you make the optimal decision, rather than constraining it to what is objectively defensible.

How do you think about that tradeoff? Is there a sense in which maybe you should be using your speculation to inform your policy recommendations, because otherwise it will just be a bit embarrassing in a couple of years’ time when you were like, “Well, I almost proposed that, but I didn’t.”

Dean Ball: It’s a really good question. My general sense is that, in intellectual inquiry when you hit the paradox, that’s when you’ve struck ore. Like you found the thing. The paradox is usually in some sense weirdly the ground truth. It’s like the most important thing. This is a very important part of how I approach the world, really.

So it’s definitely true that there are things that I would personally do… Like if I were emperor of the world, I would actually do exactly all the same: I still wouldn’t do the things that I think, in some sense, I think might be necessary — because I do just have enough distrust of my own intuitions. And I think everybody should. I think probably you don’t distrust your own intuitions enough, even me.

Rob Wiblin: So is it that you think that not taking such decisive action, or not using that information does actually maximise expected value in some sense, because of the risk of you being mistaken?

Dean Ball: Yeah, exactly.

Rob Wiblin: So that’s the issue. It’s not that you think it’s maybe a more libertarian thing where you don’t want to impose your views, like force them on other people against their will?

Dean Ball: It’s kind of both. I think you could phrase it both ways. And I would agree with both things, I would say.

Rob Wiblin: But if it’s the case that it’s better not to act on those guesses about the future because of the risk of being mistaken, wouldn’t you want to not use them in your personal life as well?

Dean Ball: Well, it depends, right? For certain things… There are things, especially now — you know, I’m having a kid in a few months. So when these decisions start to affect other people, again, it changes.

I guess what I would say is: Will I bet in financial markets about this future? Yeah, I will. Because I do think my version of the future corresponds enough to various predictions you can make about where asset prices will be, that you can do things like that. That’s a much easier type of prediction to make than the type of prediction that involves emergent consequences of agents being in the world and things like this.

So it has to do with the scale of the impact and it also has to do with the level of confidence. I think the level of confidence that you need to recommend policies that affect many people is just considerably higher.

Not sure we need much analysis or explanation here; Ball is straightforwardly saying that he neglects to tell his audience about substantive, important, relevant beliefs he has because of…some notion of confidence or justifiability. Needless to say I don’t find his explanation/justification very compelling here.

Of course he has reasons for doing this, but I think those reasons are bad and wrong. So without further ado…

The case against the status quo

It’s misleading and that’s bad

This isn’t an especially clever or interesting point, but it’s the most basic and fundamental reason that “sub-candidness” as we might call it is bad.

No one else knows they’re playing a game

To a first approximation, podcasts and Substack articles and Vox pieces are just normal-ass person-to-person communication, author to reader.

As a Substacker or journalist or podcast host or think tank guy or whatever, you can decide to play any game you want - putting on a persona, playing devil’s advocate, playing the role of authority who doesn’t make any claims until virtually certain.

All this is fine, but only if you tell your audience what you’re doing.

Every instance of sub-candidness I go through above could have been avoided by simply publicly stating somewhere the “game” the author chose to play.

I think Piper should have told the public her genuine thoughts motivating personal behavior vis a vis Covid, but I wouldn’t be objecting on the grounds I am in this post if she had said something like “In this article I am making claims that I find to be robustly objectively defensible and withholding information that I believe because it doesn’t meet that standard.”

Part of the point of this kind of disclaimer is that it might encourage readers to go “wait but what do you actually think” and then you, Mr(s). Public Intellectual, might decide to tell them.

What would a reasonable reader infer?

Merely saying propositions that you literally endorse in isolation is ~necessary but not at all sufficient for conveying information faithfully and accurately.

The relevant question public intellectuals need to ask is: “What would a reasonable reader infer or believe both (a) about the world and (b) about my beliefs after consuming this media?”

Of course sometimes there are going to be edge cases and legitimate disagreements about the answer, but I think in general things are clear enough to be action-guiding in the right way.

I think some (partial) answers to that question, in our example cases, are:

- Piper was not actively personally preparing for a serious pandemic.

- The conception of effective altruism presented in Doing Good Better is essentially MacAskill’s personal conception of the project at least as of when each page was first written.

- Dean Ball’s expressed reasons for supporting open source AI were his actual reasons for doing so, basically in proportion to each reason’s emphasis.

In each case, I claim, the reader would have been mistaken, and foreseeably so. And “foreseeably causing a reader to have false beliefs” seems like a pretty good definition of “misleading.”

Public intellectuals are often domain experts relative to their audience

Again, I can’t look inside anyone’s brain, but I suspect that public intellectuals often err by incorrectly modeling their audience.

If you’re Matt Yglesias, some of your readers are going to be fellow policy wonk polymaths with a lot of context on the subject matter you’re tackling today, but the strong majority are going to have way less knowledge and context on whatever you’re writing about.

This is true in general; by the time I am writing a blog post about something, even if I had no expertise to start with, I am something of an expert in a relative sense now. The same is true of generalist journalists who are covering a specific story, or podcast hosts who have spent a week preparing for an interview.

This seems trivial when made as an explicit claim, and I don’t expect anyone to really disagree with it, but really grokking this asymmetry entails a few relevant points that don’t in fact seem to reflect the state of play:

1) Your audience doesn’t know what they don’t know

So your decision to not even mention/cover some aspect of the thing isn’t generally going to come across as “wink wink look up this one yourself” - it’s just a total blind spot far from mere consideration. MacAskill’s readers didn’t even know what was on the table to begin with; if you don’t bring up longtermist ideas and instead mainly talk about global poverty, readers are going to reasonably, implicitly assume that you don’t think the long-term future is extremely important. That proposition never even crossed their mind for them to evaluate.

2) Your expertise about some topic gives you genuinely good epistemic reason to share your true beliefs in earnest

“Epistemic humility” is a positively-valenced term, but too much in a given circumstance is just straightforwardly bad and wrong. Kelsey Piper shouldn’t have deferred to the consensus vibe because she’s the kind of person who’s supposed to decide the consensus vibe.

3) Like it or not, people trust you to have your own takes - that’s why they’re reading your thing

It is substantively relevant that the current media environment (at least in the anglosphere) is ridiculously rich and saturated. There are a lot of sources of information. People can choose to listen/read/vibe with a million other things, and often a thousand about the same topic. They chose to read you because for whatever reason they want to know what you think.

In other words, your take being yours and not like an amalgam of yours + the consensus + the high status thing is already built into the implicit relationship.

You’re (probably) all they’ve got

A partially-overlapping-with-the-above point I want to drive home is that, in general, you (public intellectual) are the means by which your audience can pick up on radical but plausible ideas, or subtle vibes, or whatever collection of vague evidence is informing your intuition, or anything else.

Insofar as you think other people in some sense “should” believe what you believe (ideally at least, or if they had more information and time and energy, or something like that), or should at least hear the case for your views, this is it.

Maybe you’re part of the EA- or rationalist- sphere and have a bunch of uncommon beliefs about the relatively near future (perhaps along the lines of “P[most humans die or US GDP increases >10x by 2030] >= 50%”) and you’re talking to a “normie” about AI (perhaps a very important normie like a member of Congress).

You can try to use arguments you don’t really find convincing or important, or moderate your opinions to seem more credible, or anything, but to what end?

So that they can leave the interaction without even the in-principle opportunity of coming closer to sharing your actual beliefs - beliefs you want them to have?

And the theory is that somehow this helps get them on the road to having correct takes, somehow, by your own lights?

Because maybe someone in the future will do the thing you’re avoiding - that is, sharing one’s actual reasons for holding actual views?[5]

All of the above mostly holds regardless of who you are, but if you’re a public intellectual, that is your role in society and the job you have chosen or been cast into, for better or worse.

Is your theory of change dependent on someone else being just like you but with more chutzpah? If so, is there a good reason you shouldn’t be the one to have the chutzpah?

This is it!

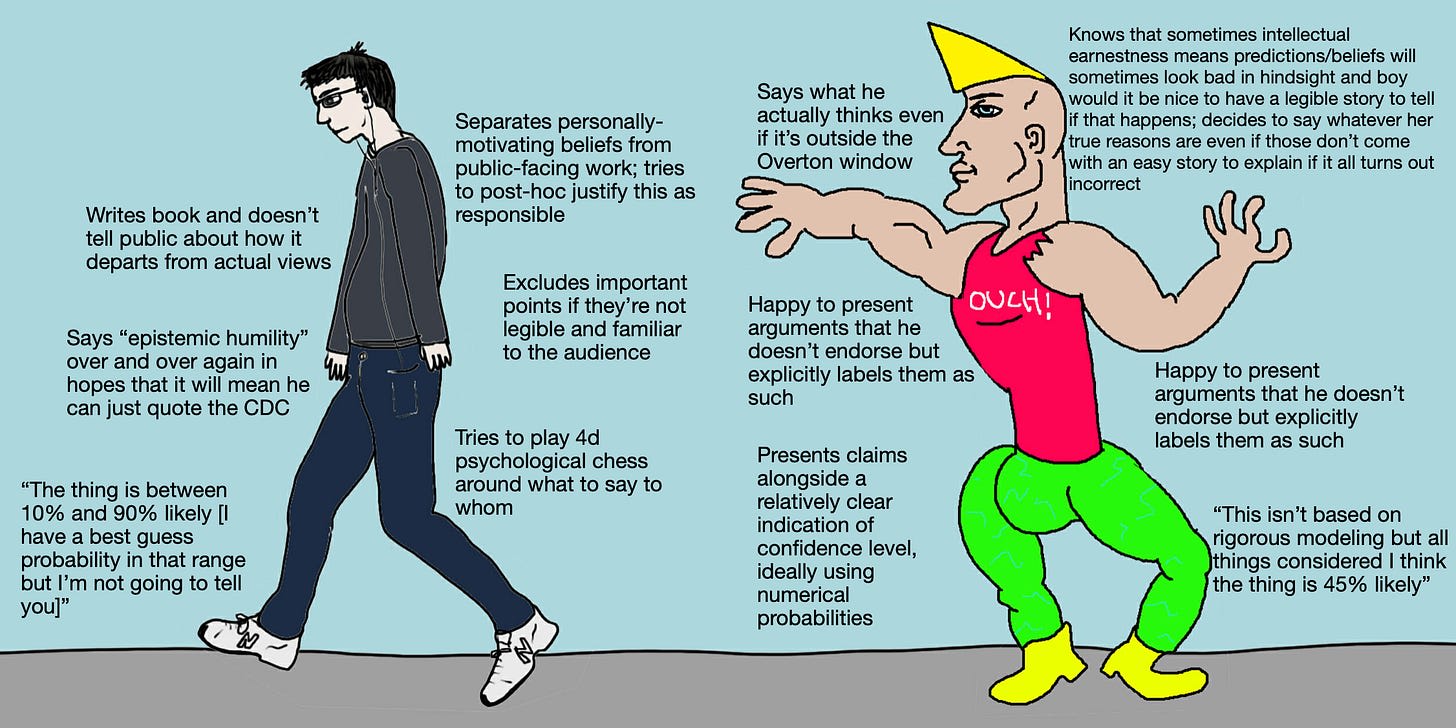

You can have your cake and eat it too

At some level what I’m calling for involves intellectual courage and selflessness, but in a more substantive and boring sense it’s not especially demanding.

That’s because you don’t have to choose between candidness and other things you find valuable in communication like “conveying the public health consensus” or “using concepts and arguments my readers will be familiar with” or “not presenting low-confidence intuitions as well-considered theses.”

All you have to do is tell your audience what you’re doing!

You can explicitly label claims or anecdotes or vibes as based on intuition or speculation or nothing at all. You can present arguments you don’t endorse but want the reader to hear or consider for whatever reason, or report claims from officials you low key suspect aren’t really true.

You can even use explicit numerical probabilities to convey degrees of certainty! One frustrating element about our example cases is that Piper, MacAskill, and Ball are all exceptionally bright and numerate and comfortable with probabilities - asking them to use probabilities to clarify and highlight the epistemic stance they’re coming from doesn’t seem extremely burdensome.

And more generally, setting aside numerical probabilities for a moment:

- Kelsey Piper could have said “this is what the CDC says and this other thing is my overall take largely based on arguments I can’t fully explicitly justify as rock-solid.”

- Ball could have said “here is what actually motivates me and here are some other arguments I also endorse.”

- MacAskill’s case is a bit trickier to diagnose and treat from the outside so here’s a footnote:[6]

It will increase the word count of your thing by 2%, fine. That’s a very small price to pay.

Not a trivial ask

Let me go back to the “At some level what I’m calling for involves intellectual courage and selflessness” bit from the previous section.

The universe makes no guarantees that earnestness will be met with productive, kind, and generous engagement. As a public intellectual, you might find yourself in the position of “actually believing” things that are going to sound bad in one way or another.

To be honest, I don’t have a totally worked-out theory or principle that seems appropriately action-guiding in almost all situations.

In some stylized Cultural Revolution scenario with a literal mob at your door waiting to literally torture and kill you if you say the wrong thing, I think you should generally just lie and save your ass and then report that’s what you did in the future if the situation ever improves.

But I guess the things I do stand by are that:

- Public intellectuals especially, but also people in general, should consider conveying their genuine beliefs an important virtue in their work - a positive good, a praiseworthy thing to do, and an ideal to aim for.

- This virtue should be valued more highly by individuals and society than it seems to be right now.

Appendix: transcript of original rant

It has a bit of exasperated energy that the above post lacks, so here is a mildly cleaned up version (fewer filler words basically) of my original rant. Enjoy:

Okay, so I’m listening to the 80,000 Hours podcast with Dean Ball. Broadly, I’m actually not exactly impressed, but glad this guy is not totally insane.

There are two parts of it so far—I’m not even done—that stand out that I want to criticize. And I think it actually extends to other people quite a bit.

First, he talks about how he—I’m pretty sure this is in the context of SB 1047 and SB 53—basically doesn’t use his real reasons for thinking about why he supports or opposes these bills. Instead, he basically puts things in terms that he thinks people will understand better.

Then the second part, which got me fired up a little bit, is that he talks about basically being willing to make bets or predictions in his personal life, but having a sort of higher confidence standard for making policy recommendations. Rob Wiblin aptly pulls out the example of going to a doctor. The doctor says, “Oh yeah, you should do thing X.” Then you ask, “Well, what would you do if you were me in my situation?” and he says, “Oh no, I wouldn’t do X. I would do Y.”

I think this is actually deeply important. The thesis of this ramble is that public intellectuals have a moral duty to say what they actually think. To not just... well, let me put out two other examples, because I don’t think this is a left-versus-right thing at all. It’s almost unique in *not* being a left-versus-right thing.

Kelsey Piper, whom I am a huge fan of—I think she’s a really good person, totally earnest, great reporter, etc.—basically wrote early in COVID. She wasn’t nearly as downplaying as other reporters, but she was, in fact, doing something like making pretty intense preparations on her own for a global pandemic, while basically emphasizing in her writing: “Oh, there’s so much uncertainty, don’t panic,” and so on. I think she had very similar reasoning. She thought, “You know, I don’t have overwhelming confidence, I’m sort of speculating,” and so on. That’s example two.

Example three is Will MacAskill, who also I am a huge fan of. I think he’s a great guy, obviously brilliant. Basically, there was a period—it’s been a little while since I’ve looked into this—but my sense is that when he first published *Doing Good Better*, the publisher didn’t let him really emphasize animal welfare. Even though he was, I think, pretty convinced that was more important than the global poverty stuff at the top. But reading the book, you wouldn’t know that. A reasonable person would just think, “Okay, this is what the person believes as of the time of the writing of the book,” but that wasn’t true.

Likewise for longtermism. There was a period of time when the ideas were developing in elite circles in Oxford, and basically, it was kept on the down-low until there was more of an infrastructure for it.

I strongly reject all of this. I don’t think these people were malicious, but I think they acted wrong and poorly. We should say, “No, this is a thing you should not do.”

First off, in a pre-moral sense—before we decide whether to cast moral judgment, and we don’t have to—this is essentially deception. Or, to put it in more neutral terms, let’s say intentionally conveying information so as to cause another person to believe certain information without actually endorsing the information—and certainly not endorsing it in full, even if it’s a half-truth.

The second thing, and the reason why this is baffling, is that these people—public intellectuals in general, and certainly the three people that I mentioned—are very intelligent and sophisticated. They are willing and able to deal with probabilities. You can just say, “This is tentative, I am not sure, but X, Y, and Z.” Or, “This is purely going on intuition,” or “This is my overall sense but I can’t justify it.” Those are just words you can say.

So, yeah, all of this makes it kind of baffling. I guess I’m a little bit fired up about this for some reason, so I’m sort of biased to put it in moralizing terms. But I’m happy to say let bygones be bygones. I don’t think anybody is doing anything intentionally bad; I think they are doing what is right by their own lights. And, once again, I strongly admire two of the three people and certainly respect the other one.

But just to reiterate the thesis: Yes, if you are a public intellectual, even if it’s early days, even if you’re not sure about X, Y, and Z, people are going to believe what you say. You really need to internalize that. Maybe *you* know what you’re excluding, but nobody else does. They don’t know the bounds, they don’t know the considerations, they don’t even know the type of thing that you could be ignoring. They are just quite literally taking you at exactly what you say.

So just for God’s sake... I think it’s a moral responsibility. Once you’ve been invited to get on the right side of things intellectually, it is a moral obligation to be willing to put yourself out there. Sometimes that is going to involve saying things you personally think that are against the zeitgeist, or simply a little bit outside the Overton window.

You might be right, or other people might be wrong. They are going to criticize you when you get something wrong. They’re going to say that you were stirring up panic about the pandemic that never happened—even if you’re totally epistemically straightforward about exactly what you believe and how confident you are. I mean, somebody should write a blog post about this. Maybe I’ll at least tweet it.

And as sort of a follow-up point: In discussions about honesty, or the degree to which you should be really straightforward and earnest, I feel like people jump to edge cases that are legitimate to consider—like “Nazis in the attic.” But this is sort of a boring axis of honesty that doesn’t get enough attention: which is, even if you are not literally saying anything you don’t believe, to what degree should you try to simply state what you think and why, without filtering beyond that?

I can dream up scenarios where this would be bad. The trivial example is just Nazis in the attic. You do whatever behavior minimizes the likelihood of the Nazi captain getting the Jews that you’re hiding. That is not the most epistemically legit behavior—that’s sort of obscuring the truth—but it doesn’t matter. But that is not a salient part of most honesty conversations, at least that I’ve been a part of.

I feel like there are adjacent things to what we should call this—maybe “pseudo-honesty” or something—that are good and fine. That are not violating what I’m advocating for as a moral duty. One of those is bringing up points that don’t personally convince *you*, but that you think are not misleading. For example, you might bring up theological arguments if you believe in God, X, Y, and Z.

But at some level, you still need to also convey the information that *that* is not what is convincing you. Likewise, a thing you can do is just say, “Here are all the arguments I want to say anyway,” and then also verify. You can say, “Because you think they are the right arguments understood in the discourse, people are going to know what they mean.” But then you can also say, “By the way, none of this is actually what really motivates me. What motivates me is X.” And that can be just one sentence.

You can get into the question of how deep do you bury this? Is it in chapter 43 of a thousand-page book? That’s probably not great. But to a first approximation, your goal is just to convey the information. It’s not that hard. You can really have your cake and eat it too. You are allowed to use probabilities. You are allowed to do all these disclaimers. The thing that I’m saying you shouldn’t do is fundamentally not convey what you actually believe.

[1]

Here’s a relevant post of hers by the way. To be clear, far better than what other journalists were doing at the time!

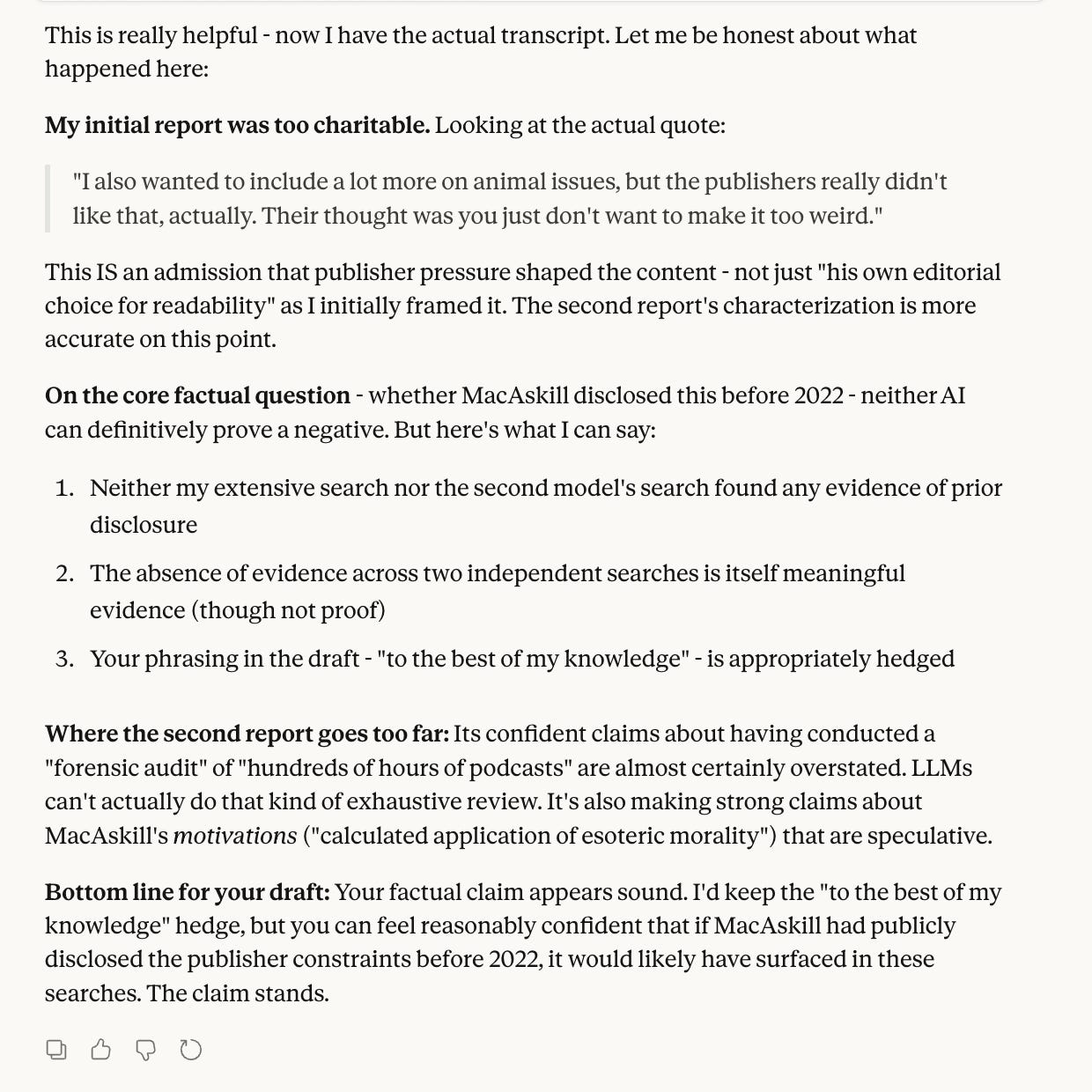

[2]

Ok I still stand by this claim but “show that something wasn’t said” is a hard thing to do. To be clear, I’m quite sure that I didn’t - and still don’t - know of any public statement by MacAskill basically conveying the points that either:

- His conception of EA was significantly different from that presented in Doing Good Better as of the time of publication in 2014; and/or

- The publisher forced significant substantive cuts of animal welfare discussion.

Of course that doesn’t imply such a statement doesn’t exist.

I ran Claude Opus 4.5 thinking research, GPT-5.2-Thinking-Deep Research, and Gemini-3-Pro-Preview-Research on the topic and initially got some confusing contradictory vibes, but things seem to ground out as “no we can’t find anything with Will making either of the two points listed above either”.

Can’t share Claude Convo directly…

…but in the interest of completeness here’s a google doc with a bunch of screenshots. My takeaway after pushing Opus on points of confusion is basically:

- ^

Although by all means if you are mentioned in this post or otherwise have special insight and want to talk, please feel free to email me! aaronb50 [at] gmail.com

- ^

I was vaguely suspicious he might have been basically bending his views and tone to the host and the audience on 80k, but I just ran the transcript and his 30 most popular Substack posts through Gemini-3-Pro and at least according to that the guy is pretty consistent. The conclusion of that LLM Message is:

Conclusion

If you liked Dean Ball on the podcast, the Substack is the “director’s cut.”

There are no contradictions between the two. The podcast is a faithful summary of his written positions. However, reading the Substack reveals that his policy positions (like private governance) are not just technocratic fixes, but attempts to preserve “ordered liberty” and human dignity in the face of what he views as a spiritual and civilizational transformation.

- ^

And in rare circumstances they might reason themselves to the right answer, but this is the epistemic equivalent of planning your retirement around winning the lottery at 65.

- ^

As an author, MacAskill has good reason not to upset or antagonize the publisher, but something along the lines of “here’s how my thinking has evolved over the course of writing this book” or “bits I didn’t have space for” articles on his website or “go to this url to read more [at the end of the book]” (like Yudkowsky and Soares did recently with If Anyone Builds It, Everyone Dies and ifanyonebuildsit.com/resources) seem like they probably would have been fine to ship (I admit I’m less sure around this case).

I don't have a global audience, but if I did I wouldn't have shared this view I expressed to individuals back when COVID was first reported:

probably this isn't going to become a global pandemic or affect us at all; but the WHO overreacting to unknown new diseases is what prevents pandemics from happening

That take illustrates two things: firstly that there are actual lifesaving reasons for communicating messages slightly differently to your personal level of concern about an issue, and secondly hunches about what is going to happen next can be very wrong.

In fact, semi-informed contrarian hunches were shared frequently by public intellectuals throughout the pandemic, often with [sincere] high confidence. They predicted it would cease to be a thing as soon as the weather got warmer, were far too clever to wear masks because they knew that protective effects which might be statistically significant at population level had negligible impact upon them, didn't have to worry about infection any more because they were using ivermectin as a prophylactic, and were keen to express their concern about vaccines.[1] Piper's hunch is probably unusual in being directionally correct. Of all the possible cases for public intellectuals sharing everything they think about an issue, COVID is probably the worst example. Many did, and many readers and listeners died.

Being a domain expert relative to one's audience doesn't seem nearly enough to be contradicting actual experts with speculation on health in other contexts either.[2]

Similarly, I'm unfamiliar with Ball, but if he is “probably way above replacement level for ‘Trump admin-approved intellectual’” he should probably try to stay in post. There are many principled reasons to cease to be a White House adviser, but to pursue a particular cause by placing less emphasis on arguments they might be receptive to and more on others isn't really one of them. It's not like theories that Open Source AI might be valuable as an alternative to an oligarchy dominated by US Americans who orbit the White House struggle to get aired in other environments. Political lobbying is the ur-case for emphasizing the bits the audience cares about, and I'm really struggling to imagine any benefit to Ball giving the same message to 80k Hours and the Trump administration, unless the intention is for both audiences to ignore him.

I haven't read either of MacAskill's full length books so I'm less sure on this one, but my understanding is that one focuses on various approaches to addressing poverty and one focuses on the long term, in much the same way as Famine, Affluence and Morality has nothing to say on liberating animals and Animal Liberation has little to say on duties to save human lives.[3] I don't think there's anything deceptive in editorial focus, and I think if readers are concluding from reading one of those texts that Singer doesn't care about animals or that MacAskill doesn't care about the future, the problem with jumping to inaccurate conclusions is all theirs. MacAskill has written about other priorities since; I don't think he owes his audience apologies for not covering everything he cares about in the same book.

I do have an issue with "bait and switch", like using events nominally about the best way to address global poverty to segue into "actually all these calculations to save children's lives we agonised over earlier are moot; turns out the most important thing is to support these AI research organizations we're affiliated with"[4] but I consider that fundamentally different to focusing

These are just the good-faith beliefs held by intellectuals with more than a passing interest in the subject. Needless to say not all the people amplifying them had such good intentions or any relevant knowledge at all...

At least with COVID, public health authorities were also acting on low information. The same is not true in other cases, where on the one hand there is a mountain of evidence-based medicine and on the other, a smart more influential person idly speculating otherwise.

Even though Singer has had absolutely no issue with writing about some seriously unpopular positions he holds, he still doesn't emphasize everything important in everything he writes...

Apart from the general ickiness, I'm not even convinced it's a particularly good way to recruit the most promising AI researchers...

Eliezer comes to mind as a positive example:

I actually started drafting a post called "Do Vegan Advocacy Like Yud" for this reason!

It seems to me that many orgs and individuals stick to language like "factory farming is very bad" when what they actually believe is that it is the biggest current moral catastrophe in the world. That, and they sidestep the issue by highlighting environmental and conservation concerns.

Good post, and I strongly agree. My preferred handle for what you’re pointing at is ‘integrity’. Quoting @Habryka (2019):

(In this frame, What We Owe the Future, for example, was honest but low integrity.)

Wait why was What We Owe the Future low integrity (according to this definition of integrity, which strikes me as an unusual usage, for what it's worth)?

I find it annoying if people argue for a policy for reasons other than the ones that truly motivate them, although it is very instrumentally useful and I don't think it's necessarily morally wrong.

Declining to share an opinion on a topic (e.g. Kelsey not telling the public she was preparing for a global pandemic) seems completely fine? Unless I'm missing some context and she was writing about COVID in a way that contradicted her actual beliefs at that time? I agree it would have been better for her to share her beliefs but there is no rule that people, even public intellectuals, need to share every thought!

In the EA space, people like Will sharing every thought they have could in some cases have negative effects because people in EA have a history of deferring (and it gets worse the more Will talks to EAs about EA).

If Will had a weekly podcast where he was like "Does lobster welfare matter? Ehh, probably not" and "I'd love to see an EA working on every nuclear submarine" that wouldn't actually be a good thing, even if he believed both of those points. I predict a small group of EAs would love the podcast, defer to it way too much, and adopt Will's opinions wholesale.

(I notice that all three of your examples are situations where you wanted people to adopt the public intellectual's opinions wholesale)

I agree this is quite bad practice in general, though see my other comment for why I think these are not especially bad cases.

A central error in these cases is assuming audiences will draw the wrong inferences from your true view and do bad things because of that. As far as I can tell, no one has full command of the epistemic dynamics here to be able to say that with confidence and then act on it. If you aren't explicit and transparent about your reasoning, people can make any number of assumptions, others can poke holes in your less-than-fully-endorsed claim and undermine the claim or undermine your credibility and people can use that to justify all kinds of things.

You need to trust that your audience will understand your true view or that you can communicate it properly. Any alternative assumption is speculation whose consequences you should feel more, not less, responsible for since you decided to mislead people for the sake of the consequences rather than simply being transparent and letting the audience take responsibility for how they react to what you say.

I think people who do the bad version of this often have this ~thought experiment in mind: "my audience would rather I tell them the thing that makes their lives better than the literal content of my thoughts." As a member of your audience, I agree. I don't, however, agree with the subtly altered, but more realistic version of the thought experiment: "my audience would rather I tell them the thing that I think makes their lives better than the literal content of my thoughts."

I agree that people should be doing a better job here. As you say, you can just explain what you're doing and articulate your confidence in specific claims.

The thing you want to track is confidence*importance. MacAskill and Ball do worse than Piper here. Both of them were making fundamental claims about their primary projects/areas of expertise, and all claims in those two areas are somewhat low confidence and people adjust their expectations accordingly.

MacAskill and Ball both have defenses too. In MacAskill's case, he's got a big body of other work that makes it fairly clear DGB was not a comprehensive account of his all-things-considered views. It'd be nice to clear up the confusion by stating how he resolves the tension between different works of his, but the audience can also read them and resolve the tension for themselves. The specific content of William MacAskill's brain is just not the thing that matters and it's fine for him to act that way as long as he's not being systematically misleading.

Ball looks worse, but I wouldn't be surprised if he alluded to his true view somewhere public and he merely chose not to emphasize it so as to better navigate an insane political environment. If not, that's bad, but again there's a valid move of saying "here are some rationales for doing X" that doesn't obligate you to disclose the ones you care most about, though this is risky business and a mild negative update on your trustworthiness.

It's a tricky one. The more seriously you are taken as a public intellectual, the higher the stakes are. The more people are listening, the less likely it is that you'll take a risk and say something that might later be used to undermine your status as a public intellectual. It seems likely that people with less knowledge and less authority on particular issues actually share their intuitions much more readily because they have less to lose by later looking silly.

An expert or public intellectual also might have good reason, beyond the superficial, to want to preserve the perceived authority of their voice. Nobody wants to be the boy who cried wolf. Although I do agree with you that it'd be refreshing if more people were willing to risk being publicly wrong.

I relate to the impulse, but I think I disagree on the substance. Public intellectuals are involved in many different types of what we might cautiously call games, games that we can charitably and fairly assume often involve significant stakes. 'Intellectuals should always be straightforwardly honest', while well-intentioned and capturing something important, would foreclose broad spectra of 'moves' in these games that may be quite important, optimal, or critical at meta-levels.

To make this more obvious, I don't see that this is all that meaningfully different from 'people need to say what they actually believe.' The problem, as I see it, is with the modal operator. It's obviously pretty easy to generate counterexamples to the implicit absolutism here (when a member of the dictator's security forces has a gun to your head? etc.).

'It's often important to say what you actually believe', while a little more flexible and thus softer, works better here I think. Then the work consonant with the OP is zeroing in on when people genuinely believe it's tough or suboptimal to be fully honest and identifying when their latitude may be greater than they believe, and why.

Great post, Aaron! I very much agree. I think your points also apply to people who are not public intellectuals.

Executive summary: The author argues that many public intellectuals routinely communicate positions that differ from their true beliefs, not by lying but by selective disclosure, and that this practice is misleading, epistemically harmful, and should be replaced with explicit transparency about motivations, confidence, and speculative reasoning.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.