Since writing The Precipice, one of my aims has been to better understand how reducing existential risk compares with other ways of influencing the longterm future. Helping avert a catastrophe can have profound value due to the way that the short-run effects of our actions can have a systematic influence on the long-run future. But it isn't the only way that could happen.

For example, if we advanced human progress by a year, perhaps we should expect to see us reach each subsequent milestone a year earlier. And if things are generally becoming better over time, then this may make all years across the whole future better on average.

I've developed a clean mathematical framework in which possibilities like this can be made precise, the assumptions behind them can be clearly stated, and their value can be compared.

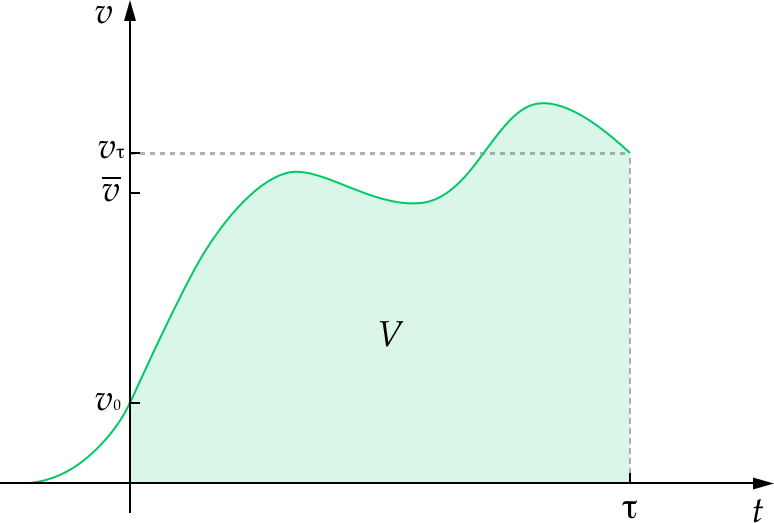

The starting point is the longterm trajectory of humanity, understood as how the instantaneous value of humanity unfolds over time. In this framework, the value of our future is equal to the area under this curve and the value of altering our trajectory is equal to the area between the original curve and the altered curve.
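Here is a minimal numerical sketch that makes the two areas concrete. The trajectory `v(t)`, the 5% improvement and the end time `T` are hypothetical placeholders, not values from the paper. The integrals are estimated with the trapezoidal rule.

```python
import math

def area(f, t0, t1, n=10_000):
    """Trapezoidal estimate of the integral of f over [t0, t1]."""
    h = (t1 - t0) / n
    return h * (0.5 * (f(t0) + f(t1)) + sum(f(t0 + i * h) for i in range(1, n)))

def v(t):
    # Hypothetical trajectory: instantaneous value rises and then levels off.
    return 1.0 - math.exp(-t / 100.0)

def v_altered(t):
    # Hypothetical altered trajectory: 5% more value at every moment.
    return 1.05 * v(t)

T = 1_000.0  # assumed end time of humanity, in years from now

value_of_future = area(v, 0.0, T)                                # area under the curve
value_of_change = area(lambda t: v_altered(t) - v(t), 0.0, T)    # area between the curves
print(round(value_of_future, 1), round(value_of_change, 1))
```

The same `area` helper works for any pair of curves. This is what lets quite different kinds of trajectory change be compared on a single scale.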

This allows us to compare the value of reducing existential risk to other ways our actions might improve the longterm future, such as improving the values that guide humanity, or advancing progress.

Ultimately, I draw out and name 4 idealised ways our short-term actions could change the longterm trajectory:

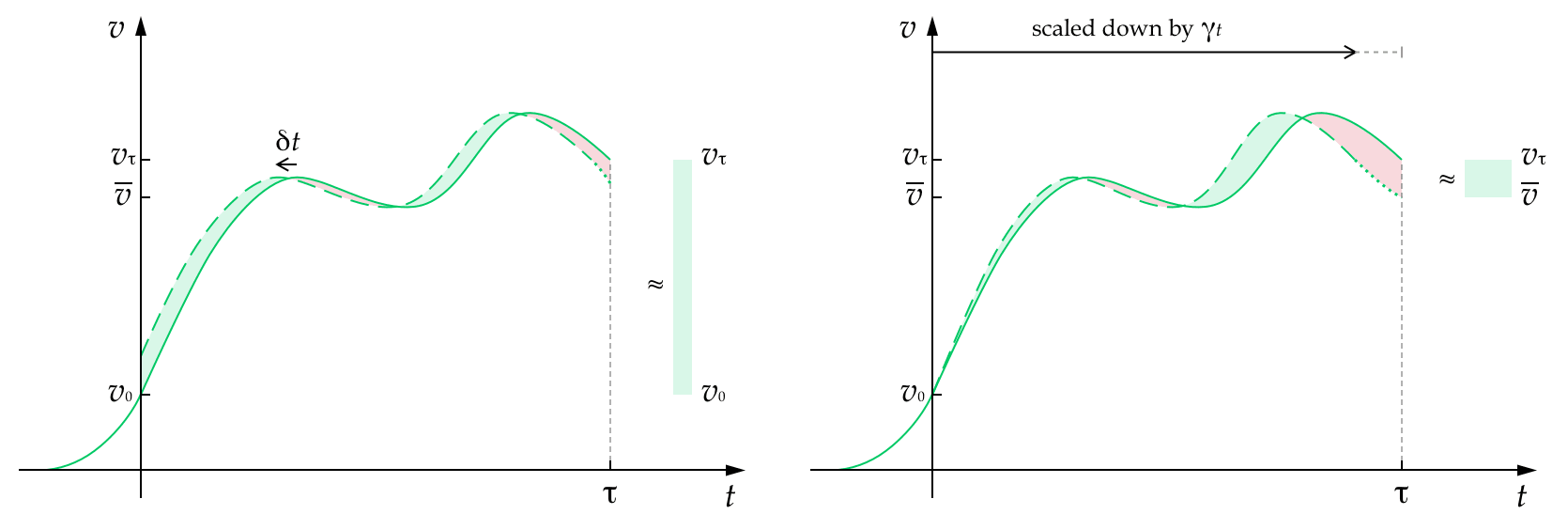

- advancements

- speed-ups

- gains

- enhancements

And I show how these compare to each other, and to reducing existential risk.
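The following minimal sketch shows how two of these changes, along with reducing existential risk, scale with duration and with average value. The definitions are assumptions made for illustration. A gain adds a constant `c` to v(t). An enhancement multiplies v(t) by `1 + e`. Reducing risk raises by `dp` the probability that the future is realised at all. Advancements and speed-ups are not modelled here, and all function names are hypothetical.

```python
def area(f, t0, t1, n=10_000):
    """Trapezoidal estimate of the integral of f over [t0, t1]."""
    h = (t1 - t0) / n
    return h * (0.5 * (f(t0) + f(t1)) + sum(f(t0 + i * h) for i in range(1, n)))

def gain_value(v, T, c):
    # Assumed definition: add c to v(t) at every time. v is unused, because
    # the value is just the area of a strip of height c: c * T.
    return area(lambda t: c, 0.0, T)

def enhancement_value(v, T, e):
    # Assumed definition: scale v(t) by (1 + e), worth e times the whole area.
    return area(lambda t: e * v(t), 0.0, T)

def risk_reduction_value(v, T, dp):
    # Assumed simplification: raising the chance the future happens by dp
    # is worth dp times the value of that future.
    return dp * area(v, 0.0, T)

def flat(t):
    return 1.0   # hypothetical flat trajectory

def doubled(t):
    return 2.0   # same shape, twice the average value

for name, value_fn in [("gain", gain_value),
                       ("enhancement", enhancement_value),
                       ("risk reduction", risk_reduction_value)]:
    base = value_fn(flat, 1_000.0, 0.01)
    longer = value_fn(flat, 2_000.0, 0.01) / base    # double the duration
    richer = value_fn(doubled, 1_000.0, 0.01) / base # double the average value
    print(f"{name}: x{longer:.1f} with duration, x{richer:.1f} with value")
```

Under these assumed definitions, the gain's value doubles only when the duration doubles. The enhancement and the risk reduction double with either.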

My hope is that this framework, and this categorisation of some of the key ways we might hope to shape the longterm future, can improve our thinking about longtermism.

Some upshots of the work:

- Some ways of altering our trajectory only scale with humanity's duration or its average value — but not both. There is a serious advantage to those that scale with both: speed-ups, enhancements, and reducing existential risk.

- When people talk about 'speed-ups', they are often conflating two different concepts. I disentangle these into advancements and speed-ups, showing that we mainly have advancements in mind, but that true speed-ups may yet be possible.

- The value of advancements and speed-ups depends crucially on whether they also bring forward the end of humanity. When they do, they have negative value.

- It is hard for pure advancements to compete with reducing existential risk as their value turns out not to scale with the duration of humanity's future. Advancements are competitive in outcomes where value increases exponentially up until the end time, but this isn't likely over the very long run. Work on creating longterm value via advancing progress is most likely to compete with reducing risk if the focus is on increasing the relative progress of some areas over others, in order to make a more radical change to the trajectory.
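Below is a worked version of the advancement arithmetic. The assumptions are mine rather than the paper's. v(t) is the instantaneous value at time t. Humanity's trajectory would otherwise have ended at T. An advancement by δ replaces v(t) with v(t + δ). In the fixed-end case, v is assumed to be defined a little beyond T, meaning the value the curve would have gone on to reach.

```latex
% Case 1: the end of humanity is brought forward too (new end time T - \delta)
\Delta V_{\text{fwd}} = \int_0^{T-\delta} v(t+\delta)\,dt - \int_0^{T} v(t)\,dt
                      = \int_\delta^{T} v(s)\,ds - \int_0^{T} v(s)\,ds
                      = -\int_0^{\delta} v(s)\,ds \;<\; 0 \quad \text{if } v > 0 \text{ on } [0,\delta]

% Case 2: the end time stays at T
\Delta V_{\text{fixed}} = \int_0^{T} v(t+\delta)\,dt - \int_0^{T} v(t)\,dt
                        = \int_T^{T+\delta} v(s)\,ds - \int_0^{\delta} v(s)\,ds
                        \approx \delta\,\bigl(v(T) - v(0)\bigr)

% Share of the whole future V = \int_0^T v(t)\,dt captured in case 2
\text{Plateau } (v \approx c \text{ for most of } [0,T]):\quad
  \frac{\Delta V_{\text{fixed}}}{V} \approx \frac{\delta\,(c - v(0))}{c\,T} \lesssim \frac{\delta}{T}

\text{Exponential } (v(t) = v(0)\,e^{gt}):\quad
  \frac{\Delta V_{\text{fixed}}}{V} \approx \frac{\delta\,v(0)\,(e^{gT}-1)}{v(0)\,(e^{gT}-1)/g} = \delta g
```

On a plateau, the advancement's share of total value shrinks as the future lengthens. Under sustained exponential growth, it stays at roughly δg however long the future lasts.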

The work is appearing as a chapter for the forthcoming book, Essays on Longtermism, but as of today, you can also read it online here.

Speed-ups

You write: “How plausible are speed-ups? The broad course of human history suggests that speed-ups are possible,” and, “though there is more scholarly debate about whether the industrial revolution would have ever happened had it not started in the way it did. And there are other smaller breakthroughs, such as the phonetic alphabet, that only occurred once and whose main effect may have been to speed up progress. So contingent speed-ups may be possible.”

This was the section of the paper I was most surprised / confused by. You seemed open to speed-ups, but it seems to me that a speed-up for the whole rest of the future is extremely hard to do.

The more natural thought is that, at some point in time, we either hit a plateau, or hit some hard limit of how fast v(.) can grow (perhaps driven by cubic or quadratic growth as future people settle the stars). But if so, then what looks like a “speed up” is really an advancement.

I really don’t see what sort of action could result in a speed-up across the whole course of v(.), unless the future is short (e.g. 1000 years).
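The plateau intuition in this comment can be checked numerically. This minimal sketch assumes a hypothetical logistic curve for v(.) that levels off at 1.0 a couple of centuries from now. It models a "speed-up" as replacing v(t) with v(λt) while humanity's end time T stays fixed. The curve, the factor λ and the helper names are illustrative and are not taken from the paper.

```python
import math

def area(f, t0, t1, n=100_000):
    """Trapezoidal estimate of the integral of f over [t0, t1]."""
    h = (t1 - t0) / n
    return h * (0.5 * (f(t0) + f(t1)) + sum(f(t0 + i * h) for i in range(1, n)))

def v(t):
    # Hypothetical logistic trajectory: midpoint at t = 100, plateau at 1.0.
    return 1.0 / (1.0 + math.exp(-(t - 100.0) / 10.0))

LAMBDA = 1.1  # hypothetical speed-up factor, with the end time held fixed

def speed_up_value(T):
    # Area between the sped-up curve v(LAMBDA * t) and the original v(t).
    return area(lambda t: v(LAMBDA * t) - v(t), 0.0, T)

for T in (1_000.0, 10_000.0):
    print(T, round(speed_up_value(T), 2))
```

Under these assumptions, all of the extra value comes from the transition before the plateau. It therefore stays at roughly the same amount (about 9.1 here) whether the future lasts 1,000 or 10,000 years. This is the behaviour of an advancement, not of a change that scales with duration.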

I think "open to speed-ups" is about right. As I said in the quoted text, my conclusion was that contingent speed-ups "may be possible". They are not an avenue for long-term change that I'm especially excited about. I included them here mainly for two reasons. First, I wanted to distinguish them from advancements, since the two are often run together. Second, they fall out very naturally as one of the kinds of marginal change to the trajectory whose value doesn't depend on the details of the curve.

That said, it sounds like I think they are a bit more lik...