It's fashionable these days to ask people about their AI timelines. And it's fashionable to have things to say in response.

But relative to the number of people who report their timelines, I suspect that only a small fraction have put in the effort to form independent impressions about them. And, when asked about their timelines, I don't often hear people also reporting how they arrived at their views.

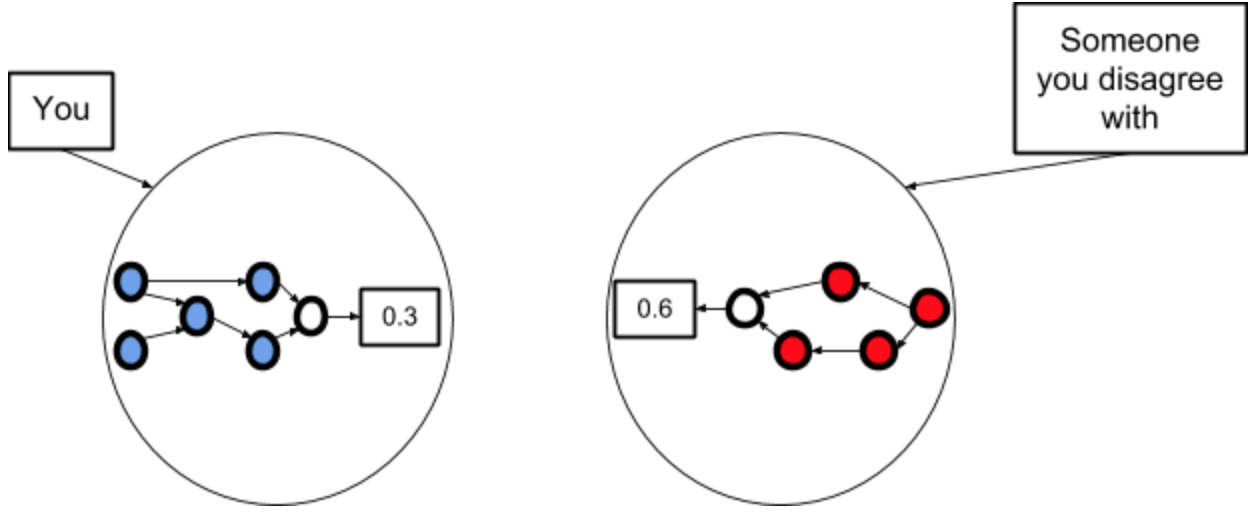

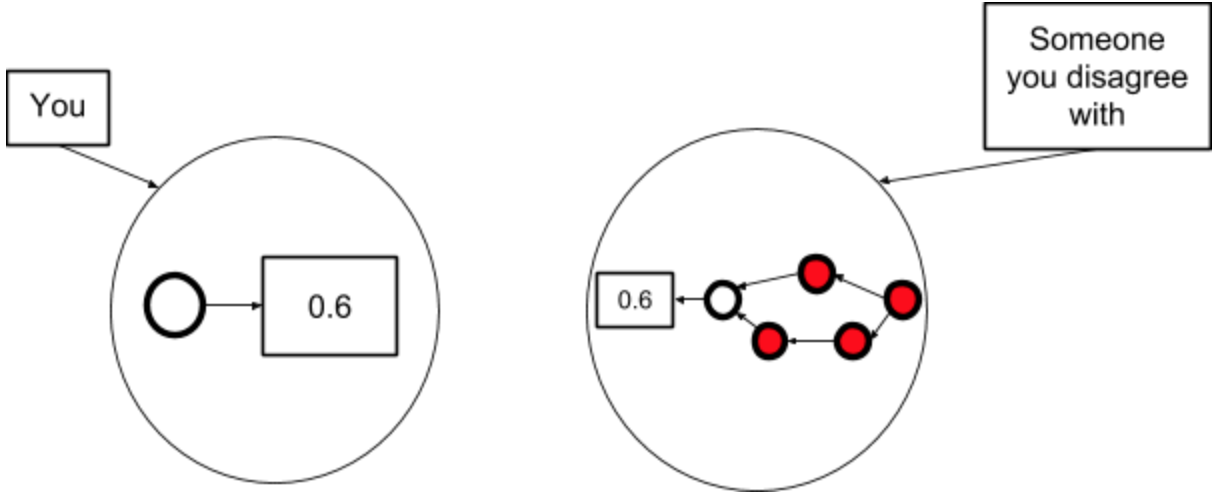

If this is true, then I suspect everyone is updating on everyone else's views as if they were independent impressions, when in fact all our knowledge about timelines stems from the same small group of (say) ten people.

This could have several worrying effects:

- People's timelines being overconfident (i.e. too resilient), because they think they have more evidence than they actually do.

- In particular, people in this community could come to believe that we have the timelines question pretty worked out (when we don't), because they keep hearing the same views being reported.

- Weird subgroups forming, where people who talk to each other most converge on similar timelines without good reason.[1]

- People using faulty deference processes. Deference is hard and confusing, and if you don't discuss how you're deferring, you're never forced to check whether your process makes sense.

So: if (like most people) you don't have time to form your own views about AI timelines, then I suggest being clear about who you're deferring to (and how), rather than just saying "median 2040" or something.[2]

And: if you're asking someone about their timelines, also ask how they arrived at their views.

(Of course, the arguments here apply more widely too. Whilst I think AI timelines are a particularly worrying case, being unclear about whether and how you're deferring is generally a poor way of communicating. Discussions about p(doom) are another case where I suspect we could benefit from being clearer about deference.)

Finally: if you have 30 seconds and want to help work out who people do in fact defer to, take the timelines deference survey!

Thanks to Daniel Kokotajlo and Rose Hadshar for conversation/feedback, and to Daniel for suggesting the survey.

[1] This sort of thing may not always be bad. There should be people doing serious work based on a range of different assumptions about timelines. And in practice, since people tend to work in groups, this will often mean groups doing serious work based on different assumptions about timelines.

[2] Here are some things you might say that exemplify clear communication about deference:

- "I plugged my own numbers into the bio anchors framework (after 30 minutes of reflection) and my median is 2030. I haven't engaged with the report enough to know if I buy all of its assumptions, though."

- "I just defer to Ajeya's timelines because she seems to have thought the most about it"

- "I don't have independent views and I honestly don't know who to defer to"

First of all, thank you for reporting, in specific terms, whom you've deferred to and in what ways. Second, thank you for putting in the extra effort not only to name particular people but also to make your reasoning more transparent and legible.

I respect Matthew because, when I read what he writes, I tend to agree with about half of his points and disagree with the other half. That's what makes him interesting. There are barriers to posting on the EA Forum, some real and some only perceived, such as the expectation of an excessively high burden of rigour. These barriers lead people to post on social media or other forums instead when they can't resist the urge to express a novel viewpoint that could advance progress in EA. Matthew is one of the people I think of when I wish more insightful people were willing to post on the EA Forum.

I don't agree with everything you've presented from Matthew here, or with everything you've said yourself. I might come back later, when I have more time, to specify which parts I agree and disagree with. Right now, though, I just want to positively reinforce you for writing a comment that is the kind of feedback I'd like to see more of in EA.