A recent post by Simon_M argued that StrongMinds should not be a top recommended charity (yet), and many people seemed to agree. While I think Simon raised several useful points regarding StrongMinds, he didn't engage with the cost-effectiveness analysis of StrongMinds that I conducted for the Happier Lives Institute (HLI) in 2021 and justified this decision on the following grounds:

“Whilst I think they have some of the deepest analysis of StrongMinds, I am still confused by some of their methodology, it’s not clear to me what their relationship to StrongMinds is.”

By failing to discuss HLI’s analysis, Simon’s post presented an incomplete and potentially misleading picture of the evidence base for StrongMinds. In addition, some of the comments seemed to call into question the independence of HLI’s research. I’m publishing this post to clarify the strength of the evidence for StrongMinds, to affirm HLI’s independence, and to acknowledge what we’ve learned from this discussion.

I raise concerns with several of Simon’s specific points in a comment on the original post. Here, I respond to four general questions raised by Simon’s post that were too long to include in my comment. I briefly summarise the issues below and then discuss each in more detail.

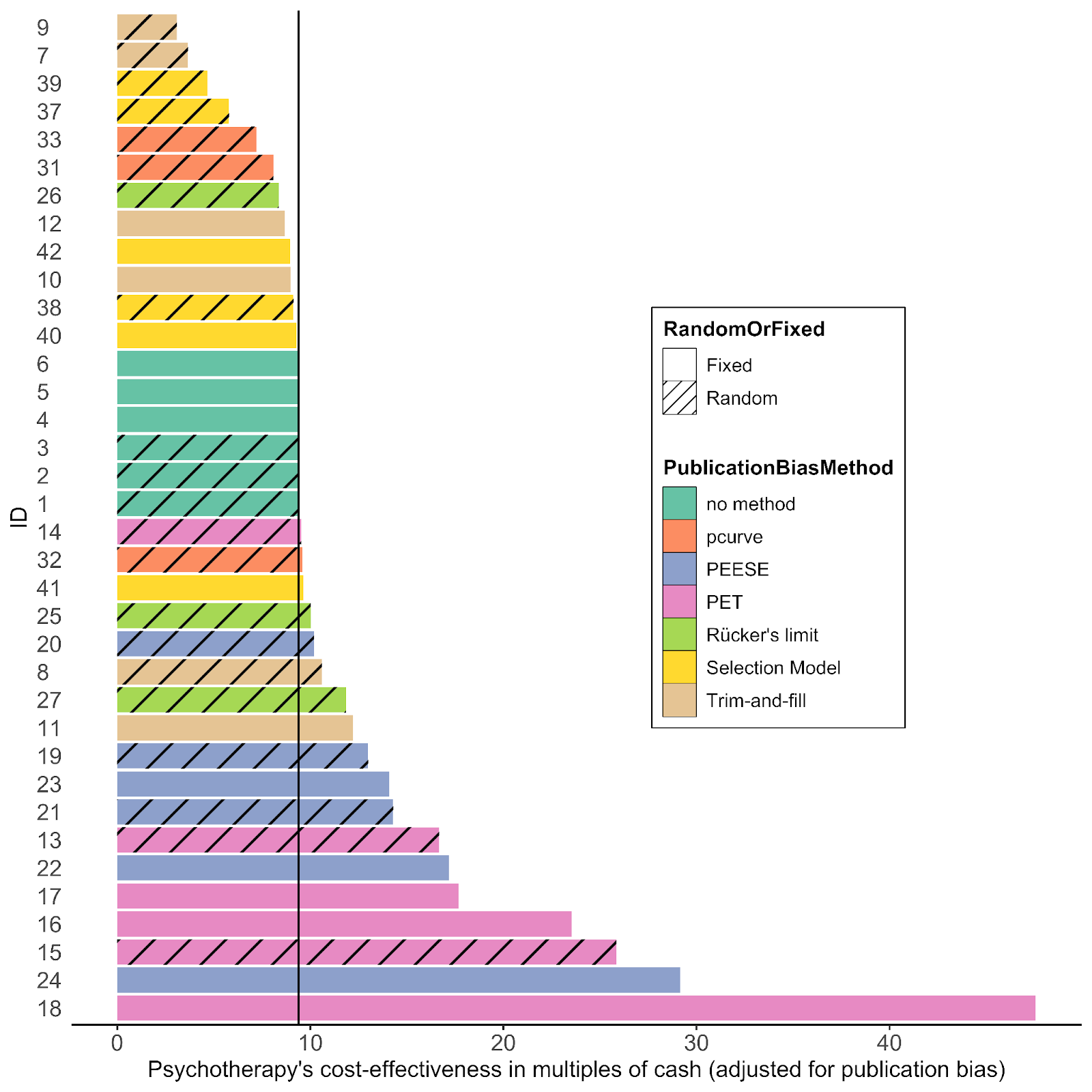

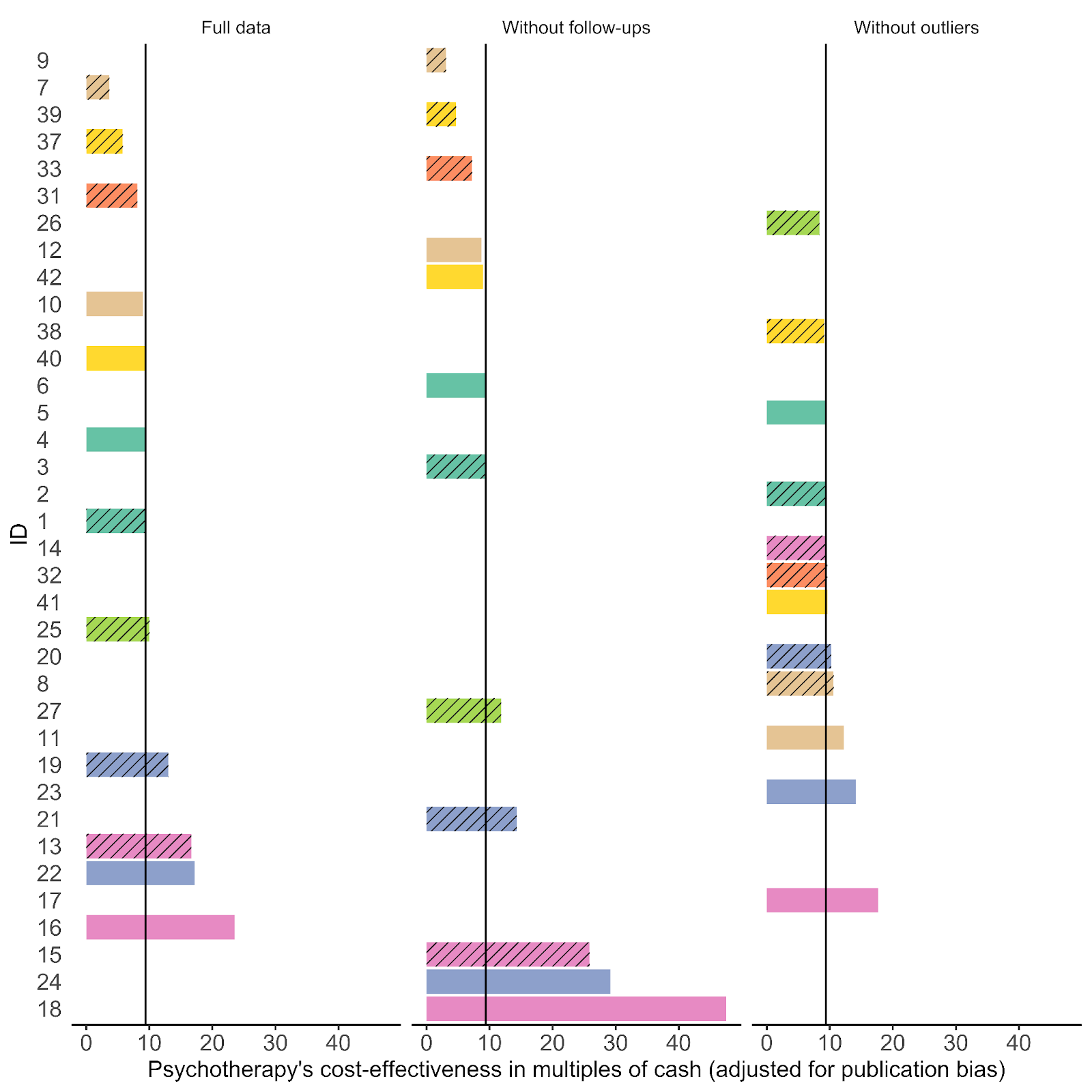

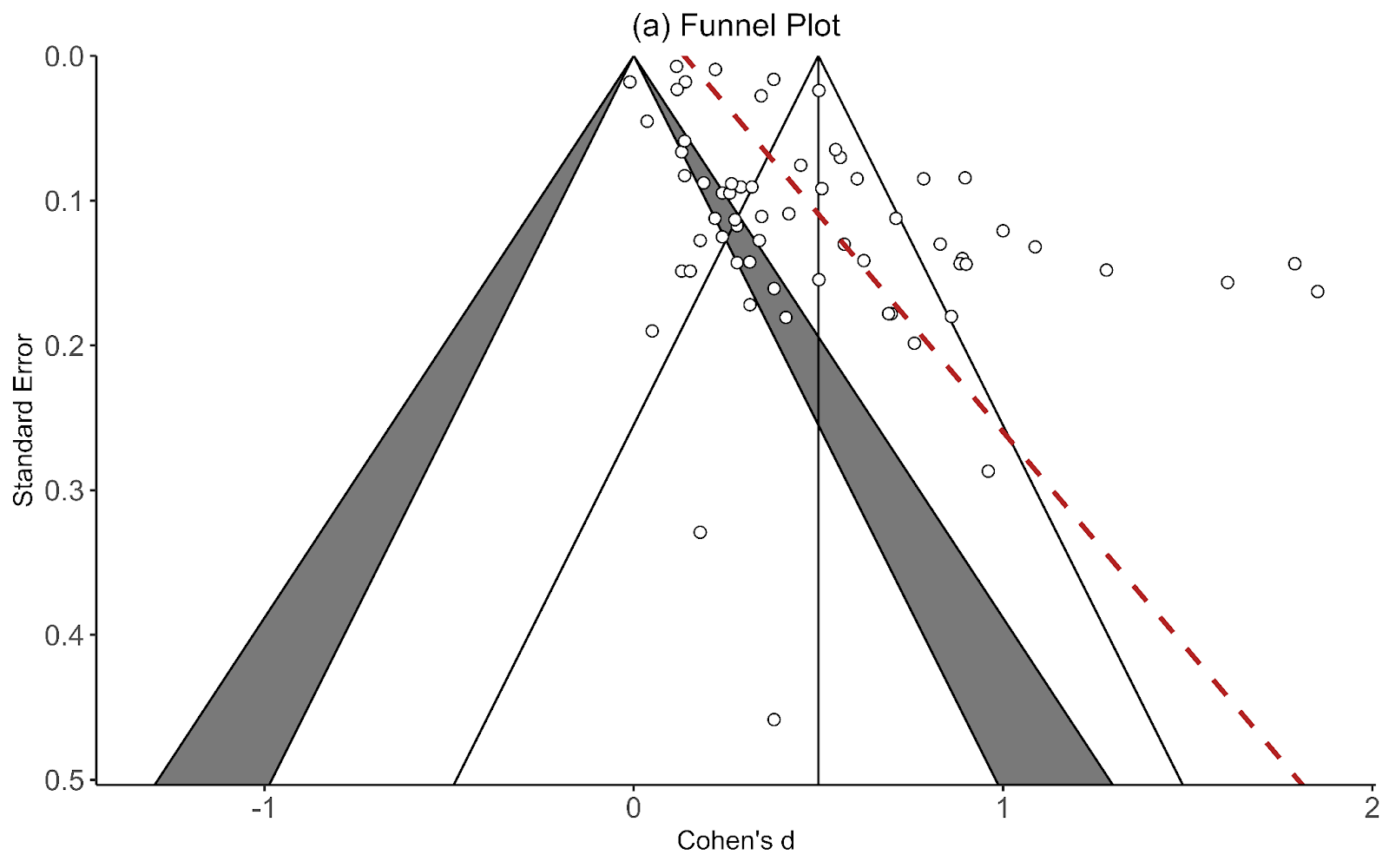

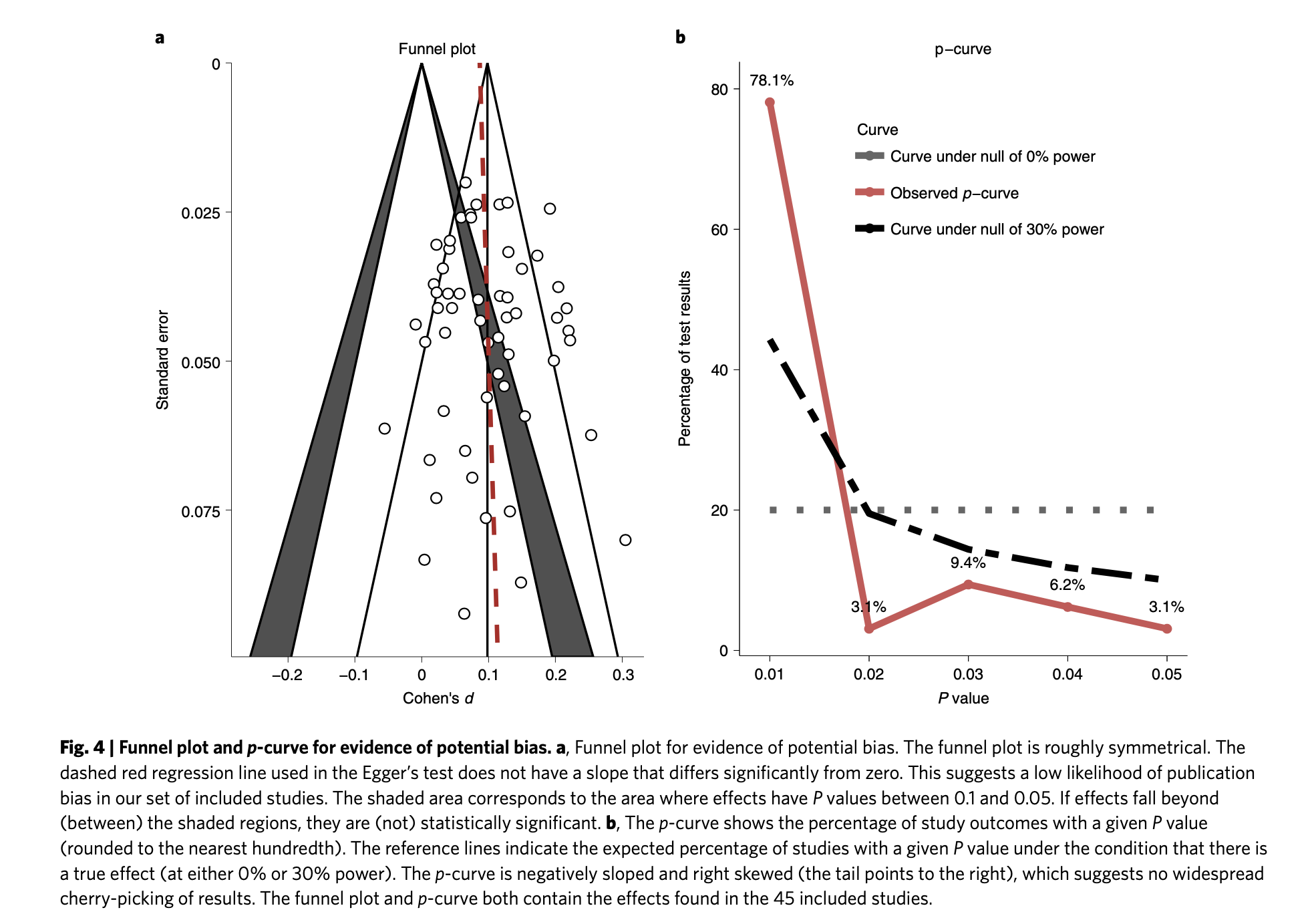

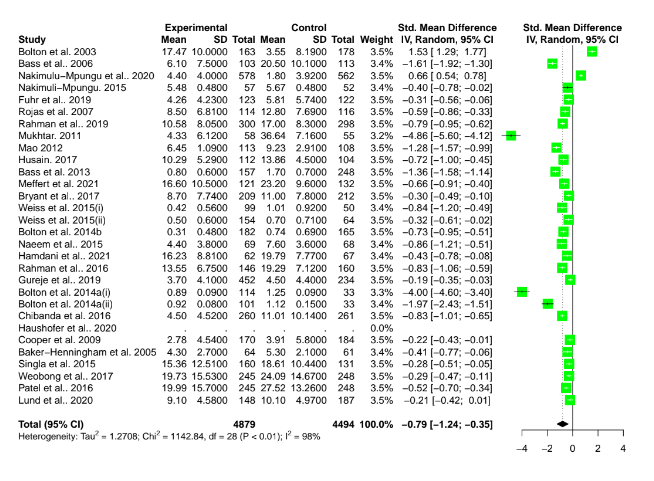

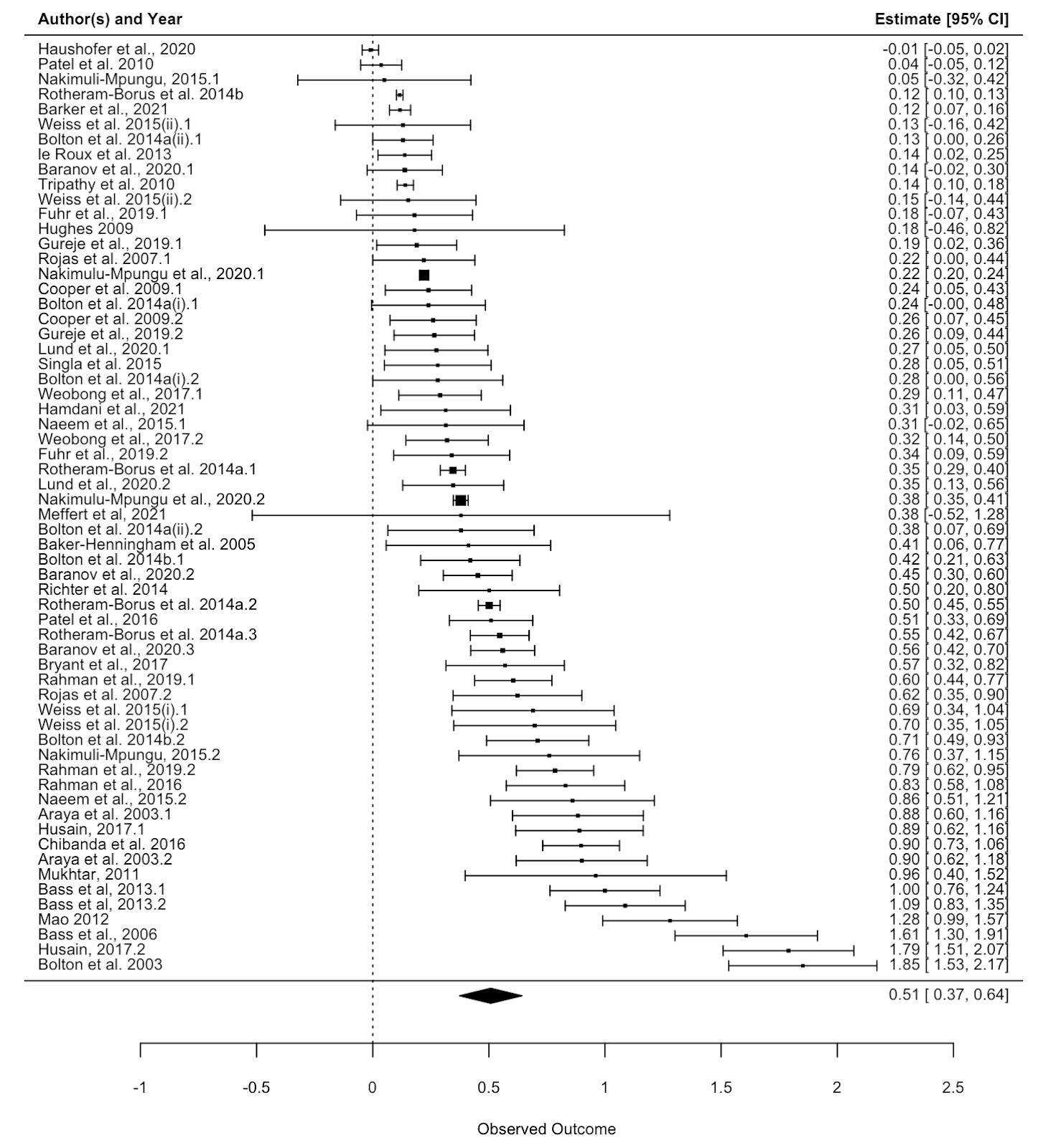

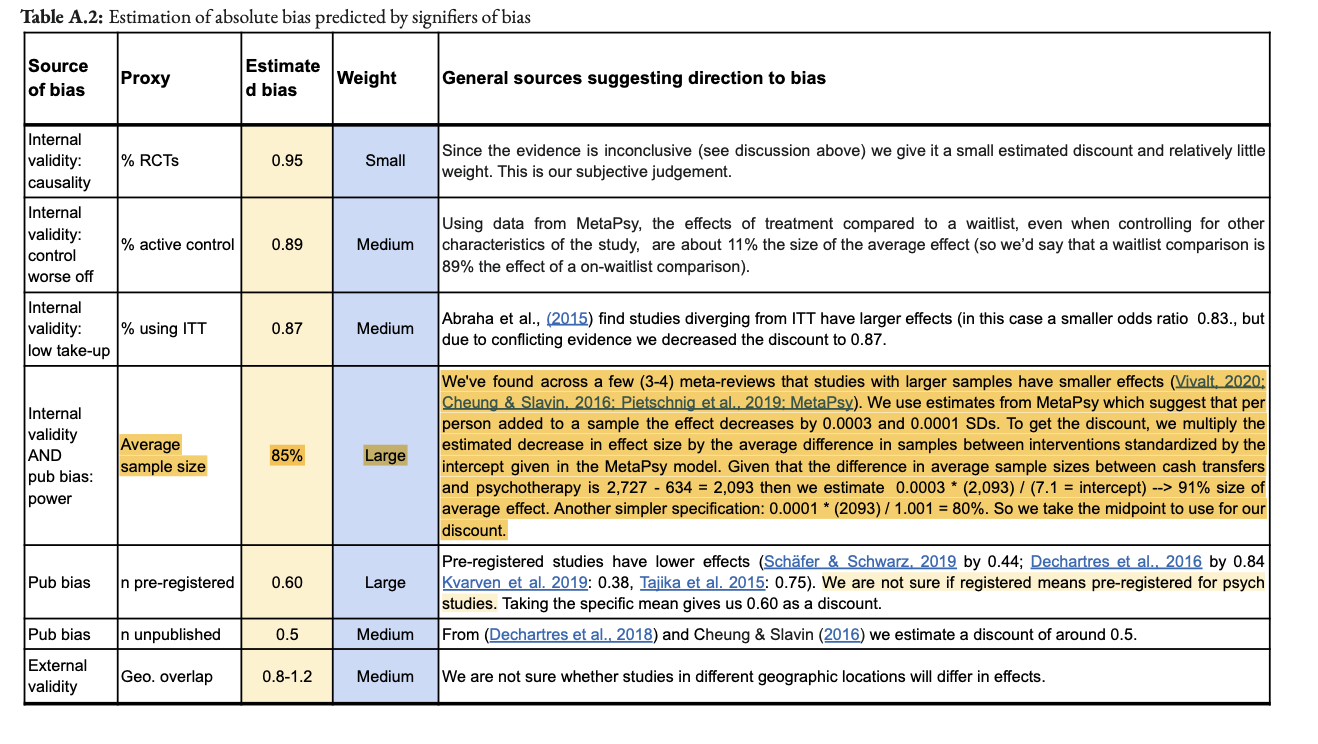

1. Should StrongMinds be a top-rated charity? In my view, yes. Simon claims the conclusion is not warranted because StrongMinds’ specific evidence is weak and implies implausibly large results. I agree these results are overly optimistic, so my analysis doesn’t rely on StrongMinds’ evidence alone. Instead, the analysis is based mainly on evidence synthesised from 39 RCTs of primarily group psychotherapy deployed in low-income countries.

2. When should a charity be classed as “top-rated”? I think that a charity could be considered top-rated when there is strong general evidence OR charity-specific evidence that the intervention is more cost-effective than cash transfers. StrongMinds clears this bar, despite the uncertainties in the data.

3. Is HLI an independent research institute? Yes. HLI’s mission is to find the most cost-effective giving opportunities to increase wellbeing. Our research has found that treating depression is very cost-effective, but we’re not committed to it as a matter of principle. Our work has just begun, and we plan to publish reports on lead regulation, pain relief, and immigration reform in the coming months. Our giving recommendations will follow the evidence.

4. What can HLI do better in the future? Communicate better and update our analyses. We didn’t explicitly discuss the implausibility of StrongMinds’ data in our work. Nor did we push StrongMinds to make more reasonable claims when we could have done so. We acknowledge that we could have done better, and we will try to do better in the future. We also plan to revise and update our analysis of StrongMinds before Giving Season 2023.

1. Should StrongMinds be a top-rated charity?

I agree that StrongMinds’ claims of curing 90+% of depression are overly optimistic, and I don’t rely on them in my analysis. This figure mainly comes from StrongMinds’ pre-post data rather than a comparison between a treatment group and a control. These data will overstate the effect because depression scores tend to decline over time due to a natural recovery rate. If you monitored a group of depressed people and provided no treatment, some would recover anyway.
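
To make the pre-post problem concrete, here is a minimal sketch in Python. The counts are hypothetical and chosen only for illustration; they are not StrongMinds’ data, and `recovery_share` is a helper name I made up for this example. It shows how a pre-post figure credits the programme with all recoveries, while a controlled comparison subtracts the recoveries that would have happened anyway.

```python
def recovery_share(recovered: int, total: int) -> float:
    """Share of participants no longer classed as depressed at follow-up."""
    if total <= 0:
        raise ValueError("total must be positive")
    return recovered / total


# Hypothetical counts, chosen only to illustrate the logic (not real data).
treated_share = recovery_share(recovered=80, total=100)
control_share = recovery_share(recovered=50, total=100)  # natural recovery

# A pre-post comparison attributes every recovery to the programme.
pre_post_estimate = treated_share

# A controlled comparison removes the recovery seen without treatment.
controlled_estimate = treated_share - control_share

print(f"pre-post estimate:   {pre_post_estimate:.0%}")
print(f"controlled estimate: {controlled_estimate:.0%}")
```

In this made-up example, the pre-post figure is much larger than the controlled one, even though the underlying treatment is identical. The gap is entirely natural recovery.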

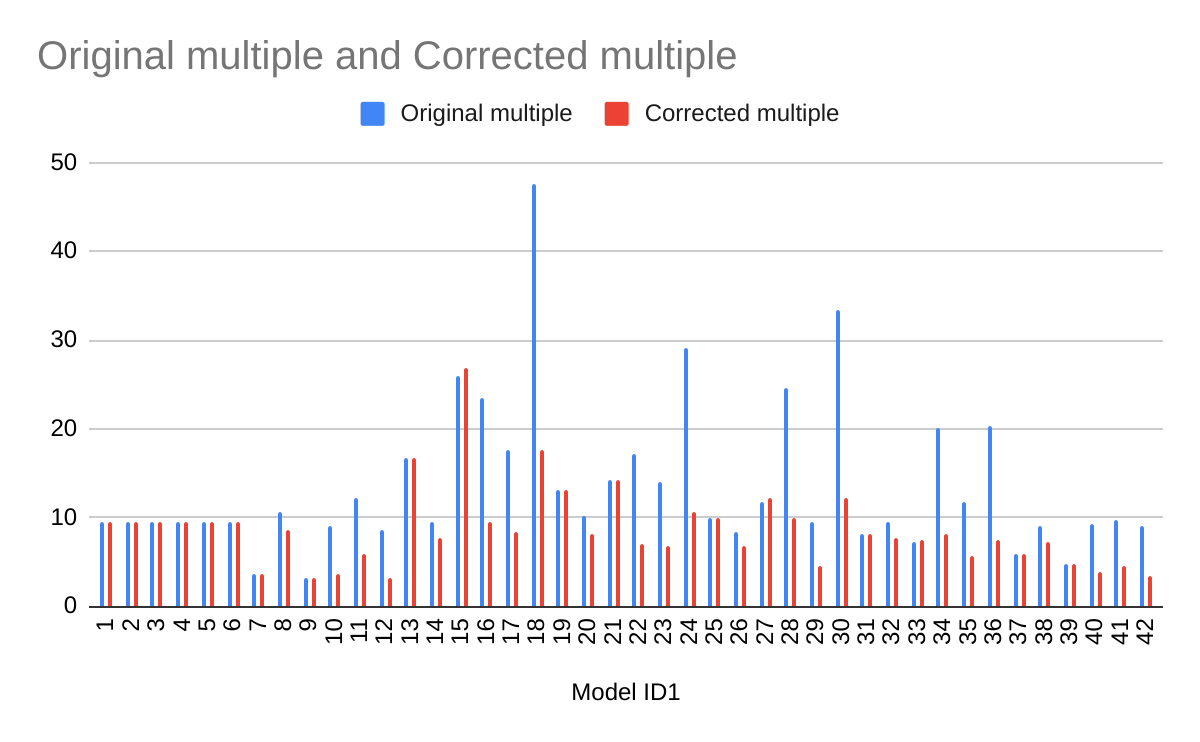

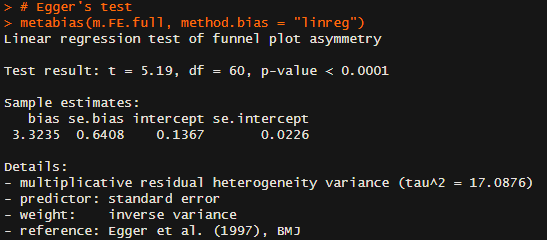

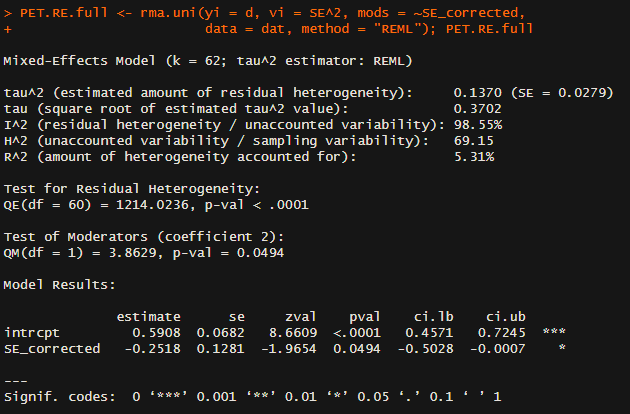

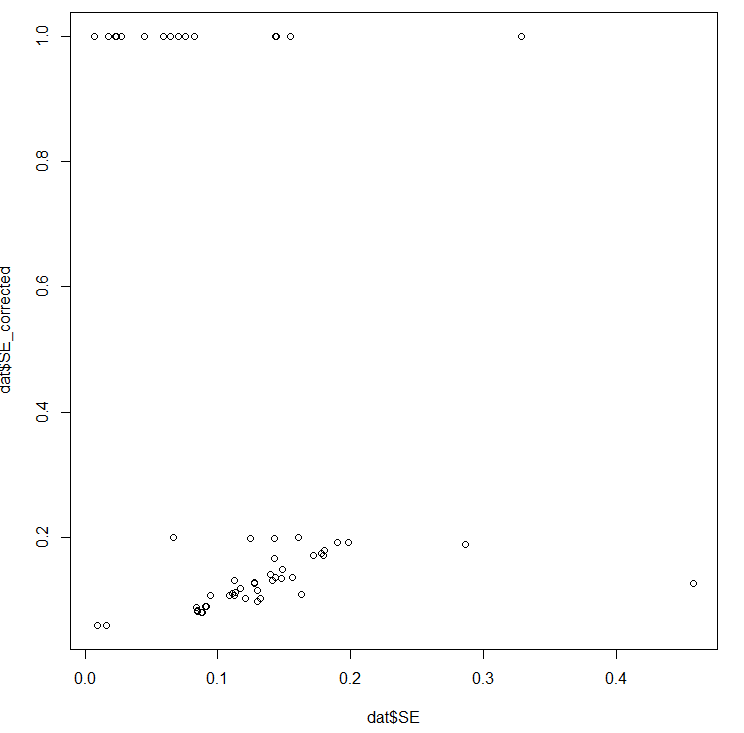

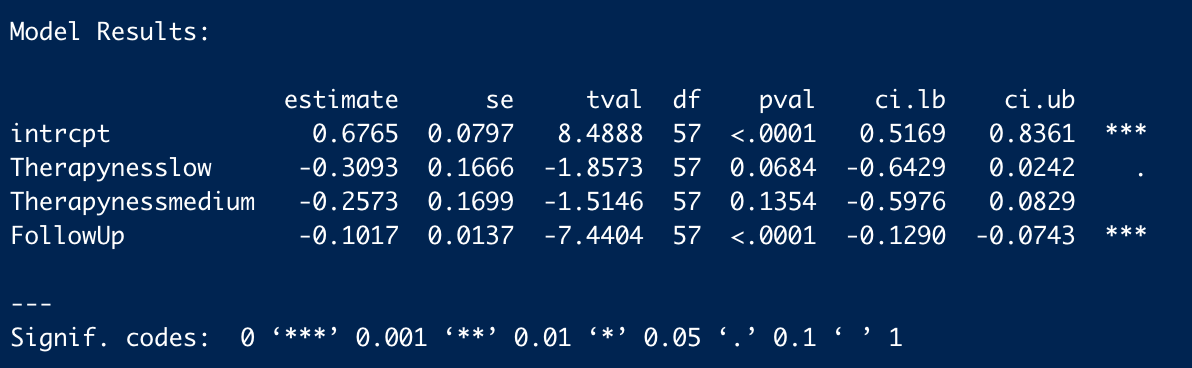

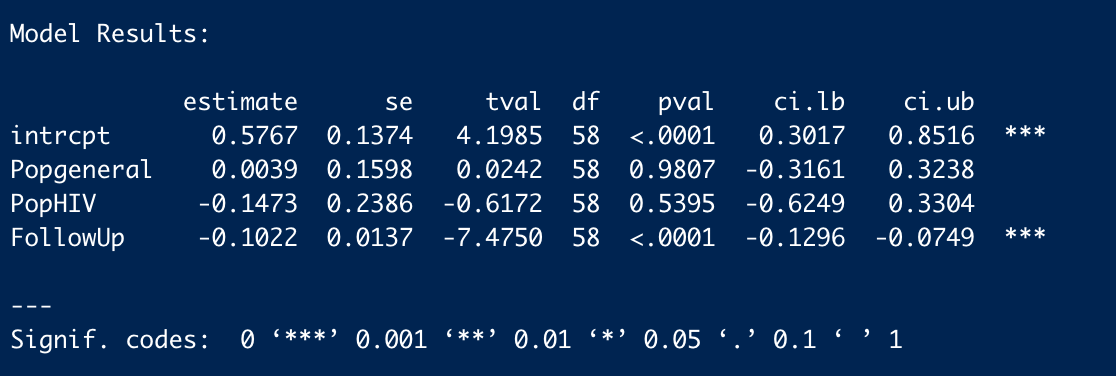

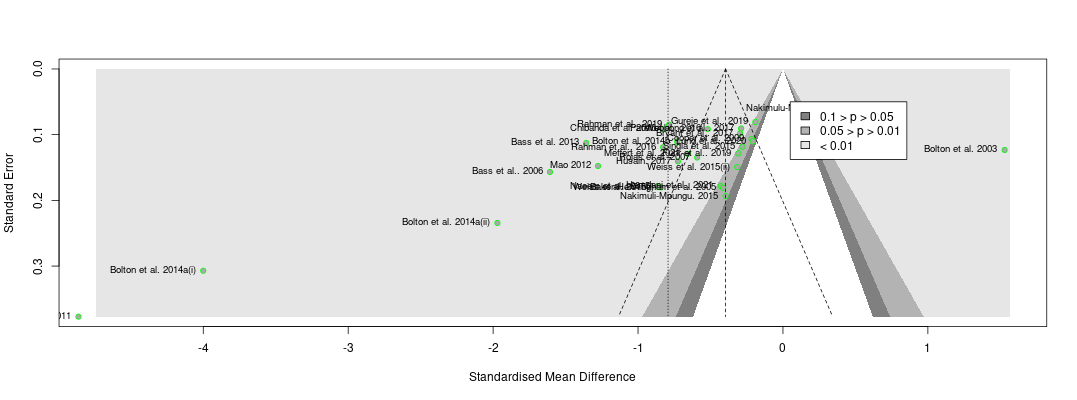

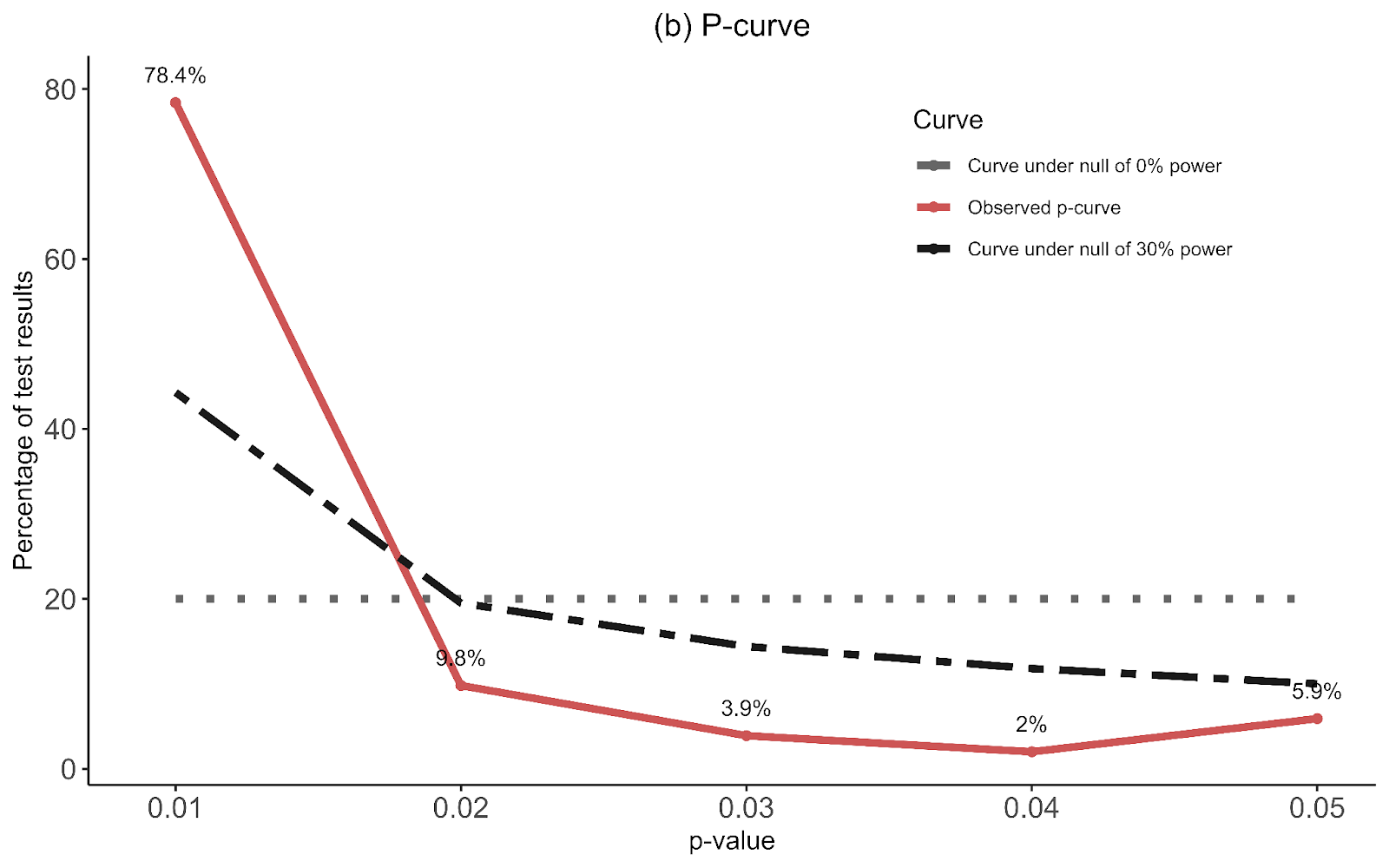

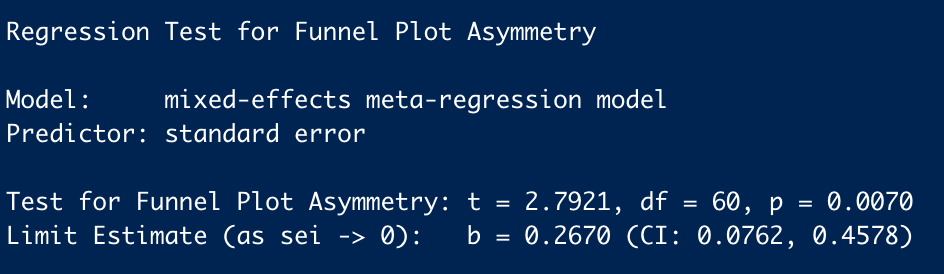

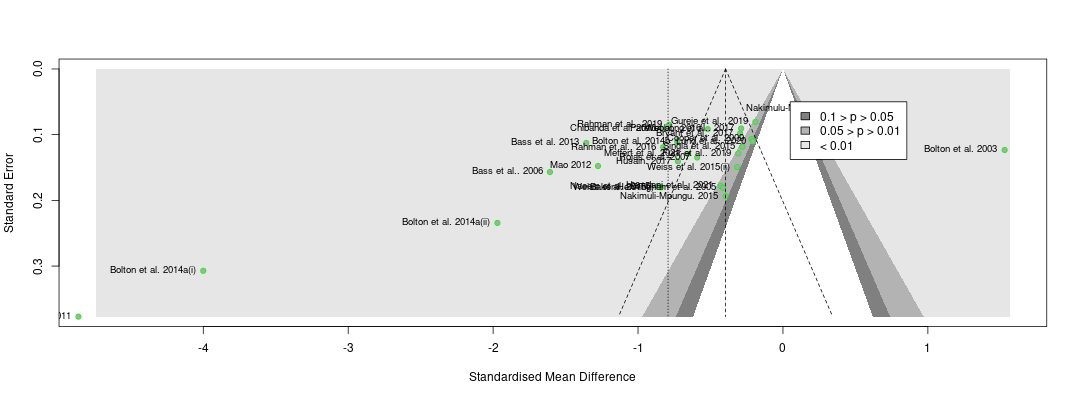

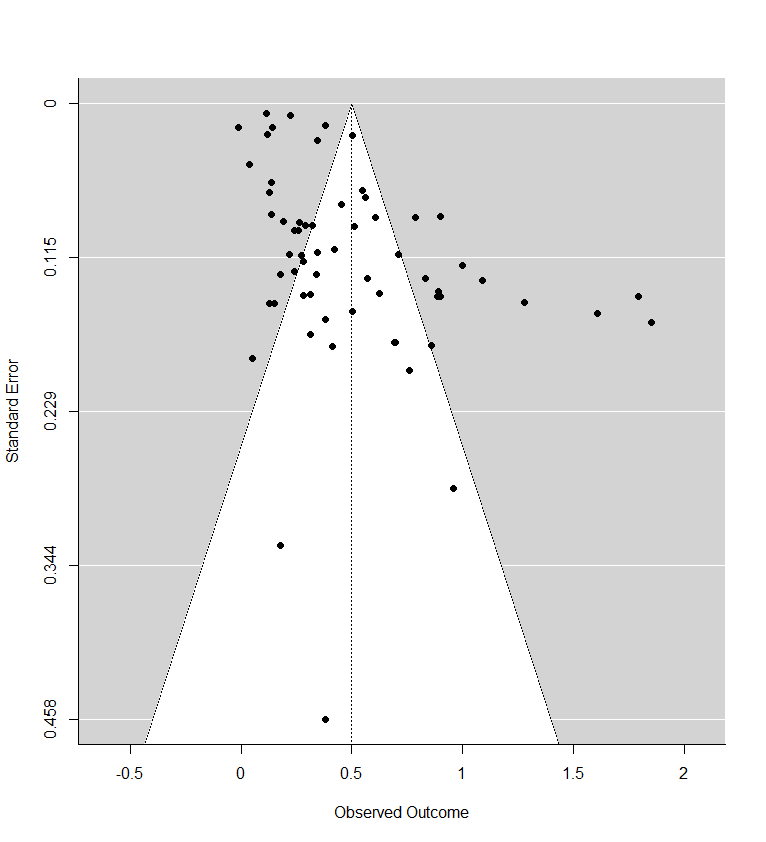

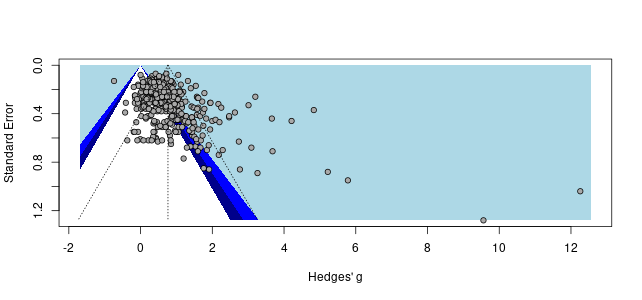

My analysis of StrongMinds is based on a meta-analysis of 39 RCTs of group psychotherapy in low-income countries. I didn’t rely on StrongMinds’ own evidence alone; I also incorporated the broader evidence base from other similar interventions. This strikes me, in a Bayesian sense, as the sensible thing to do. In the end, StrongMinds' controlled trials account for only 21% of the effect estimate (see Section 4 of the report for a discussion of the evidence base). It's possible to quibble with the appropriate weight of this evidence, but the key point is that it is much less than the 100% Simon seems to suggest.
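
As a rough illustration of what a 21% weight means, the sketch below blends two effect estimates with a simple linear weighting. This is an assumption made for exposition: the report’s actual weighting procedure may differ, the function name `blend_effects` is hypothetical, and the effect sizes are invented. Only the 0.21 weight comes from the text above.

```python
def blend_effects(general_effect: float,
                  charity_effect: float,
                  charity_weight: float) -> float:
    """Linear blend of a general-evidence estimate and a charity-specific one."""
    if not 0.0 <= charity_weight <= 1.0:
        raise ValueError("charity_weight must be between 0 and 1")
    return (charity_weight * charity_effect
            + (1.0 - charity_weight) * general_effect)


# Hypothetical effect sizes in standard deviations (not figures from the report).
general_effect = 0.5   # broad evidence base from similar interventions
charity_effect = 1.0   # charity-specific trials, assumed more optimistic

# The 21% weight is the figure quoted in the post.
blended = blend_effects(general_effect, charity_effect, charity_weight=0.21)

# Relying on the charity-specific evidence alone corresponds to a weight of 1.
charity_only = blend_effects(general_effect, charity_effect, charity_weight=1.0)

print(f"blended estimate:      {blended:.3f}")
print(f"charity-only estimate: {charity_only:.3f}")
```

Under these invented inputs, the blended estimate sits much closer to the general evidence than to the charity-specific figure, which is the point of the argument: optimistic charity-specific results move the overall estimate only modestly.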

2. When should a charity be classed as “top-rated”?

At HLI, we think the relevant factors for recommending a charity are:

(1) cost-effectiveness is substantially better than our chosen benchmark (GiveDirectly cash transfers); and

(2) strong evidence of effectiveness.

I think Simon would agree with these factors, but we define “strong evidence” differently.

I think Simon would define “strong evidence” as recent, high-quality, and charity-specific. If that’s the case, I think that’s too stringent. That standard would imply that GiveWell should not recommend bednets, deworming, or vitamin-A supplementation. Like us, GiveWell also relies on meta-analyses of the general evidence (not charity-specific data) to estimate the impact of malaria prevention (AMF, row 36) and vitamin-A supplementation (HKI, row 24) on mortality, and they use historical evidence for the impact of malaria prevention on income (AMF, row 109). Their deworming CEA infamously relies on a single RCT (DtW, row 7) of a programme quite different from the one deployed by the deworming charities they support.

In an ideal world, all charities would have the same quality of evidence that GiveDirectly does (i.e., multiple, high-quality RCTs). In the world we live in, I think GiveWell’s approach is sensible: use high-quality, charity-specific evidence if you have it. Otherwise, look at a broad base of relevant evidence.

As a community, I think that we should put some weight on a recommendation if it fits the two standards I listed above, according to a plausible worldview (i.e., GiveWell’s moral weights or HLI’s subjective wellbeing approach). All that being said, we’re still developing our charity evaluation methodology, and I expect our views to evolve in the future.

3. Is HLI an independent research institute?

In the original post, Simon said:

“I’m going to leave aside discussing HLI here. Whilst I think they have some of the deepest analysis of StrongMinds, I am still confused by some of their methodology, it’s not clear to me what their relationship to StrongMinds is” (emphasis added).

The implication, which others endorsed in the comments, seems to be that HLI’s analysis is biased because of a perceived relationship with StrongMinds or an entrenched commitment to mental health as a cause area which compromises the integrity of our research. While I don’t assume that Simon thinks we’ve been paid to reach these conclusions, I think the concern is that we’ve already decided what we think is true, and we aim to prove it.

To be clear, the Happier Lives Institute is an independent, non-profit research institute. We do not, and will not, take money from anyone we do or might recommend. Like every organisation in the effective altruism community, we’re trying to work out how to do the most good, guided by our beliefs and views about the world.

That said, I can see how this confusion may have arisen. We are advocating for a new approach (evaluating impact in terms of subjective wellbeing), we have been exploring a new cause area (mental health), and we currently only recommend one charity (StrongMinds).

While this may seem suspicious to some, the reason is simple: we’re a new organisation that started with a single full-time researcher in 2020 and has only recently expanded to three researchers. We started by comparing psychotherapy to GiveWell’s top charities, but it’s not the limit of our ambitions. It just seemed like the most promising place to test our hypothesis that taking happiness seriously would indicate different priorities. We think StrongMinds is the best giving option, given our research to date, but we are actively looking for other charities that might be as good or better.

In the next few weeks, we will publish cause area exploration reports for reducing lead exposure, increasing immigration, and providing pain relief. We plan to continue looking for neglected causes and cost-effective interventions within and beyond mental health.

4. What can HLI do better in the future?

There are a few things I think HLI can learn from Simon’s post and the ensuing discussion, and do better as a result.

We didn’t explicitly discuss the implausibility of StrongMinds’ headline figures in our work, and, in retrospect, that was an error. We should also have raised these concerns with StrongMinds and asked them to clarify what causal evidence they are relying on. We have done this now and will provide them with more guidance on how they can improve their evidence base and communicate more clearly about their impact.

I also think we can do better at highlighting our key uncertainties, describing the quality of the evidence we use in our analysis, and pointing out the places where different priors would lead a reader to update less on our analysis.

Furthermore, I think we can improve how we present our research regarding the cost-effectiveness of psychotherapy and StrongMinds in particular. This is something that we were already considering, but after this giving season, I’ve realised that there are some consistent sources of confusion we need to address.

Despite the limitations of their charity-specific data, we still think StrongMinds should be top-rated. It is the most cost-effective, evidence-backed organisation we’ve assessed so far, even when we compare it to some very plausible alternatives that are currently considered top-rated. That being said, we’ve learned a lot since we published our StrongMinds report in 2021, and there is room for improvement. We plan to update our meta-analysis and cost-effectiveness analysis of psychotherapy and StrongMinds with new evidence and more robustness checks in time for Giving Season 2023.

If you think there are other ways we can improve, then please respond to our annual impact survey which closes at 8 am GMT on Monday 30 January. We look forward to refining our approach in response to valuable, constructive feedback.

There are two separate topics here. The one I was discussing in the quoted text was whether an intra-RCT comparison of two interventions is necessary or whether two meta-analyses of the two interventions would be sufficient. The references to GiveWell were not about the control groups they accept, but about their willingness to use meta-analyses instead of RCTs with arms comparing the different interventions they suggest.

The other topic is the appropriate control group to compare psychotherapy against. But I think you make a decent argument that placebo quality could matter. It's given me some things to think about, thank you.