Author: Alex Cohen, GiveWell Senior Researcher

In a nutshell

- The Happier Lives Institute (HLI) has argued in a series of posts that GiveWell should use subjective well-being measures in our moral weights and that if we did, we would find StrongMinds, which provides group-based interpersonal psychotherapy (IPT-G), is as or more cost-effective than marginal funding to our top charities.

- This report provides our initial thoughts on HLI's assessment, based on a shallow review of the relevant literature and considerations.

- Our best guess is that StrongMinds is approximately 25% as cost-effective as our marginal funding opportunities. This assessment is based on several subjective adjustments, and we’ve identified limited evidence to discipline these adjustments. As a result, we think a wide range of cost-effectiveness is possible (approximately 5% to 80% as cost-effective as our marginal funding opportunities), and additional research could lead to different conclusions.

- The main factors that cause us to believe StrongMinds is less cost-effective than HLI believes it is—and that we are unsure about—are:

- Spillovers to household members: It’s possible that therapy for women served by StrongMinds benefits other members of their households. Our best guess is that the evidence HLI uses to estimate spillover effects overestimates spillovers, but we think it’s possible that direct evidence on the effect of StrongMinds on non-recipients could lead to a different conclusion.

- Adjustments for internal validity of therapy studies: In our analysis, we apply downward adjustments for social desirability bias and publication bias in studies of psychotherapy. Further desk research and conversation with experts could help inform adjustments for social desirability and publication bias.

- Lower effects outside of trial contexts: Our general expectation is that programs implemented as part of randomized trials are higher quality than similar programs implemented at scale outside of trial settings. We expect forthcoming results from two randomized controlled trials (RCTs) of StrongMinds could provide an update on this question.

- Duration of effects of StrongMinds: There is evidence for long-term effects for some lay-person-delivered psychotherapy programs but not IPT-G, and we’re skeptical that a 4- to 8-week program like StrongMinds would have benefits that persist far beyond a year. We expect one of the forthcoming RCTs of StrongMinds, which has a 2-year follow-up, will provide an update on this question.

- Translating improvements in depression to life satisfaction scores: HLI’s subjective well-being approach requires translating effects of StrongMinds into effects on life satisfaction scores, but studies of psychotherapy generally do not report effects on life satisfaction. We think HLI overestimates the extent to which improvements in depression lead to increases in life satisfaction. However, we think direct evidence on the effect of StrongMinds on life satisfaction scores could update that view.

- Comparing StrongMinds to our top charities relies heavily on one’s moral views about the trade-off between StrongMinds’ program and averting a death or improving a life. As a result, we also think it’s important to understand what HLI’s estimates imply about these trade-offs at a high level, in addition to considering the specific factors above. HLI’s estimates imply, for example, that a donor would pick offering StrongMinds’ intervention to 20 individuals over averting the death of a child, and that receiving StrongMinds’ program is 80% as good for the recipient as an additional year of healthy life. We’re uncertain about how much weight to put on these considerations, since these trade-offs are challenging to assess, but they seem unintuitive to us and further influence our belief that StrongMinds is less cost-effective than HLI’s estimates.

- We may conduct further work on this topic, but we’re uncertain about the timeline because even under optimistic scenarios, StrongMinds is less cost-effective than our marginal funding opportunities. If we did additional work, we would prioritize reviewing two forthcoming RCTs of StrongMinds and conducting additional desk research and conversations with subject matter experts to try to narrow our uncertainty on some of the key questions above.

Background

Group interpersonal psychotherapy (IPT-G) is a time-limited course of group therapy sessions which aims to tackle depression. StrongMinds implements an IPT-G program that specifically targets women with depression and consists of participants meeting with a facilitator in groups of five to ten, on average, for 90 minutes one to two times per week for four to eight weeks. [1]

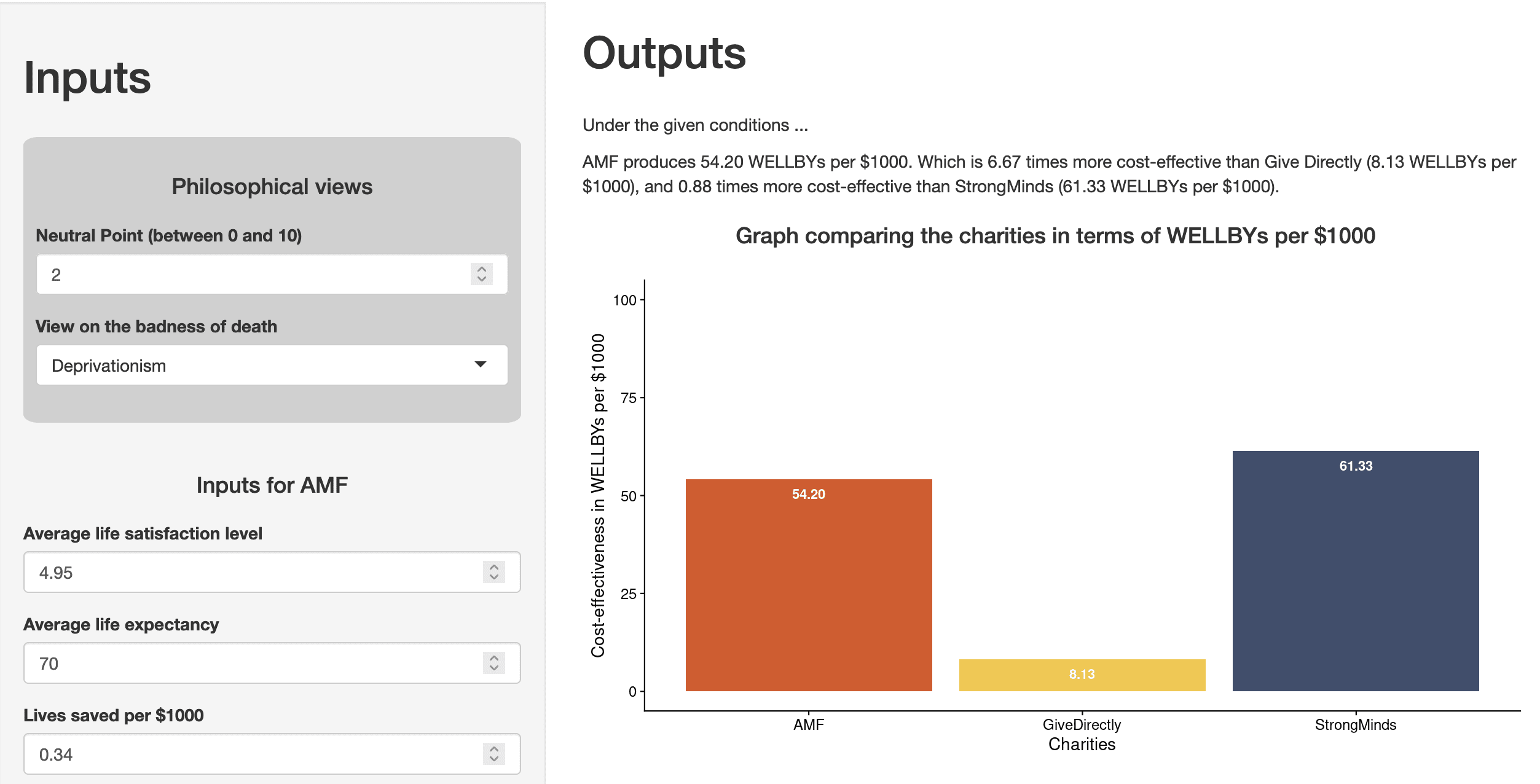

The Happier Lives Institute (HLI) has argued in a series of reports and posts that if we were to assess charities we recommend funding based on their impact on subjective well-being measures (like life satisfaction scores), StrongMinds’ interpersonal group psychotherapy program would be competitive with Against Malaria Foundation (AMF), one of our top charities. [2]

This report is intended to share our view of HLI’s assessment and StrongMinds’ cost-effectiveness, based on a shallow review of the relevant literature and considerations. It incorporates feedback we’ve received from HLI on a previous (unpublished) draft of our work and follow-up research HLI has done since then.

HLI’s estimate of the cost-effectiveness of StrongMinds

HLI estimates the effect of StrongMinds compared to AMF, one of our top charities, by measuring the impact of both on life satisfaction scores.[3] Life satisfaction scores measure how people respond, on a scale from 0 to 10, to the question, “All things considered, how satisfied are you with your life as a whole these days?”[4]

HLI estimates that psychotherapy from StrongMinds creates 77 life satisfaction point-years (or WELLBYs) per $1,000 spent.[5] Summary of its calculations:

- Main effect: It estimates that StrongMinds' program increases mental health scores among recipients of psychotherapy by 1.69 standard deviation (SD)-years.[6] This is based on combining estimates from studies of programs similar to StrongMinds in low- and middle-income countries (Thurman et al. 2017, Bolton et al. 2007, Bolton et al. 2003), studies of StrongMinds, and a meta-regression of indirect evidence.[7]

- Internal and external validity: In its meta-regression of indirect evidence, HLI includes adjustments for internal validity (including publication bias) and external validity (proxied by geographic overlap). These are reported relative to cash transfers studies.[8] It notes that social desirability bias and concerns about effects being smaller at higher scale are excluded.[9] These internal and external validity adjustments lead to a 90% adjustment factor (a roughly 10% discount) for therapy relative to cash transfers.[10]

- Spillovers: HLI estimates that non-recipients of the program in the recipient’s household see 53% of the benefits of psychotherapy from StrongMinds and that each recipient lives in a household with 5.85 individuals.[11] This is based on three studies (Kemp et al. 2009, Mutamba et al. 2018a, and Swartz et al. 2008) of therapy programs where recipients were selected based on negative shocks to children (e.g., automobile accident, children with nodding syndrome, children with psychiatric illness).[12] This leads to a 3.6x multiplier for other household members.[13] The total benefit to the household is 6.06 SD-years.[14]

- Translating depression scores into life satisfaction: To convert improvements in depression scores, measured in SDs, into life satisfaction scores, HLI assumes (i) 1 SD improvement in depression scores is equivalent to 1 SD improvement in life satisfaction scores and (ii) a 1 SD improvement in life satisfaction is 2.17 points. [15] This 2.17 estimate is based on data from low-income countries, which finds an average SD in life satisfaction scores of 2.37, and data from high-income countries, which finds an average SD in life satisfaction scores of 1.86.[16]

- Cost: HLI estimates that StrongMinds costs $170 per recipient.[17]

- Cost-effectiveness: This implies 77 life satisfaction point-years per $1,000 spent. [18]
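The arithmetic behind the 77 figure can be checked from the numbers quoted in the list above. The following is a minimal sketch, not HLI's own code. It assumes the household multiplier takes the form 1 + spillover share × (household size − 1), which is consistent with the quoted 3.6x; all variable names are illustrative.

```python
# Rough reproduction of HLI's StrongMinds estimate from the quoted inputs.
# Assumption (not stated explicitly in the text): the household multiplier is
# 1 + spillover_share * (household_size - 1).

recipient_sd_years = 1.69    # main effect on the recipient, in SD-years
spillover_share = 0.53       # benefit to each other household member vs. recipient
household_size = 5.85        # individuals per household, including the recipient
ls_points_per_sd = 2.17      # life satisfaction points per SD
cost_per_recipient = 170.0   # USD

household_multiplier = 1 + spillover_share * (household_size - 1)
household_sd_years = recipient_sd_years * household_multiplier
wellbys_per_recipient = household_sd_years * ls_points_per_sd
wellbys_per_1000 = wellbys_per_recipient / cost_per_recipient * 1000

print(f"household multiplier: {household_multiplier:.2f}")
print(f"household SD-years:   {household_sd_years:.2f}")
print(f"WELLBYs per $1,000:   {wellbys_per_1000:.1f}")
```

With these rounded inputs the multiplier comes out near 3.6 and the bottom line near 77 WELLBYs per $1,000. The household total lands slightly below the quoted 6.06 SD-years, which presumably reflects rounding in the published inputs.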

HLI estimates that AMF creates 81 life satisfaction point-years (or WELLBYs) per $1,000 spent. Note: This is under the deprivationist framework and assuming a “neutral point” of 0.5 life satisfaction points. HLI’s report presents estimates using a range of views of the badness of death and range of neutral points. It does not share an explicit view about the value of saving a life. We are benchmarking against this approach, since we think this is what we would use and it seems closest to our current moral weights. Summary of HLI's calculations:

- Using a deprivationist framework, HLI estimates the value of averting a death using the following formula: ([Average life satisfaction score out of 10 during remaining years of life] minus [Score out of 10 on life satisfaction scale that is equivalent to death]) times [years of life gained due to death being averted].[19]

- Average life satisfaction is 4.95/10. The average age of death from malaria is 20, and those whose deaths are averted are assumed to live to 70, giving them 50 extra years of life. As a result, WELLBYs gained equal (4.95 - neutral point) * (70 - 20).[20]

- HLI cites a cost per death averted of $3,000 for AMF.[21]

- With a neutral point of 0.5, this would be approximately 74 WELLBYs (life satisfaction point-years) per $1,000 spent.[22] (Note: HLI presents a range of neutral points. We chose 0.5 since that’s the neutral point we’re using.)

- HLI also adds grief effects of 2.4 WELLBYs per $1,000 spent and income-increasing effects of 4 WELLBYs per $1,000 spent.[23]

- This yields a bottom line of approximately 81 WELLBYs per $1,000 spent for a neutral point of 0.5.[24]
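The AMF steps above can be chained together in a few lines. This is an illustrative sketch using only the figures quoted in this section, with a neutral point of 0.5; the variable names are ours and it is not HLI's implementation.

```python
# Rough reproduction of HLI's AMF estimate under the deprivationist framework.

avg_life_satisfaction = 4.95     # out of 10
neutral_point = 0.5              # life satisfaction score treated as equivalent to death
age_at_death = 20                # average age of death from malaria
age_lived_to = 70                # age reached if the death is averted
cost_per_death_averted = 3000.0  # USD
grief_wellbys_per_1000 = 2.4
income_wellbys_per_1000 = 4.0

years_gained = age_lived_to - age_at_death
wellbys_per_death_averted = (avg_life_satisfaction - neutral_point) * years_gained
mortality_wellbys_per_1000 = wellbys_per_death_averted / cost_per_death_averted * 1000
total_wellbys_per_1000 = (
    mortality_wellbys_per_1000 + grief_wellbys_per_1000 + income_wellbys_per_1000
)

print(f"WELLBYs per death averted:       {wellbys_per_death_averted:.1f}")
print(f"mortality WELLBYs per $1,000:    {mortality_wellbys_per_1000:.1f}")
print(f"total WELLBYs per $1,000:        {total_wellbys_per_1000:.1f}")
```

Each averted death is worth 222.5 WELLBYs under these inputs, or about 74 per $1,000 before grief and income effects and about 80.6 after.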

HLI estimates cash transfers from GiveDirectly create 8 life satisfaction point-years (or WELLBYs) per $1,000 spent.[25]

Our assessment

Overall, we estimate StrongMinds is approximately 25% as cost-effective as marginal funding to AMF and our other top charities. However, this is based on several subjective adjustments, and we think a wide range of cost-effectiveness is possible (approximately 5% to 80% as cost-effective as our marginal dollar).

Best guess on cost-effectiveness

We put together an initial analysis of StrongMinds' cost-effectiveness under a subjective well-being approach, based on HLI’s analysis.

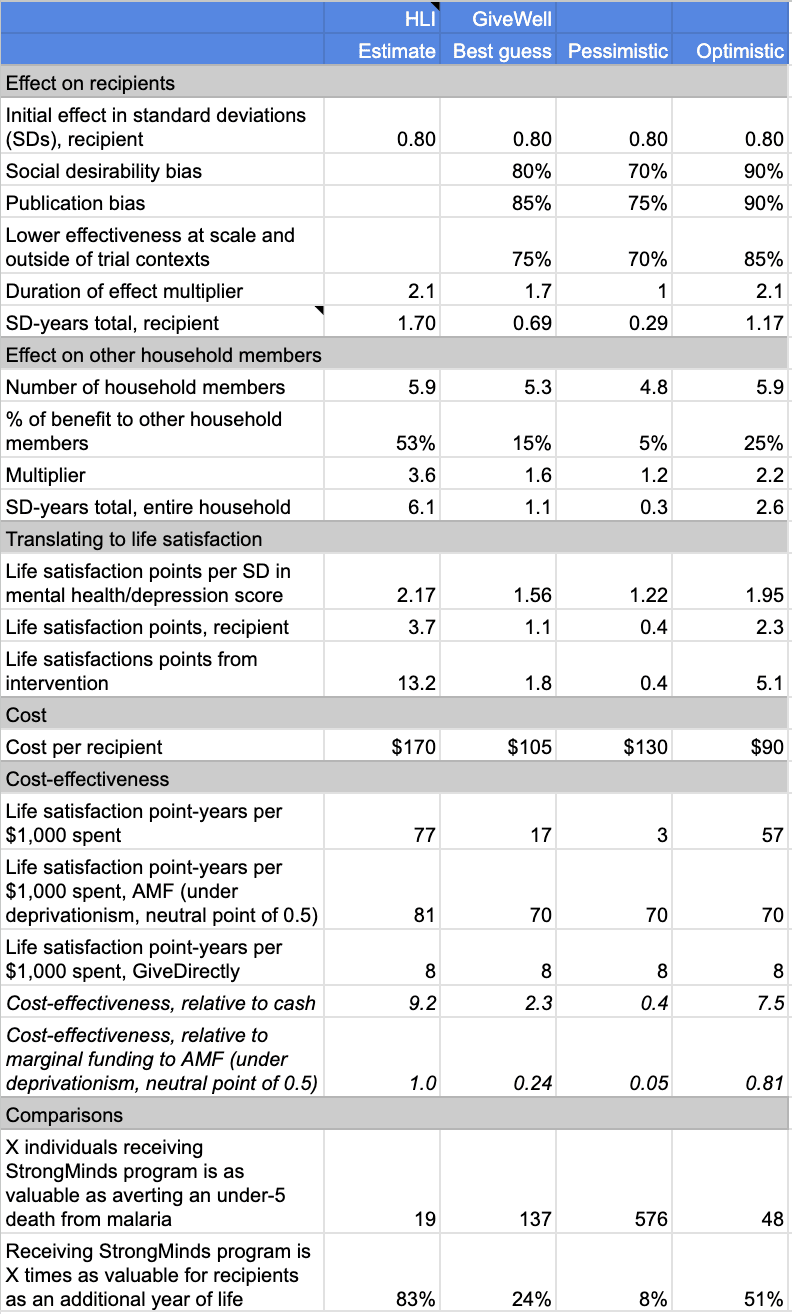

We estimate StrongMinds is roughly 25% as cost-effective as our marginal funding to AMF and other top charities. Compared to HLI, we estimate lower spillover effects and apply stricter downward adjustments for social desirability bias, publication bias, and lower effects at scale. This is partially counterbalanced by our lower estimates of StrongMinds' costs and of AMF's cost-effectiveness under a subjective well-being approach, compared to HLI.

A summary of HLI’s estimates vs. our view:

Our best guess is that StrongMinds leads to 17 life satisfaction point-years (or WELLBYs) per $1,000 spent. Summary of our calculations:

- Main effect: HLI’s estimate of the main effect of IPT-G on depression scores, measured in SDs, is roughly similar to our estimate, which was based on a shallower review of the literature.[26] We’re uncertain about these estimates and think it’s possible our estimates could change if we prioritized a more in-depth review. (We describe this further below.)

- Internal and external validity adjustments: We include downward adjustments for three additional factors that are not incorporated into HLI’s estimates: social desirability bias, publication bias, and lower effects at scale and outside of trial contexts. These are subjective guesses, but we believe they’re worth including to make StrongMinds comparable to top charities and other funding opportunities. (We describe these further below.)

- Duration of benefits: There is evidence for long-term effects for some lay-person-delivered psychotherapy programs but not IPT-G, and we’re skeptical that a 4- to 8-week program like StrongMinds would have benefits that persist far beyond a year. We also expect that some of the internal and external validity adjustments we apply would lead to a shorter duration of effects. We apply an 80% adjustment factor for this. (We describe this further below.)

- Spillovers: We roughly double the effects to account for spillovers to other household members. This is lower than HLI’s adjustment for spillovers. This reflects our assumption that the evidence HLI uses to estimate spillovers may overestimate spillover effects and household size is lower than HLI estimates. (We describe these further below.)

- Translating depression scores into life satisfaction: We make a slight discount (90% adjustment) to account for improvements in depression scores translating less than 1:1 to improvements in life satisfaction scores and a discount (80% adjustment) to account for individuals participating in StrongMinds having depression at baseline and therefore having a more concentrated distribution of life satisfaction scores. (We describe this further below.)

- Cost: StrongMinds cited a figure of $105 per person receiving StrongMinds for 2022.[27] We use that more recent figure instead of the $170 per person figure used by HLI.

- Cost-effectiveness: This implies 17 life satisfaction point-years per $1,000 spent.

We estimate marginal funding to AMF creates 70 life satisfaction point-years per $1,000 spent. We define marginal funding to AMF as funding that is roughly 10 times as cost-effective as unconditional cash transfers through GiveDirectly (our current bar for funding opportunities). This is similar to marginal funding to our other top charities.[28] Our assumptions are similar to HLI's on life satisfaction point-years per death averted from AMF. When we input this into our current cost-effectiveness analysis, we find an effect of 70 life satisfaction point-years per $1,000 spent.

We also estimate that GiveDirectly creates 8 life satisfaction point-years per $1,000 spent, which is similar to HLI's estimate. This is largely because we rely on HLI’s meta-analysis of the effect of cash transfers on life satisfaction scores.
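The headline comparison follows directly from the WELLBYs-per-$1,000 figures quoted in this section. The sketch below simply takes ratios of those figures; the dictionary layout and names are illustrative.

```python
# WELLBYs per $1,000 as quoted in this report.
estimates = {
    "HLI": {"StrongMinds": 77, "AMF": 81, "GiveDirectly": 8},
    "GiveWell": {"StrongMinds": 17, "AMF": 70, "GiveDirectly": 8},
}

# StrongMinds' cost-effectiveness as a fraction of AMF's, under each set of estimates.
strongminds_vs_amf = {
    source: figures["StrongMinds"] / figures["AMF"]
    for source, figures in estimates.items()
}

for source, ratio in strongminds_vs_amf.items():
    print(f"{source}: StrongMinds is {ratio:.0%} as cost-effective as AMF")
```

Under HLI's figures the ratio is about 95%; under ours it is about 24%, which we round to 25%.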

Key uncertainties and judgment calls that we would like to explore further

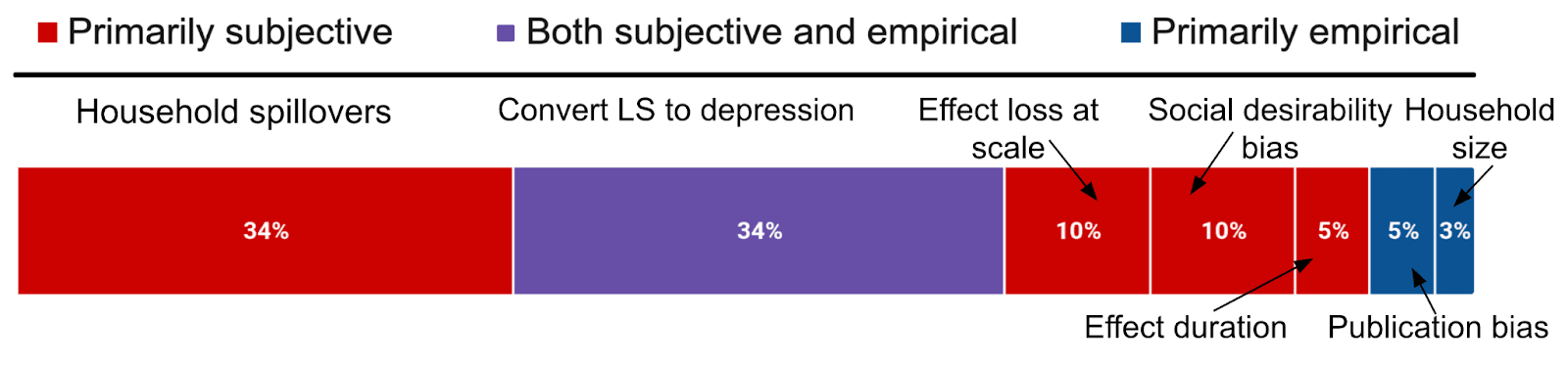

The cost-effectiveness of StrongMinds relies on several judgment calls that we’re uncertain about. We would like to explore these further.

Spillover effects to other household members

It’s possible that improvements in well-being of StrongMinds participants lead to improvements in well-being of other individuals in their household. We had excluded these benefits in our initial analysis, and HLI’s work updated us toward believing these could be a substantial part of the benefits of therapy.

To estimate spillover effects of StrongMinds, HLI relies on three studies that measure spillovers from therapy given to caregivers or children with severe health issues:[29]

- Mutamba et al. 2018a: This trial measured the effect of therapy to caregivers of children with nodding syndrome. Spillovers were assessed by comparing the effect of therapy on caregivers and the child with nodding syndrome. The study was non-randomized.

- Kemp et al. 2009: This trial measured the effect of eye movement desensitization and reprocessing for post-traumatic stress disorder from a motor vehicle accident among children 6-12 years old. Spillovers were assessed by comparing mental health among children vs. parents.

- Swartz et al. 2008: This trial measured the effect of interpersonal therapy for mothers of children with psychiatric illness. Spillovers were assessed by comparing effects on mothers to their children.

These three studies find a household member effect of 0.35 SDs (95% confidence interval, -0.04, 0.74), compared to 0.66 SDs for the recipient (95% confidence interval, 0.35, 0.97),[30] or a benefit that’s 53% as large as the recipient’s benefits.

We think it’s possible this evidence implies greater spillovers than we would expect from StrongMinds, for the reasons below, though these reasons are speculative:

- Mutamba et al. 2018a and Swartz et al. 2008 were oriented specifically toward caregivers having better relationships with children with severe health conditions.[31] As a result, we may expect the effects on those children to be larger than children of StrongMinds recipients, since StrongMinds participants may not focus as intensively on relationships with household members.

- Mutamba et al. 2018a and Swartz et al. 2008 look at therapy provided to caregivers of children with severe health issues and measure spillovers to children. It seems plausible that this would have a larger spillover effect than StrongMinds, since those children may rely on that caregiver more intensively for care, and therefore be more affected by changes in that caregiver’s depression scores, than household members of typical StrongMinds participants.

- In Mutamba et al. 2018a and Swartz et al. 2008, it seems possible children may also experience higher rates of treatment for their psychiatric conditions as a result of caregivers receiving therapy, which would confer direct benefits to non-recipients (in this case, children with nodding syndrome or psychiatric illness).

In addition, a recent blog post points out that the results of Kemp et al. 2009 show that parents' depression scores increased, rather than decreased, which should lower HLI’s estimates. HLI notes in a comment on this post that updating for this error lowers the spillover effect to 38%.[32]

We also did a shallow review of correlational estimates and found a range of 5% to 60% across studies. We haven’t reviewed these studies in depth but view them as illustrative of a range of possible effect sizes.

- Das et al. 2008 estimates that a one standard deviation change in mental health of household members is associated with a change of 22% to 59% of a standard deviation in own mental health, across a sample of low- and middle-income countries.[33]

- Powdthavee and Vignoles 2008 find a one standard deviation increase in parents' mental distress in the previous year lowers life satisfaction in the current year by 25% of a standard deviation for girls, using a sample from the UK. Effects are smaller for boys.[34]

- Mendolia et al. 2018 find a one standard deviation increase in partner’s life satisfaction leads to an increase of 5% of a standard deviation in individual life satisfaction, using data from Australia.[35]

These correlations may overestimate the extent of spillovers if there are shocks that are common to the household driving this correlation, assortative matching based on life satisfaction, or genetic transmission of life satisfaction scores within households. The authors control for some of these (consumption, physical health indicators),[36] but it’s possible there are unobserved differences driving the correlation. These also do not account for assortative matching or genetic transmission of life satisfaction. On the other hand, measurement error in life satisfaction scores could bias the relationship downward.

Our best guess is that spillovers to other household members are 15% of the recipient’s benefit, but we don’t feel very confident and think new research could update us a lot.

We think additional research on StrongMinds’ costs could lead us to higher or lower estimates of program cost.

We would be interested in exploring ways to fund further research to understand the extent to which improvements in depression scores or life satisfaction measures of StrongMinds participants lead to improvements in these outcomes for others in the household.

Household size

The extent of spillovers also depends on the number of individuals in StrongMinds participants’ households.

HLI estimates household size using data from the Global Data Lab and UN Population Division.[37] They estimate a household size of 5.9[38] in Uganda based on these data, which appears to be driven by high estimates for rural household size in the Global Data Lab data, which estimate a household size of 6.3 in rural areas in 2019.[39] A recent Uganda National Household Survey, on the other hand, estimates household size of 4.8 in rural areas.[40]

We’re not sure what’s driving differences in estimates across these surveys, but our best guess is that household size is smaller than the 5.9 estimate HLI is using.

We would also be interested in understanding what may be driving differences in estimates and whether it’s possible to collect data directly on household size for women participating in StrongMinds’ program, since these data could potentially be collected cheaply and provide a meaningful update on the extent of spillovers.
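To show how sensitive the household multiplier is to both the spillover share and household size, the sketch below evaluates it under a few combinations of the figures quoted in the two sections above. It assumes the multiplier has the form 1 + spillover share × (household size − 1), which reproduces HLI's 3.6x. The last scenario pairs our 15% spillover guess with the 4.8 survey figure purely for illustration; it is not necessarily the combination used in our model.

```python
def household_multiplier(spillover_share: float, household_size: float) -> float:
    """Total household benefit as a multiple of the recipient's benefit.

    Assumed form: the recipient counts once, and each other household member
    receives `spillover_share` of the recipient's benefit.
    """
    return 1 + spillover_share * (household_size - 1)


scenarios = {
    "HLI (53% spillover, 5.85 people)": (0.53, 5.85),
    "HLI after Kemp et al. correction (38%, 5.85)": (0.38, 5.85),
    "Illustrative (15% spillover, 4.8 people)": (0.15, 4.8),
}

for label, (share, size) in scenarios.items():
    print(f"{label}: {household_multiplier(share, size):.2f}x")
```

Moving from the first scenario to the last cuts the multiplier by more than half, which is why direct data on both spillovers and household size could meaningfully change the estimate.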

Effect of depression on life satisfaction

HLI’s estimates of the effect of StrongMinds are in terms of SD-years of improvement in mental health measures like depression scores.[41]

To translate these measures into life satisfaction, HLI assumes that (i) 1 SD improvement in depression scores is equivalent to 1 SD improvement in life satisfaction scores in trials of the effect of psychotherapy programs similar to StrongMinds and (ii) the SD in life satisfaction among StrongMinds recipients is equal to the SD in life satisfaction among a pooled average of individuals in low-, middle-, and high-income countries.[42]

We apply a 90% adjustment to assumption (i) to account for the possibility that improvements in depression scores do not translate 1:1 to improvements in life satisfaction scores. This is based on:

- HLI’s review of five therapeutic interventions that report effects on subjective well-being measures and depression. This finds a ratio in effects of 0.89 SD.[43]

- Our prior that there may be conceptual reasons why depression scores and life satisfaction might not map 1:1 onto each other. The highest/worst values on depression scales correspond to the most severe cases of depression. It seems likely that having the highest score on a depression scale would correspond to a life satisfaction score of 0. However, it’s less clear that this applies on the other end of the scale. Those who have low scores on depression scales have an absence of depressive symptoms.[44] These individuals likely have a higher life satisfaction, but it’s not obvious that the absence of depressive symptoms corresponds to a life satisfaction of 10. If absence of depression means people are completely satisfied with their lives, then it makes sense to scale in this way. But if high life satisfaction requires not just the absence of depressive symptoms but something more, then this approach seems less plausible. We’re uncertain about this line of reasoning, though, and would be interested in direct evidence on the effect of IPT-G or similar programs on life satisfaction measures.

We apply an 80% adjustment to assumption (ii). This is because it seems likely that SD in life satisfaction score is lower among StrongMinds recipients, who are screened for depression at baseline[45] and therefore may be more concentrated at the lower end of the life satisfaction score distribution than the average individual.

For both of these adjustments, we’re unsure about any non-linearities in the relationship between improvements in depression and improvements in life satisfaction. For example, it’s possible that going from severely depressed to moderately depressed leads to a larger than 1:1 increase in life satisfaction measures.

Because adjustments here are highly subjective, we would be open to considering collecting more evidence on this question. A potential approach would be to include surveys on life satisfaction, in addition to depression measures and other mental health scores, in subsequent studies of IPT-G. It may also be possible to explore whether existing datasets allow estimating SD in life satisfaction separately for individuals classified as depressed at baseline vs. not.
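The two adjustments in this section change how many life satisfaction points one SD of improvement in depression scores is worth. A minimal sketch of that conversion, using only the factors quoted above (variable names are illustrative):

```python
# Life satisfaction points per 1 SD improvement in depression scores.

pooled_ls_sd = 2.17               # HLI's pooled SD of life satisfaction, in points
depression_to_ls_factor = 0.90    # adjustment to assumption (i): less than 1:1 translation
concentrated_sd_factor = 0.80     # adjustment to assumption (ii): narrower SD among recipients

hli_points_per_sd = 1.0 * pooled_ls_sd
adjusted_points_per_sd = (
    depression_to_ls_factor * concentrated_sd_factor * pooled_ls_sd
)
combined_factor = adjusted_points_per_sd / hli_points_per_sd

print(f"HLI:      {hli_points_per_sd:.2f} points per SD")
print(f"Adjusted: {adjusted_points_per_sd:.2f} points per SD")
print(f"Combined adjustment factor: {combined_factor:.2f}")
```

Together the two factors reduce the conversion from 2.17 to roughly 1.56 points per SD, a combined factor of 0.72.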

Effects at scale and outside of trial contexts

HLI does not include discounts for StrongMinds having a smaller effect when implemented at a larger scale and outside of trial contexts.

Our general expectation is that programs implemented as part of randomized trials are higher quality than similar programs implemented at scale. We would guess that the dominant factor here is that it is difficult to maintain quality of services as an organization scales. This seems particularly relevant for a program that relies on trained practitioners, such as interpersonal group therapy. It's plausible that as StrongMinds scales up its program in the real world, the quality of implementation will decrease relative to the academic trials.[46]

For example, HLI notes that StrongMinds uses a reduced number of sessions and slightly reduced training, compared to Bolton (2003), which its program is based on.[47] We think this type of modification could reduce program effectiveness relative to what is found in trials. However, we have not done a side-by-side of training, session duration, or other program characteristics of StrongMinds compared to other programs in HLI’s full meta-analysis.

We can also see some evidence for lower effects in larger trials:

- Thurman et al. 2017 finds no effect of IPT-G and suggests that one reason for this might be that the study was conducted following rapid program scale-up (unlike the Bolton et al. 2003 and Bolton et al. 2007 trials).[48]

- In the studies included in HLI’s meta-analysis, larger trials tend to find smaller effects.[49] This could be consistent with either smaller effects at scale or publication bias.

We would be eager to see studies that measure the effect of StrongMinds, as it is currently implemented, directly and think the ongoing trial from Baird and Ozler will provide a useful data point here.[50] We’re also interested in learning more about the findings from StrongMinds' RCT and how the program studied in that RCT compares to its typical program.[51]

In addition, we would be interested in understanding how StrongMinds’ costs might change if it were to expand (see below).

Social desirability bias

One major concern we have with these studies is that participants might report a lower level of depression after the intervention because they believe that is what the experimenter wants to see (more detail in footnote).[52] This is a concern because the depression outcomes in therapy programs are self-reported (i.e. participants answer questions regarding their own mental health before and after the intervention).

HLI responded to this criticism and noted that studies that try to assess experimenter-demand effects typically find small effects.[53] These studies test how much responses to questions about depression scores (or other mental health outcomes) change when the surveyor says they expect a particular response (either higher or lower score).

We’re not sure these tests would resolve this bias, so we still include a downward adjustment (80% adjustment factor).

Our guess is that individuals who have gone through IPT-G programs would still be inclined to report having lower depression scores and better mental health on a survey that is related to the IPT-G program they received. If the surveyor told them they expected the program to worsen their mental health or improve their mental health, it seems unlikely to overturn whatever belief they had about the program’s expected effect that was formed during their group therapy sessions. This is speculative, however, and we don’t feel confident in this adjustment.

We also guess this would not detect bias arising from individuals changing their responses in order to receive treatment subsequently (or allowing others to do so),[54] though we’re unsure how important this concern is or how typical this is of other therapy interventions included in HLI’s meta-analysis.

We would be interested in speaking more to subject matter experts about ways to detect self-reporting bias and understand possible magnitude.

Publication bias

HLI’s analysis includes a roughly 10% downward adjustment for publication bias in the therapy literature relative to cash transfers literature.[55] We have not explored this in depth but guess we would apply a steeper adjustment factor for publication bias in therapy relative to our top charities.

After publishing its cost-effectiveness analysis, HLI published a funnel plot showing a high level of publication bias, with well-powered studies finding smaller effects than less-well-powered studies.[56] This is qualitatively consistent with a recent meta-analysis of therapy finding a publication bias of 25%.[57]

We roughly estimate an additional 15% downward adjustment (85% adjustment) to account for this bias. We may look into this further if we prioritize more work on StrongMinds by speaking to researchers, HLI, and other experts and by explicitly estimating publication bias in this literature.

Main effect of StrongMinds

We undertook a shallow review of the evidence for IPT-G prior to reviewing HLI’s analysis. Because we ended up with a similar best guess on effect size,[58] we did not prioritize further review. There are several key questions we have not investigated in depth:

- How much weight should we put on different studies? In our shallow review, we rely on three RCTs that tested the impact of IPT-G on depression in a low- and middle-income country context (Bolton et al. 2003, Bolton et al. 2007, Thurman et al. 2017) and a broader meta-analysis of psychotherapy programs across low-income and non-low-income countries. HLI uses Thurman et al. 2017, Bolton et al. 2007, and Bolton et al. 2003, studies of StrongMinds, and a meta-regression of indirect evidence.[59] We’re not sure how much to weight these pieces of evidence to generate a best guess for the effect of StrongMinds in countries where it would operate with additional funding.

- How do Bolton et al. 2003, Bolton et al. 2007, Thurman et al. 2017, and the programs included in HLI’s meta-analysis vary from StrongMinds’ program, in terms of the program being delivered and target population, and how should that affect how much we generalize results? A key piece of the assessment of how much weight to put on different trials is the similarity in program type and target population. We have not done a thorough review of study populations (e.g., the extent to which different trials targeted women with depression at baseline, like StrongMinds) and programs (e.g., number of sessions, level of training, group size, etc.).[60]

- What interventions did control groups receive? It’s possible that counterfactual treatment varied across studies. If control groups received some type of effective treatment, this could bias effects downward.

- Would we come to the same conclusions if we replicated HLI’s meta-analysis? We have not vetted its analysis of the studies it uses in its meta-analysis,[61] and it’s possible further work could uncover changes.

We think it’s possible that further review of these questions could lead to changes in our best guess on the main effects of StrongMinds and similar programs on depression scores.

Durability of benefits

HLI estimates durability of benefits by fitting a decay model of the effects of therapy programs over time, based on studies of the effect of therapy at different follow-up periods.[62] HLI estimates that effects persist up to five years, based on programs it deems similar to StrongMinds.[63] To the best of our knowledge, there are no long-term follow-up studies (beyond 15 months) of IPT-G in low-income countries specifically.[64]

We do think it’s plausible that lay-person-delivered therapy programs can have persistent long-term effects, based on recent trials by Bhat et al. 2022 and Baranov et al. 2020.

However, we’re somewhat skeptical of HLI’s estimate, given that it seems unlikely to us that a time-limited course of group therapy (4-8 weeks) would have such persistent effects. We also guess that some of the factors that cause StrongMinds’ program to be less effective than programs studied in trials (see above) could also limit how long the benefits of the program endure. As a result, we apply an 80% adjustment factor to HLI’s estimates.

We view this adjustment as highly speculative, though, and think it’s possible we could update our view with more work. We also expect the forthcoming large-scale RCT of StrongMinds in Uganda by Sarah Baird and Berk Ozler, which will measure follow-up at 2 years, could provide an update to these estimates.[65]

Costs

HLI’s most recent analysis includes a cost of $170 per person treated by StrongMinds, but StrongMinds cited a 2022 figure of $105 in a recent blog post and said it expects costs to decline to $85 per person treated by the end of 2024.[66]
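To illustrate how much the cost input matters, the sketch below reuses the formula from HLI's calculation cited in the notes (total household effect in SD-years, times the SD of life satisfaction, divided by cost per person, times $1,000). It is an illustration only, not GiveWell's or HLI's model: the function name is hypothetical, the figures are HLI's before any of our adjustments, and holding the effect fixed while cost changes is an assumption that may not hold if cost differences reflect differences in how the program is delivered.

```python
# Illustrative sketch only; inputs are HLI's figures as quoted in the notes.
HOUSEHOLD_EFFECT_SD_YEARS = 6.06  # HLI's total household effect, in SD-years
SD_LIFE_SATISFACTION = 2.17       # HLI's conversion from SD-years to WELLBYs


def wellbys_per_1000(cost_per_person: float) -> float:
    """WELLBYs per $1,000 spent, holding the effect fixed (an assumption)."""
    return HOUSEHOLD_EFFECT_SD_YEARS * SD_LIFE_SATISFACTION / cost_per_person * 1000


scenarios = [
    ("HLI analysis", 170),
    ("StrongMinds 2022 figure", 105),
    ("StrongMinds end-2024 expectation", 85),
]
for label, cost in scenarios:
    print(f"{label}: ${cost} per person -> {wellbys_per_1000(cost):.0f} WELLBYs per $1,000")
```

Because cost enters as a simple divisor, moving from $170 to $105 scales the estimate by 170/105, or roughly 1.6 times, if nothing else changes.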

We would be interested in learning more about StrongMinds’ costs and understanding what may be driving fluctuations over time and whether these are related to program impact (e.g., in-person or teletherapy, amount of training).

We are also interested in understanding whether to value volunteers’ time, since StrongMinds relies on volunteers for many of its models.[67]

Additional considerations

- Potential unmodeled upsides: There may be additional benefits of StrongMinds’ programs that our model is excluding. An example is advocacy for improved government mental health policies by StrongMinds recipients.[68]

- Implications for cost-effectiveness of other programs: HLI’s analysis has updated us toward putting more weight on household spillover effects of therapy and toward considering putting at least some weight on subjective well-being measures in assessing the benefits of therapy. It’s possible that incorporating both of these could cause us to increase our estimates of cost-effectiveness of other morbidity-averting programs (e.g., cataract surgery, fistula surgery, clubfoot), since they may also benefit from within-household spillovers or look better under a subjective well-being approach.

- Measures of grief: We have not prioritized an in-depth review of the effects of grief on life satisfaction, since this seems relatively unlikely to change the bottom line in our current analysis. We are taking HLI’s estimates at face value for now.

Plausibility

Stepping back, we also think HLI’s estimates of the cost-effectiveness of StrongMinds seem surprisingly large compared to other benchmarks, which gives us some additional reservations about this approach.

Because we’re uncertain about the right way to trade off improvements in subjective well-being from therapy vs. other interventions like cash transfers and averting deaths, we think it’s useful to compare against other perspectives. This includes:

- Examining the trade-offs between offering StrongMinds, averting a death, and providing unconditional cash transfers: HLI’s estimates imply that offering 17 recipients StrongMinds is as valuable as averting a death from malaria and that offering 19 recipients StrongMinds is as valuable as averting an under-5 death from malaria.[69] HLI’s estimates also imply that providing someone a $1,000 cash transfer would be _less_ valuable to them than offering StrongMinds.[70] These feel like unintuitive trade-offs that beneficiaries and donors would be unlikely to want to make. We acknowledge that this type of reasoning has limitations: It’s not obvious we should defer to whatever trade-offs we’d expect individuals to make (even if we knew individuals’ preferences) or that individuals are aware of what would make them the best off (e.g., individuals might not prefer bed nets at the same rate as our cost-effectiveness analysis implies).

- Comparing the benefits of IPT-G to an additional year of life: HLI’s estimates imply that receiving IPT-G is roughly 40% as valuable as an additional year of life per year of benefit or 80% of the value of an additional year of life total.[71] This feels intuitively high.

- Comparing HLI’s estimates of grief from deaths averted to impact of StrongMinds: HLI estimates that each death prevented by AMF averts the loss of 7 WELLBYs due to grief.[72] It estimates that StrongMinds causes a gain of 13 WELLBYs. This is nearly twice the benefit of averting the grief over someone’s death, which seems intuitively high. We have not dug deeply into evidence on grief effects on life satisfaction but think the current comparison seems implausible.

We’re uncertain about how much weight to put on these considerations, since these trade-offs are challenging to assess, but they seem unintuitive to us and influence our belief that StrongMinds is less cost-effective than HLI estimates.
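The ratios behind two of these comparisons can be checked with a few lines of arithmetic. This is a minimal sketch using only HLI's figures as quoted above. The variable names are hypothetical, and the "implied years" line is an inference from the two percentages (reading the per-year figure as a constant rate), not a number stated in the text.

```python
# Illustrative arithmetic only; all inputs are HLI's figures as quoted in the text.
STRONGMINDS_WELLBYS = 13       # HLI's estimated gain from StrongMinds
GRIEF_WELLBYS_PER_DEATH = 7    # HLI's estimated grief loss averted per death prevented

# How large the StrongMinds gain is relative to the grief averted by one death.
grief_ratio = STRONGMINDS_WELLBYS / GRIEF_WELLBYS_PER_DEATH

VALUE_PER_YEAR_OF_BENEFIT = 0.40  # share of a life-year's value, per year of benefit
TOTAL_VALUE = 0.80                # share of a life-year's value, in total

# Inference (not stated in the text): years of benefit at a constant per-year rate.
implied_years = TOTAL_VALUE / VALUE_PER_YEAR_OF_BENEFIT

print(f"StrongMinds gain relative to grief averted: {grief_ratio:.2f}x")
print(f"Implied years of benefit at a constant rate: {implied_years:.1f}")
```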

Cost-effectiveness using our moral weights, rather than a subjective well-being approach

The above analysis focuses on estimating cost-effectiveness under a subjective well-being approach. Our bottom line is similar if we use our current moral weights.

HLI argues for using subjective well-being measures like life satisfaction scores to compare outcomes like averting a death, increasing consumption, and improving mental health through psychotherapy.

We think there are important advantages to this approach:

- Subjective well-being measures provide an independent approach to assessing the effect of charities. The subjective well-being approach relies on measures that we have not previously used (i.e., life satisfaction scores) and provides a distinct and coherent methodology for establishing the goodness of different interventions. How we value charities that improve different outcomes, such as increasing consumption or averting death, is one of the most uncertain parts of our process of selecting cost-effective charities, and we believe we will reach more robust decisions if we consider multiple independent lines of reasoning.[73]

- These measures capture a relevant outcome. We think most would agree that subjective well-being is an important component of overall well-being. Measuring effects that interventions have on changes in life satisfaction may be a more accurate way to assess impacts on subjective well-being than using indirect proxies, such as increases in income.

- The subjective well-being approach is empirical. It seems desirable for a moral weights approach to be able to change based on new research or facts about a particular context. The subjective well-being approach can change based on, for example, new studies about the effect of a particular intervention on life satisfaction.

We’re unsure what approach we should adopt for morbidity-averting interventions. We’d like to think further about the pros and cons of the subjective well-being approach and also the extent to which disability-adjusted life years may fail to capture some of the benefits of therapy (or other morbidity-averting interventions).

In this case, however, using a subjective well-being approach vs. our current moral weights does not make a meaningful difference in cost-effectiveness. If we use disability-adjusted life years instead of subjective well-being (i.e., effect on life satisfaction scores), we estimate StrongMinds is roughly 20% as cost-effective as a grant to AMF that is 10 times as cost-effective as unconditional cash transfers.[74]

Our next steps

We may prioritize further work on StrongMinds in the future to try to narrow some of our major uncertainties on this program. This may include funding additional studies, conducting additional desk-based research, and speaking with experts.

We should first prioritize the following:

- Reviewing the forthcoming large-scale RCT of StrongMinds in Uganda by Sarah Baird and Berk Ozler, as well as a recent (unpublished) RCT by StrongMinds

- Speaking to StrongMinds, Happier Lives Institute, researchers, and other subject matter experts about the key questions we’ve raised

Beyond that, we would consider:

- Exploring ways to fund research on spillover effects and on effects on life satisfaction directly, potentially as part of ongoing trials or new trials

- Exploring data collection on household size among StrongMinds participants

- Learning more about program costs from StrongMinds

- Conducting additional desk research that may inform adjustments for social desirability bias, publication bias, effects on grief, and duration of benefits of StrongMinds

- Considering whether to incorporate subjective well-being measures directly into our moral weights and how much weight we would put on a subjective well-being approach vs. our typical approach in our assessment of StrongMinds

- Considering how much weight to put on plausibility checks, potentially including additional donor surveys to understand where they fall on questions about moral intuitions

- Considering research to measure the effect of StrongMinds or other therapy programs on life satisfaction directly

- Exploring how this might change our assessment of other programs addressing morbidity (because, e.g., they also have spillover effects or they also look more cost-effective under a subjective well-being approach) and considering collecting data on the effect on life satisfaction of those programs as well

Sources

Notes

"Over 8-10 sessions, counselors guide structured discussions to help participants identify the underlying triggers of their depression and examine how their current relationships and their depression are linked." StrongMinds, "Our Model at Work"

"StrongMinds treats African women with depression through talk therapy groups led by community workers. Groups consist of 5-10 women with depression or anxiety, meeting for a 90-minute session 1-2x per week for 4-8 weeks. Groups can meet in person or by phone." StrongMinds, "StrongMinds FAQs" ↩︎

See, for example: Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022. ↩︎

1. “In order to do as much good as possible, we need to compare how much good different things do in a single ‘currency’. At the Happier Lives Institute (HLI), we believe the best approach is to measure the effects of different interventions in terms of ‘units’ of subjective well-being (e.g. self-reports of happiness and life satisfaction).” Plant and McGuire, "Donating money, buying happiness: new meta-analyses comparing the cost-effectiveness of cash transfers and psychotherapy in terms of subjective well-being," 2021

2. “We will say that 1 WELLBY (wellbeing-adjusted life year) is equivalent to a 1-point improvement on a 0-10 life satisfaction scale for 1 year.” Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022, p. 14. ↩︎

“Example evaluative measures: … An overall life satisfaction question, as adopted in the World Values Survey (Bjørnskov, 2010): All things considered, how satisfied are you with your life as a whole these days? Using this card on which 1 means you are “completely dissatisfied” and 10 means you are “completely satisfied” where would you put your satisfaction with life as a whole?” OECD Guidelines on Measuring Subjective Well-Being: Annex A, p. 1 ↩︎

"We found that GiveDirectly’s cash transfers produce 8 WELLBYs/$1,000 and StrongMinds’ psychotherapy produces 77 WELLBYs/$1,000, making the latter about 10 times more cost-effective than GiveDirectly." Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022, p. 16. ↩︎

Happier Lives Institute, "Happiness for the whole family: Accounting for household spillovers when comparing the cost-effectiveness of psychotherapy to cash transfers," February 2022, p. 22, Table 2: summary of estimated spillover effects and change in comparison. ↩︎

- Happier Lives Institute, "Cost-effectiveness analysis: StrongMinds," October 2021, p. 15, Table 2: Evidence of direct and indirect evidence of StrongMinds' effectiveness.

- Studies are described in "Section 4. Effectiveness of StrongMinds' core programme," pp. 9-18.

- These are described in this spreadsheet and Table A.3 of this page. ↩︎

Happier Lives Institute, "Cost-effectiveness analysis: StrongMinds," October 2021, Section "6.5 Considerations and limitations," Pp. 26-27. ↩︎

Happier Lives Institute, "Happiness for the whole family: Accounting for household spillovers when comparing the cost-effectiveness of psychotherapy to cash transfers," February 2022, p. 22, Table 2: summary of estimated spillover effects and change in comparison. ↩︎

-

2. “A limitation to the external validity of this evidence is that all of the samples were selected based on negative shocks happening to the children in the sample. In Kemp et al. (2009), children received EMDR for PTSD symptoms following an automobile accident. In Mutamba et al. (2018), caregivers of children with nodding syndrome received group interpersonal psychotherapy. In Swartz et al. (2008), depressed mothers of children with psychiatric illness received interpersonal psychotherapy. We are not sure if this would lead to an over or underestimate of the treatment effects, but it is potentially a further deviation from the type of household we are trying to predict the effects of psychotherapy for. Whilst recipients of programmes like StrongMinds might have children who have experienced negative shocks, we expect this is not the case for all of them.” Happier Lives Institute, "Happiness for the whole family: Accounting for household spillovers when comparing the cost-effectiveness of psychotherapy to cash transfers," February 2022, p. 12. ↩︎

1 + (5.85 - 1) * 0.53 = 3.5705 ↩︎
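The calculation in this note can be reproduced with the short sketch below. It assumes, as an interpretation of the note rather than something it states, that 5.85 is the average household size and 0.53 is the benefit to each non-recipient household member relative to the recipient. The function name is hypothetical. The second call uses the 38% spillover figure from HLI's comment quoted later in these notes, purely to show how sensitive the multiplier is to that input.

```python
# Illustrative sketch; input labels are an interpretation of the note, not confirmed by it.
def household_multiplier(household_size: float, spillover_ratio: float) -> float:
    """Total household benefit as a multiple of the recipient's own benefit.

    The recipient counts once; each of the other (household_size - 1) members
    is assumed to receive spillover_ratio times the recipient's benefit.
    """
    return 1 + (household_size - 1) * spillover_ratio


base = household_multiplier(5.85, 0.53)       # figures used in the note
corrected = household_multiplier(5.85, 0.38)  # 38% spillover from HLI's comment

print(f"Multiplier with 53% spillover: {base:.4f}")
print(f"Multiplier with 38% spillover: {corrected:.4f}")
```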

-

6.06 = 1.69 + 4.85 * 1.69 * 0.53

-

-

Life satisfaction point-years are also known as WELLBYs.

-

“To convert from SD-years to WELLBYs we multiply the SD-years by the average SD of life satisfaction (2.17, see row 8, “Inputs” tab), which results in 0.6 x 2.17 = 1.3 WELLBYs.” Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022, p. 25.

-

See this cell.

-

“Our previous results (McGuire et al., 2022b) are in standard deviation changes over time (SD-years) of subjective wellbeing gained. Since these effects are standardised by dividing the raw effect by its SD, we convert it into life satisfaction points by unstandardising it with the global SD (2.2, see row 8) for life satisfaction (Our World in Data). Crucially, we assume a one-to-one exchange rate between a 1 SD change in affective mental health and subjective wellbeing measures. We’re concerned this may not be justified, but our investigations so far have not supported a different exchange rate.” Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022, footnote 24, p. 16.

-

See these cells. ↩︎

Happier Lives Institute, "Happiness for the whole family: Accounting for household spillovers when comparing the cost-effectiveness of psychotherapy to cash transfers," February 2022, p. 22, Table 2: summary of estimated spillover effects and change in comparison. ↩︎

- 6.06 * 2.17 / $170 * $1,000

- This is 36 SD-years of improvement in depression scores per $1,000 spent. That matches the value here.

- “We start with the simplest account, deprivationism. On this view: badness of death = net wellbeing level x years of life lost

  “We assume that the average age of the individual who dies from malaria is 20 years old, they would expect to live to 70, and so preventing their death leads to 50 extra years. We estimate their average expected life satisfaction to be 4.95/10. Hence, the WELLBYs gained by the person whose death is prevented is (4.95 – neutral point) * (70 – 20).” Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022, p. 17. ↩︎

“We assume that the average age of the individual who dies from malaria is 20 years old, they would expect to live to 70, and so preventing their death leads to 50 extra years. We estimate their average expected life satisfaction to be 4.95/10. Hence, the WELLBYs gained by the person whose death is prevented is (4.95 – neutral point) * (70 – 20).” Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022, p. 17. ↩︎

“According to GiveWell, it costs $3,000 for AMF to prevent a death (on average).” Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022, p. 18. ↩︎

Equals (4.95-0.05)*50 WELLBYs per death averted divided by $3,000 per death averted x $1,000. ↩︎

“We estimate the grief-averting effect of preventing a death is 7 WELLBYs for each death prevented (see Appendix A.2), so 2.4 WELLBYs/$1,000. We estimate the income-increasing effects to be 4 WELLBYs/$1,000 (see Appendix A.1).” Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022, p. 18 ↩︎

We're ignoring HLI's estimates of the cost-effectiveness of cash transfers because the most important point of comparison is to our top charities, and because we don’t come to a meaningfully different bottom line on cost-effectiveness of GiveDirectly than HLI does. An overview of its calculations is here. ↩︎

Calculations are summarized in this spreadsheet. ↩︎

We identified three RCTs that tested the impact of IPT-G on depression in a low- and middle-income country context (Bolton et al. 2003, Bolton et al. 2007, Thurman et al. 2017). Two of these trials (Bolton et al. 2003 and Bolton et al. 2007) find IPT-G decreases symptoms of depression, while one (Thurman et al. 2017) does not find evidence of an effect. Averaging across trials, we estimate an effect on depression scores of 1.1 standard deviations. We describe our weighting in this document.

We also did a quick review of three meta-analyses of the effect of various forms of therapy on depression scores in low-, middle- and high-income countries. We have low confidence that these papers provide a comprehensive look at the effect of therapy on depression, and we view them as an intuitive check on the findings from the three RCTs of IPT-G in Sub-Saharan Africa.

These meta-analyses find effects that range from 0.2 to 0.9 standard deviations, which is lower than what we found in IPT-G trials in Sub-Saharan Africa reported above.

- Cuijpers et al. 2016 is a meta-analysis of RCTs of the effect of interpersonal psychotherapy (IPT) on mental health. It finds IPT for depression had an effect of 0.6 standard deviations (95% CI 0.45-0.75) across 31 studies.

- Morina et al. 2017 is a meta-analysis of RCTs of psychotherapy for adult post-traumatic stress disorder and depression in low- and middle-income countries. It finds an effect of 0.86 standard deviations (95% CI 0.536-1.18) for 11 studies measuring an effect on depression.

- Cuijpers et al. 2010 is a meta-analysis of RCTs of psychotherapy for adult depression that examines whether effect size varies with study quality. It finds an effect of 0.22 standard deviations for high-quality studies, compared to 0.74 for low-quality studies.

Based on this, we apply a 25% downward adjustment to these trials. We put slightly higher weight on Cuijpers et al. 2010, which finds a 0.2 standard deviation effect, since it explicitly takes into account study quality, and our best guess is that the typical therapy program reduces depression scores by 0.4 standard deviations. We put a 40% weight on these meta-analyses and 60% weight on the trials from Sub-Saharan Africa reported above (Bolton et al. 2003, Bolton et al. 2007, and Thurman et al. 2017), which implies an average effect of 0.82 standard deviations. The weighted effect is 0.82 = 1.1 x 60% + 0.4 x 40%. This is 75% of the effect reported in IPT-G trials from Sub-Saharan Africa, or a 25% discount. ↩︎
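The weighting described in this note can be checked with the short sketch below. It uses only the figures given in the note (a 1.1 standard deviation effect from the IPT-G trials with 60% weight, and a 0.4 standard deviation best guess from the meta-analyses with 40% weight). The variable names are hypothetical.

```python
# Illustrative check of the weighted average described in the note.
TRIAL_EFFECT_SD = 1.1   # average effect across the three IPT-G trials
META_EFFECT_SD = 0.4    # best-guess effect of the typical therapy program
TRIAL_WEIGHT = 0.60
META_WEIGHT = 0.40

weighted_effect = TRIAL_EFFECT_SD * TRIAL_WEIGHT + META_EFFECT_SD * META_WEIGHT
share_of_trial_effect = weighted_effect / TRIAL_EFFECT_SD
discount = 1 - share_of_trial_effect

print(f"Weighted effect: {weighted_effect:.2f} SD")
print(f"Share of trial effect retained: {share_of_trial_effect:.0%}")
print(f"Implied discount: {discount:.0%}")
```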

“In 2022 we expect the cost to treat one patient will be $105 USD.” Mayberry, "AMA: Sean Mayberry, Founder & CEO of StrongMinds," November 2022 ↩︎

For example, we estimate Malaria Consortium in Oyo, Nigeria is 11 times as cost-effective as GiveDirectly under our current moral weights and 69 life satisfaction point-years per $1,000 spent under a subjective well-being approach, and HKI in Guinea is 11 times as cost-effective as GiveDirectly under our current approach and 71 life satisfaction point-years per $1,000 spent under a subjective well-being approach. See this spreadsheet for calculations. ↩︎

Happier Lives Institute, "Happiness for the whole family: Accounting for household spillovers when comparing the cost-effectiveness of psychotherapy to cash transfers," February 2022, Appendix A, Section A2. Psychotherapy studies, p. 32. ↩︎

Happier Lives Institute, "Happiness for the whole family: Accounting for household spillovers when comparing the cost-effectiveness of psychotherapy to cash transfers," February 2022, Figure 3, p. 19. ↩︎

-

“The therapist also related interpersonal therapy’s emphasis on addressing interpersonal stressors, which led Ms. A to say that she needed guidance in dealing with Ann’s problems. The therapist explained that IPT-MOMS would specifically help Ms. A find ways to interact with Ann that would be more helpful to both mother and daughter.” Swartz et al. 2008, p. 7.

-

“IPT-MOMS has been described elsewhere (18). Briefly, it consists of an initial engagement session based on principles of motivational interviewing and ethnographic interviewing (28), which is designed to explore and resolve potential barriers to treatment seeking (17, 29), followed by eight sessions of brief interpersonal psychotherapy (30). IPT-MOMS differs from standard interpersonal psychotherapy (16) in that 1) it follows the brief interpersonal psychotherapy model that is both shorter than standard interpersonal psychotherapy and uses some “soft” behavioral strategies to rapidly activate depressed patients (30), 2) it incorporates a motivational interviewing- and ethnographic interviewing-based engagement session and continues to draw on these engagement strategies as needed during the treatment, and 3) it uses specific strategies to assist mothers in managing problematic interpersonal relationships with their dependent, psychiatrically ill offspring. [...] Subjects assigned to treatment as usual were informed of their diagnoses, given psychoeducational materials, and told to seek treatment.” Swartz et al. 2008, p. 3.

-

A video shared in Mutamba et al. 2018b shows sample sessions with women discussing care of children with nodding syndrome.

-

Mutamba et al. 2018b provides more description of the intervention in Mutamba et al. 2008. “Quantitative results of the trial have been published and demonstrate the effectiveness of IPT-G in treating depression in both caregivers and their children [34].” Mutamba et al. 2018b, p. 10.

-

-

"In the forest plot above, HLI reports that Kemp et al. (2009) finds a non-significant 0.35 [-0.43,1.13] standard deviation improvement in mental health for parents of treated children. But table 2 reports that parents in the treatment groups’ score on the GHQ-12 increased relative to the wait-list group (higher scores on the GHQ-12 indicate more self-reported mental health problems)." Snowden, "Why I don’t agree with HLI’s estimate of household spillovers from therapy," February 24, 2023

-

From HLI's comment: "This correction would reduce the spillover effect from 53% to 38% and reduce the cost-effectiveness comparison from 9.5 to 7.5x, a clear downwards correction." Snowden, "Why I don’t agree with HLI’s estimate of household spillovers from therapy," February 24, 2023

-

Das et al. 2008, Table 2 for results. ↩︎

“In summary, Table 4 suggests that there is variation by gender in the estimated transmission coefficient from parental distress to child’s LS. Specifically, mothers’ distress levels do not appear to be an important determinant of boys’ LS. … Transmission correlations are quantitatively important as well as statistically significant. For example, the mean of _FDhi(t-1)_ and _MDhi(t-1)_ are 1.759 and 2.186, and their standard deviations are 2.914 and 3.208, respectively. An increase of one standard deviation from the means of _FDhi(t-1)_ and _MDhi(t-1)_ imply a change in the mental distress level to 4.674 for fathers and 5.394 for mothers. Taking conservative estimates of _FDhi(t-1)_ and _MDhi(t-1)_ for girls to be -.029 and -.022, the implied changes in the girl’s LS are approximately -.051 and -.048. Given that the mean of LS for girls is 5.738 and its standard deviation is 1.348, a _ceteris paribus_ increase of one standard deviation in either parent’s mental distress level explains around a 25% drop in the standard deviation in the girl’s LS.” Powdthavee and Vignoles 2008, p. 18. ↩︎

“In Table 7, we begin with the analysis of the impact of the partner’s standardised SF36 mental health score (0-100, where higher values represent higher level of well-being). Increasing this score by one standard deviation increases individual’s life satisfaction by 0.07 points (on a 1-10 scale), which is equivalent to 5% of a standard deviation in life satisfaction. To put this in context, this is similar to the (reversed) effect of becoming unemployed or being victim of a property crime (see Table 10).” Mendolia et al. 2018, p. 12. ↩︎

-

For Das et al. 2008, Table 2 includes controls for age, female, married, widowed, education indicators, household consumption, household size, physical health, elderly dependents, and young dependents. See Pp. 39-40.

-

For Powdthavee and Vignoles 2008: “We include a set of youth attributes, as well as both parents’ characteristics and some household characteristics (taken from the main BHPS dataset) as control variables in the child’s LS regressions. Youth attributes include child’s age and the number of close friends the child has. Age and the number of close friends are measured as continuous variables, and are time-varying across the observation waves. Parental characteristics include education, employment status, and health status of both parents if present in the household. Education is captured by two dummy variables, which represent (i) whether the parent achieved A levels or not and (ii) whether they achieved a degree. More disaggregated measures of parental education are not feasible with these data. Parental employment status is measured as a categorical variable identifying self-employment and full-time employment. Health status is also measured as a categorical variable, ranging from “1.very poor health” to “5.excellent health”. Household characteristics include household income in natural log form and the number of children in the household. Household income is calculated by taking the summation of all household members’ annual incomes and is converted into real income in 1995 prices by dividing it by the annual consumer prices index (CPI). The number of children is a continuous variable and time varying across the panel. We include these variables because they are known to be correlated with measures of LS, and they may also be correlated with the mental distress of the parents (for a review, see Oswald, 1997). Following prior studies on how to model psychological well-being (Clark, 2003; Gardner & Oswald, 2007), a similar set of controls were included in each parent’s mental distress equations, with the addition of each parent’s age.
The spouse’s observed characteristics are not included in the parent’s own mental distress equation as the model already allows for the correlations between the residuals. We also include the gender of the child in later analyses of moderating gender effects. Details of mean scores and standard deviations in the final sample for each of the dependent and control variables are given in Appendix B. In order to avoid non-response bias, we create dummy variables representing missing values for all control variables in the final sample.” p. 12.

-

For Mendolia et al. 2018: “Our main model (Specification 1) includes an extensive set of independent variables, to consider other factors that may influence life satisfaction, such as individual’s and partner’s self-assessed health, education, gender, employment and marital status, number and age of children, geographic remoteness, time binary variables, and life events that took place in the last 12 months (personal injury or illness, serious illness of a family member, victim of physical violence, death of a close relative or family member, victim of a property crime). We also estimate two additional specifications (Specifications 2 and 3) of each model, including other variables, such as partners’ long term conditions, and possible strategies to help the individual to deal with partners’ mental health, such as presence of social networks, and engagement in social activities. The complete list of variables included in the model is reported in Table 4.” p. 9.

-

“We calculate average household sizes by averaging the latest available data from the United Nations Population Division (2019a), with average rural household sizes in these countries. Rural household size data comes from the Global Data Lab. We do this because StrongMinds and GiveDirectly operate mainly in rural or suburban areas.” Happier Lives Institute, "Happiness for the whole family: Accounting for household spillovers when comparing the cost-effectiveness of psychotherapy to cash transfers," February 2022, p. 33. ↩︎

Happier Lives Institute, "Happiness for the whole family: Accounting for household spillovers when comparing the cost-effectiveness of psychotherapy to cash transfers," February 2022, Table 2, p. 22. ↩︎

- The Global Data Lab household size estimate is approximately 6.3 for rural areas in Kenya and Uganda 2019.

- The UN household size estimate for Uganda is approximately 4.9 based on 2019 DHS data (from "dataset" spreadsheet, sheet "HH Size and Composition 2022," downloaded here).

- Uganda Bureau of Statistics, Uganda National Household Survey 2019/2020, Figure 2.4, p. 36. ↩︎

-

See, for example, Table 1B. Effect on depression at t=0 in Cohen’s d.

-

“We assume that treatment improves the ‘subjective well-being’ factors to the same extent as the ‘functioning’ factors, and therefore we could unproblematically compare depression measures to ‘pure’ SWB measures using changes in standard deviations (Cohen’s d).” Happier Lives Institute, "Cost-effectiveness analysis: StrongMinds," October 2021, p. 14.

-

-

“To convert from SD-years to WELLBYs we multiply the SD-years by the average SD of life satisfaction (2.17, see row 8, “Inputs” tab), which results in 0.6 x 2.17 = 1.3 WELLBYs.” Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022, p. 25.

-

See this cell.

-

“Our previous results (McGuire et al., 2022b) are in standard deviation changes over time (SD-years) of subjective wellbeing gained. Since these effects are standardised by dividing the raw effect by its SD, we convert it into life satisfaction points by unstandardising it with the global SD (2.2, see row 8) for life satisfaction (Our World in Data). Crucially, we assume a one-to-one exchange rate between a 1 SD change in affective mental health and subjective wellbeing measures. We’re concerned this may not be justified, but our investigations so far have not supported a different exchange rate.” Happier Lives Institute, "The elephant in the bednet: the importance of philosophy when choosing between extending and improving lives," 2022, footnote 24, p. 16.

-

“We summarized the relative effectiveness of five different therapeutic interventions on SWB (not just LS specifically) and depression. The results are summarized in this spreadsheet. The average ratio of SWB to depression changes in the five meta-analyses is 0.89 SD; this barely changes if we remove the SWB measures that are specifically affect-based.” Happier Lives Institute, "Cost-effectiveness analysis: Group or task-shifted psychotherapy to treat depression," 2021, p. 29. ↩︎

Examples of scales are here:

- American Psychological Association, Hamilton Depression Rating Scale (HAM-D)

- Patient, Patient Health Questionnaire (PHQ-9)

- Bright Futures, Center for Epidemiological Studies Depression Scale for Children (CES-DC)

StrongMinds reports in its 2022 Q4 report that across all programs 100% of participants had depression (3% mild, 41% moderate, 41% moderate-severe, 15% severe). StrongMinds, Q4 2022 Report, p. 2 ↩︎

Some possibilities are as follows. As StrongMinds scales up its program:

- It has to train more facilitators. It seems possible that the first individuals to come forward to get trained as facilitators are the highest quality, and that as more individuals come forward as the program scales up in an area the marginal facilitator is of decreasing quality.

- It may not have the resources to oversee the quality of implementation at scale to the same extent as academic researchers in a small trial.

- It may start to operate in new contexts in which it has less experience or understanding of locally relevant concepts/causes of depression. It may take time to tailor its program accordingly, and in the meantime the program may be less effective.

“Bolton et al. (2003) and its six-month follow-up (Bass et al., 2006) were studies of an RCT deployed in Uganda (where StrongMinds primarily operates). StrongMinds based its core programme on the form, format, and facilitator training[footnote 5] of this RCT, which makes it highly relevant as a piece of evidence. StrongMinds initially used the same number of sessions (16) but later reduced its number of sessions to 12. They did this because the extra sessions did not appear to confer much additional benefit (StrongMinds, 2015, p.18), so it did not seem worth the cost to maintain it….Footnote 5: In personal communication StrongMinds says that their mental health facilitators receive slightly less training than those in the Bolton et al., (2003) RCT.” Happier Lives Institute, "Cost-effectiveness analysis: StrongMinds," October 2021, p. 10. ↩︎

- "Finally, the study was conducted following rapid program scale-up. Mechanisms to ensure implementation fidelity were not overseen by the researchers, in contrast to the Uganda trial (Bolton et al., 2007). This study captured World Vision South Africa’s first experience delivering IPTG in these communities; time and experience may contribute to increased effectiveness. A variety of new local implementation contexts, many of them resource-constrained, and the pace and scope of scale-up likely contributed to variation in the level of program quality and fidelity to the original model", Thurman et al. 2017, p. 229.

- "While 23% of adolescents in the intervention group did not attend any IPTG sessions, average attendance was 12 out of 16 possible sessions among participants. The intervention was not associated with changes in depression symptomology." Thurman et al. 2017, Abstract, "Results."

- Whilst we might not want to additionally adjust the estimates in Thurman et al. 2017 much for this concern, we do want to adjust the estimates in the two Bolton et al. studies downwards. Because the Thurman et al. 2017 study gives little detail on the implementation problems that rapid scale-up caused, we have not adjusted the relative weights in the meta-analysis to favor the Thurman et al. 2017 study (it is hard to know to what extent those same factors would apply to StrongMinds' program).

- See “Effect vs. sample size” chart in this spreadsheet. These charts are based on the meta-analysis described in section 4.1.2 of this report and the spreadsheet linked below:

“We include evidence from psychotherapy that isn’t directly related to StrongMinds (i.e., not based on IPT or delivered to groups of women). We draw upon a wider evidence base to increase our confidence in the robustness of our results. We recently reviewed any form of face-to-face modes of psychotherapy delivered to groups or by non-specialists, deployed in LMICs (HLI, 2020b).[footnote 9] At the time of writing, we have extracted data from 39 studies that appeared to be delivered by non-specialists and or to groups from five meta-analytic sources[footnote 10] and any additional studies we found in our search for the costs of psychotherapy.

“These studies are not exhaustive. We stopped collecting new studies due to time constraints (after 10 hours), and the perception that we had found most of the large and easily accessible studies from the extant literature.[footnote 11] The studies we include and their features can be viewed in this spreadsheet.” ↩︎

"Actual enrollment: 1914 participants." Ozler and Baird, "Using Group Interpersonal Psychotherapy to Improve the Well-Being of Adolescent Girls," (ongoing), "Study Design." ↩︎

“StrongMinds recently conducted a geographically-clustered RCT (n = 394 at 12 months) but we were only given the results and some supporting details of the RCT. The weight we currently assign to it assumes that it improves on StrongMinds’ impact evaluation and is more relevant than Bolton et al., (2003). We will update our evaluation once we have read the full study.” Happier Lives Institute, "Cost-effectiveness analysis: StrongMinds," October 2021, p. 11. ↩︎

A few examples of why social desirability bias might exist in this setting:

- If a motivated and pleasant IPT facilitator comes to your village and is trying to help you to improve your mental health, you may feel some pressure to report that the program has worked to reward the effort that facilitator has put into helping you.

- In Bolton et al. 2003, the experimenters told participants at the start of the study that the control group would receive the treatment at a later date if it proved effective. Participants might then feel some pressure to report that the treatment worked so as not to deprive individuals in control villages of the treatment. "Prior to randomization, all potential participants were informed that if the intervention proved effective, it would later be offered to controls (currently being implemented by World Vision International)", Bolton et al. 2003, p. 3118.

- Individuals may report worse findings if they think doing so would lead to them receiving the intervention. “On this occasion informed consent included advising each youth of the study group to which he or she had been allocated. Our NGO partners had previously agreed to provide/continue on a permanent basis whichever intervention proved effective. Individuals assigned to the wait-control group were told they would be first to receive whichever intervention (if any) proved effective.” Bolton et al. 2007, p. 522.

"As far as we can tell, this is not a problem. Haushofer et al., (2020), a trial of both psychotherapy and cash transfers in a LMIC, perform a test ‘experimenter demand effect’, where they explicitly state to the participants whether they expect the research to have a positive or negative effect on the outcome in question. We take it this would generate the maximum effect, as participants would know (rather than have to guess) what the experimenter would like to hear. Haushofer et al., (2020), found no impact of explicitly stating that they expected the intervention to increase (or decrease) self-reports of depression. The results were non-significant and close to zero (n = 1,545). [...]

Other less relevant evidence of experimenter demand effects finds that it results in effects that are small or close to zero. Bandiera et al., (n =5966; 2020) studied a trial that attempted to improve the human capital of women in Uganda. They found that experimenter demand effects were close to zero. In an online experiment Mummolo & Peterson, (2019) found that “Even financial incentives to respond in line with researcher expectations fail to consistently induce demand effects.” Finally, in de Quidt et al., (2018) while they find experimenter demand effects they conclude by saying “Across eleven canonical experimental tasks we … find modest responses to demand manipulations that explicitly signal the researcher’s hypothesis… We argue that these treatments reasonably bound the magnitude of demand in typical experiments, so our … findings give cause for optimism.”” Happier Lives Institute, "Cost-effectiveness analysis: StrongMinds," October 2021, p. 26. ↩︎

- Individuals may choose to report more favorable findings because they think that if they say the program was helpful, others will receive it.

- “Prior to randomization, all potential participants were informed that if the intervention proved effective, it would later be offered to controls (currently being implemented by World Vision International).” Bolton et al. 2003, p. 3118.

- Individuals may report worse findings if they think doing so would lead to them receiving the intervention.

- “On this occasion informed consent included advising each youth of the study group to which he or she had been allocated. Our NGO partners had previously agreed to provide/continue on a permanent basis whichever intervention proved effective. Individuals assigned to the wait-control group were told they would be first to receive whichever intervention (if any) proved effective.” Bolton et al. 2007, p. 522.

- Calculations are in this spreadsheet. HLI applies an overall discount factor of 89%. Removing the publication bias adjustment (setting the weight in cell I7 to 0) changes this adjustment factor to 96%. ↩︎

“Among comparisons to control conditions, adding unpublished studies (Hedges’ g = 0.20; CI95% -0.11~0.51; k = 6) to published studies (g = 0.52; 0.37~0.68; k = 20) reduced the psychotherapy effect size point estimate (g = 0.39; 0.08~0.70) by 25%.” Driessen et al. 2015, p. 1. ↩︎
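The 25% figure in the Driessen et al. 2015 quote follows from the two pooled point estimates. A minimal sketch of that arithmetic, using only the values quoted above (the function name `relative_reduction` is hypothetical):

```python
def relative_reduction(published_only: float, with_unpublished: float) -> float:
    """Share by which the pooled effect size falls once unpublished studies are added."""
    return (published_only - with_unpublished) / published_only


# Hedges' g from the quote: 0.52 (published only) and 0.39 (published plus unpublished).
reduction = relative_reduction(0.52, 0.39)
print(f"{reduction:.0%}")  # 25%
```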

- "We include evidence from psychotherapy that isn’t directly related to StrongMinds (i.e., not based on IPT or delivered to groups of women)." Happier Lives Institute, "Cost-effectiveness analysis: StrongMinds," October 2021, p. 12.

- Happier Lives Institute, "Cost-effectiveness analysis: StrongMinds," October 2021, p. 15, Table 2: Evidence of direct and indirect evidence of StrongMinds' effectiveness.

- Studies are described in "Section 4. Effectiveness of StrongMinds' core programme," pp. 9-18.

- For example:

- Bolton et al. 2003 and Bolton et al. 2007 also only include individuals who have been screened for depression in the study. By contrast, Thurman et al. 2017 does not directly screen participants for depression (but rather targets an "at risk" group - children who have been orphaned as a result of HIV/AIDS or are otherwise vulnerable).

- Bolton et al. 2007 treats individuals in camps for internally displaced people, 40% of whom had been abducted as children. Thurman et al. 2017 treats children who have been orphaned as a result of HIV/AIDS or are otherwise vulnerable.

These are in this spreadsheet and described in Appendix B (p. 30) of this page. ↩︎

Happier Lives Institute, "Cost-effectiveness analysis: StrongMinds," October 2021, Section 4.2 Trajectory of efficacy through time. ↩︎

Happier Lives Institute, "Cost-effectiveness analysis: StrongMinds," October 2021, Figure 3, p. 18. “We assume the effects have entirely dissipated in five years[footnote 19] (95% CI: 2, 10).” https://www.happierlivesinstitute.org/report/strongminds-cost-effectiveness-analysis/ ↩︎

- The longest follow-up in the RCT literature on IPT-G in developing countries is 1 year and 3 months, in Thurman et al. 2017 (which demonstrates the persistence of a null effect measured 3 months after follow-up).

- The next longest follow-up is the 6-month follow-up in Bass et al. 2006, which demonstrates the persistence of the treatment effect on the treated (although there is no direct test of the persistence of the intent-to-treat effect).

- It is possible that the effects persist for longer than the first 1-2 years, but that longer duration just hasn't been tested.

- However, I am skeptical that the effects persist beyond the first 1-2 years for two reasons: (a) My prior is that a time-limited and fairly light-touch intervention (twelve 90-minute group sessions) is unlikely to have a persistent effect. (b) These RCTs were done sufficiently long ago that the authors have had time to conduct longer-term follow-ups. There are many possible reasons why they haven't done so that are not related to the effect size. However, I believe that a long-term follow-up demonstrating a persistent effect of IPT-G would make for a good academic publication, so the absence of a published long-term follow-up updates me slightly in the direction that the effect does not persist.

- See "Outcome Measures" section, "Primary Outcome Measures" subsection here. ↩︎

“In 2022 we expect the cost to treat one patient will be $105 USD. By the end of 2024, we anticipate the cost per patient will have decreased to just $85. We will continue to reduce the cost of treating one woman even while our numbers increase. This is through effective scaling and continuing to evaluate where we can gain more cost savings. A donation to StrongMinds will be used as effectively and efficiently as possible. And when you think about what it costs for therapy in the United States, to spend just $105 and treat a woman for depression is a pretty incredible feat.” Sean Mayberry, Founder and CEO, StrongMinds, responses to questions on the Effective Altruism Forum, November 2022. ↩︎

“Group tele-therapy (38.27% of 2021 budget) is delivered over the phone by trained mental health facilitators and volunteers (peers) to groups of 5 (mostly women) for 8 weeks. We expect the share of the budget this programme receives to decline as the threat of COVID diminishes.” [...]