Summary

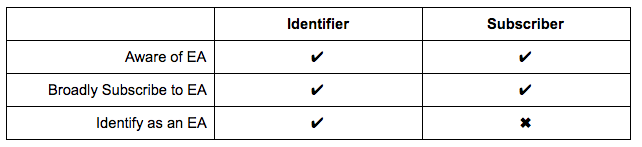

- In this report, we explore the difference between those who self-identify as effective altruists and those who say they broadly subscribe to effective altruism but do not self-identify as effective altruists. Because levels of involvement in the effective altruism movement vary, we were interested in assessing people who fall outside the scope of the typical analysis.

- Past reports in the EA Survey Series have reported only on respondents who are aware of effective altruism, subscribe to effective altruism, and describe themselves as effective altruists.

To perform this analysis, we used three questions* to classify people into two segments: “subscribers” and “identifiers.”

- Subscribers are defined as respondents who are aware of effective altruism and broadly subscribe to its ideals, but do not identify as effective altruists.

- Identifiers are defined as respondents who are aware of effective altruism, broadly subscribe to its ideals, and identify as effective altruists.

After analyzing the data we found:

- Subscribers are demographically similar to identifiers, but on average have been involved in EA for less time.

- The scope of subscriber involvement is fairly limited – they donate less money, volunteer less, and are less likely to be a part of an effective altruism group.

- As this is the first year we have asked the question in this way, we do not yet have the longitudinal data needed to see whether and how subscribers become identifiers. However, the demographic similarities between the two populations, together with their use of similar resources, suggest that subscribers may deepen their involvement over time and later become identifiers.

Insights

Demographics

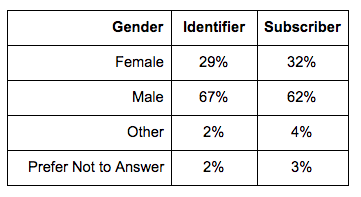

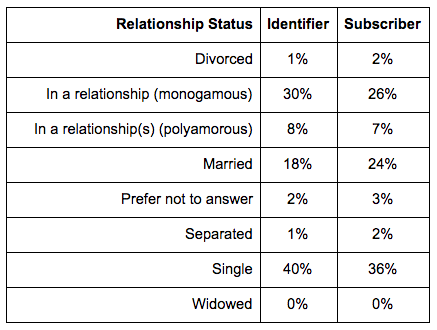

Within the sample of valid EA Survey respondents, 2582 (90%) were identifiers and 302 (10%) were subscribers. The two populations were broadly similar with respect to age, gender, ethnicity, religion, employment, and marital status.

Getting Into EA

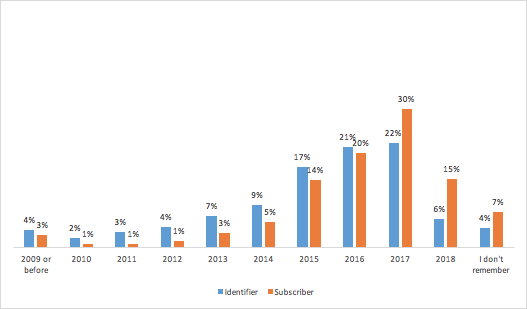

As might be expected, the two populations differ significantly in how long they have been part of the community. Subscribers are largely newer to the community: only 9% report becoming involved in EA before 2014, compared to 21% of identifiers.

The graph below shows when members of each group report first becoming involved in effective altruism.

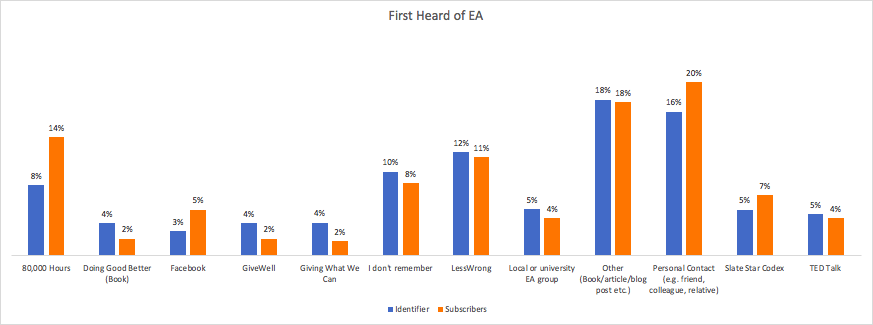

It may be that as subscribers spend more time in the effective altruism community, they come to identify as effective altruists. Alternatively, people who subscribe to the ideals but do not identify may be a growing subgroup as awareness of effective altruism as a concept expands. Further research will be needed to explore how people's relationship to the ideals of effective altruism changes over time.

The graph below shows the top sources from which subscribers and identifiers first heard of effective altruism.

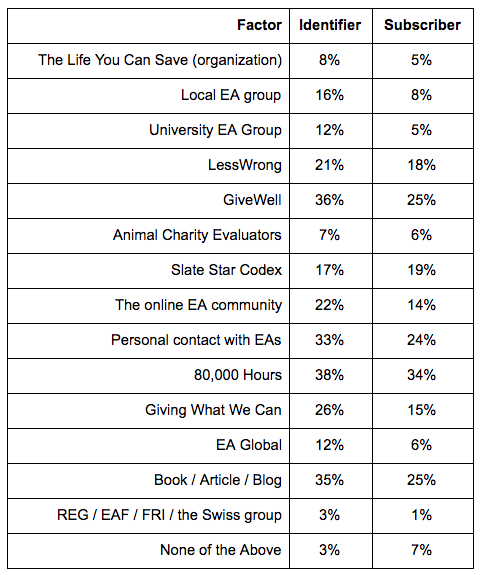

In the table below of factors important for involvement, the factor most often reported by both identifiers and subscribers is 80,000 Hours. Identifiers next report GiveWell, Other (books/articles), and personal contact as the most important factors for involvement.

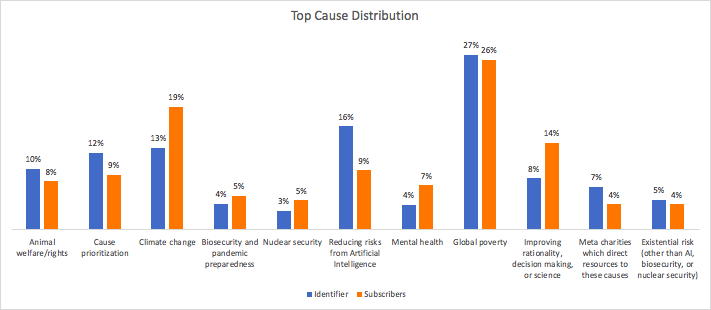

Cause Prioritization

Among all survey respondents, global poverty is the cause most often selected as the top priority. Subscribers and identifiers agree on this, but differ in how the rest of their cause preferences are distributed. Climate change is a higher priority for subscribers: 19% rank it as the top priority, compared to 13% of identifiers. After global poverty, a substantial share of identifiers (16%) consider reducing risk from artificial intelligence the most important cause, while only 9% of subscribers agree.

Community Involvement

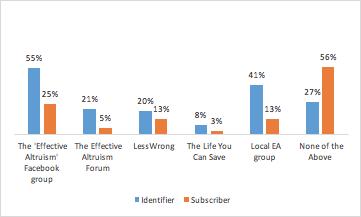

The majority of subscribers (56%) are not involved in any effective altruism groups (here, groups include the EA Facebook Group, LessWrong, the EA Forum, and local EA groups). This appears to be partly due to a lack of knowledge about where to find resources: 59% of subscribers say they do not know of a local EA group, and only 7% are involved in one, compared to 33% of identifiers. Many subscribers (45%) are unsure whether they want to be involved in local effective altruism communities. Identifiers are also more than twice as likely to volunteer for an effective altruism cause (32% vs. 14%).

The graph below shows distribution of involvement with different effective altruism groups.

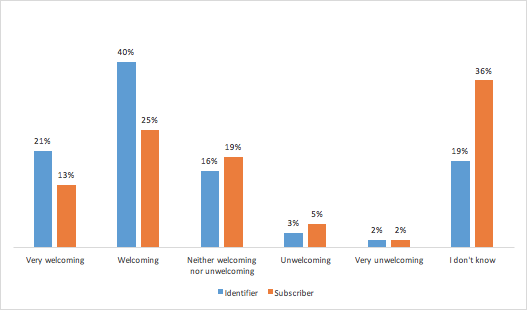

Many subscribers appear unsure how they feel about the community at large. When asked how welcoming they find the community, subscribers most often (36%) answer “I don’t know,” which is likely due to their relative lack of involvement.

Career Path

Whereas most identifiers (78%) feel that learning about effective altruism has shifted or will shift their career path, 48% of subscribers do not feel that learning about effective altruism has changed or will change their career. A plurality of subscribers do not plan to pursue direct charity work, earning to give, or research.

Donations

Subscribers and identifiers also differ on behavioral markers of involvement, such as plans to donate money and desire to be involved in effective altruism in the future. 22% of subscribers report that they do not plan to donate money in the coming year, compared to only 12% of identifiers. Additionally, only 5% of subscribers have taken the Giving What We Can pledge, while 33% of identifiers have done so.

Other Findings

In future surveys, it would be interesting to explore whether people convert from subscribers to identifiers and what inflection points may be correlated with that shift in mindset.

Methodology

*Questions used for segmentation (answers are Yes/No):

- Are you aware of effective altruism?

- Do you broadly subscribe to the basic ideas behind effective altruism?

- Could you, however loosely, be described as "an Effective Altruist"?
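The segmentation rule above can be expressed as a short decision function. The sketch below is illustrative only and is not the survey team's actual code: it assumes each answer is recorded as a boolean, and the names `classify_respondent` and `segment_shares` are hypothetical. It also checks that the counts reported in the Demographics section round to the stated 90% and 10%.

```python
from typing import Optional


def classify_respondent(aware: bool, subscribes: bool, identifies: bool) -> Optional[str]:
    """Assign a respondent to a segment from the three Yes/No answers.

    Hypothetical helper: respondents who are not aware of EA, or who do
    not broadly subscribe to its ideas, fall outside both segments and
    get None.
    """
    if not (aware and subscribes):
        return None
    # Aware and subscribing: the third question splits the two segments.
    return "identifier" if identifies else "subscriber"


def segment_shares(counts: dict[str, int]) -> dict[str, float]:
    """Convert segment counts into percentages of the classified sample."""
    total = sum(counts.values())
    return {segment: 100 * n / total for segment, n in counts.items()}


if __name__ == "__main__":
    print(classify_respondent(True, True, True))    # identifier
    print(classify_respondent(True, True, False))   # subscriber
    print(classify_respondent(True, False, False))  # None
    # Counts as reported in the Demographics section.
    shares = segment_shares({"identifier": 2582, "subscriber": 302})
    for segment, pct in shares.items():
        print(f"{segment}: {pct:.1f}%")
```

With the reported counts, the shares come out to roughly 89.5% and 10.5%, which round to the 90% and 10% given in the text.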

Credits

This post was written by Lauren Whetstone.

Thanks to Tee Barnett and David Moss for editing.

The annual EA Survey is a project of Rethink Charity with analysis and commentary from researchers at Rethink Priorities.

Supporting Documents

Other articles in the 2018 EA Survey Series:

I - Community Demographics & Characteristics

II - Distribution & Analysis Methodology

III - How do people get involved in EA?

VIII - Where People First Hear About EA and Higher Levels of Involvement

IX - Geographic Differences in EA

X - Welcomingness: How Welcoming is EA?

XI - How Long Do EAs Stay in EA?

XII - Do EA Survey Takers Keep Their GWWC Pledge?

Prior EA Surveys conducted by Rethink Charity

Thanks for this analysis. If there's time for more, I'd be keen to see something more focused on 'level of contribution' rather than subscriber vs. identifier. I'm not too concerned about whether someone identifies with EA, but rather with how much impact they're able to have. It would be useful to know which sources are most responsible for bringing in the people who contribute the most.

I'm not sure what proxies you have for this in the survey data, but ideally I'm thinking of concrete achievements, like working full-time in EA or donating over $5,000 per year.

You could also look at how dedicated to social impact they say they are combined with things like academic credentials, but these proxies are much more noisy.

One potential source of proxies is how involved someone says they are in EA, but again I don't care about that so much compared to what they're actually contributing.

Great! I was wondering if this might be it.