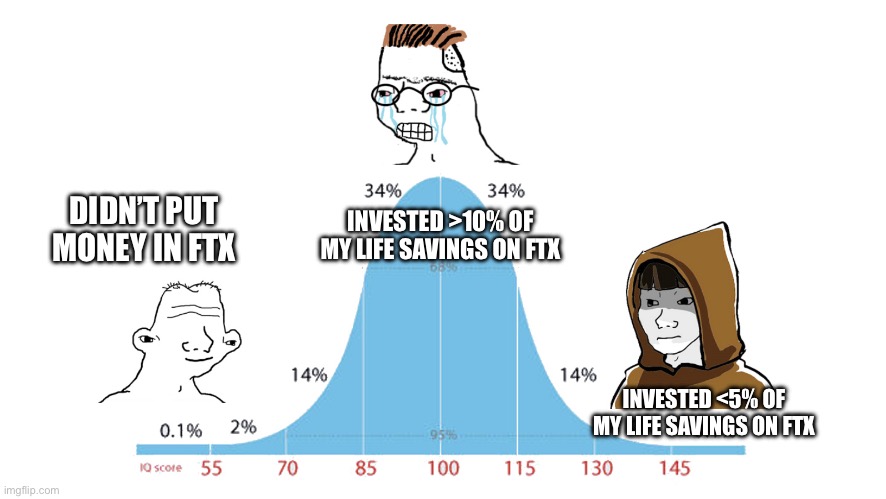

Dylan Matthews has an interesting piece up in Vox, 'How effective altruism let SBF happen'. I feel very conflicted about it, as I think it contains some criticisms that are importantly correct, but then takes them in a direction I think is importantly mistaken. I'll be curious to hear others' thoughts.

Here's what I think is most right about it:

> There’s still plenty we don’t know, but based on what we do know, I don’t think the problem was earning to give, or billionaire money, or longtermism per se. But the problem does lie in the culture of effective altruism... it is deeply immature and myopic, in a way that enabled Bankman-Fried and Ellison, and it desperately needs to grow up. That means emulating the kinds of practices that more mature philanthropic institutions and movements have used for centuries, and becoming much more risk-averse.

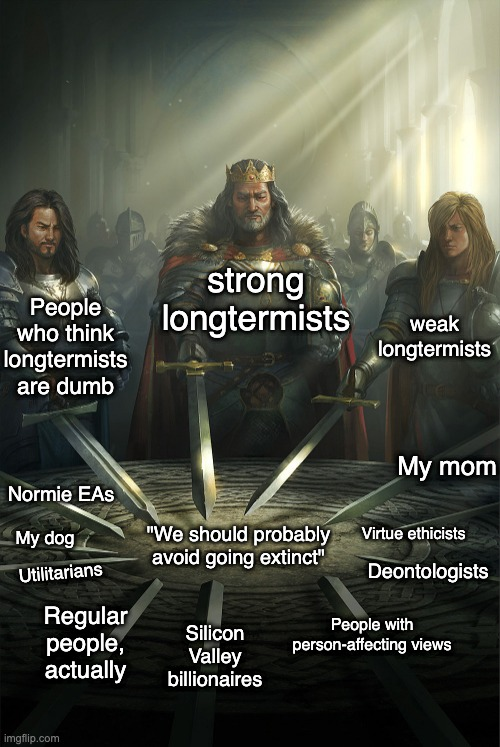

Like many youth-led movements, there's a tendency within EA to be skeptical of established institutions and ways of running things. Such skepticism is healthy in moderation, but taken to extremes can lead to things like FTX's apparent total failure of financial oversight and corporate governance. Installing SBF as a corporate "philosopher-king" turns out not to have been great for FTX, in much the same way that we might predict installing a philosopher-king as absolute dictator would not be great for a country.

I'm obviously very pro-philosophy, and think it offers important practical guidance too, but it's not a substitute for robust institutions. So here is where I feel most conflicted about the article: I agree we should be wary of philosopher-kings, but that's mostly just because we should be wary of "kings" (or immature dictators) in general.

So I'm not thrilled with a framing that says (as Matthews goes on to say) that "the problem is the dominance of philosophy", because I don't think philosophy tells you to install philosopher-kings. Instead, I'd say, the problem is immaturity, and lack of respect for established institutional guard-rails for good governance (i.e., bureaucracy). What EA needs to learn, IMO, is this missing respect for "established" procedures, and a culture of consulting with more senior advisers who understand how institutions work (and why).

It's important to get this diagnosis right, since there's no reason to think that replacing 30 y/o philosophers with equally young anticapitalist activists (say) would do any good here. What's needed is people with more institutional experience (which will often mean significantly older people), and a sensible division of labour between philosophy and policy, ideas and implementation.

There are parts of the article that sort of point in this direction, but then it spins away and doesn't quite articulate the problem correctly. Or so it seems to me. But again, curious to hear others' thoughts.

Distinguish:

(i) philosophically-informed ethical practice, vs

(ii) "erod[ing] the boundary between [fantastical thought experiments] and real-world decision-making"

I think that (i) is straightforwardly good, central to EA, and a key component of what makes EA distinctively good. You seem to be asserting that (ii) is a common problem within EA, and I'm wondering what the evidence for this is. I don't see anyone advocating for implementing the repugnant conclusion in real life, for example.

I think this is conflating distinct ideas. The "risky business" is simply real-world decision-making. There is no sense to the idea that philosophically-informed decision-making is inherently more risky than philosophically ignorant decision-making. [Quite the opposite: it wasn't until philosophers raised the stakes to salience that x-risk started to be taken even close to sufficiently seriously.]

Philosophers think about tricky edge cases which others tend to ignore, but unless you've some evidence that thinking about the edge cases makes us worse at responding to central cases -- and again, I'm still waiting for evidence of this -- then it seems to me that you're inventing associations where none exist in reality.

Of course. The end of the Mill quote is just flagging that traditional social norms are not beyond revision. We may have good grounds for critiquing the anti-gay sexual morality of our ancestors, for example, and so reject such outmoded norms (for everyone, not just ourselves) when we have truly "succeeded in finding better".

Do you take yourself to be disagreeing with me here? (Me: "People shouldn't be kings". You: "systematizing philosophers shouldn't be kings!" You realize that my claim entails yours, right?) I'm finding a lot of this exchange somewhat frustrating, because we seem to be talking past each other, and in a way where you seem to be implicitly attributing to me views or positions that I've already explicitly disavowed.

My sense is that we probably agree about which concrete things are bad, you perhaps have the false belief that I disagree with you on that, but actually the only disagreement is about whether philosophy tells us to do the things we both agree are bad (I say it doesn't). But if that doesn't match your sense of the dialectic, maybe you can clarify what it is that you take us to disagree about?

[12/15: Edited to tone down an intemperate sentence.]