Well-known EA sympathizer Richard Hanania writes about his donation to the Shrimp Welfare Project.

I have some hesitations about supporting Richard Hanania given what I understand of his views and history. But in the same way I would say I support *example economic policy* of *example politician I don't like* if I believed it was genuinely good policy, I think I should also say that I found this article of Richard's quite warming.

This is an interesting datapoint, though... just to be clear, I would not consider the Manhattan project a success on the dimension of wisdom or even positive impact.

They did sure build some powerful technology, and they also sure didn't seem to think much about whether it was good to build that powerful technology (with many of them regretting it later).

I feel like the argument of "the only other community that was working on technology of world-ending proportions, which to be clear, did end up mostly just running full steam ahead at building the world-destroyer, was also very young" is not an amazing argument against criticism of EA/AI-Safety.

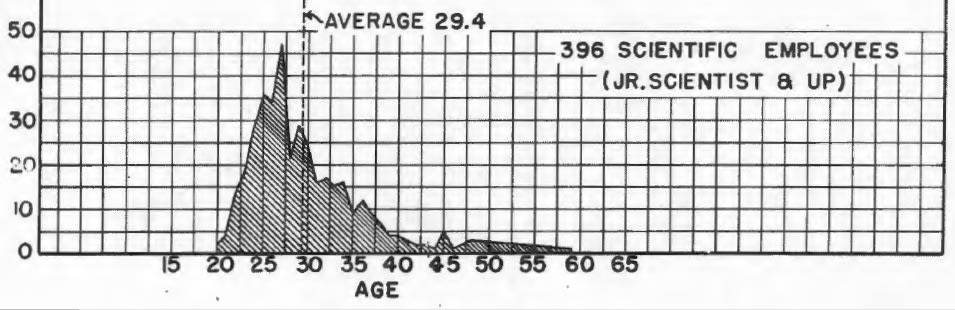

My super rough impression here is that many of the younger people on the project were the grad students of the senior researchers on the project; if so, such an age distribution seems like it would've been really common throughout most of academia.

In my perception, the criticism levelled against EA is different. The version I've seen people argue revolves around EA lacking the hierarchy of experience required to restrain the worst impulses of having a lot of young people in concentration. The Manhattan Project had an unusual amount of intellectual freedom for a military project, sure, but it also did have a pretty defined hierarchy that would've restrained those impulses. Nor do I think EA necessarily lacks leadership, but I think it does lack a permission structure.

And I think that gets to the heart of the actual criticism. An age-based one is, well, blatantly ageist and not worth paying attention to. But that lack of a permission structure might be important!

Another aspect here is that scientists in the 1940s were at a different life stage, and might just have been more generally "mature", than people of a similar age/nationality/social class today (eg most Americans back then in their late twenties probably were married and had multiple children, life expectancy at birth in the 1910s was about 50 so 30 was middle-aged, society overall was not organized as a gerontocracy, etc).

Here's a crazy idea. I haven't run it by any EAIF people yet.

I want to have a program to fund people to write book reviews and post them to the EA Forum or LessWrong. (This idea came out of a conversation with a bunch of people at a retreat; I can’t remember exactly whose idea it was.)

Basic structure:

- Someone picks a book they want to review.

- Optionally, they email me asking how on-topic I think the book is (to reduce the probability of not getting the prize later).

- They write a review, and send it to me.

- If it’s the kind of review I want, I give them $500 in return for them posting the review to EA Forum or LW with a “This post sponsored by the EAIF” banner at the top. (I’d also love to set up an impact purchase thing but that’s probably too complicated).

- If I don’t want to give them the money, they can do whatever with the review.

What books are on topic: Anything of interest to people who want to have a massive altruistic impact on the world. More specifically:

- Things directly related to traditional EA topics

- Things about the world more generally. Eg macrohistory, how do governments work, The Doomsday Machine, history of science (eg Asimov’s “A Short History of Chemistry”)

- I think that b

I worry sometimes that EAs aren’t sufficiently interested in learning facts about the world that aren’t directly related to EA stuff.

I share this concern, and I think a culture with more book reviews is a great way to achieve that (I've been happy to see all of Michael Aird's book summaries for that reason).

CEA briefly considered paying for book reviews (I was asked to write this review as a test of that idea). IIRC, the goal at the time was more about getting more engagement from people on the periphery of EA by creating EA-related content they'd find interesting for other reasons. But book reviews as a push toward levelling up more involved people and changing EA culture is a different angle, and one I like a lot.

One suggestion: I'd want the epistemic spot checks, or something similar, to be mandatory. Many interesting books fail the basic test of "is the author routinely saying true things?", and I think a good truth-oriented book review should check for that.

Yeah, I really like this. SSC currently has a book-review contest running, and maybe LW and the EAF could do something similar? (Probably not a contest, but something that creates a bit of momentum behind the idea of doing this.)

I’ve recently been thinking about medieval alchemy as a metaphor for longtermist EA.

I think there’s a sense in which it was an extremely reasonable choice to study alchemy. The basic hope of alchemy was that by fiddling around in various ways with substances you had, you’d be able to turn them into other things which had various helpful properties. It would be a really big deal if humans were able to do this.

And it seems a priori pretty reasonable to expect that humanity could get way better at manipulating substances, because there was an established history of people figuring out ways that you could do useful things by fiddling around with substances in weird ways, for example metallurgy or glassmaking, and we have lots of examples of materials having different and useful properties. If you had been particularly forward thinking, you might even have noted that it seems plausible that we’ll eventually be able to do the full range of manipulations of materials that life is able to do.

So I think that alchemists deserve a lot of points for spotting a really big and important consideration about the future. (I actually have no idea if any alchemists were thinking about it this way; th...

I think it's bad when people who've been around EA for less than a year sign the GWWC pledge. I care a lot about this.

I would prefer groups to strongly discourage new people from signing it.

I can imagine boycotting groups that encouraged signing the GWWC pledge (though I'd probably first want to post about why I feel so strongly about this, and warn them that I was going to do so).

I regret taking the pledge, and the fact that the EA community didn't discourage me from taking it is by far my biggest complaint about how the EA movement has treated me. (EDIT: TBC, I don't think anyone senior in the movement actively encouraged me to do it, but I am annoyed at them for not actively discouraging it.)

(writing this short post now because I don't have time to write the full post right now)

I'd be pretty interested in you writing this up. I think it could cause some mild changes in the way I treat my salary.

Hi Buck,

I’m very sorry to hear that you regret taking The Pledge and feel that the EA community in 2014 should have actively discouraged you from taking it in the first place.

If you believe it’s better for you and the world that you unpledge then you should feel free to do so. I also strongly endorse this statement from the 2017 post that KevinO quoted:

“The spirit of the Pledge is not to stop you from doing more good, and is not to lead you to ruin. If you find that it’s doing either of these things, you should probably break the Pledge.”

I would very much appreciate hearing further details about why you feel as strongly as you do about actively discouraging other people from taking The Pledge and the way this is done circa 2021.

Last year we collaborated with group leaders and CEA groups team to write a new guide to promoting GWWC within local and university groups (comment using this link). In that guide we tried to be pretty clear about the things to be careful of such as proposing that younger adults be encouraged to consider taking a trial pledge first if that is more appropriate for them (while also respecting their agency as adults) – there are many more people taking this opt...

From "Clarifying the Giving What We Can pledge" in 2017 (https://forum.effectivealtruism.org/posts/drJP6FPQaMt66LFGj/clarifying-the-giving-what-we-can-pledge#How_permanent_is_the_Pledge__)

"""

How permanent is the Pledge?

The Pledge is a promise, or oath, to be made seriously and with every expectation of keeping it. But if someone finds that they can no longer keep the Pledge (for instance due to serious unforeseen circumstances), then they can simply contact us, discuss the matter if need be, and cease to be a member. They can of course rejoin later if they renew their commitment.

Some of us find the analogy of marriage a helpful one: you make a promise with firm intent, you make life plans based on it, you structure things so that it’s difficult to back out of, and you commit your future self to doing something even if you don’t feel like it at the time. But at the same time, there’s a chance that things will change so drastically that you will break this tie.

Breaking the Pledge is not something to be done for reasons of convenience, or simply because you think your life would be better if you had more money. But we believe there are two kinds of situations where it’s acceptab...

I regret taking the pledge

I feel like you should be able to "unpledge" in that case, and further I don't think you should feel shame or face stigma for this. There's a few reasons I think this:

- You're working for an EA org. If you think your org is ~as effective as where you'd donate, it doesn't make sense for them to pay you money that you then donate (unless you felt there was some psychological benefit to this, but clearly you feel the reverse)

- The community has a LOT of money now. I'm not sure what your salary is, but I'd guess it's lower than optimal given community resources, so you donating money to the community pot is probably the reverse of what I'd want.

- I don't want the community to be making people feel psychologically worse, and insofar as it is, I want an easy out for them. Therefore, I want people in your situation in general to unpledge and not feel shame or face stigma. My guess is that if you did so, you'd be sending a signal to others that doing so is acceptable.

- You signed the pledge under a set of assumptions which appear to no longer hold (eg, about how you'd feel about the pledge years out, how much money the community would have, etc)

- I'm generally pro

Here is the relevant version of the pledge, from December 2014:

I recognise that I can use part of my income to do a significant amount of good in the developing world. Since I can live well enough on a smaller income, I pledge that for the rest of my life or until the day I retire, I shall give at least ten percent of what I earn to whichever organisations can most effectively use it to help people in developing countries, now and in the years to come. I make this pledge freely, openly, and sincerely.

A large part of the point of the pledge is to bind your future self in case your future self is less altruistic. If you allow people to break it based on how they feel, that would dramatically undermine the purpose of the pledge. It might well be the case that the pledge is bad because it contains implicit empirical premises that might cease to hold - indeed I argued this at the time! - but that doesn't change the fact that someone did in fact make this commitment. If people want to make a weak statement of intent they are always able to do this - they can just say "yeah I will probably donate for as long as I feel like it". But the pledge is significantly different from this, and atte...

I strongly agree that local groups should encourage people to give for a couple years before taking the GWWC Pledge, and that the Pledge isn't right for everyone (I've been donating 10% since childhood and have never taken the pledge).

When it comes to the 'Further Giving' Pledge, I think it wouldn't be unreasonable to encourage people to get some kind of pre-Pledge counselling or take a pre-Pledge class, to be absolutely certain people have thought through the implications of the commitment they are making.

Edited to add: I think that I phrased this post misleadingly; I meant to complain mostly about low quality criticism of EA rather than eg criticism of comments. Sorry to be so unclear. I suspect most commenters misunderstood me.

I think that EAs, especially on the EA Forum, are too welcoming to low quality criticism [EDIT: of EA]. I feel like an easy way to get lots of upvotes is to make lots of vague critical comments about how EA isn’t intellectually rigorous enough, or inclusive enough, or whatever. This makes me feel less enthusiastic about engaging with the EA Forum, because it makes me feel like everything I’m saying is being read by a jeering crowd who just want excuses to call me a moron.

I’m not sure how to have a forum where people will listen to criticism open mindedly which doesn’t lead to this bias towards low quality criticism.

1. At an object level, I don't think I've noticed the dynamic particularly strongly on the EA Forum (as opposed to eg. social media). I feel like people are generally pretty positive about each other/the EA project (and if anything are less negative than is perhaps warranted sometimes?). There are occasionally low-quality critical posts (that to some degree read to me as status plays) that pop up, but they usually get downvoted fairly quickly.

2. At a meta level, I'm not sure how to get around the problem of having a low bar for criticism in general. I think as an individual it's fairly hard to get good feedback without also being accepting of bad feedback, and likely something similar is true of groups as well?

I feel like an easy way to get lots of upvotes is to make lots of vague critical comments about how EA isn’t intellectually rigorous enough, or inclusive enough, or whatever. This makes me feel less enthusiastic about engaging with the EA Forum, because it makes me feel like everything I’m saying is being read by a jeering crowd who just want excuses to call me a moron.

Could you unpack this a bit? Is it the originating poster who makes you feel that there's a jeering crowd, or the people up-voting the OP which makes you feel the jeers?

As counterbalance...

Writing, and sharing your writing, is how you often come to know your own thoughts. I often recognise the kernel of truth someone is getting at before they've articulated it well, both in written posts and verbally. I'd rather encourage someone for getting at something even if it was lacking, and then guide them to do better. I'd especially prefer to do this given I personally know that it's difficult to make time to perfect a post whilst doing a job and other commitments.

This is even more the case when it's on a topic that hasn't been explored much, such as biases in thin...

I have felt this way as well. I have been a bit unhappy with how many upvotes some of my own critiques, which I consider low quality, have gotten (and think I may have fallen prey to a poor incentive structure there). Over the last couple of months I have tried harder to avoid that by running through a mental checklist before I post anything, but I'm not sure whether I am succeeding. At least I have gotten fewer wildly upvoted comments!

I actually strong upvoted that post, because I wanted to see more engagement with the topic, decision-making under deep uncertainty, since that's a major point in my skepticism of strong longtermism. I just reduced my vote to a regular upvote. It's worth noting that Rohin's comment had more karma than the post itself (even before I reduced my vote).

I pretty much agree with your OP. Regarding that post in particular, I am uncertain about whether it's a good or bad post. It's bad in the sense that its author doesn't seem to have a great grasp of longtermism, and the post basically doesn't move the conversation forward at all. It's good in the sense that it's engaging with an important question, and the author clearly put some effort into it. I don't know how to balance these considerations.

I agree that post is low-quality in some sense (which is why I didn't upvote it), but my impression is that its central flaw is being misinformed, in a way that's fairly easy to identify. I'm more worried about criticism where it's not even clear how much I agree with the criticism or where it's socially costly to argue against the criticism because of the way it has been framed.

It also looks like the post got a fair number of downvotes, and that its karma is way lower than for other posts by the same author or on similar topics. So it actually seems to me the karma system is working well in that case.

(Possibly there is an issue where "has a fair number of downvotes" on the EA Forum corresponds to "has zero karma" in fora with different voting norms/rules, and so the former appears too positive here if one is more used to fora with the latter norm. Conversely I used to be confused why posts on the Alignment Forum that seemed great to me had more votes than karma score.)

I've proposed before that voting shouldn't be anonymous, and that (strong) downvotes should require explanation (either your own comment or a link to someone else's). Maybe strong upvotes should, too?

It seems this could lead to a lot of comments and very rapid ascending through the meta hierarchy! What if I want to strong downvote your strong downvote explanation?

When I was 19, I moved to San Francisco to do a coding bootcamp. I got a bunch better at Ruby programming and also learned a bunch of web technologies (SQL, Rails, JavaScript, etc).

It was a great experience for me, for a bunch of reasons.

- I got a bunch better at programming and web development.

- It was a great learning environment for me. We spent basically all day pair programming, which makes it really easy to stay motivated and engaged. And we had homework and readings in the evenings and weekends. I was living in the office at the time, with a bunch of the other students, and it was super easy for me to spend most of my waking hours programming and learning about web development. I think that it was very healthy for me to practice working really long hours in a supportive environment.

- The basic way the course worked is that every day you’d be given a project with step-by-step instructions, and you’d try to implement the instructions with your partner. I think it was really healthy for me to repeatedly practice the skill of reading the description of a project, then reading the step-by-step breakdown, and then figuring out how to code everything.

- Because we pair programmed ever

Doing lots of good vs getting really rich

Here in the EA community, we’re trying to do lots of good. Recently I’ve been thinking about the similarities and differences between a community focused on doing lots of good and a community focused on getting really rich.

I think this is interesting for a few reasons:

- I found it clarifying to articulate the main differences between how we should behave and how the wealth-seeking community should behave.

- I think that EAs make mistakes that you can notice by thinking about how the wealth-seeking community would behave, and then thinking about whether there’s a good reason for us behaving differently.

----

Here are some things that I think the wealth-seeking community would do.

- There are some types of people who should try to get rich by following some obvious career path that’s a good fit for them. For example, if you’re a not-particularly-entrepreneurial person who won math competitions in high school, it seems pretty plausible that you should work as a quant trader. If you think you’d succeed at being a really high-powered lawyer, maybe you should do that.

- But a lot of people should probably try to become entrepreneurs. In college, they s

Thanks, this is an interesting analogy.

If too few EAs go into more bespoke roles, then one reason could be risk-aversion. Rightly or wrongly, they may view those paths as more insecure and risky (for them personally; though I expect personal and altruistic risk correlate to a fair degree). If so, then one possibility is that EA funders and institutions/orgs should try to make them less risky or otherwise more appealing (there may already be some such projects).

In recent years, EA has put less emphasis on self-sacrifice, arguing that we can't expect people to live on very little. There may be a parallel to risk - that we can't expect people to take on more risk than they're comfortable with, but instead must make the risky paths more appealing.

You seem to be wise and thoughtful, but I don't understand the premise of this question or this belief:

One explanation for why entrepreneurship has high financial returns is information asymmetry/adverse selection: it's hard to tell if someone is a good CEO apart from "does their business do well", so they are forced to have their compensation tied closely to business outcomes (instead of something like "does their manager think they are doing a good job"), which have high variance; as a result of this variance and people being risk-averse, expected returns need to be high in order to compensate these entrepreneurs.

It's not obvious to me that this information asymmetry exists in EA. E.g. I expect "Buck thinks X is a good group leader" correlates better with "X is a good group leader" than "Buck thinks X will be a successful startup" correlates with "X is a successful startup".

But the reasoning [that existing orgs are often poor at rewarding/supporting/fostering new (extraordinary) leadership] seems to apply:

For example, GiveWell was a scrappy, somewhat polemical startup, and the work done there ultimately succeeded and created Open Phil and to a large degree, the present EA movemen...

I know a lot of people through a shared interest in truth-seeking and epistemics. I also know a lot of people through a shared interest in trying to do good in the world.

I think I would have naively expected that the people who care less about the world would be better at having good epistemics. For example, people who care a lot about particular causes might end up getting really mindkilled by politics, or might end up strongly affiliated with groups that have false beliefs as part of their tribal identity.

But I don’t think that this prediction is true: I think that I see a weak positive correlation between how altruistic people are and how good their epistemics seem.

----

I think the main reason for this is that striving for accurate beliefs is unpleasant and unrewarding. In particular, having accurate beliefs involves doing things like trying actively to step outside the current frame you’re using, and looking for ways you might be wrong, and maintaining constant vigilance against disagreeing with people because they’re annoying and stupid.

Altruists often seem to me to do better than people who instrumentally value epistemics; I think this is because valuing epistemics terminally ...

[This is an excerpt from a longer post I'm writing]

Suppose someone’s utility function is

U = f(C) + D

Where U is what they’re optimizing, C is their personal consumption, f is their selfish welfare as a function of consumption (log is a classic choice for f), and D is their amount of donations.

Suppose that they have diminishing utility wrt (“with respect to”) consumption (that is, df(C)/dC is strictly monotonically decreasing). Their marginal utility wrt donations is a constant, and their marginal utility wrt consumption is a decreasing function. There has to be some level of consumption where they are indifferent between donating a marginal dollar and consuming it. Below this level of consumption, they’ll prefer consuming dollars to donating them, and so they will always consume them. And above it, they’ll prefer donating dollars to consuming them, and so will always donate them. And this is why the GWWC pledge asks you to input the C such that df(C)/dC is 1, and you pledge to donate everything above it and nothing below it.
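To make the threshold rule concrete, here is a minimal sketch. It assumes f(C) = k * log(C), where the scale k is a hypothetical parameter that is not in the model above (it just sets the units), so df(C)/dC = k / C equals 1 exactly at C = k. The function names are illustrative only.

```python
import math


def utility(consumption, income, k):
    """U = f(C) + D with the assumed f(C) = k * log(C) and D = income - C."""
    donations = income - consumption
    return k * math.log(consumption) + donations


def optimal_split(income, k):
    """Return (consumption, donations) maximising U subject to C + D = income.

    Marginal utility of consumption is k / C and marginal utility of
    donations is 1, so the indifference point is C = k. Below it every
    dollar is consumed; above it every extra dollar is donated.
    """
    threshold = k  # solves k / C = 1 under the assumed log utility
    consumption = min(income, threshold)
    donations = income - consumption
    return consumption, donations


# Hypothetical numbers: threshold of 30,000 per year.
print(optimal_split(50_000, k=30_000))  # income above the threshold
print(optimal_split(20_000, k=30_000))  # income below the threshold
```

Under these assumptions someone earning 50,000 consumes 30,000 and donates 20,000, while someone earning 20,000 donates nothing. The all-or-nothing shape comes from the model, not from the particular choice of log.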

This is clearly not what happens. Why? I can think of a few reasons.

- The above is what you get if the selfish and altruistic parts of you “negotiate” once, befo

[epistemic status: I'm like 80% sure I'm right here. Will probably post as a main post if no-one points out big holes in this argument, and people seem to think I phrased my points comprehensibly. Feel free to leave comments on the google doc here if that's easier.]

I think a lot of EAs are pretty confused about Shapley values and what they can do for you. In particular Shapley values are basically irrelevant to problems related to coordination between a bunch of people who all have the same values. I want to talk about why.

So Shapley values are a solution to the following problem. You have a bunch of people who can work on a project together, and the project is going to end up making some total amount of profit, and you have to decide how to split the profit between the people who worked on the project. This is just a negotiation problem.

One of the classic examples here is: you have a factory owner and a bunch of people who work in the factory. No money is made by this factory unless there's both a factory there and people who can work in the factory, and some total amount of profit is made by selling all the things that came out of the factory. But how should the profi...
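For readers who want to see the factory example worked through, here is a rough sketch using the standard definition of the Shapley value: each player's marginal contribution averaged over every order in which the players could join. The profit numbers (100 per worker, and nothing without the owner) and all names are hypothetical, chosen only for illustration.

```python
from itertools import permutations


def shapley_values(players, value):
    """Average each player's marginal contribution over all join orders."""
    totals = {player: 0.0 for player in players}
    orderings = list(permutations(players))
    for ordering in orderings:
        coalition = set()
        for player in ordering:
            before = value(frozenset(coalition))
            coalition.add(player)
            after = value(frozenset(coalition))
            totals[player] += after - before
    return {player: total / len(orderings) for player, total in totals.items()}


def factory_profit(coalition):
    """Hypothetical profit: zero without the owner, else 100 per worker."""
    if "owner" not in coalition:
        return 0
    workers = sum(1 for player in coalition if player != "owner")
    return 100 * workers


shares = shapley_values(["owner", "worker_1", "worker_2"], factory_profit)
print(shares)  # {'owner': 100.0, 'worker_1': 50.0, 'worker_2': 50.0}
```

With these made-up numbers the owner gets half of the 200 total and each worker gets a quarter. Enumerating every ordering is only practical for a handful of players, since the number of orderings grows factorially.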

Redwood Research is looking for people to help us find flaws in our injury-detecting model. We'll pay $30/hour for this, for up to 2 hours; after that, if you’ve found interesting stuff, we’ll pay you for more of this work at the same rate. I expect our demand for this to last for maybe a month (though we'll probably need more in future).

If you’re interested, please email adam@rdwrs.com so he can add you to a Slack or Discord channel with other people who are working on this. This might be a fun task for people who like being creative, being tricky, and fi...
