Summary

- Recently, “agency” has become heavily encouraged and socially rewarded within EA, and I am concerned about the unintended consequences.

- The un-nuanced encouragement of “social agency” could be harmful for the community; it encourages social dominance and the violation of social norms. At the extreme, it can feel parasitic, with dominant individuals monopolising resources.

- “Agency” being a high status buzzword incentivises Goodharting, where big and bold actions are encouraged (at the expense of actions that actually achieve an individual's goals).

- I have an impression that, sometimes, people say “agency” and others hear “work more hours; be more ambitious; make bolder moves! Why haven’t you started a project already?!” “Agency” doesn’t entail “hustling hard” – it entails acting intentionally, and these are importantly different. Agency is not a tradeoff against rest; in fact, doing things to high standards and achieving your goals often requires tons of slack.

- My experience and mistakes

- If you feel uncomfortable when violating a social norm or taking a bold move, take this feeling into consideration; there is probably a reason for it.

- It’s not always low cost for others to say no to requests; failing to model this can make others uncomfortable and lead to a relative overconsumption of resources.

- Making authorities upset with you is (emotionally and instrumentally) costly. Don’t ignore this cost when taking big and bold moves.

- Being too willing to ask for help made me worse at solo problem-solving.

Introduction

Recently, “agency” has been heavily encouraged within EA. I often feel like it’s become both a status symbol and an unquestionable good.[1]

I wrote a post on agency a few months ago, which I now think missed important caveats. While I broadly think that the world (and EA) could do with an injection of proactiveness and intentionality, I’m concerned about some of the unintended consequences.

In this post, I lay out some concerns, some unexpected costs of taking agentic actions for me (and mistakes I’ve made), and things I’ve changed my mind on since my last post.

Important caveats

- Most people don’t ask for help enough, and this post is applicable to a minority of people.[2]

- This post is many layers of abstraction away from object-level issues that improve the world. I’m worried about meta-conversations (like this) taking away attention from more important and relevant topics. If you’ve read the summary, I’m not sure how much benefit you’ll get from the rest of the post. Consider not reading it.

“Social Agency” in moderation

I’m going to define “social agency”[3] as a willingness to make bold social moves, leverage social capital, and make trades within your social network to achieve your goals.[4]

My previous post mostly focused on encouraging social agency – “ask people for help,” “network online,” and “don’t be too constrained by social boundaries,” are some key themes. I am now concerned that this lacked nuance.

Social agency is a small part of doing good. Having a network and mentors and friends-in-high-places is not enough to actually do meaningful work in the world. The other part of agency is about Actually Doing Things: the nitty-gritty, engagement with reality that actually makes things happen. Taking heroic responsibility; learning about the technical, object level details of important problems; intentionally building models of the world; noticing gaps in existing strategies; developing a plan to solve bottlenecks; executing those plans and actually trying; developing feedback loops; rapidly iterating your existing plans.

The un-nuanced encouragement of social agency could be harmful for the community; it encourages individuals to violate social norms to achieve their goals, be socially dominant, and not constrain their use of finite resources (e.g. the time and attention of senior people). Taken to its extreme, agency can feel parasitic, with grasping, “agentic” individuals monopolising resources at the expense of others. I worry that operating with high “social agency” pattern-matches onto climbing a status ladder, with little regard for the consequences on others.

Don’t get me wrong – social agency is important and often necessary for achieving your goals. And the flip side is too much “Actually Doing Things,” where a lone wolf fails to benefit from cooperation, mentorship, and feedback.

Goodharting agency

"Agency" simply refers to the ability to achieve your goals, which is always instrumentally useful, but low-resolution versions can be harmful.

I claim that the following traits are being rewarded due to the “agency is high status and always good” meme:

- Willingness to violate social norms to achieve your goals;

- Being a confident go-getter;

- Having a low bar for making requests of others (and assuming that others will say no to your requests if they want to);

- Willingness to be socially dominant and “take up space”;

- Not attempting to limit your consumption of finite resources (e.g. in-demand person’s time; the attention of a teacher in a class);

- A “hustle hard” attitude.

Most of these are helpful in moderation. But in a community where “agency” becomes an increasingly virtuous trait (and “you’re so agentic!!” is highly desired praise), we may see a lot of Goodharting.

I’m concerned that this meme incentivises big and bold actions – that pattern-match to “being agentic” – instead of the actions that actually achieve your goal. [5]

It’s important not to take actions that look agentic for their own sake; use agency as a vehicle to get to the things you actually care about. Agency isn’t a helpful terminal goal, but like productivity or ambition, it is instrumentally useful.

“Agency” ≠ “hustle hard”

I am concerned about the agency meme having Hustle Culture undertones.[6] There seems to be a caricature of agency that: founds a start-up; sends an absurd number of cold emails; does weird and unconventional experiments; writes lots of blog posts; has strong inside views which overrule the outside view.

I prefer a version of agency that advocates for acting intentionally. Agency does not entail working long hours and having an ambitious to-do list, and it is not a tradeoff against rest; in fact, doing things to high standards and achieving your goals often requires tons of slack.

We all (probably) have goals that do not feel like hustling and striving, for example:

- make sure I can get 8-10 hours of sleep a night;

- create room for slack in my life;

- have meaningful and emotionally close relationships;

- call my mum regularly;

- be a caring friend.

Intentionally taking steps to achieve these goals is just as agentic as reaching out to a potential mentor or upskilling at [virtuous technical skill].[7]

Ways that “agency” has been costly for me (and mistakes I’ve made)

Most people are not proactive enough, so the law of equal and opposite advice applies throughout all the following examples.

However, the following might especially apply to people who: have spent time in an environment where agency is socially rewarded; aren’t afraid to “take up space” socially; or have deliberately worked on the skill of becoming more agentic.

Ignoring a gut feeling that I was overstepping a boundary

Sometimes it is “worth it” to violate social norms to achieve your goals. Sometimes it is not.

Last year, I attended the European Summer Program on Rationality (ESPR), where agency was a core theme and was socially encouraged. A common joke was to enthusiastically yell “AGENCY!” whenever anyone did anything weird/bold/unconventional.[8] This was useful and it helped me overcome fears that others would perceive me as obnoxious or annoying if I “took up space” socially – something that was seriously holding me back at the time.

But afterwards, whenever I had a voice of doubt in my head (“you might be overstepping a boundary! You might be being “too much”! You might be making it harder for others to participate in this conversation! Are you sure it’s good for you to ask for this?”), I would crush it with the memory of an instructor yelling “AGENCY!” [9]

Unfortunately, this voice in my head was serving a very real purpose. It was trying to make sure that others around me were comfortable, and that I wasn’t socially domineering.

I am concerned that a takeaway from the agency meme could be: “do big and bold things, even if you’re uncomfortable and scared! Don’t let fear hold you back,” and I want to give a reminder that discomfort is serving a purpose.

Assuming that it is low-cost for others to say “no” to requests

I have become increasingly ask culture over the past year. I have developed a low bar to asking for things from others and a (mostly) thick skin for people saying “no”.

I recently realised that I had been expecting others to know and enforce their own boundaries, and overestimating how easy it is to say “no.” I now think that this is an unrealistic expectation to have.

Someone recently told me that I was “terrible at guess culture.” This was helpful honest feedback, so I polled some friends on whether they agreed.[10] After pondering the evidence for a while (and considering whether I was too neurodiverse to be good at silly social games like guess culture[11]), I realised that I thought it wasn’t my responsibility to participate in guess culture – I implicitly believed it was dumb.

My implicit belief was something like:

"If someone doesn't want to agree to something I ask, then it’s their responsibility to say no! We’re all agents with decision making capacity here! I want to take people at their word – if they agree to something I ask, then I’ll believe them. It would be patronising to second guess them. I don’t want to be tracking subtext and implicit social cues to see whether they actually mean what they say.

Of course, I want to deliberately make it easy for people to say no to me. I only want them to agree if they actually want to. I don’t want to exert pressure on people at all. But I also want to be careful to not micromanage their emotions and wrap them in a blanket – they can enforce their own boundaries."

I still endorse much of the sentiment here.[12] But I now want to incorporate the reality that some people actually just find it hard to say no, and this is fine, and I don’t want to put them in uncomfortable situations. (Very big caveat that it is not unvirtuous to find it hard to say no to things! I do not want that to be the subtext of this section.)

Now, I’m making more of an effort to track how people respond when I make a request (e.g. body language, tone of voice, eye contact). I am also trying to build models of whether I expect a given person to feel comfortable saying no. For people who might find it harder to say no, I will usually: make fewer requests; be very clear that it’s fine if they don’t respond (and I want them not to respond if that’s the right call); give them space (and time) to think about whether they actually want to say yes; and generally approach the conversation with more tact.

Ideally, we would implicitly reward decisions not to respond when it’s the right call (but I’m not really sure what this looks like in practice).

Not regulating my consumption of finite resources (e.g. the time of others)

I now realise that I used to model resources (in EA) as a free market. For example, I thought that:

- I can ask people for their time, because if it’s not worth it, they will say no;

- I can ask lots of questions in a classroom, because the instructor will answer fewer of my questions if they aren’t useful for the whole class;

- I can send emails to whoever I want, because they will ignore them if they don’t want to answer.

I now think this model is flawed, and want to regulate my consumption of resources within the community more, especially with regard to people’s time, which is valuable and finite.

(Again, this will not apply to most people reading this.)

Not cooperating with authority systems

For two months last year, I was studying for an important, difficult university entrance exam. Unfortunately, I had to be in school for seven hours a day, where the working conditions were unideal. There wasn’t a quiet place where I could reliably work uninterrupted; it was costly to take my textbooks to school every day; my school mandated that everyone attend sessions that I didn’t find useful (for example, about apprenticeships and applying to university).

I decided to be absent from school for three weeks before the exam (which, combined with a school break, meant that I didn’t attend school for five weeks straight). At home, I had control over my sleep schedule, working routine, and eating times – which I optimised the hell out of. I also gained the useful data that I enjoyed self-studying.[13]

Unfortunately, my poorly explained absence made my teachers and peers unhappy with me. My mum had told the school that I was unwell, but this was suspected to be false.[14] I believe that my teachers felt betrayed and confused: my actions had been uncooperative. It was perceived as me arrogantly thinking I was above the rules. This burned through a lot of trust and social capital with my teachers – which had been slowly built up over years. One day, I was taken out of class and scolded, which felt really bad and shame-provoking. I also felt more distant from my peers upon returning. The strained relationships with my teachers and peers made school a much more unpleasant and difficult environment for me to be in.[15]

To be clear, I endorse having taken time off school to study. This decision meaningfully increased my exam success (and therefore the likelihood that I could attend my top-choice university). But, there were major costs to not cooperating with my school authorities. For one, I care about the feelings of people around me, and this situation left others feeling undermined, which I wish I could have avoided. For another, life is much more enjoyable when you’re playing cooperatively with other agents in your environment. Life is much more enjoyable when you're regarded as a "nice person" who others like and trust. [16]

I’m honestly not sure what I would have done differently here.[17] My closing point is just that actions have costs – and that big, “agentic,” unconventional actions have bigger costs.

Learned helplessness from leaning too far into social agency

I suspect that leaning heavily into “social agency” can make people worse at “Actually Doing Things agency.” If you’re in a headspace of readily seeking help from others, you might temporarily develop a helplessness around doing things for yourself.

I recently experienced a period of reduced self-sufficiency. When I encountered a problem, my default thought process was often “who can help me?” instead of “how can I solve this problem myself?”

Examples:

- My bike chain fell off my bike and I didn’t really consider putting it back on myself (even though I would be perfectly capable of figuring out how to do that). Instead, some guy on the street helped. [18]

- When I feel sad, my default is to message friends and talk about it with them. This can be a very good default – definitely better than feeling too ashamed to talk to my friends about what’s bothering me. But my friends aren’t always available and it’s important to me that I don’t need to depend on them to feel better. If my friends aren’t available, I often feel stuck in a rut until I can speak to them.

- Occasionally, my Anki flashcards build up and I develop an ugh-field around reviewing my flashcards. When this happens, I tend to avoid it until I am stressed enough to tell a friend about it. Then, the friend usually helps me overcome it (by coworking, or encouragement, or a monetary fine if I don’t do it). Until I tell the friend, I feel helpless and defeated. I then rely on my friend to overcome it.

- I rarely debug anything by myself, instead defaulting to problem-solving with friends.

This is a clear case where the law of equal and opposite advice applies; all of these examples are completely fine in moderation, but I could certainly do with developing my solo-problem-solving skills more than my asking-for-help skills.

Things I’ve changed my mind on since my last post

I think my previous post (perhaps unhelpfully) contributed to the “agency is high status and always good” meme.

I have changed my mind on the following things since then:

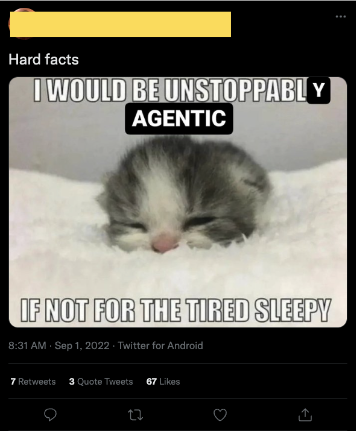

- The title of my previous post on agency was meant to be ironic, but I was glorifying the idea of being “unstoppable,” in a way that I no longer endorse. “Unstoppable” has some of the “parasite-like” undertones.

- In the post, I advocate for having a very low bar for asking for help, but I no longer think that this is universally good. Some people could certainly do with asking for help more. But, it’s possible for dominant individuals to monopolise resources – in a way that is net harmful to the community.

- I said in a comment: "it seems strictly good for EAs to be socially domineering in non-EA contexts. Like… I want young EAs to out-compete non-EAs for internship or opportunities that will help them skill build. (This framing has a bad aesthetic, but I can’t think of a nicer way to say it.)" I now disagree with this; it feels selfish in a way that's hard to imagine is good, deontologically.[19]

- It’s less clear to me that Twitter is a good use of a given person’s time.[20]

I really like Owen’s comments on the post.

“[What] I feel uneasy about is the reinforcement of the message "the things you need are in other people's gift", and the way the post (especially #1) kind of presents "agency" as primarily a social thing (later points do this less but I think it's too late to impact the implicit takeaway).

Sometimes social agency is good, but I'm not sure I want to generically increase it in society, and I'm not sure I want EA associated with it. I'm especially worried about people getting social agency without having something like "groundedness".

... At the very least I think there's something like a "missing mood" of ~sadness here about pushing for EAs to do lots of [being socially dominant]. The attitude I want EAs to be adopting is more like "obviously in an ideal world this wouldn't be rewarded, but in the world we live in it is, and the moral purity of avoiding this generally isn't worth the foregone benefits". If we don't have that sadness I worry that (a) it's more likely that we forget our fundamentals and this becomes part of the culture unthinkingly, and (b) a bunch of conscientious people who intuit the costs of people turning this dial up see the attitudes towards it and decide that EA isn't for them.”

I'm grateful for the conversations that prompted much of this post, and for helpful feedback from friends on a draft.

- ^

"Agency" refers to a range of proactive, ambitious, deliberate, goal-directed traits and habits. See the beginning of this post for a less abstract definition.

- ^

A friend commented that this post could be an info-hazard for people who aren't proactive enough.

- ^

This was coined by Owen Cotton-Barratt in a comment on the original post.

- ^

For example: reaching out to someone you admire online and asking to have a call with them; organising social events; asking for help on a research project from someone more senior; asking your peers for feedback; organising a coworking session with a friend where you both overcome your ugh-fields.

- ^

For example, maybe the best action for a given individual is quiet and simple, like studying hard in their room. But maybe they are incentivised to do other things like organise events and write blog posts, because these actions look agentic.

- ^

This website defines Hustle Culture: "Also known as burnout culture and grind culture, hustle culture refers to the mentality that one must work all day every day in pursuit of their professional goals."

- ^

To be clear, I mean "virtuous" in a tongue in cheek way here.

- ^

Examples: yell to a room to be quiet and listen; give an impromptu lightning talk; jump in a river at night; ask for more food at dinner; seek out instructors and ask them for a 1-1.

- ^

I mainly used this example because I find it funny, and I am not blaming ESPR or any staff member. I am responsible for my own actions.

- ^

My close friends all felt moderately to strongly positively towards my communication style – which is to be expected because of the extremely strong selection bias. Someone who I know less well said that they have sometimes found it hard to say no to me, which was very helpful feedback.

- ^

This is a joke; I don't actually think guess culture is silly. I think it serves a valuable purpose, socially.

- ^

Of treating people as agents and not trying to enforce their boundaries for them.

- ^

Note that I found self-studying much harder when I did it for five months this year.

- ^

Towards the end, she told the school that I was staying home to study for the exam.

- ^

I had been struggling in school before then, but my absence certainly amplified it.

- ^

Obviously, my goal isn't to be regarded as a nice person, it's to be kind and act with care towards the emotional wellbeing of others (and the two are importantly different).

But it does feel bad (for me at least) when others don't think of you as a "nice person," and this is a cost worth tracking, even when taking actions that you overall endorse.

- ^

Maybe I would have discussed it with my teachers beforehand. Maybe I would have told the school that I was taking time off to study, instead of saying I was ill. I don’t know; I’m doubtful that either of those solutions would have led to better outcomes.

- ^

This happened again recently and I put the chain back on my bike by myself! Win.

- ^

Multiplied, this strategy destroys everything and eats up all the resources.

- ^

I am concerned about an implicit assumption that engaging with the EA community on Twitter makes the world better. I also feel uncomfortable that an implicit goal of Twitter is to climb the Twitter status ladder. My rough thoughts/feelings now are probably: “if you’re bottlenecked by your network, consider using Twitter to grow your network. But seriously consider the possibility that Twitter could be a time and attention sink.” The above is conditional on using Twitter for impact and networking. But if you find Twitter fun and exciting (which is great), it might be worth it.

I personally find Twitter too addictive if I use it regularly, and it's better for my mental health if I'm on social media less (but this is just my experience).

I enjoyed reading these updated thoughts!

A benefit of some of the agency discourse, as I tried to articulate in this post, is that it can foster a culture of encouragement. I think EA is pretty cool for giving people the mindset to actually go out and try to improve things; tall poppy syndrome and 'cheems mindsets' are still very much the norm in many places!

I think a norm of encouragement is distinct from installing an individualistic sense of agency in everyone, though. The former should reduce the chances of Goodharting, since you'll ideally be working out your goals iteratively with likeminded people (mitigating the risk of single-mindedly pursuing an underspecified goal). It's great to have conviction — but conviction in everything you do by default could stop you from finding the things you really believe in.